Multi-tenancy is one of the key constructs in Cloud Director. This section covers Provider Virtual Data Centers, Organizations, and Organization Virtual Data Centers.

Provider Virtual Data Center

A provider Virtual Data Center (pVDC) makes vSphere compute, memory, and storage resources available to VMware Cloud Director. The pVDC aggregates the resources within a single vCenter Server by mapping some or all of its vSphere clusters. The pVDC is also associated with datastores (vSAN or otherwise), and storage policies are made available within it. The pVDC can be bound to an NSX network pool or to vSphere VLAN-backed network pools to create networks.

A pVDC network can be created without the network pool backing. In this case, the networks cannot be auto-created. Networks can only be consumed, implying that all network segments and port groups must be pre-created.

Before an organization deploys VMs or creates catalogs, the system administrator must create a provider VDC and the organization VDCs that consume the Provider VDC resources. The relationship of provider VDCs to the organization VDCs they support is an administrative decision. The decision can be based on the scope of your service offerings, the capacity and geographical distribution of your vSphere infrastructure, and similar considerations.

When the deployment of VNFs and CNFs into a converged cluster is supported, an effective approach is to map the vSphere resource pools to a pVDC instead of adding the entire cluster. However, in most deployments, the entire vSphere cluster is added to the pVDC.

Because a pVDC provides an abstraction layer against which resources (compute, RAM, storage) are allocated to tenants, system administrators can create pVDCs that provide different classes of service, based on performance, capacity, and features. Tenants can then be provisioned with organization VDCs that deliver specific classes of service as defined by the pVDC. Before you create a provider VDC, consider the vSphere capabilities that you plan to offer your tenants. Some of these capabilities can be implemented in the primary resource pool of the pVDC.

One example is to create separate pVDCs for User-Plane and Control-Plane workloads. However, pVDCs are more commonly used to represent different resource domains. Different workload types can instead be placed inside the tenant constructs (Organization / Organization VDC), with different resource allocation models used to separate them.

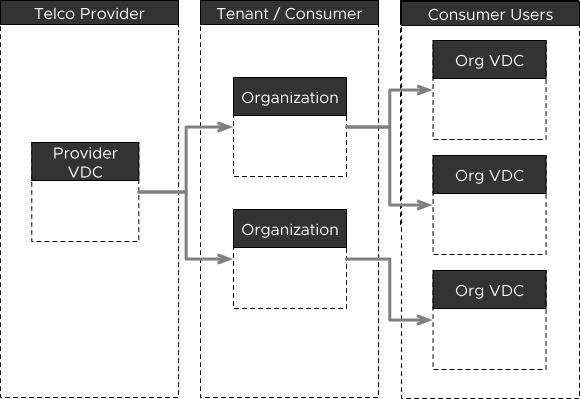

The following diagram illustrates the relationship between Provider VDC, Organization, and Organization VDC.

An Organization can be built using resources from multiple Provider VDCs and is not tied to the Provider VDC construct.

The Organization VDC construct is backed by the resources from a single pVDC, although different OrgVDCs within the same organization can be deployed across different pVDCs.
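The relationship described above can be sketched as a small data model. This is only an illustration: the class names, field names, and sample values (`ProviderVDC`, `OrgVDC`, `Organization`, `pvdc-a`, and so on) are hypothetical and do not correspond to Cloud Director API objects.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ProviderVDC:
    name: str
    vcenter: str  # a pVDC aggregates clusters from a single vCenter Server


@dataclass(frozen=True)
class OrgVDC:
    name: str
    provider_vdc: ProviderVDC  # each OrgVDC is backed by exactly one pVDC


@dataclass
class Organization:
    name: str
    vdcs: list[OrgVDC] = field(default_factory=list)

    def provider_vdcs(self) -> set[str]:
        """Names of every pVDC that backs at least one OrgVDC in this organization."""
        return {vdc.provider_vdc.name for vdc in self.vdcs}


# Hypothetical sample data: one organization spanning two pVDCs.
pvdc_a = ProviderVDC("pvdc-a", "vcenter-01")
pvdc_b = ProviderVDC("pvdc-b", "vcenter-02")
org = Organization("vendor-1", [OrgVDC("cp-vdc", pvdc_a), OrgVDC("up-vdc", pvdc_b)])
print(sorted(org.provider_vdcs()))
```

The single `provider_vdc` field on `OrgVDC` captures the one-pVDC-per-OrgVDC rule, while the organization itself holds no direct reference to any pVDC.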

Organization

The Organization construct is the key component of multi-tenancy in Cloud Director. The Organization is the logical security boundary within a Cloud Director tenant. The Organization is the construct within which all tenant resources reside. These resources include Organizational Virtual Data Centers, Users and IDM / SAML integration points, Role-Based Access Control, catalogs, networking constructs, and so on.

Organization Virtual Data Centers

Within an organization, units of resources are carved out of the Provider VDC (pVDC) in the form of an Organizational Virtual Data Center (OrgVDC). The pVDC creates the required OrgVDC as resource pools within the vCenter Server allocated to the pVDC. The configured resources of the OrgVDC (Compute, Memory, Storage Policies, and Network) have mapping constructs within vSphere to provide the required capabilities.

An OrgVDC allows tenant workloads to be instantiated and powered on.

Allocation Models

To allocate resources to an organization, you must create an OrgVDC. An OrgVDC obtains its resources from a pVDC. The allocation model determines how and when the compute and memory resources of pVDC are committed to the OrgVDC. The OrgVDC maps to resource pools on the underlying vSphere environment.

The allocation model defines the amount of available resources and the commitment of those resources to the VMs contained within the OrgVDC.

The following table describes the vSphere resource distribution settings at the VM or resource pool level based on the OrgVDC allocation model:

| Allocation Model | Resource Pool Setting | Virtual Machine Setting |
|---|---|---|
| Allocation Pool | | Resource guarantee and limits are inherited from the resource pool. |
| Pay-As-You-Go | No resource guarantee or limits at the resource pool level | Resource limits are set at the VM level based on the configuration of the OrgVDC. |
| Reservation Pool | | Resource guarantees and limits are not defined by default. However, they can be configured per workload. |
| Flex | See the following Note. | See the following Note. |

The Flex model can be implemented with compute and placement policies.

CPU and RAM can be configured at both the OrgVDC and VM levels. However, the placement and compute policies provide more flexibility in workload placement and sizing.

The Flex model supports all configurations possible through other allocation models.

Each allocation model can be used for different levels of performance control and management. The suggested uses of each allocation model are as follows:

Flex Model:

With the flex model, you can achieve fine-grained performance control at the workload level. VMware Cloud Director system administrators can manage the elasticity of individual organization VDCs. Cloud providers can have better control over memory overhead in an organization VDC and can enforce a strict burst capacity use for tenants.

Note: The flex allocation model uses policy-based management of workloads.

Allocation Pool Model:

Use the allocation pool model for long-lived, stable workloads, where tenants subscribe to a fixed compute resource consumption and cloud providers can predict and manage the compute resource capacity. This model is optimal for workloads with diverse performance requirements.

With this model, all workloads share the allocated resources from the resource pools of vCenter Server.

Regardless of whether you activate or deactivate elasticity, tenants receive a limited amount of compute resources. Cloud providers can activate or deactivate the elasticity at the system level and the setting applies to all allocation pool organization VDCs. If you use the non-elastic allocation pool, the organization VDC pre-reserves the VDC resource pool and tenants can overcommit vCPUs but cannot overcommit any memory. If you use the elastic pool allocation, the organization VDC does not pre-reserve any compute resources, and capacity can span through multiple clusters.

Note: Cloud providers manage the overcommitment of physical compute resources and tenants cannot overcommit vCPUs and memory.
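The difference between the two allocation pool variants can be made concrete with a short calculation. The sketch below assumes the resource pool reservation formulas from the configuration table later in this section: a non-elastic pool reserves the OrgVDC allocation multiplied by the guarantee up front, while an elastic pool reserves only what the powered-on VMs require. Function names, parameter names, and sample values are hypothetical.

```python
def non_elastic_pool_reservation(cpu_alloc_mhz, cpu_guarantee, ram_alloc_mb, ram_guarantee):
    """Reservation pre-committed on the resource pool, independent of running VMs."""
    return cpu_alloc_mhz * cpu_guarantee, ram_alloc_mb * ram_guarantee


def elastic_pool_reservation(powered_on_vms, vcpu_speed_mhz, cpu_guarantee, ram_guarantee):
    """Reservation that grows with the powered-on VMs (expandable)."""
    cpu = sum(cpu_guarantee * vcpu_speed_mhz * vm["vcpus"] for vm in powered_on_vms)
    ram = sum(ram_guarantee * vm["vram_mb"] for vm in powered_on_vms)
    return cpu, ram


# Hypothetical OrgVDC: 20 GHz CPU and 64 GB RAM allocated, 50% guarantees.
non_elastic = non_elastic_pool_reservation(20000, 0.5, 65536, 0.5)

# Hypothetical powered-on VMs in an elastic pool with a 2 GHz vCPU speed.
vms = [{"vcpus": 4, "vram_mb": 8192}, {"vcpus": 8, "vram_mb": 16384}]
elastic = elastic_pool_reservation(vms, 2000, 0.5, 0.5)

print(non_elastic, elastic)
```

With no VMs powered on, the elastic reservation is zero, whereas the non-elastic reservation stays at the full pre-reserved amount.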

Pay-as-You-Go Model:

Use the pay-as-you-go model when you do not have to allocate compute resources in vCenter Server upfront. Reservation, limit, and shares are applied on every workload that tenants deploy in the VDC.

With this model, every workload in the organization VDC receives the same percentage of the configured compute resources reserved. In VMware Cloud Director, the CPU speed of every vCPU for every workload is the same and you can only define the CPU speed at the organizational VDC level. From a performance perspective, because the reservation settings of individual workloads cannot be changed, every workload receives the same preference.
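As a minimal sketch of why every workload receives the same preference, the following example derives per-VM settings from OrgVDC-level values only. It assumes that the VM CPU limit is the vCPU speed multiplied by the number of vCPUs, that the VM CPU reservation is the CPU guarantee multiplied by that limit, and that the RAM reservation is vRAM multiplied by the RAM guarantee plus overhead. The names `PaygVdcSettings` and `payg_vm_settings` and all sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PaygVdcSettings:
    vcpu_speed_mhz: int   # defined once, at the organization VDC level
    cpu_guarantee: float  # fraction of the CPU limit that is reserved
    ram_guarantee: float  # fraction of vRAM that is reserved


def payg_vm_settings(vdc, vcpus, vram_mb, ram_overhead_mb=0):
    """Derive the per-VM limit and reservation from the OrgVDC settings."""
    cpu_limit = vdc.vcpu_speed_mhz * vcpus
    return {
        "cpu_limit_mhz": cpu_limit,
        "cpu_reservation_mhz": vdc.cpu_guarantee * cpu_limit,
        "ram_limit_mb": vram_mb,
        "ram_reservation_mb": vdc.ram_guarantee * vram_mb + ram_overhead_mb,
    }


vdc = PaygVdcSettings(vcpu_speed_mhz=2000, cpu_guarantee=0.25, ram_guarantee=0.5)
small = payg_vm_settings(vdc, vcpus=2, vram_mb=4096)
large = payg_vm_settings(vdc, vcpus=8, vram_mb=16384)
print(small)
print(large)
```

Both VMs end up with the same reserved fraction of their CPU limit, because the guarantee and vCPU speed come from the OrgVDC rather than from the individual workload.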

This model is optimal for tenants that need workloads with different performance requirements to run within the same organization VDC.

Because of the elasticity, this model is suitable for generic, short-lived workloads that are part of autoscaling applications.

With this model, tenants can match spikes in compute resources demand within an organization VDC.

Reservation Pool Model:

Use this model when you need fine-grained control over the performance of workloads that are running in the organization VDC.

From a cloud provider perspective, this model requires an upfront allocation of all compute resources in vCenter Server.

Note: This model is not elastic.

This model is optimal for workloads that run on hardware dedicated to a tenant. In such cases, tenant users can manage the use and overcommitment of compute resources.

Traditionally, the Pay-As-You-Go model was used for control-plane based network functions, as the resource guarantees are a percentage of the overall requested resources. With the PAYG model, resources can be consumed continually within the pVDC limits.

User-Plane workloads were traditionally deployed into a reservation pool, although this pool does not support elastic resources. Only a single resource pool from the pVDC can be used as an available resource for this pool.

The flex model allows all the configuration settings (as listed in the table below) to be combined in different ways. It can function in the same way as the other models, but with additional flexibility and more granular control of VMs and compute policies.

The following table describes the configuration settings across the different allocation models.

| Setting | Flex Allocation Model | Elastic Allocation Pool Model | Non-Elastic Allocation Pool Model | Pay-As-You-Go Model | Reservation Pool Model |
|---|---|---|---|---|---|
| Elastic (can consume multiple resource pools) | The Elastic setting is based on the organization VDC configuration. | Yes | No | Yes | No |
| vCPU Speed | If a VM CPU limit is not defined in a VM sizing policy, vCPU speed might impact the VM CPU limit within the VDC. | Impacts the number of running vCPUs in the organization VDC. | Not applicable | Impacts the VM CPU limit. | Not applicable |
| Resource Pool CPU Limit | The CPU limit of an organization VDC is defined based on the number of VMs in the resource pool. | Organization VDC CPU allocation | Organization VDC CPU allocation | Unlimited | Organization VDC CPU allocation |
| Resource Pool CPU Reservation | The CPU reservation of an organization VDC is defined based on the number of vCPUs in the resource pool. Organization VDC CPU reservation equals the organization VDC CPU allocation times the CPU guarantee. | Sum, over all powered-on VMs, of the CPU guarantee multiplied by the vCPU speed, multiplied by the number of vCPUs. | Organization VDC CPU allocation multiplied by the CPU guarantee | None, expandable | Organization VDC CPU allocation |
| Resource Pool Memory Limit | The memory limit of an organization VDC is apportioned based on the number of VMs in the resource pool. | Unlimited | Organization VDC RAM allocation | Unlimited | Organization VDC RAM allocation |
| Resource Pool Memory Reservation | The RAM reservation of an organization VDC is apportioned based on the number of VMs in the resource pool. The organization VDC RAM reservation equals the organization VDC RAM allocation times the RAM guarantee. | Sum of RAM guarantee times vRAM of all powered-on VMs in the resource pool. The resource pool RAM reservation is expandable. | Organization VDC RAM allocation times the RAM guarantee | None, expandable | Organization VDC RAM allocation |
| VM CPU Limit | Based on the VM sizing policy of the VM | Unlimited | Unlimited | vCPU speed times the number of vCPUs | Custom |
| VM CPU Reservation | Based on the VM sizing policy of the VM | 0 | 0 | Equals the CPU guarantee times the vCPU speed, times the number of vCPUs | Custom |
| VM RAM Limit | Based on the VM sizing policy of the VM | Unlimited | Unlimited | vRAM | Custom |
| VM RAM Reservation | Based on the VM sizing policy of the VM | 0 | Equals vRAM times RAM guarantee plus RAM overhead | Equals vRAM times RAM guarantee plus RAM overhead | Custom |

| Design Recommendation | Design Justification | Design Implication |
|---|---|---|
| Create at least a single Provider VDC. | Required to create Organizations and corresponding Organization VDCs. | None |
| Create an Organization per vendor. | Ensures isolation between different vendors in the environment. | None |
| Create at least a single Organization VDC per Organization. | Allows the Organization to deploy workloads. | If not sized properly, an Organization VDC can have unused or overcommitted resources. |
| Use the Flex allocation model. | Allows for fine-grained control of resource allocations to each organization VDC. | None |
| Configure storage and runtime leases for production VDCs to not expire. | Production workloads must be run until their end of life and then decommissioned manually. | By not setting any leases, workloads that are no longer being used continue to run, resulting in wasted resources. |

Cloud Director Policies

Administrators can configure Provider VDC compute policies that provide host-affinity rules, allowing VMs to run only on a constrained set of hosts. Such constraints can include the requirements for SR-IOV, GPU-enabled nodes, licensing issues, or other concerns the provider might have to address. These policies align with DRS VM/Host mappings within the vSphere environment.

The OrgVDC compute policies consist of placement and sizing policies. Placement policies allow the OrgVDC to consume one or more provider placement policies. This capability provides the flexibility to assign different network functions, or even different elements within the same network function, to different placement policies. This simplifies the placement of User-Plane and Control-Plane workloads and ensures that it is applied consistently.
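As a rough illustration of how a placement policy constrains where a workload can run, the sketch below models host groups as plain sets of host names and a placement policy as a reference to one such group. All names (`HOST_GROUPS`, `PLACEMENT_POLICIES`, `eligible_hosts`, and the host and policy names) are hypothetical. This is not the Cloud Director API, only a model of the behavior described above.

```python
# Hypothetical host groups, standing in for DRS host groups in vSphere.
HOST_GROUPS = {
    "sriov-hosts": {"esx-01", "esx-02"},
    "general-hosts": {"esx-03", "esx-04", "esx-05"},
}

# Hypothetical provider placement policies, each bound to one host group.
PLACEMENT_POLICIES = {
    "user-plane": "sriov-hosts",
    "control-plane": "general-hosts",
}


def eligible_hosts(policy_name: str, consumed_policies: set[str]) -> set[str]:
    """Return the hosts a VM may run on under the given placement policy.

    A policy can only be used if the OrgVDC consumes it.
    """
    if policy_name not in consumed_policies:
        raise ValueError(f"policy {policy_name!r} is not available in this OrgVDC")
    return HOST_GROUPS[PLACEMENT_POLICIES[policy_name]]


# An OrgVDC that consumes both provider placement policies.
org_vdc_policies = {"user-plane", "control-plane"}
upf_hosts = eligible_hosts("user-plane", org_vdc_policies)
smf_hosts = eligible_hosts("control-plane", org_vdc_policies)
print(sorted(upf_hosts), sorted(smf_hosts))
```

Because each element of a network function can reference a different policy, User-Plane and Control-Plane components of the same function can land on disjoint sets of hosts.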

The sizing policy allows the provider to offer predefined machine sizes in terms of CPU and memory resources. The tenant can then choose a sizing policy on a per-VM basis to suit the deployment model. When defining the policy, the provider can specify the number of vCPUs, the clock speed, the amount of memory, and the reservations and limits.

These policies in conjunction with the Flex allocation model ensure pre-determined placement and sizing for tenant workloads.

A single OrgVDC compute policy (a placement policy or a sizing policy) can be applied as the organization default through the UI.

Without a default sizing policy, reservations and CPU speeds are configured as per the OrgVDC default configuration.
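The fallback behavior can be sketched as follows. The example assumes that a sizing policy carries its own vCPU count, vCPU speed, and CPU reservation guarantee, and that without a policy the OrgVDC defaults are used instead. In both cases the CPU limit is assumed to be the vCPU speed multiplied by the number of vCPUs, which holds only when the policy defines no explicit CPU limit. All class, function, and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SizingPolicy:
    name: str
    vcpus: int
    vcpu_speed_mhz: int
    cpu_guarantee: float  # fraction of the CPU limit that is reserved


@dataclass(frozen=True)
class OrgVdcDefaults:
    vcpu_speed_mhz: int
    cpu_guarantee: float


def effective_cpu(vdc: OrgVdcDefaults, policy: Optional[SizingPolicy] = None,
                  requested_vcpus: int = 1) -> dict:
    """Resolve CPU settings from the sizing policy, else from the OrgVDC defaults."""
    if policy is not None:
        vcpus, speed, guarantee = policy.vcpus, policy.vcpu_speed_mhz, policy.cpu_guarantee
    else:
        vcpus, speed, guarantee = requested_vcpus, vdc.vcpu_speed_mhz, vdc.cpu_guarantee
    limit = vcpus * speed
    return {"vcpus": vcpus, "cpu_limit_mhz": limit, "cpu_reservation_mhz": guarantee * limit}


vdc_defaults = OrgVdcDefaults(vcpu_speed_mhz=2000, cpu_guarantee=0.25)
large = SizingPolicy("large", vcpus=8, vcpu_speed_mhz=2500, cpu_guarantee=1.0)

with_policy = effective_cpu(vdc_defaults, policy=large)
without_policy = effective_cpu(vdc_defaults, requested_vcpus=4)
print(with_policy)
print(without_policy)
```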