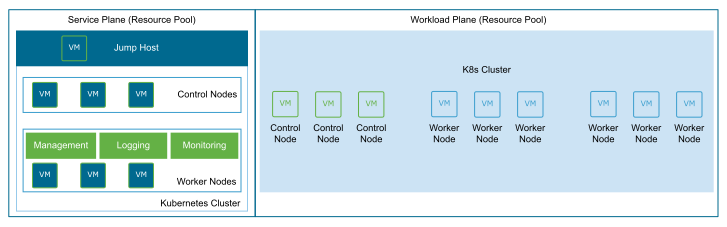

The Service and Workload Planes are the core components of the Cloud Native Logical architecture. The service plane provides management, monitoring, and logging capabilities, while the workload plane hosts the Tanzu Kubernetes cluster required to support Telco CNF workloads.

Service Plane

The service plane is a new administrative domain for the container solution architecture. The design objectives of the service plane are:

Provides isolation for Kubernetes operations.

Provides application-specific day-2 visibility into the Kubernetes cloud infrastructure.

Provides shared services to one or more Kubernetes workload clusters.

| Design Recommendation | Design Justification | Design Implication |
|---|---|---|
| Use a dedicated Resource Pool to separate the service plane Kubernetes cluster from the workload plane clusters. | | |
| For a single pane of glass monitoring of Telco workloads running in the workload clusters, deploy Monitoring and Logging services as required by the CNFs in the Service Plane cluster. | | |
| Deploy Fluentd in the Service Kubernetes Cluster. Use Fluentd to forward logs to vRealize Log Insight for centralized logging of all infrastructure and Kubernetes components. | | |
| Assign a dedicated virtual network to each Service plane for management. | | The security policy must be set so that only required communication between service plane management and management network is allowed. |
| Reserve a /24 subnet for the Nodes IP Block for each Service Plane for management. | | Additional IP address management overhead |

Workload Plane

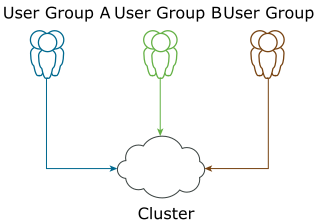

Shared Cluster Model: A shared cluster model reduces the operational overhead of managing the Tanzu Kubernetes clusters and Kubernetes extension services that form the Cloud Native Platform. Because clusters are shared across a larger group of users, Kubernetes namespaces are used to separate the workloads. Namespace-based separation is a 'soft' multi-tenancy model: several cluster components, such as cluster DNS, are shared across all users within a cluster, regardless of the namespace.

When using Kubernetes namespaces to separate the workloads, K8s cluster admins must apply the correct RBAC policy at the namespace level so that only authorized users can access resources within a namespace.
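
As an illustration of a namespace-scoped RBAC policy, the following Python sketch builds a Kubernetes `Role` and `RoleBinding` that grant one group access to resources in a single namespace. The namespace `cnf-a`, the group `cnf-a-operators`, the object names, and the chosen resources and verbs are hypothetical and are not taken from this design; adjust them to the CNF requirements.

```python
import json


def namespace_rbac(namespace, group):
    """Build a Role and a RoleBinding that scope one group to one namespace.

    All names used here are illustrative assumptions, not values from the design.
    """
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "cnf-operator", "namespace": namespace},
        "rules": [
            {   # core API group ("") resources
                "apiGroups": [""],
                "resources": ["pods", "services", "configmaps"],
                "verbs": ["get", "list", "watch", "create", "update", "delete"],
            },
            {   # workload controllers in the "apps" API group
                "apiGroups": ["apps"],
                "resources": ["deployments", "statefulsets"],
                "verbs": ["get", "list", "watch", "create", "update", "delete"],
            },
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "cnf-operator-binding", "namespace": namespace},
        "subjects": [
            {"kind": "Group", "name": group, "apiGroup": "rbac.authorization.k8s.io"}
        ],
        # roleRef must point at the Role defined above, in the same namespace.
        "roleRef": {
            "kind": "Role",
            "name": role["metadata"]["name"],
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }
    return [role, binding]


if __name__ == "__main__":
    # Print JSON manifests; kubectl accepts JSON as well as YAML.
    for manifest in namespace_rbac("cnf-a", "cnf-a-operators"):
        print(json.dumps(manifest, indent=2))
```

Because a `Role` and its `RoleBinding` are namespaced objects, each additional namespace or user group needs its own pair, which is the source of the growing operational complexity in a shared cluster.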

As the number of users, groups, and namespaces increases, the operational complexity of managing RBAC policies at a namespace level increases.

A shared cluster works effectively for predictable workload types that do not require strong isolation. If a single Kubernetes cluster must be used to host workloads with different operating characteristics such as various hardware or node configurations, advanced Kubernetes placement techniques such as taints and tolerations are required to ensure proper workload placement. Taints and tolerations work together to ensure that pods are not scheduled onto inappropriate nodes. When taints are applied to a set of worker nodes, the scheduler does not assign to those nodes any pod that cannot tolerate the taints. For implementation details, see the Kubernetes documentation.
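
The matching rule between taints and tolerations can be sketched in a few lines of Python. This is a simplified model of the documented Kubernetes behavior, not the scheduler's actual code, and the taint `workload=dataplane:NoSchedule` is a hypothetical example rather than a value from this design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule", or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # an empty key with operator "Exists" matches any key
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # an empty effect matches every effect


def tolerates(toleration, taint):
    """Return True if this toleration matches this taint."""
    if toleration.effect and toleration.effect != taint.effect:
        return False
    if toleration.operator == "Exists":
        return toleration.key == "" or toleration.key == taint.key
    return toleration.key == taint.key and toleration.value == taint.value


def schedulable(node_taints, pod_tolerations):
    """Return True if no hard taint on the node is left untolerated.

    PreferNoSchedule is a soft preference, so it never blocks placement here.
    """
    for taint in node_taints:
        if taint.effect == "PreferNoSchedule":
            continue
        if not any(tolerates(t, taint) for t in pod_tolerations):
            return False
    return True


if __name__ == "__main__":
    dataplane_node = [Taint("workload", "dataplane", "NoSchedule")]
    dataplane_pod = [Toleration("workload", "Equal", "dataplane", "NoSchedule")]
    print(schedulable(dataplane_node, dataplane_pod))  # pod tolerates the taint
    print(schedulable(dataplane_node, []))             # pod has no tolerations
```

Note that a toleration only permits placement on a tainted node. To steer a pod toward specific nodes, it is typically combined with a node selector or node affinity.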

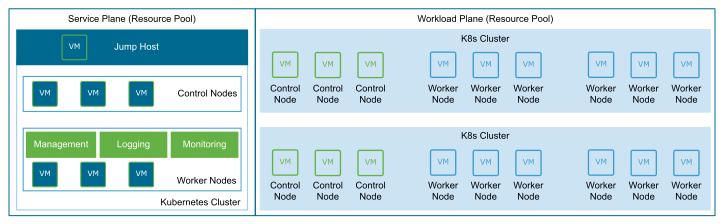

The following figure illustrates the shared cluster model:

The shared cluster model leads to large cluster sizes. Large cluster sizes shared across many user groups impose restrictions on the operational life cycles of the shared cluster. The restrictions include shared IaaS resources, upgrade cycles, security patching, and so on.

Kubernetes deployments must be built based on the Service-Level Objectives of the Telco workload. For maximum isolation and ease of performance management, Kubernetes tenancy must be at the cluster level. Run multiple Kubernetes clusters and distribute the Kubernetes clusters across multiple vSphere resource pools for maximum availability.

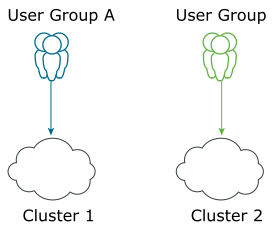

Dedicated Cluster Model: A dedicated cluster model provides better isolation. Instead of authorizing a large number of users and using RBAC policies to isolate resources at a namespace level, a Kubernetes Admin can customize the Tanzu Kubernetes cluster configuration to meet the requirements of the CNF application. Instead of one giant heterogeneous cluster, a Kubernetes admin can deploy dedicated clusters based on the workload function and authorize only a small subset of users based on job function, while denying access to everyone else. Because cluster access is restricted, the Kubernetes admin can provide more freedom to authorized cluster users. Instead of complex RBAC policies to limit the scope of what each user can access, the Kubernetes admin can delegate some of the control to the Kubernetes user while maintaining full cluster security compliance.

The following figure illustrates the dedicated cluster model:

The dedicated Kubernetes cluster approach can lead to smaller cluster sizes. Smaller clusters have a smaller "blast radius," which makes upgrades and version compatibility easier to manage.

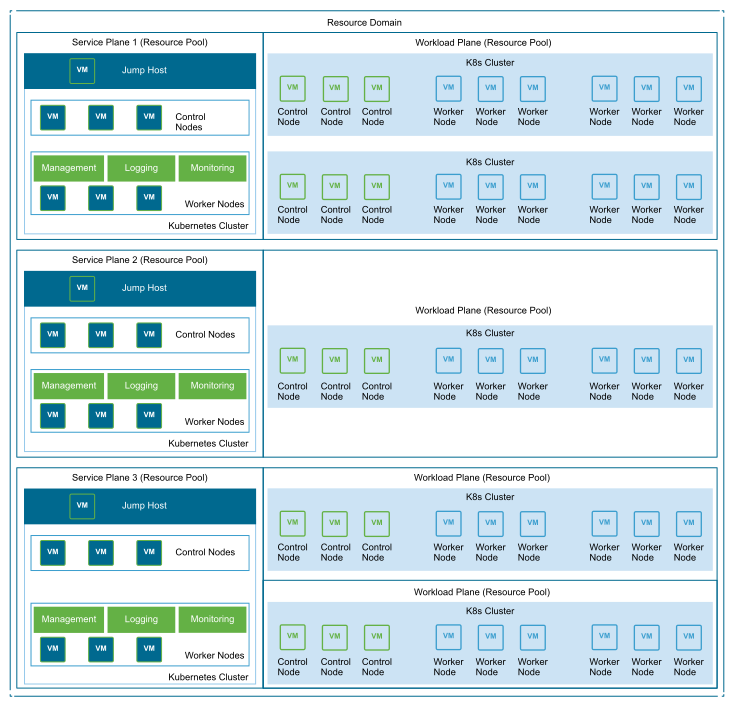

Three approaches to map the workload cluster models to the Service and Workload Plane design are as follows:

A single service plane to manage a single workload plane Kubernetes cluster. This deployment is most useful in a shared cluster model.

Figure 4. Service Plane per Cluster

A single service plane to manage multiple workload plane Kubernetes clusters in a single resource pool. This model is most useful for applications that share similar performance characteristics but require Kubernetes cluster-level isolation.

Figure 5. Service Plane per Administrative Domain Single Resource Pool

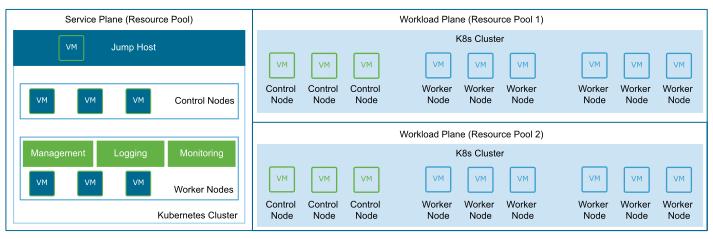

A single service plane to manage multiple workload plane Kubernetes clusters, where each Kubernetes cluster is part of a dedicated resource pool. This model is most useful for applications that require Kubernetes cluster-level isolation and have drastically different performance characteristics.

Figure 6. Service Plane per Administrative Domain Multiple Resource Pools
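
To make the distinction between the three approaches concrete, the following Python sketch classifies the workload clusters managed by one service plane according to how they map to resource pools. The function, the cluster names, and the pool names are hypothetical helpers for illustration only.

```python
from collections import defaultdict


def classify_mapping(cluster_to_pool):
    """Name the mapping approach for the clusters behind one service plane.

    cluster_to_pool maps a workload cluster name to its resource pool name.
    """
    if not cluster_to_pool:
        raise ValueError("a service plane must manage at least one workload cluster")

    # Group clusters by the resource pool that hosts them.
    pools = defaultdict(list)
    for cluster, pool in cluster_to_pool.items():
        pools[pool].append(cluster)

    if len(cluster_to_pool) == 1:
        return "Service Plane per Cluster"
    if len(pools) == 1:
        return "Service Plane per Administrative Domain Single Resource Pool"
    if all(len(clusters) == 1 for clusters in pools.values()):
        return "Service Plane per Administrative Domain Multiple Resource Pools"
    # Some pools are shared and others are dedicated.
    return "Mixed: shared and dedicated resource pools"


if __name__ == "__main__":
    print(classify_mapping({"shared-cluster": "pool-1"}))
    print(classify_mapping({"cp-a": "pool-1", "cp-b": "pool-1"}))
    print(classify_mapping({"upf-a": "pool-1", "upf-b": "pool-2"}))
```

The mixed result is not one of the three approaches listed above. It can arise when some clusters share a resource pool while others each get a dedicated pool.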

| Design Recommendation | Design Justification | Design Implication |
|---|---|---|
| Use the resource pool to separate the workload plane clusters from the Service plane cluster. | | |
| Use 1:1 Kubernetes cluster per Workload Plane resource pool for data plane and signal processing workloads. | A dedicated resource pool per Kubernetes cluster provides better isolation and a more deterministic SLA. | |
| Use N:1 Kubernetes cluster per Workload Plane resource pool for control plane workloads. | | During contention, workloads in a resource pool might lack resources and experience poor performance. |
| Deploy Avi Kubernetes Operator (AKO) in the workload cluster to expose any Kubernetes service to external networks using the NSX Advanced Load Balancer. | | None |
| Deploy Fluentd in the Workload Kubernetes Cluster. Use Fluentd to forward all logs to the vRealize stack deployed in the management cluster. | | |
| Assign a dedicated virtual network to each workload plane for management. | | The security policy must be set so that only required communication between workload plane management and the management network for vSphere and NSX-T is allowed. |
| Reserve a /24 subnet for the Nodes IP Block for each Kubernetes cluster in the workload plane. | | Additional IP address management overhead |
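
The IP address management overhead of reserving one /24 Nodes IP Block per plane or cluster can be estimated with Python's standard `ipaddress` module. The sketch below carves consecutive /24 blocks out of a larger range; the supernet `10.20.0.0/22` and the plane and cluster names are assumptions chosen for illustration, not addresses from this design.

```python
import ipaddress


def allocate_node_blocks(supernet, names):
    """Assign one /24 Nodes IP Block per name, in order, from the supernet."""
    blocks = ipaddress.ip_network(supernet).subnets(new_prefix=24)
    allocation = {}
    for name in names:
        try:
            allocation[name] = next(blocks)
        except StopIteration:
            raise ValueError(f"{supernet} has no free /24 block left for {name}") from None
    return allocation


if __name__ == "__main__":
    planes = ["service-plane", "workload-cluster-a", "workload-cluster-b"]
    for name, block in allocate_node_blocks("10.20.0.0/22", planes).items():
        # Subtract the network and broadcast addresses to get usable node IPs.
        print(f"{name}: {block} ({block.num_addresses - 2} usable addresses)")
```

Each /24 block provides 254 usable addresses, so a /22 range as assumed here covers at most four planes or clusters before another range must be reserved.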