Telco Cloud Automation (TCA) consists of TCA Manager, TCA-Control Plane, NodeConfig Operator, container registry, and CNF designer. This section outlines the design best practices for these components.

TCA-Control Plane

Telco Cloud Automation distributes the management of VIMs and CaaS managers across a set of TCA-Control Plane (TCA-CP) appliances. TCA-CP performs multi-VIM/CaaS registration, synchronizes multi-cloud inventories, and collects fault and performance data from the infrastructure up to the network functions.

TCA Manager: TCA Manager connects with TCA-CP nodes through site pairing to communicate with the VIM. It posts workflows to the TCA-CP. TCA Manager relies on the inventory information captured by TCA-CP to deploy and scale Tanzu Kubernetes clusters.

Tanzu Kubernetes Cluster: The Tanzu Kubernetes cluster bootstrapping environment is completely abstracted into the TCA-CP node. All the binaries and cluster plans required to bootstrap the Kubernetes clusters are pre-bundled into the TCA-CP appliance. After the base OS image templates are imported into the respective vCenter Servers, Tanzu Kubernetes Cluster admins can log in to the TCA Manager and deploy Kubernetes clusters directly from the TCA Manager console.

Workflow Orchestration: Through its integration with vRealize Orchestrator (vRO), Telco Cloud Automation provides a distributed, easily maintainable workflow orchestration engine. vRealize Orchestrator workflows are intended to run operations that TCA Manager does not support natively. Using vRealize Orchestrator, you can create custom workflows, or use an existing workflow as a template to design a specific workflow to run on your network function or network service. For example, you can create a workflow to assist CNF deployment or to simplify Day-2 lifecycle management of a CNF. vRealize Orchestrator is registered with TCA-CP.

Resource Tagging: Telco Cloud Automation supports resource tagging. Tags are custom-defined metadata that can be associated with any component. They can be based on hardware attributes or business logic. Tags simplify the grouping of resources or components.
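To make the grouping idea concrete, here is a minimal Python sketch that models tags as sets of strings attached to inventory items. The `Resource` class, the helper functions, and the tag names (`sriov`, `numa-aligned`, `edge-site`, and so on) are hypothetical and do not represent the TCA tagging API; the sketch only illustrates how tag-based grouping and selection behave when tags mix hardware attributes with business logic.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Resource:
    """Hypothetical inventory item (host, cluster, datastore, ...) with tags."""
    name: str
    kind: str
    tags: frozenset = field(default_factory=frozenset)


def group_by_tag(resources):
    """Map each tag to the sorted names of the resources that carry it."""
    groups = defaultdict(list)
    for res in resources:
        for tag in res.tags:
            groups[tag].append(res.name)
    return {tag: sorted(names) for tag, names in groups.items()}


def select(resources, required_tags):
    """Return sorted names of resources carrying every tag in required_tags."""
    required = frozenset(required_tags)
    return sorted(r.name for r in resources if required <= r.tags)


# Illustrative inventory: "sriov"/"numa-aligned" are hardware attributes,
# "edge-site"/"core-site" are business-logic tags (all names hypothetical).
inventory = [
    Resource("esxi-01", "host", frozenset({"sriov", "numa-aligned", "edge-site"})),
    Resource("esxi-02", "host", frozenset({"sriov", "edge-site"})),
    Resource("esxi-03", "host", frozenset({"general", "core-site"})),
]

print(group_by_tag(inventory)["edge-site"])         # ['esxi-01', 'esxi-02']
print(select(inventory, {"sriov", "numa-aligned"}))  # ['esxi-01']
```

Selecting with an empty tag set returns every resource, so each additional required tag can only narrow the result.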

| Design Recommendation | Design Justification | Design Implication |
|---|---|---|
| Integrate the management vCenter Server PSC with LDAP/AD for TCA user onboarding. | | Requires additional components to manage in the management cluster. |
| Deploy a single instance of the TCA Manager to manage all TCA-CP endpoints. | | None |
| Register the TCA Manager with the management vCenter Server. | The management vCenter Server is used for TCA user onboarding. | None |
| Deploy a dedicated TCA-CP node to control the Telco Cloud Platform (TCP) management cluster. | Required for the deployment of the Tanzu Kubernetes management cluster. | TCA-CP requires additional CPU and memory in the management cluster. |
| Deploy a TCA-CP node for each vCenter Server instance. | Each TCA-CP node manages a single vCenter Server. Multiple vCenter Servers in one location require multiple TCA-CP nodes. | Each time a new vCenter Server is deployed, a new TCA-CP node is required. To minimize recovery time in case of TCA-CP failure, each TCA-CP node must be backed up independently, along with the TCA Manager. |
| Deploy the TCA Manager and TCA-CP on a shared LAN segment used by the VIM for management communication. | | None |
| Deploy a single vRealize Orchestrator (vRO) solution for use in the design. | Reduces the number of vRO nodes to deploy and manage. | Requires vRO to be highly available if multiple TCA-CP endpoints depend on a shared deployment. |
| Deploy a highly available, three-node vRO cluster. | A highly available cluster ensures that vRO remains available to all TCA-CP endpoints. | vRO redundancy requires an external load balancer. |
| Schedule TCA Manager and TCA-CP backups at around the same time as SDDC infrastructure components to minimize database synchronization issues upon restore. Note: Your backup frequency and schedule might vary based on your business needs and operational procedures. | | Backups are scheduled manually. The TCA admin must log in to each component and configure a backup schedule and frequency. |
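The "one TCA-CP node per vCenter Server" recommendation lends itself to a simple planning check. The following Python sketch is a hypothetical helper, not a TCA feature: it assumes the planned layout is captured as a dict from TCA-CP node name to the single vCenter Server that node manages (the dict shape itself enforces "each TCA-CP node manages a single vCenter Server"), and it reports vCenter Servers left without a TCA-CP node as well as nodes pointing at unknown vCenter Servers. All names are illustrative.

```python
def check_tca_cp_layout(vcenters, tca_cp_nodes):
    """Check a planned layout against the TCA-CP design rules.

    vcenters:     iterable of vCenter Server names in the design.
    tca_cp_nodes: dict of TCA-CP node name -> the one vCenter Server it manages.
    Returns a sorted list of problems; an empty list means the layout passes.
    """
    vcenter_set = set(vcenters)
    covered = set(tca_cp_nodes.values())
    # Every vCenter Server needs at least one TCA-CP node.
    problems = [f"{vc}: no TCA-CP node assigned" for vc in vcenter_set - covered]
    # Every TCA-CP node must point at a vCenter Server that exists in the design.
    problems += [
        f"{node}: manages unknown vCenter Server {vc}"
        for node, vc in tca_cp_nodes.items()
        if vc not in vcenter_set
    ]
    return sorted(problems)


vcenters = ["vc-mgmt", "vc-edge-01", "vc-edge-02"]
nodes = {"tca-cp-mgmt": "vc-mgmt", "tca-cp-edge-01": "vc-edge-01"}
print(check_tca_cp_layout(vcenters, nodes))
# ['vc-edge-02: no TCA-CP node assigned']
```

Adding a third TCA-CP node for `vc-edge-02` makes the check return an empty list, which matches the implication that every new vCenter Server requires a new TCA-CP node.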

CaaS Infrastructure

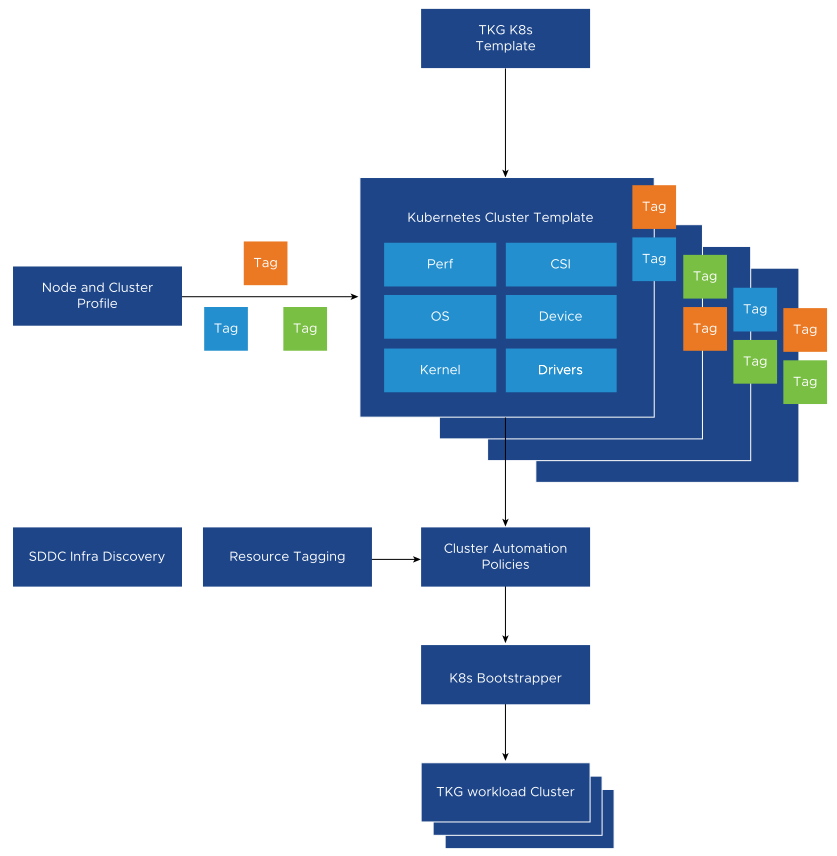

Tanzu Kubernetes Cluster automation starts with Kubernetes templates that capture the deployment configuration for a Kubernetes cluster. Cluster templates are blueprints for Kubernetes cluster deployments and are intended to minimize repetitive tasks. They enforce best practices and define guardrails for infrastructure management.

A policy engine honors the SLA required for each template profile by mapping Telco Cloud Infrastructure (TCI) resources to the cluster templates. Policies can be defined based on the tags assigned to the underlying VIM, or based on roles and role permission bindings. As a result, the appropriate VIM resources are exposed to each set of users, automating the process from the SDDC through Kubernetes cluster creation.
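A minimal Python sketch of this idea, assuming a policy combines two filters: a role check and a tag match. The `Policy` class, `exposed_resources`, and every role, tag, and cluster name are hypothetical; the actual TCA policy engine is not documented here. A user sees a VIM resource for a template only when they hold an allowed role and the resource carries all the infrastructure tags the template requires.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy binding a cluster template to infra tags and roles."""
    template: str
    required_infra_tags: frozenset
    allowed_roles: frozenset


def exposed_resources(policy, user_roles, vim_resources):
    """Return the VIM resources a user may target with the policy's template.

    vim_resources maps a resource name to its set of infrastructure tags.
    A resource is exposed only if the user holds at least one allowed role
    and the resource carries every tag the template requires.
    """
    if not policy.allowed_roles & set(user_roles):
        return []
    return sorted(
        name
        for name, tags in vim_resources.items()
        if policy.required_infra_tags <= set(tags)
    )


upf_policy = Policy(
    template="upf-workload",
    required_infra_tags=frozenset({"sriov", "ptp"}),
    allowed_roles=frozenset({"cnf-admin"}),
)
vim = {"wld-cluster-a": {"sriov", "ptp"}, "wld-cluster-b": {"sriov"}}

print(exposed_resources(upf_policy, ["cnf-admin"], vim))  # ['wld-cluster-a']
print(exposed_resources(upf_policy, ["viewer"], vim))     # []
```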

TCA CaaS Infrastructure automation consists of the following components:

TCA Kubernetes Cluster Template Designer: The TCA admin uses the Tanzu Kubernetes Cluster template designer to create Kubernetes cluster templates that help deploy Kubernetes clusters. The cluster template defines the composition of the Kubernetes cluster. A typical Kubernetes cluster template includes attributes such as the number and size of control plane and worker nodes, the Kubernetes CNI, the Kubernetes storage interface, and the Helm version. The template designer does not capture CNF-specific Kubernetes attributes; instead, it leverages the VMware NodeConfig Operator through late binding. For late binding details, see TCA VM and Node Config Automation Design.
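As an illustration only, the attributes listed above can be modeled as a small Python data structure. This is a hypothetical shape, not the TCA template schema; the field names, pool sizes, CNI/CSI names, and Helm version are example values. The sketch also shows why sizing matters: the template alone determines the aggregate capacity a cluster will request from the infrastructure.

```python
from dataclasses import dataclass, field


@dataclass
class NodePool:
    """One group of identically sized nodes (hypothetical model)."""
    name: str
    count: int
    vcpus: int
    memory_gb: int
    labels: dict = field(default_factory=dict)


@dataclass
class ClusterTemplate:
    """Hypothetical cluster template holding only cluster-level attributes.

    CNF-specific node tuning is intentionally absent: per the design, it is
    applied later by the NodeConfig Operator (late binding).
    """
    name: str
    control_plane: NodePool
    worker_pools: list
    cni: list
    csi: list
    helm_version: str

    def all_pools(self):
        return [self.control_plane, *self.worker_pools]

    def total_nodes(self):
        return sum(p.count for p in self.all_pools())

    def total_vcpus(self):
        return sum(p.count * p.vcpus for p in self.all_pools())

    def total_memory_gb(self):
        return sum(p.count * p.memory_gb for p in self.all_pools())


template = ClusterTemplate(
    name="workload-small",
    control_plane=NodePool("control-plane", count=3, vcpus=4, memory_gb=16),
    worker_pools=[
        NodePool("np-general", count=4, vcpus=8, memory_gb=32,
                 labels={"profile": "general"}),
    ],
    cni=["antrea", "multus"],          # illustrative values
    csi=["vsphere-csi", "nfs_client"],  # illustrative values
    helm_version="3.8.0",              # illustrative value
)
print(template.total_nodes(), template.total_vcpus(), template.total_memory_gb())
# 7 44 176
```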

SDDC Profile and Inventory Discovery: The inventory management component of Telco Cloud Automation discovers the underlying infrastructure for each VIM associated with a TCA-CP appliance. The TCA inventory service discovers the hardware characteristics of vSphere nodes and vSphere clusters. The discovery service makes this platform inventory data available to the Cluster Automation Policy engine to assist with Kubernetes cluster placement. The TCA admin can add tags to the infrastructure inventory to layer additional business logic on top of the discovered data.

Cluster Automation Policy: The Cluster Automation Policy defines the mapping of Tanzu Kubernetes Cluster templates to infrastructure. VMware Telco Cloud Platform allows TCA admins to use a Cluster Automation Policy to identify and group infrastructure resources, helping users deploy higher-level components on them. The Cluster Automation Policy indicates the intended usage of the infrastructure. At cluster creation time, Telco Cloud Automation validates whether the underlying infrastructure resources meet the Kubernetes template requirements.
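The validation step can be sketched as a comparison between aggregate template requirements and the discovered capacity and tags of a target cluster. This Python sketch is a simplified, hypothetical model (`InfraCluster`, `Requirements`, and `validate_placement` are illustrative names, not TCA objects), and it deliberately ignores CPU overcommit, HA admission control, and per-host fragmentation, which a real placement check would need to account for.

```python
from dataclasses import dataclass


@dataclass
class InfraCluster:
    """Hypothetical discovered vSphere cluster: free capacity plus tags."""
    name: str
    free_vcpus: int
    free_memory_gb: int
    tags: set


@dataclass
class Requirements:
    """Hypothetical aggregate requirements derived from a cluster template."""
    vcpus: int
    memory_gb: int
    required_tags: set


def validate_placement(req, infra):
    """Return unmet requirements; an empty list means the placement is valid.

    Simplification: capacity is compared 1:1, ignoring CPU overcommit,
    HA admission control, and per-host fragmentation.
    """
    errors = []
    if infra.free_vcpus < req.vcpus:
        errors.append(f"vCPU: need {req.vcpus}, have {infra.free_vcpus}")
    if infra.free_memory_gb < req.memory_gb:
        errors.append(
            f"memory: need {req.memory_gb} GB, have {infra.free_memory_gb} GB"
        )
    missing = req.required_tags - infra.tags
    if missing:
        errors.append(f"missing tags: {sorted(missing)}")
    return errors


req = Requirements(vcpus=44, memory_gb=176, required_tags={"sriov"})
print(validate_placement(req, InfraCluster("wld-01", 64, 128, {"sriov", "ptp"})))
# ['memory: need 176 GB, have 128 GB']
```

Returning a list of reasons rather than a single boolean makes it easier to explain to a user why a template cannot land on a given cluster.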

K8s Bootstrapper: When the deployment requirements are met, Telco Cloud Automation generates a deployment specification. The K8s Bootstrapper, a component of TCA-CP, uses the Kubernetes cluster APIs to create the cluster based on that deployment specification.
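The format of the deployment specification that TCA generates is internal and is not described in this section. The following Python sketch assumes, for illustration only, that it resembles a Cluster API `Cluster` object (Tanzu Kubernetes Grid is built on Cluster API). `build_cluster_spec` is a hypothetical helper, and the CIDR values are example values. The sketch shows the gating behavior described above: no spec is produced unless placement validation reported no problems.

```python
import json


def build_cluster_spec(name, namespace, pod_cidr, service_cidr, placement_errors):
    """Return a minimal Cluster API-style Cluster object as a plain dict.

    Mirrors the design rule that a spec is generated only when the deployment
    requirements are met, so any placement error aborts generation.
    """
    if placement_errors:
        raise ValueError("requirements not met: " + "; ".join(placement_errors))
    return {
        "apiVersion": "cluster.x-k8s.io/v1beta1",
        "kind": "Cluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "clusterNetwork": {
                "pods": {"cidrBlocks": [pod_cidr]},
                "services": {"cidrBlocks": [service_cidr]},
            },
            # A complete spec would also reference control plane and
            # infrastructure objects; they are omitted in this sketch.
        },
    }


spec = build_cluster_spec("wld-01", "default", "100.96.0.0/11", "100.64.0.0/13", [])
print(json.dumps(spec, indent=2))

try:
    build_cluster_spec("wld-02", "default", "100.96.0.0/11", "100.64.0.0/13",
                       ["memory: need 176 GB, have 128 GB"])
except ValueError as err:
    print(err)  # placement failed, so no spec is produced
```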

| Design Recommendation | Design Justification | Design Implication |
|---|---|---|
| Create unique Kubernetes cluster templates for each 5G system profile defined in the Workload Profile and Cluster Sizing section. | Cluster templates serve as a blueprint for Kubernetes cluster deployments and are intended to minimize repetitive tasks. Cluster templates enforce best practices and define guardrails for infrastructure management. | Kubernetes templates must be maintained to align with the latest CNF requirements. |
| When creating the Tanzu management cluster template, define a single network label for all nodes across the cluster. | Tanzu management cluster nodes require only a single NIC per node. | None |
| When creating workload cluster templates, define only the network labels required for Tanzu Standard for Telco management and CNF OAM. | | None |
| When creating workload cluster templates, enable Multus CNI for clusters that host Pods requiring multiple NICs. | | Multus is an upstream plugin and follows the community support model. |
| When creating workload cluster templates, enable Whereabouts if cluster-wide IPAM is required for secondary Pod NICs. | | Whereabouts is an upstream plugin and follows the community support model. |
| When defining workload cluster templates, enable the nfs_client CSI for multi-access read and write support. | Some CNF vendors require ReadWriteMany (RWX) persistent volume support. The NFS provider supports the Kubernetes RWX persistent volume access mode. | The NFS backend must be onboarded separately, outside of Telco Cloud Automation. |
| When defining workload and management Kubernetes templates, enable taints on all control plane nodes. | Improves the security, stability, and manageability of the control plane. | None |
| When defining workload and management cluster templates, do not enable multiple node pools for the Kubernetes control plane nodes. | TCP supports only a single control plane node group per cluster. | None |
| When defining a workload cluster template for a cluster designed to host CNFs with different performance profiles, create a separate node pool for each profile. Define unique node labels to distinguish node members from other node pools. | | Too many node pools might lead to resource underutilization. |
| Pre-define a set of infrastructure tags and apply the tags to SDDC infrastructure resources based on the CNF and Kubernetes resource requirements. | Tags simplify the grouping of infrastructure components. Tags can be based on hardware attributes or business logic. | Infrastructure tag mapping requires administrative-level visibility into the infrastructure composition. |
| Pre-define a set of CaaS tags and apply the tags to each Kubernetes cluster template defined by the TCA admin. | Tags simplify the grouping of Kubernetes templates. Tags can be based on hardware attributes or business logic. | Kubernetes template tag mapping requires advanced knowledge of CNF requirements. The TCA admin can perform the mapping with assistance from Tanzu Kubernetes Cluster admins. |
| Pre-define a set of CNF tags and apply the tags to each CSAR file uploaded to the CNF catalog. | Tags simplify the searching of CaaS resources. | None |
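Two of these recommendations, tainting control plane nodes and giving each performance-profile node pool a unique node label, work together at scheduling time. The following Python sketch is a simplified model, not the kube-scheduler algorithm: it matches a Pod's nodeSelector against node labels and requires an exact (key, effect) toleration for every NoSchedule or NoExecute taint, ignoring toleration operators, values, and affinities. The label key `telco.example.com/profile` and the node names are hypothetical; `node-role.kubernetes.io/control-plane` is the upstream Kubernetes control plane taint key.

```python
from dataclasses import dataclass, field

# Upstream Kubernetes taint key for control plane nodes.
CONTROL_PLANE_TAINT = ("node-role.kubernetes.io/control-plane", "NoSchedule")
BLOCKING_EFFECTS = {"NoSchedule", "NoExecute"}


@dataclass
class Node:
    name: str
    labels: dict = field(default_factory=dict)
    taints: list = field(default_factory=list)  # (key, effect) tuples


@dataclass
class Pod:
    node_selector: dict = field(default_factory=dict)
    tolerations: list = field(default_factory=list)  # (key, effect) tuples


def schedulable_nodes(pod, nodes):
    """Simplified filter: labels must match nodeSelector, and every blocking
    taint on the node needs an exact (key, effect) toleration on the Pod."""
    eligible = []
    for node in nodes:
        labels_ok = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
        taints_ok = all(
            taint in pod.tolerations
            for taint in node.taints
            if taint[1] in BLOCKING_EFFECTS
        )
        if labels_ok and taints_ok:
            eligible.append(node.name)
    return eligible


PROFILE = "telco.example.com/profile"  # hypothetical node label key
nodes = [
    Node("cp-0", taints=[CONTROL_PLANE_TAINT]),
    Node("np-dpdk-0", labels={PROFILE: "dpdk"}),
    Node("np-general-0", labels={PROFILE: "general"}),
]

print(schedulable_nodes(Pod(node_selector={PROFILE: "dpdk"}), nodes))  # ['np-dpdk-0']
print(schedulable_nodes(Pod(), nodes))  # ['np-dpdk-0', 'np-general-0']
```

The tainted control plane node never receives ordinary CNF Pods, and a CNF that selects its profile label lands only in the matching node pool, which is why each pool needs a label that no other pool shares.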

After deploying the resources with Telco Cloud Automation, you cannot rename infrastructure objects such as Datastores or Resource Pools.