This network virtualization design uses the vSphere Distributed Switch (VDS) along with Network I/O Control.

Design Goals

The following high-level design goals apply regardless of your environment.

Meet diverse needs: The network must meet the diverse requirements of different entities in an organization. These entities include applications, services, storage, administrators, and users.

Reduce costs: Reducing costs is one of the simpler goals to achieve in the vSphere infrastructure. Server consolidation reduces network costs by reducing the number of required network ports and NICs, but a more efficient network design can reduce costs further. For example, configuring two 25 GbE NICs might be more cost-effective than configuring four 10 GbE NICs.
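To make the example concrete, the following Python sketch compares the two layouts by aggregate bandwidth and by the number of physical switch ports they consume. It assumes that each NIC is a single-port adapter cabled to one switch port. The `NicLayout` class is hypothetical, and hardware prices are deliberately left out because they depend on your vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NicLayout:
    """Hypothetical description of a host NIC layout (single-port NICs assumed)."""
    name: str
    nic_count: int
    speed_gbps: int

    @property
    def aggregate_gbps(self) -> int:
        # Total line rate across all NICs in the layout.
        return self.nic_count * self.speed_gbps

    @property
    def switch_ports(self) -> int:
        # Assumption: one physical switch port per single-port NIC.
        return self.nic_count


two_by_25 = NicLayout("2 x 25 GbE", nic_count=2, speed_gbps=25)
four_by_10 = NicLayout("4 x 10 GbE", nic_count=4, speed_gbps=10)

for layout in (two_by_25, four_by_10):
    print(f"{layout.name}: {layout.aggregate_gbps} Gbps over {layout.switch_ports} switch ports")
# 2 x 25 GbE: 50 Gbps over 2 switch ports
# 4 x 10 GbE: 40 Gbps over 4 switch ports
```

Under these assumptions, the 25 GbE layout delivers more aggregate bandwidth while halving the number of NICs and switch ports to buy and maintain.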

Improve performance: Providing sufficient bandwidth reduces contention and latency, which improves performance and decreases maintenance time.

Improve availability: A well-designed network improves availability by providing network redundancy.

Support security: A well-designed network supports an acceptable level of security through controlled access and isolation, where required.

Enhance infrastructure functionality: You can configure the network to support vSphere features such as vSphere vMotion, vSphere High Availability, and vSphere Fault Tolerance.

Network Best Practices

Follow these networking best practices throughout your environment:

Separate the network services to achieve high security and better performance.

Use Network I/O Control and traffic shaping to guarantee bandwidth to critical VMs. During network contention, these critical VMs receive a high percentage of the bandwidth.

Separate the network services on a vSphere Distributed Switch by attaching them to port groups with different VLAN IDs.

Keep vSphere vMotion traffic on a separate network. When a migration using vSphere vMotion occurs, the memory contents of the guest operating system are transmitted over the network. You can place vSphere vMotion on a separate network by using a dedicated vSphere vMotion VLAN.

Ensure that physical network adapters that are connected to the same vSphere Standard or Distributed Switch are also connected to the same physical network.
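A design review can check the port group and VLAN separation in these practices mechanically. The following Python sketch assumes a hypothetical plan that maps each port group name to a VLAN ID. The names and IDs are illustrative, not values from this design. The sketch reports any VLAN that more than one port group shares, which would merge traffic types that these practices keep apart.

```python
from collections import defaultdict

# Hypothetical port group plan: port group name -> VLAN ID (illustrative values).
PORT_GROUPS = {
    "mgmt-pg": 100,
    "vmotion-pg": 101,
    "vsan-pg": 102,
    "vm-pg": 200,
}


def find_vlan_conflicts(port_groups: dict[str, int]) -> dict[int, list[str]]:
    """Return each VLAN ID that more than one port group uses, with those port groups."""
    by_vlan: dict[int, list[str]] = defaultdict(list)
    for name, vlan_id in port_groups.items():
        # Usable 802.1Q tagged VLAN IDs are 1-4094.
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"{name}: VLAN ID {vlan_id} is outside 1-4094")
        by_vlan[vlan_id].append(name)
    return {vlan: sorted(names) for vlan, names in by_vlan.items() if len(names) > 1}


print(find_vlan_conflicts(PORT_GROUPS))  # {}
```

An empty result means every traffic type, including vSphere vMotion, has its own VLAN.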

Network Segmentation and VLANs

Separate the different traffic types to improve access security and to reduce contention and latency.

High latency on any network can negatively affect performance. Some components are more sensitive to high latency than others. For example, high latency on IP storage networks or on the vSphere Fault Tolerance logging network can negatively affect the performance of multiple VMs.

Depending on the application or service, high latency on specific VM networks can also negatively affect performance. Determine which workloads and networks are sensitive to high latency by using the information gathered from the current state analysis and by interviewing key stakeholders and SMEs.

Determine the required number of networks or VLANs depending on the type of traffic.

vSphere Distributed Switch

Create a single virtual switch per vSphere cluster. Configure a separate port group for each type of network traffic to simplify configuration and monitoring.

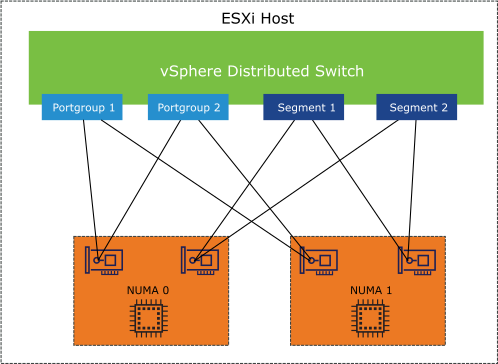

When using NSX-T, allocate four physical NICs to the distributed switch. Use two physical NICs (one per NUMA node) for vSphere Distributed Switch port groups and the other two physical NICs for NSX-T segments.
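The following Python sketch shows one way to derive this allocation from a NIC inventory. The `vmnicN` names and NUMA assignments are hypothetical inputs that you would read from your host hardware. The sketch picks one NIC from each of two NUMA nodes for the distributed switch port groups and leaves the remaining two for NSX-T segments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicalNic:
    name: str       # hypothetical vmnic name, for example "vmnic0"
    numa_node: int  # NUMA node that the NIC's PCIe slot is attached to


def plan_uplinks(nics: list[PhysicalNic]) -> dict[str, list[str]]:
    """Split four NICs into two VDS port group uplinks (one per NUMA node) and two NSX-T uplinks."""
    if len(nics) != 4:
        raise ValueError("this layout expects exactly four physical NICs")
    by_node: dict[int, list[str]] = {}
    for nic in sorted(nics, key=lambda n: n.name):
        by_node.setdefault(nic.numa_node, []).append(nic.name)
    if len(by_node) < 2:
        raise ValueError("the NICs must span at least two NUMA nodes")
    # One NIC from each of the first two NUMA nodes carries the VDS port groups.
    vds = [by_node[node][0] for node in sorted(by_node)[:2]]
    # The remaining two NICs are left for NSX-T segments.
    nsx = sorted(nic.name for nic in nics if nic.name not in vds)
    return {"vds_port_groups": vds, "nsx_segments": nsx}


inventory = [
    PhysicalNic("vmnic0", 0),
    PhysicalNic("vmnic1", 0),
    PhysicalNic("vmnic2", 1),
    PhysicalNic("vmnic3", 1),
]
print(plan_uplinks(inventory))
# {'vds_port_groups': ['vmnic0', 'vmnic2'], 'nsx_segments': ['vmnic1', 'vmnic3']}
```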

Health Check

Health Check helps identify and troubleshoot configuration errors in vSphere distributed switches. The common configuration errors are as follows:

Mismatched VLAN trunks between an ESXi host and the physical switches to which it is connected.

Mismatched MTU settings between physical network adapters, distributed switches, and physical switch ports.

Virtual switch teaming policies that do not match the physical switch port-channel settings.

To detect these errors, Health Check monitors the VLAN, MTU, and teaming policy settings.
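The following Python sketch models the comparisons behind these three error classes for one host uplink and its physical switch port. This is not how vSphere implements Health Check. The sketch only compares declared settings, and the `LinkSide` model and its values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkSide:
    """Hypothetical snapshot of one side of a host-to-switch link."""
    vlans: frozenset[int]
    mtu: int
    port_channel: bool  # VDS: IP hash or LACP teaming; switch: port-channel configured


def health_check(vds: LinkSide, switch: LinkSide) -> list[str]:
    """Return human-readable findings for the VLAN, MTU, and teaming error classes."""
    findings = []
    missing = vds.vlans - switch.vlans
    if missing:
        findings.append(f"VLANs not trunked on the physical switch: {sorted(missing)}")
    # Frames at the VDS MTU are dropped if the physical port accepts a smaller MTU.
    if switch.mtu < vds.mtu:
        findings.append(f"MTU mismatch: VDS {vds.mtu}, physical switch {switch.mtu}")
    if vds.port_channel != switch.port_channel:
        findings.append("Teaming policy does not match the physical switch port-channel setting")
    return findings


vds_side = LinkSide(frozenset({100, 101, 102}), mtu=9000, port_channel=False)
switch_side = LinkSide(frozenset({100, 101}), mtu=1500, port_channel=False)
for finding in health_check(vds_side, switch_side):
    print(finding)
# VLANs not trunked on the physical switch: [102]
# MTU mismatch: VDS 9000, physical switch 1500
```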

NIC Teaming

You can use NIC teaming to increase the network bandwidth in a network path and to provide the redundancy that supports high availability.

NIC teaming helps avoid a single point of failure and provides options for traffic load balancing. To further reduce the risk of a single point of failure, build NIC teams by using ports from multiple NICs and motherboard interfaces.
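The following Python sketch checks a team for this risk. It asks whether the failure of any single physical adapter would leave the team without a working uplink. The uplink names and the adapter labels (`onboard`, `pcie-slot1`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Uplink:
    vmnic: str    # hypothetical uplink name
    adapter: str  # physical card or motherboard (onboard) interface that owns the port


def has_single_point_of_failure(team: list[Uplink]) -> bool:
    """True if the failure of one physical adapter would take down every uplink in the team."""
    if not team:
        return True  # an empty team has no working uplink at all
    for adapter in {u.adapter for u in team}:
        survivors = [u for u in team if u.adapter != adapter]
        if not survivors:
            return True
    return False


# Both ports on the same dual-port card: losing that card loses the team.
same_card = [Uplink("vmnic0", "pcie-slot1"), Uplink("vmnic1", "pcie-slot1")]
# One onboard port plus one port on an add-in card.
mixed = [Uplink("vmnic0", "onboard"), Uplink("vmnic2", "pcie-slot1")]
print(has_single_point_of_failure(same_card))  # True
print(has_single_point_of_failure(mixed))      # False
```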

Network I/O Control

When Network I/O Control is enabled, the distributed switch allocates bandwidth for the traffic that is related to the main vSphere features.

When network contention occurs, Network I/O Control enforces the share value set for each traffic type. As a result, less important traffic, as defined by its share value, is throttled, and more important traffic types get access to more network resources.
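As a worked example, the following Python sketch splits a saturated uplink among the active traffic types in proportion to their shares. It uses the predefined share levels Low (25), Normal (50), and High (100), applied as recommended in this section's design recommendations table. It is a simplification: it ignores reservations and limits, and it assumes that all four traffic types are active on the same 25 GbE uplink at the same time.

```python
# Predefined Network I/O Control share levels.
SHARE_LEVELS = {"Low": 25, "Normal": 50, "High": 100}


def allocate_under_contention(link_gbps: float, active: dict[str, str]) -> dict[str, float]:
    """Split a saturated uplink among active traffic types in proportion to their shares."""
    shares = {traffic: SHARE_LEVELS[level] for traffic, level in active.items()}
    total = sum(shares.values())
    # Only the traffic types passed in compete; idle types are simply not listed.
    return {traffic: link_gbps * share / total for traffic, share in shares.items()}


active_traffic = {
    "virtual machine": "High",
    "vSAN": "High",
    "management": "Normal",
    "vMotion": "Low",
}
for traffic, gbps in allocate_under_contention(25, active_traffic).items():
    print(f"{traffic:>15}: {gbps:5.2f} Gbps")
```

With 275 total shares, each High traffic type receives about 9.1 Gbps, management about 4.5 Gbps, and vMotion about 2.3 Gbps. vSAN therefore gets four times the bandwidth of vMotion while contention lasts.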

Network I/O Control supports bandwidth reservation for system traffic based on the capacity of the physical adapters on an ESXi host. It also enables fine-grained resource control at the VM network adapter level, similar to the CPU and memory reservation model in vSphere DRS.
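Reservations are bounded by adapter capacity. The following Python sketch validates a hypothetical set of system traffic reservations against one physical adapter. It assumes the limit documented for Network I/O Control version 3, under which no more than 75 percent of a physical adapter's bandwidth can be reserved. Confirm this limit for your vSphere release. The reservation values are illustrative only.

```python
# Assumption: NIOC v3 lets you reserve at most 75% of a physical adapter's bandwidth.
MAX_RESERVABLE_FRACTION = 0.75


def remaining_reservable_gbps(adapter_gbps: float, reservations_gbps: dict[str, float]) -> float:
    """Return how much bandwidth can still be reserved, or raise if the cap is exceeded."""
    cap = adapter_gbps * MAX_RESERVABLE_FRACTION
    total = sum(reservations_gbps.values())
    if total > cap:
        raise ValueError(f"reservations total {total} Gbps, above the {cap} Gbps cap")
    return cap - total


# Hypothetical reservations on a 25 GbE uplink.
reservations = {"vSAN": 5.0, "vMotion": 2.0, "management": 1.0}
print(remaining_reservable_gbps(25, reservations))  # 10.75
```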

TCP/IP Stack

Use the vMotion TCP/IP stack to isolate the traffic for vSphere vMotion and to assign a dedicated default gateway for the vSphere vMotion traffic.

By using a separate TCP/IP stack, you can manage vSphere vMotion and cold migration traffic according to the network topology and as required by your organization:

Route the migration traffic of VMs, whether powered on or off, through a default gateway that is different from the gateway assigned to the default stack on the ESXi host.

Assign a separate set of buffers and sockets.

Avoid the routing table conflicts that might occur when many features use a common TCP/IP stack.

Isolate the traffic to improve security.
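The following Python sketch uses the standard `ipaddress` module to illustrate the routing benefit. The model is deliberately simplified and hypothetical: each stack has one connected subnet and its own default gateway, and all addresses are illustrative. The stack names follow the ESXi `defaultTcpipStack` and `vmotion` netstacks but are used here only as labels.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class TcpIpStack:
    """Simplified model: each stack has its own connected subnet and default gateway."""
    name: str
    connected: ipaddress.IPv4Network
    default_gateway: ipaddress.IPv4Address

    def next_hop(self, destination: str) -> ipaddress.IPv4Address:
        dest = ipaddress.IPv4Address(destination)
        # On-link destinations are reached directly; everything else goes to this
        # stack's own default gateway, independent of any other stack's routes.
        return dest if dest in self.connected else self.default_gateway


default_stack = TcpIpStack(
    "defaultTcpipStack",
    ipaddress.IPv4Network("10.0.0.0/24"),
    ipaddress.IPv4Address("10.0.0.1"),
)
vmotion_stack = TcpIpStack(
    "vmotion",
    ipaddress.IPv4Network("172.16.10.0/24"),
    ipaddress.IPv4Address("172.16.10.1"),
)

# A vMotion VMkernel port on a peer host in another Layer 3 subnet.
peer = "172.16.20.15"
print(default_stack.next_hop(peer))  # 10.0.0.1
print(vmotion_stack.next_hop(peer))  # 172.16.10.1
```

Without the separate stack, migration traffic to the peer would follow the management gateway. With it, the traffic leaves through the vMotion subnet's own gateway.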

SR-IOV

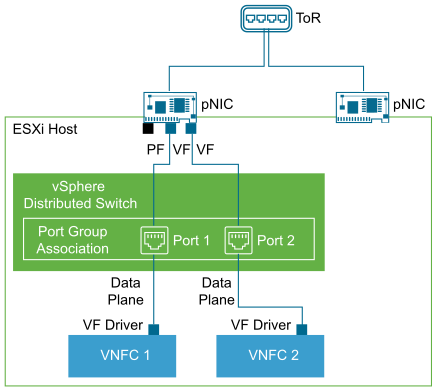

SR-IOV is a specification that allows a single Peripheral Component Interconnect Express (PCIe) physical device under a single root port to appear as multiple separate physical devices to the hypervisor or the guest operating system.

SR-IOV uses Physical Functions (PFs) and Virtual Functions (VFs) to manage global functions for the SR-IOV devices. PFs are full PCIe functions that can configure and manage the SR-IOV functionality. VFs are lightweight PCIe functions that support data flow but have a restricted set of configuration resources. The number of VFs provided to the hypervisor or the guest operating system depends on the device. SR-IOV-enabled PCIe devices require appropriate BIOS, hardware, and SR-IOV support in the guest operating system driver or hypervisor instance.

In vSphere, a VM can use an SR-IOV virtual function for networking. The VM and the physical adapter exchange data directly without using the VMkernel stack as an intermediary. Bypassing the VMkernel for networking reduces the latency and improves the CPU efficiency for high data transfer performance.
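To illustrate the relationship between the two function types, the following Python sketch models a PF that exposes a device-dependent number of VFs and hands one VF to each VM. The class, the VF limit, and the names are hypothetical. On a real host, you enable SR-IOV and set the number of VFs on the physical adapter in vSphere, not through code like this.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicalFunction:
    """Hypothetical model of an SR-IOV physical function (PF) and its virtual functions (VFs)."""
    name: str
    max_vfs: int  # device-dependent limit on the number of VFs
    vf_owner: dict[int, str] = field(default_factory=dict)  # VF index -> VM name

    def assign_vf(self, vm: str) -> int:
        """Give the VM the lowest free VF index, or raise if every VF is taken."""
        for index in range(self.max_vfs):
            if index not in self.vf_owner:
                self.vf_owner[index] = vm
                return index
        raise RuntimeError(f"{self.name}: all {self.max_vfs} VFs are in use")

    def release_vf(self, index: int) -> None:
        # The PF side manages VF assignment; releasing frees the index for reuse.
        self.vf_owner.pop(index, None)


pf = PhysicalFunction("vmnic4", max_vfs=2)
print(pf.assign_vf("vm-a"))  # 0
print(pf.assign_vf("vm-b"))  # 1
```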

| Design Recommendation | Design Justification | Design Implication |
|---|---|---|
| Use two physical NICs in the management and edge clusters. | Provides redundancy to all port groups. | None |
| Use four physical NICs in compute clusters. | Provides redundancy to all port groups and segments. | None |
| Use vSphere Distributed Switches. | Simplifies the management of the virtual network. | Migration from a standard switch to a distributed switch requires a minimum of two physical NICs to maintain redundancy. |
| Use a single vSphere Distributed Switch per vSphere cluster. | Reduces the complexity of the network design. | Increases the number of vSphere Distributed Switches that must be managed. |
| Use ephemeral port binding for the management port group. | Provides the recovery option for the vCenter Server instance that manages the distributed switch. | Port-level permissions and controls are lost across power cycles, and no historical context is saved. |
| Use static port binding for all non-management port groups. | Ensures that a VM connects to the same port on the vSphere Distributed Switch, which allows for historical data and port-level monitoring. | None |
| Enable Health Check on all vSphere Distributed Switches. | Verifies that all VLANs are trunked to all ESXi hosts attached to the vSphere Distributed Switch and that the MTU sizes match the physical network. | You must have a minimum of two physical uplinks to use this feature. |
| Use the Route based on physical NIC load teaming algorithm for all port groups. | | None |
| Enable Network I/O Control on all distributed switches. | Increases the resiliency and performance of the network. | If configured incorrectly, Network I/O Control might impact the network performance for critical traffic types. |
| Set the share value to Low for non-critical traffic types such as vMotion and any unused IP storage traffic types such as NFS and iSCSI. | During network contention, these traffic types are not as important as the VM or vSAN traffic. | During network contention, vMotion takes longer than usual to complete. |
| Set the share value for management traffic to Normal. | | None |
| Set the share value to High for the VM and vSAN traffic. | | None |
| Use the vMotion TCP/IP stack for vSphere vMotion traffic. | By using the vMotion TCP/IP stack, vSphere vMotion traffic can be assigned a default gateway on its own subnet and can go over Layer 3 networks. | None |
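The Route based on physical NIC load policy (load-based teaming) is documented as checking uplink load every 30 seconds. If an uplink's utilization exceeds 75 percent, the policy moves the port with the highest I/O to a different uplink. The following Python sketch performs one such pass over a hypothetical placement of ports on uplinks. Choosing the least-utilized uplink as the destination is an assumption of this sketch, and the port names and load figures are illustrative.

```python
UTILIZATION_THRESHOLD = 0.75  # documented overload threshold


def rebalance_once(capacity_gbps: dict[str, float],
                   placement: dict[str, str],
                   port_load_gbps: dict[str, float]) -> list[tuple[str, str, str]]:
    """One load-check pass; mutates `placement` and returns (port, from, to) moves."""
    if len(capacity_gbps) < 2:
        return []  # no other uplink to move ports to

    def utilization(uplink: str) -> float:
        load = sum(port_load_gbps[p] for p, u in placement.items() if u == uplink)
        return load / capacity_gbps[uplink]

    moves = []
    for uplink in capacity_gbps:
        if utilization(uplink) <= UTILIZATION_THRESHOLD:
            continue
        # Move the port with the highest load off the overloaded uplink.
        busiest = max((p for p, u in placement.items() if u == uplink), key=port_load_gbps.__getitem__)
        # Assumption: the destination is the least-utilized other uplink.
        target = min((u for u in capacity_gbps if u != uplink), key=utilization)
        placement[busiest] = target
        moves.append((busiest, uplink, target))
    return moves


capacity = {"uplink1": 25.0, "uplink2": 25.0}
placement = {"vm-a": "uplink1", "vm-b": "uplink1", "vm-c": "uplink2"}
load = {"vm-a": 12.0, "vm-b": 8.0, "vm-c": 2.0}
print(rebalance_once(capacity, placement, load))  # [('vm-a', 'uplink1', 'uplink2')]
```

Here uplink1 runs at 80 percent, so its busiest port moves. After the move, both uplinks are below the threshold, and a second pass makes no changes.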