The paravirtualized network interface card (VMXNET3) from VMware provides improved performance over other virtual network interfaces. It provides several advanced features including multi-queue support, Receive Side Scaling (RSS), Large Receive Offload (LRO), IPv4 and IPv6 offloads, and MSI and MSI-X interrupt delivery. By default, VMXNET3 also supports an interrupt coalescing algorithm.

VMware Telco Cloud Platform extends the following performance features to CNFs:

Data Plane Development Kit (DPDK), an Intel-led packet processing acceleration technology, accelerates data-intensive workloads. Its capabilities include optimizations through poll mode drivers, CPU affinity and optimization, and buffer management.

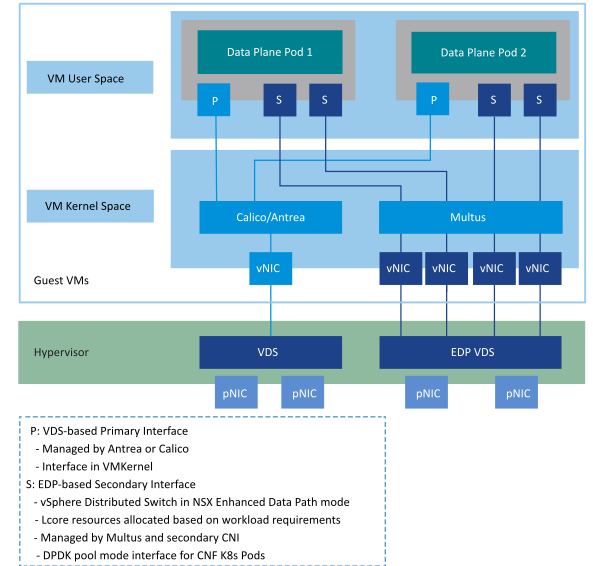

VMware NSX managed Virtual Distributed Switch (VDS) in Enhanced Data Path mode (EDP) leverages DPDK techniques and provides fast virtual switching fabric on VMware vSphere.

Multi-tiered routing and huge pages improve performance. Huge pages increase the access efficiency of translation look-aside buffers (TLBs).
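The effect of huge pages on the TLB can be illustrated with simple arithmetic. The sketch below counts how many pages, and therefore how many address translations, are needed to map a buffer. The page sizes (4 KiB, 2 MiB, 1 GiB) and the 1 GiB buffer pool are assumptions chosen for illustration, not values taken from this guide.

```python
# Page sizes commonly available on x86-64 systems (assumption for illustration).
PAGE_SIZES = {
    "4 KiB": 4 * 1024,
    "2 MiB": 2 * 1024**2,
    "1 GiB": 1024**3,
}


def pages_needed(buffer_bytes: int, page_bytes: int) -> int:
    """Return the number of pages (one translation each) needed to map a buffer."""
    return -(-buffer_bytes // page_bytes)  # ceiling division


if __name__ == "__main__":
    pool_bytes = 1024**3  # hypothetical 1 GiB packet buffer pool
    for name, size in PAGE_SIZES.items():
        print(f"{name:>5} pages: {pages_needed(pool_bytes, size):>7} translations")
```

With fewer translations to cache, a larger share of the buffer stays covered by the limited number of TLB entries, which is why packet buffers are typically placed in huge pages.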

EDP is an efficient virtual networking stack that uses industry advances such as DPDK. By using DPDK principles, EDP achieves higher packet throughput rates than previous virtual networking stacks.

EDP uses dedicated CPU resources assigned to its networking processes, so the administrator determines in advance how many resources the platform's virtual networking consumes. Dedicating these resources also clearly separates the resources of the virtualization platform from those of the CNFs.

Before you attach the secondary CNI for a data plane intensive workload, the hosts in the compute clusters where the Pod runs must meet the following requirements:

vNICs that are intended for use with the EDP switch must be connected with similar bandwidth capacity on all NUMA nodes.

A VDS with EDP enabled and with at least one dedicated physical NIC per NUMA node must be created on each host.

The same number of cores from each NUMA node must be assigned to the EDP switch.

A Poll Mode Driver for EDP must be installed on the ESXi hosts so that they can use the physical NICs that are dedicated to the switch. Identify and download the correct drivers by looking for "Enhanced Data Path - Poll Mode" in the feature column of the VMware Compatibility Guide.
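The NUMA-related requirements above can be expressed as a simple pre-flight check. The following is a minimal sketch, not a VMware tool: the `NumaNode` structure, the `check_edp_host` function, and the `bandwidth_tolerance` parameter are hypothetical names introduced for illustration, and the host data would have to be collected by other means.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NumaNode:
    """Hypothetical description of the EDP resources on one NUMA node."""
    edp_cores: int  # cores assigned to the EDP switch on this node
    nic_speeds_gbps: List[float] = field(default_factory=list)  # dedicated NICs


def check_edp_host(nodes: List[NumaNode], bandwidth_tolerance: float = 0.0) -> List[str]:
    """Return a list of problems found; an empty list means all checks pass."""
    if not nodes:
        return ["host has no NUMA nodes described"]

    problems = []

    # At least one dedicated physical NIC per NUMA node.
    for index, node in enumerate(nodes):
        if not node.nic_speeds_gbps:
            problems.append(f"NUMA node {index}: no dedicated physical NIC")

    # The same number of cores from each NUMA node.
    if len({node.edp_cores for node in nodes}) > 1:
        problems.append("EDP core count differs between NUMA nodes")

    # Similar bandwidth capacity on all NUMA nodes. The tolerance is an
    # assumption: 0.0 demands equal totals, 0.1 allows a 10% difference.
    totals = [sum(node.nic_speeds_gbps) for node in nodes]
    if max(totals) > 0 and max(totals) - min(totals) > bandwidth_tolerance * max(totals):
        problems.append("NIC bandwidth differs between NUMA nodes")

    return problems


if __name__ == "__main__":
    host = [NumaNode(edp_cores=2, nic_speeds_gbps=[25, 25]),
            NumaNode(edp_cores=2, nic_speeds_gbps=[25, 25])]
    print(check_edp_host(host) or "host layout looks consistent")
```

The check returns messages instead of raising an exception so that all problems on a host are reported in one pass.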

A data plane intensive VNFC must always use VMXNET3 as its vNIC instead of using other interfaces. If a VNFC uses DPDK, a VMXNET3 poll mode driver must be used.

For more information about DPDK support in the VMXNET3 driver, see Poll Mode Driver for Paravirtual VMXNET3 NIC.
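Inside a Linux guest, one way to confirm that an interface is backed by VMXNET3 is to read the driver link that the kernel exposes in sysfs. The sketch below assumes a Linux guest and an interface that is still bound to the kernel driver. The function names and the `eth1` interface are hypothetical.

```python
import os
from typing import Optional


def bound_driver(iface: str, sysfs_root: str = "/sys/class/net") -> Optional[str]:
    """Return the kernel driver name bound to iface, or None if it cannot be read."""
    link = os.path.join(sysfs_root, iface, "device", "driver")
    try:
        # The link points at the driver directory; its last component is the name.
        return os.path.basename(os.readlink(link))
    except OSError:
        return None


def uses_vmxnet3(iface: str, sysfs_root: str = "/sys/class/net") -> bool:
    """True if the interface is currently bound to the vmxnet3 kernel driver."""
    return bound_driver(iface, sysfs_root) == "vmxnet3"


if __name__ == "__main__":
    name = "eth1"  # hypothetical data plane interface
    print(f"{name}: driver={bound_driver(name)} vmxnet3={uses_vmxnet3(name)}")
```

A device that has already been rebound to a userspace I/O driver for DPDK typically no longer appears as a network interface, in which case this check returns `None` and the PCI device must be inspected instead.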