Telco Cloud workloads consist of CNFs in the form of container images and Helm charts from network vendors. This section outlines the recommendations to deploy and maintain a secure container image registry using VMware Harbor.

Container images and Helm charts must be stored in registries that are always available and easily accessible. Public or cloud-based registries lack critical security compliance features to operate and maintain carrier-class deployments. Harbor addresses these issues by providing trust, compliance, performance, and interoperability.

Telco Cloud Automation supports Helm charts stored either in the chartmuseum component of Harbor or as OCI charts pushed directly to the repository.
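As a rough illustration of the two storage options, the following Python sketch builds the reference a Helm client would typically use in each case. The host `harbor.example.com`, the project `cnf-vendor-a`, the chart name, and both helper names are hypothetical. The `/chartrepo/<project>` path reflects how Harbor commonly exposes its chartmuseum component and should be verified against the deployed Harbor version.

```python
def chartmuseum_repo_url(harbor_host: str, project: str) -> str:
    """Repository URL for charts stored in Harbor's chartmuseum component.

    Assumption: chartmuseum is exposed under /chartrepo/<project>.
    """
    return f"https://{harbor_host}/chartrepo/{project}"


def oci_chart_ref(harbor_host: str, project: str, chart: str) -> str:
    """OCI reference for a chart pushed directly to a Harbor project."""
    return f"oci://{harbor_host}/{project}/{chart}"


if __name__ == "__main__":
    # Hypothetical host, project, and chart names, for illustration only.
    print(chartmuseum_repo_url("harbor.example.com", "cnf-vendor-a"))
    print(oci_chart_ref("harbor.example.com", "cnf-vendor-a", "upf"))
```

Keeping chart locations behind a helper like this makes it easier to switch a CNF catalog from chartmuseum URLs to OCI references in one place.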

The chartmuseum feature of Harbor is deprecated and is scheduled for removal in releases after Harbor 2.8.

The image registry allows users to pull container images and charts, and allows admins to publish new container images. Different categories of images are required to support Telco applications. Some CNF images are required by all Tanzu Kubernetes users, while others are required only by specific Tanzu Kubernetes users. To maintain image consistency, Cloud admins might need to ingest a set of golden CNF public images that all tenants can consume. Kubernetes admins might also require images that are not offered by the network vendors and might upload private CNF images or charts.

To support Telco applications, organize CNF images and Helm charts into projects and assign different access permissions to those projects using RBAC. Integration with a federated identity provider is also important because federated identity simplifies user management while providing enhanced security.

Container image scanning is important when building and maintaining container images. Irrespective of whether the image is built from source or supplied by VNF vendors, it is important to discover any known vulnerabilities and mitigate them before the Tanzu Kubernetes cluster deployment. Trivy is the default scanner in Harbor.

Image signing establishes image trust, ensuring that the image you run in the cluster is the image you intended to run. Notary digitally signs images using keys that allow service providers to securely publish and verify content. Components are signed before they are uploaded to a Harbor project.

Container image replication is essential for backup, disaster recovery, multi-datacenter, and edge scenarios. It allows a Telco operator to ensure image consistency and availability across many data centers based on a predefined policy.

Images that are not required for production must be bound to a retention policy so that obsolete CNF images do not remain in the registry. To prevent a single tenant from consuming all available storage resources, a resource quota can also be set per tenant project.
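The effect of a retention policy and a per-project quota can be sketched in a few lines of Python. This is a simplified model, not Harbor's API: the `Artifact` type, the "keep the N most recently pushed" rule, and the helper names are assumptions chosen for illustration.

```python
from datetime import datetime
from typing import Dict, List, NamedTuple, Sequence, Tuple


class Artifact(NamedTuple):
    tag: str
    pushed_at: datetime


def split_by_retention(
    artifacts: Sequence[Artifact], keep_latest: int
) -> Tuple[List[Artifact], List[Artifact]]:
    """Return (kept, expired) when retaining the most recently pushed artifacts."""
    if keep_latest < 0:
        raise ValueError("keep_latest must be non-negative")
    # Newest first, so the slice boundary separates kept from expired.
    ordered = sorted(artifacts, key=lambda a: a.pushed_at, reverse=True)
    return ordered[:keep_latest], ordered[keep_latest:]


def effective_quota_gib(
    project: str, default_gib: int, overrides: Dict[str, int]
) -> int:
    """Return the project-level override if one exists, else the default quota."""
    return overrides.get(project, default_gib)


if __name__ == "__main__":
    images = [
        Artifact("v1.0", datetime(2023, 1, 10)),
        Artifact("v1.1", datetime(2023, 3, 5)),
        Artifact("v1.2", datetime(2023, 6, 20)),
    ]
    kept, expired = split_by_retention(images, keep_latest=2)
    print([a.tag for a in kept], [a.tag for a in expired])
    print(effective_quota_gib("tenant-a", 50, {"tenant-b": 200}))
```

Modeling the policy offline like this can help operators preview which tags a rule would expire before enabling it on a production project.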

Harbor Deployment considerations

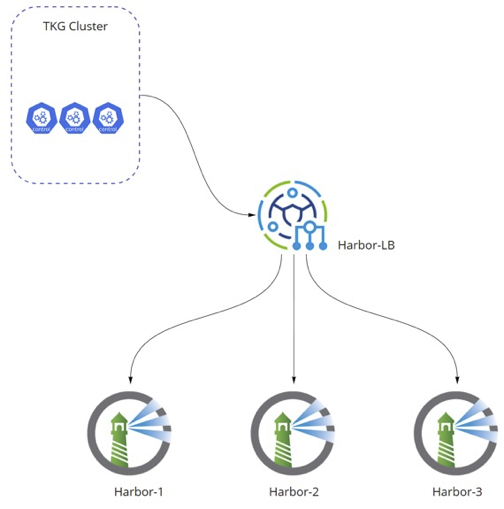

Telco Cloud Automation supports attaching multiple Harbors to a Tanzu Kubernetes cluster. This architecture allows CSPs to use different Harbors for different network functions in a distributed way. However, it is not designed to offer Harbor redundancy.

For pulling Helm charts, you can use one of the following two options to provide a redundant or resilient Harbor:

Resilient Harbor: In this method, Harbor is deployed as a resilient application on top of cloud-native Kubernetes infrastructure. It requires additional external components such as highly available external databases. This method is a single Harbor deployment that is constrained by locality and other factors.

Restriction: To implement a highly available Harbor as a cloud-native application, external Redis and PostgreSQL databases are required. These components are not covered in this reference architecture.

Load-balanced Harbor: This method places individual Harbor deployments behind a load-balancer to provide resiliency. However, all Harbor VMs in the pool behind the load-balancer must be in sync. If the images and charts are not properly replicated across all Harbors, the load-balancer might forward a request to a Harbor that does not have the image in the appropriate project.
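The synchronization requirement for a load-balanced pool can be checked with a simple set comparison. The sketch below assumes that an inventory of artifact references has already been collected from each Harbor by some other means; the inventory format, the Harbor names, and the function name are hypothetical.

```python
from typing import Dict, Set

# Harbor name -> set of "project/repository:tag" references held by that Harbor.
Inventory = Dict[str, Set[str]]


def find_missing_artifacts(inventories: Inventory) -> Dict[str, Set[str]]:
    """For each Harbor, list artifacts present elsewhere in the pool but absent locally.

    Harbors that already hold every artifact are omitted from the result,
    so an empty dict means the pool is in sync.
    """
    all_artifacts: Set[str] = set().union(*inventories.values())
    missing = {}
    for name, artifacts in inventories.items():
        gap = all_artifacts - artifacts
        if gap:
            missing[name] = gap
    return missing


if __name__ == "__main__":
    pool = {
        "harbor-1": {"cnf-core/amf:v1.2", "cnf-core/smf:v1.0"},
        "harbor-2": {"cnf-core/amf:v1.2"},
    }
    print(find_missing_artifacts(pool))
```

A non-empty result identifies exactly the requests that would fail if the load-balancer routed them to the out-of-sync Harbor.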

The Harbor and load-balancer can be configured in various modes, including one-armed load-balancer and routed load-balancer. Load-balancing algorithms include simple round-robin with IP stickiness, proximity-based, connection count, and so on. In a typical telco cloud deployment, multiple Harbors are deployed within a single region, leveraging a one-armed load-balancer with health checks.
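To make the combination of stickiness and health checks concrete, the following Python sketch selects a backend by hashing the client IP over the currently healthy Harbors. Source-IP hashing is used here as a simple stand-in for IP stickiness. It is not the algorithm of any particular load-balancer, and the backend names and health flags are hypothetical inputs.

```python
import hashlib
from typing import Mapping, Optional


def pick_backend(client_ip: str, backends: Mapping[str, bool]) -> Optional[str]:
    """Pick a healthy backend for a client using a stable hash of the client IP.

    `backends` maps a backend name to its latest health-check result.
    The same client IP maps to the same backend as long as the set of
    healthy backends does not change. Returns None if none are healthy.
    """
    healthy = sorted(name for name, is_healthy in backends.items() if is_healthy)
    if not healthy:
        return None
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(healthy)
    return healthy[index]


if __name__ == "__main__":
    pool = {"harbor-1": True, "harbor-2": True, "harbor-3": False}
    print(pick_backend("10.0.0.15", pool))
```

Because unhealthy members are removed before hashing, a failed Harbor never receives traffic, at the cost of remapping some clients when pool membership changes.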

Depending on the size of the Telco Cloud deployments, multiple Harbors can be deployed per region or domain to provide resiliency within a region and to provide a shorter path for image or chart pulls. This reduces traffic on the WAN and speeds up deployments.

When integrating Harbors with a Tanzu Kubernetes cluster through Telco Cloud Automation, only a single account can be used to access the Harbor. To ensure that a tenant within Telco Cloud Automation can access only specific projects within the Harbor, use one of the following two methods:

Onboard multiple copies of the same harbor with different credentials and then assign the appropriate harbor to the TKG cluster.

Leverage the ability to supply username and password credentials through the TCA UI when onboarding the CNF. This implies that the NF Deploy has the correct URL for the chart location and valid credentials to access the specific project within harbor.

Harbor replication is required to keep the projects across multiple harbors in sync. One of the major requirements for replication is that all harbor instances are running the same version.

Harbor replication consists of setting up endpoints and replication rules. The endpoints define the replication source and destination, such as other Harbors, JFrog repositories, and so on. The replication rules specify a push or pull model for distributing projects or components of projects among different Harbors.
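The idea of a replication rule that distributes only selected components can be modeled as a filter over artifact names and tags. The sketch below is an illustrative model only: the `ReplicationRule` fields are hypothetical, and Python's `fnmatch` patterns are used as a stand-in for Harbor's own filter syntax, which may differ.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class ReplicationRule:
    mode: str               # "push" or "pull" (illustrative field)
    name_filter: str        # pattern matched against "project/repository"
    tag_filter: str = "*"   # pattern matched against the tag


def select_for_replication(
    rule: ReplicationRule, artifacts: Iterable[Tuple[str, str]]
) -> List[Tuple[str, str]]:
    """Return the (repository, tag) pairs that match both filters of the rule."""
    return [
        (repo, tag)
        for repo, tag in artifacts
        if fnmatchcase(repo, rule.name_filter) and fnmatchcase(tag, rule.tag_filter)
    ]


if __name__ == "__main__":
    rule = ReplicationRule(mode="push", name_filter="cnf-core/*", tag_filter="v1.*")
    catalog = [
        ("cnf-core/amf", "v1.2"),
        ("cnf-core/amf", "v2.0"),
        ("lab/test", "v1.0"),
    ]
    print(select_for_replication(rule, catalog))
```

Evaluating a rule against a catalog in this way gives a quick preview of which artifacts a remote Harbor would receive.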

Harbor Authentication

As part of a common authentication methodology, Harbor supports LDAP integration and RBAC policies.

Harbor can be configured to integrate with LDAP and leverage the same Authentication Directory as used by TCA, thereby achieving commonality across user and group configurations.

Harbor projects can be configured as Private or Public. Additionally, user access to projects can be configured on a per LDAP user or group basis. This allows a granular approach to securing visibility to harbor projects through traditional LDAP group membership.
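Group-based project access can be reasoned about with a small model. In the Python sketch below, the LDAP group names are hypothetical, and the rule that the highest-ranked role wins when a user belongs to several groups is an assumption made for illustration; confirm the actual precedence against the Harbor documentation.

```python
from typing import Dict, Optional, Set

# Project roles ordered from least to most privileged (illustrative ranking).
ROLE_RANK = {
    "limited_guest": 0,
    "guest": 1,
    "developer": 2,
    "maintainer": 3,
    "project_admin": 4,
}


def effective_role(
    user_groups: Set[str], project_members: Dict[str, str]
) -> Optional[str]:
    """Return the highest-ranked role granted through any of the user's groups."""
    roles = [role for group, role in project_members.items() if group in user_groups]
    return max(roles, key=ROLE_RANK.__getitem__, default=None)


def can_pull(project_is_public: bool, role: Optional[str]) -> bool:
    """Public projects are visible to everyone; private ones need a project role."""
    return project_is_public or role is not None


if __name__ == "__main__":
    members = {"cn=cnf-devs": "developer", "cn=cnf-ops": "maintainer"}
    role = effective_role({"cn=cnf-devs", "cn=cnf-ops"}, members)
    print(role, can_pull(False, role))
```

Keeping the mapping at the group level means that access changes are made through LDAP group membership rather than per-user edits in each Harbor project.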

Container Registry Design Recommendations

| Design Recommendation | Design Justification | Design Implication |
|---|---|---|
| Integrate Harbor with existing User authentication providers such as OIDC or external LDAP/Active Directory server. | User accounts are managed centrally by an external authentication provider. Central authentication provider simplifies user management and role assignment. | Requires coordination between Harbor administrator and authentication provider. |
| Use Harbor projects to isolate container images between different users and groups. Within a Harbor project, map the users to roles based on the TCA persona. | Role-based access control ensures that only authorized users can access private container images. | None |
| Use quotas to control resource usage. Set a default project quota that applies to all projects and use project-level override to increase quota limits upon approval. | Quota system is an efficient way to manage and control system utilization. | None |
| Enable Harbor image Vulnerability Scanning. Images must be scanned during the container image ingest and daily as part of a scheduled task. | Non-destructive form of testing provides immediate feedback on the health and security of a container image. Proactive way to identify, analyze, and report potential security issues. | None |
| Centralize the container image ingestion at the core data center and enable Harbor replication between Harbor instances using push mode. Define replication rules such that only the required images are pushed to remote Harbor instances. | Centralized container image ingestion allows better control of image sources and prevents the intake of images with known software defects and security flaws. Image replication push mode can be scheduled to minimize WAN contention. Image replication rule ensures that only the required container images are pushed to remote sites. Better utilization of resources at remote sites. | None |
| When using a load-balancer harbor, leverage the NSX Advanced Load Balancer to provide the L4 service offering. | Leverages VMware stack for simplified LB deployment | Additional SE deployments might be required to provide the LB services |
| Ensure the LB and harbor nodes are using CA signed certs | Eliminates complexity around container management and certificates | None |
| If needed create an intake / replication strategy that simplifies the distribution of OCI images and charts to the different harbor deployments. | Ensures a defined process for both intake and image replication | Requires planning to build an intake process from multiple network vendors and a proper replication strategy to ensure that images are available across all required harbor deployments |