Volumes are block storage devices that you attach to instances to provide persistent storage.

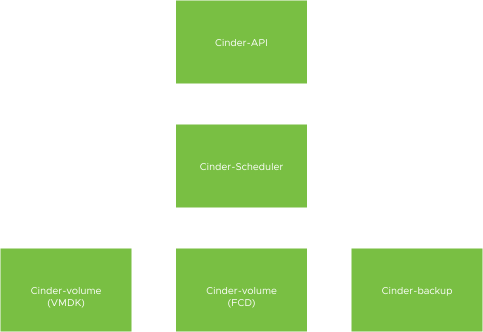

Block storage in VMware Integrated OpenStack is provided by Cinder. The Cinder services (cinder-api, cinder-volume, and cinder-scheduler) run as pods in the VIO control plane Kubernetes cluster. Their functionality is unchanged from upstream OpenStack; a vSphere-specific driver adds support for vSphere-centric storage platforms.

Shadow VM and First Class Disks (FCD)

In OpenStack, Cinder relies on first-class block storage objects. Before vSphere 6.5, however, the vCenter object model and API did not support block storage in the form that native Cinder expects. VIO provides the required functionality through shadow VMs. A shadow VM mimics a first-class block storage object and is created through the following steps:

1. When a Cinder volume is provisioned, the vSphere Cinder driver creates a new Cinder volume object in the OpenStack database.
2. When the volume is attached to a virtual instance, the Cinder driver requests the provisioning of a new virtual machine, called a shadow VM.
3. The shadow VM is provisioned with a single VMDK whose size matches the requested Cinder volume size.
4. After the shadow VM is provisioned successfully, Cinder attaches the shadow VM's VMDK file to the target instance and treats that VMDK as the Cinder volume.

Because a shadow VM with an attached VMDK is created for each Cinder volume, the environment accumulates many powered-off VMs that exist only to back Cinder volumes.
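The shadow VM steps can be modeled in a few lines to show why powered-off VMs accumulate. This is a minimal in-memory sketch, not the VIO driver: the names `ShadowVm`, `Instance`, `ShadowVmDriver`, `create_volume`, and `attach_volume` are hypothetical, vCenter is replaced by dictionaries, and reusing an existing shadow VM on a later attach is an assumption of the model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple
import uuid


@dataclass
class ShadowVm:
    """Hypothetical stand-in for a vCenter VM that exists only to own one VMDK."""
    name: str
    vmdk_size_gb: int
    powered_on: bool = False  # shadow VMs stay powered off


@dataclass
class Instance:
    """Hypothetical stand-in for a Nova instance and its attached VMDKs."""
    name: str
    vmdks: List[Tuple[str, int]] = field(default_factory=list)


class ShadowVmDriver:
    """Illustrative model of the shadow VM steps; not the VIO driver code."""

    def __init__(self) -> None:
        self.volume_db: Dict[str, int] = {}        # volume ID -> size in GB
        self.shadow_vms: Dict[str, ShadowVm] = {}  # volume ID -> shadow VM

    def create_volume(self, size_gb: int) -> str:
        # Step 1: record the volume in the database; nothing exists in vSphere yet.
        volume_id = str(uuid.uuid4())
        self.volume_db[volume_id] = size_gb
        return volume_id

    def attach_volume(self, volume_id: str, instance: Instance) -> None:
        # Steps 2-3: provision a shadow VM with a single VMDK of the volume's
        # size, unless an earlier attach already created one (assumed reuse).
        if volume_id not in self.shadow_vms:
            self.shadow_vms[volume_id] = ShadowVm(
                name=f"volume-{volume_id}",
                vmdk_size_gb=self.volume_db[volume_id],
            )
        # Step 4: attach the shadow VM's VMDK to the target instance.
        shadow = self.shadow_vms[volume_id]
        instance.vmdks.append((shadow.name, shadow.vmdk_size_gb))

    def powered_off_vm_count(self) -> int:
        # Count shadow VMs that are powered off (in this model, all of them).
        return sum(not vm.powered_on for vm in self.shadow_vms.values())


driver = ShadowVmDriver()
web = Instance("web-01")
for size in (10, 20, 40):
    driver.attach_volume(driver.create_volume(size), web)

print(driver.powered_off_vm_count())    # 3
print([size for _, size in web.vmdks])  # [10, 20, 40]
```

Three volumes attached to one instance leave three powered-off shadow VMs behind, which is the overhead that First Class Disks remove.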

The First Class Disk (vStorageObject) feature introduced in vSphere 6.7 makes virtual disks managed objects in their own right. FCDs provide life-cycle management of virtual disks independent of any VM: you can create, delete, snapshot, back up, restore, and perform other life-cycle tasks on VMDK objects without attaching them to a VM. Existing shadow-VM-based VMDK volumes are not converted to FCDs automatically. To convert a VMDK volume manually, see Manage a Volume.

The FCD driver and the shadow-VM-based VMDK driver can coexist in VIO. When FCD is activated, a new cinder-volume backend is created alongside the default VMDK driver-based cinder-volume backend. Shadow-VM-based volumes and FCD-based volumes can be attached to the same Nova instance.
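The coexistence of the two backends can be sketched as per-volume routing. In upstream Cinder, a volume type is commonly tied to a backend through the volume_backend_name extra spec; whether VIO exposes its FCD backend exactly this way is an assumption here, and the backend names `vmdk-backend` and `fcd-backend` and the type names `standard` and `fcd` are hypothetical. The model shows both kinds of volume on one instance, with a shadow VM created only for the VMDK-backed one.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical backend names. Only the shadow-VM (VMDK) backend needs a helper VM.
BACKEND_NEEDS_SHADOW_VM: Dict[str, bool] = {
    "vmdk-backend": True,
    "fcd-backend": False,
}

# Hypothetical volume types whose extra specs pin volumes to one backend.
VOLUME_TYPES: Dict[str, Dict[str, str]] = {
    "standard": {"volume_backend_name": "vmdk-backend"},
    "fcd": {"volume_backend_name": "fcd-backend"},
}


@dataclass
class Volume:
    name: str
    backend: str
    shadow_vm: Optional[str] = None  # set only for shadow-VM-based volumes


@dataclass
class Instance:
    name: str
    volumes: List[Volume] = field(default_factory=list)


def create_volume(name: str, volume_type: str) -> Volume:
    # Route the request to the backend named in the volume type's extra specs.
    backend = VOLUME_TYPES[volume_type]["volume_backend_name"]
    return Volume(name=name, backend=backend)


def attach(volume: Volume, instance: Instance) -> None:
    # An FCD is a standalone vSphere object and is attached directly;
    # a VMDK-driver volume first gets a shadow VM to own its disk.
    if BACKEND_NEEDS_SHADOW_VM[volume.backend] and volume.shadow_vm is None:
        volume.shadow_vm = f"shadow-{volume.name}"
    instance.volumes.append(volume)


app = Instance("app-01")
attach(create_volume("logs", "standard"), app)
attach(create_volume("data", "fcd"), app)

print([v.backend for v in app.volumes])    # ['vmdk-backend', 'fcd-backend']
print([v.shadow_vm for v in app.volumes])  # ['shadow-logs', None]
```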

| Feature | FCD Driver | VMDK Driver |
|---|---|---|
| Create or Delete Snapshot | Yes | Yes |
| Copy Image to Volume | Yes | Yes |
| Retype | Yes (must be unattached) | Yes (must be unattached) |
| Attach or Detach | Yes | Yes |
| Multi-Attach | No | Yes |
| Create or Restore Volume Backup | Yes | Yes |
| Adapter Type | | |
| VMDK Type | | |
| Extend | Yes (must be unattached) | Yes (must be unattached) |
| Transfer | Yes (must be unattached) | Yes (must be unattached) |
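A comparison table like this one is easy to consult programmatically, for example in a pre-flight check before requesting an operation. The following sketch transcribes the rows above into a dictionary; the operation keys (`extend`, `multi_attach`, and so on) and the `can_perform` helper are hypothetical names, and Adapter Type and VMDK Type are omitted because the table gives no value for them.

```python
from typing import Dict, Union

# Transcribed from the driver comparison table: True = supported,
# "unattached" = supported only while the volume is detached,
# False = not supported.
Support = Union[bool, str]
CAPABILITIES: Dict[str, Dict[str, Support]] = {
    "snapshot":       {"fcd": True,         "vmdk": True},
    "copy_image":     {"fcd": True,         "vmdk": True},
    "retype":         {"fcd": "unattached", "vmdk": "unattached"},
    "attach_detach":  {"fcd": True,         "vmdk": True},
    "multi_attach":   {"fcd": False,        "vmdk": True},
    "backup_restore": {"fcd": True,         "vmdk": True},
    "extend":         {"fcd": "unattached", "vmdk": "unattached"},
    "transfer":       {"fcd": "unattached", "vmdk": "unattached"},
}


def can_perform(operation: str, driver: str, attached: bool) -> bool:
    """Return True if the driver supports the operation in the given attach state."""
    support = CAPABILITIES[operation][driver]
    if support == "unattached":
        return not attached
    return bool(support)


print(can_perform("extend", "fcd", attached=True))         # False
print(can_perform("multi_attach", "vmdk", attached=True))  # True
```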

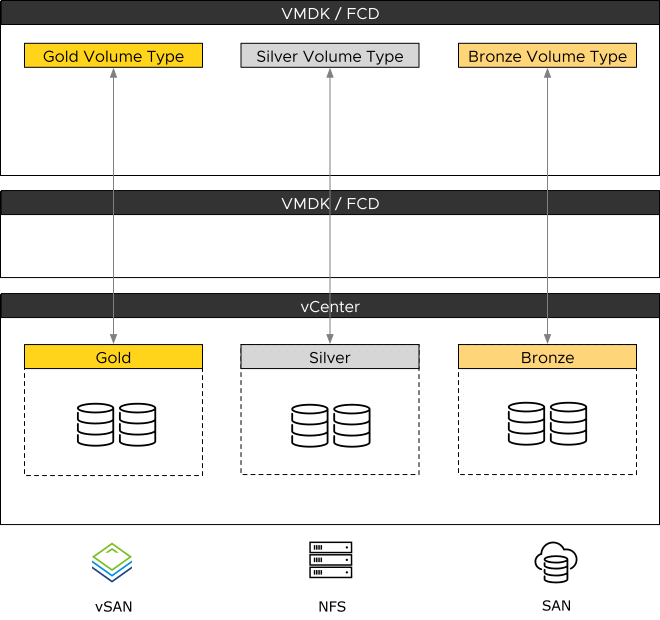

Storage Policy Based Management (SPBM) and Cinder

Storage Policy Based Management (SPBM) is a vCenter Server feature that controls which type of storage is provided to a VM and how the VM is placed on that storage. vSphere provides default storage policies, and you can also define your own policies and assign them to VMs. In a custom storage policy, you can specify storage requirements and data services, such as caching and replication, for virtual disks.

When SPBM storage policies are integrated with VIO, tenant users can create, clone, or migrate Cinder volumes by selecting the corresponding volume type exposed by the cloud administrator.

SPBM places the VM on a matching datastore and ensures that Cinder virtual disk objects are provisioned and allocated on storage that delivers the required level of service.
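Policy-based placement boils down to filtering datastores by the capabilities a policy requires. In vSphere this matching is performed by vCenter; the following is only a simplified Python model in which a policy is a dictionary of required capability values. The capability keys (`tier`, `replication`), datastore names, and the most-free-space tie-breaker are all assumptions for illustration, not documented SPBM or Cinder behavior.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Datastore:
    name: str
    free_gb: int
    capabilities: Dict[str, object]  # hypothetical advertised capabilities


def compatible(ds: Datastore, policy: Dict[str, object]) -> bool:
    """A datastore matches when it satisfies every rule in the policy."""
    return all(ds.capabilities.get(key) == value for key, value in policy.items())


def place_volume(size_gb: int, policy: Dict[str, object],
                 datastores: List[Datastore]) -> Optional[Datastore]:
    """Pick a compatible datastore with room for the volume, or None.

    Preferring the datastore with the most free space is an assumed
    tie-breaker for this sketch.
    """
    candidates = [ds for ds in datastores
                  if compatible(ds, policy) and ds.free_gb >= size_gb]
    return max(candidates, key=lambda ds: ds.free_gb, default=None)


datastores = [
    Datastore("vsan-gold", 500, {"tier": "gold", "replication": True}),
    Datastore("nfs-silver", 2000, {"tier": "silver", "replication": False}),
    Datastore("vmfs-gold", 800, {"tier": "gold", "replication": True}),
]
gold_policy = {"tier": "gold", "replication": True}  # hypothetical policy rules

print(place_volume(100, gold_policy, datastores).name)  # vmfs-gold
print(place_volume(900, gold_policy, datastores))       # None
```

The large silver datastore is never chosen for the gold policy, even though it has the most free space, because compatibility is checked before capacity.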

vSphere Cinder Volume

The vSphere Cinder driver supports the following datastore types:

- NFS
- VMFS
- vSAN
- vVols

The VIO Cinder service uses cinder-scheduler to select the datastore for each new volume request. The VMDK driver has the following characteristics:

- Storage vMotion and Storage DRS are supported when running VMware Integrated OpenStack. However, cinder-scheduler is not integrated with these vSphere features: each scheduler makes placement decisions without information about, or awareness of, the decisions of the other.
- vSphere SPBM policies can be applied through volume type metadata to provide storage tiering.
- Snapshots of Cinder volumes are placed on the same datastore as the primary volume.
- Storage over-subscription through the max_over_subscription_ratio option, and thin or thick provisioning support through the thin_provisioning_support and thick_provisioning_support options, do not apply to the Cinder VMDK driver.

By default, the VMDK driver creates Cinder volumes as thin-provisioned disks. Cloud administrators can change this default by using extra specs. To expose more than one disk type, administrators can create a volume type for each, with the vmware:vmdk_type key set to thin, thick, or eagerZeroedThick. Volume types can be created from the UI (Horizon under Admin → Volumes), the CLI, or the API.

| Volume Type | Metadata |
|---|---|
| thick_volume | vmware:vmdk_type=thick |
| thin_volume | vmware:vmdk_type=thin |
| eagerZeroedThick | vmware:vmdk_type=eagerZeroedThick |
| QoS | Based on SPBM policy |
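The effect of the vmware:vmdk_type extra spec can be summarized as a lookup with a thin default. The following Python sketch resolves the provisioning type a volume type requests, using the three values and the thin default stated in this section. The function name `vmdk_type_for` and the `untyped` volume type are hypothetical, and rejecting unknown values with ValueError is this sketch's own choice, not a description of the driver's error handling.

```python
from typing import Dict

# vmware:vmdk_type values named in this section.
VALID_VMDK_TYPES = {"thin", "thick", "eagerZeroedThick"}
DEFAULT_VMDK_TYPE = "thin"  # the VMDK driver's default when no extra spec is set


def vmdk_type_for(extra_specs: Dict[str, str]) -> str:
    """Resolve the disk provisioning type requested by a volume type's extra specs."""
    vmdk_type = extra_specs.get("vmware:vmdk_type", DEFAULT_VMDK_TYPE)
    if vmdk_type not in VALID_VMDK_TYPES:
        raise ValueError(f"unsupported vmware:vmdk_type: {vmdk_type!r}")
    return vmdk_type


# Volume types from the table above, expressed as extra-spec dictionaries.
volume_types = {
    "thick_volume": {"vmware:vmdk_type": "thick"},
    "thin_volume": {"vmware:vmdk_type": "thin"},
    "eagerZeroedThick": {"vmware:vmdk_type": "eagerZeroedThick"},
    "untyped": {},  # hypothetical type with no extra specs: falls back to thin
}

for name, specs in volume_types.items():
    print(name, "->", vmdk_type_for(specs))
```

From the CLI, the first row of the table corresponds to a command such as `openstack volume type create --property vmware:vmdk_type=thick thick_volume`.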