This section highlights the considerations for deploying RAN at large scale.

Of the three common models for deploying the RAN, the two most widely used are the RU-DU co-located model (D-RAN) and the centralized processing model (C-RAN).

Designing a RAN at scale involves many elements, each with its own scale limits. Logical building blocks can be defined around these limits and reused. They provide well-defined points at which to scale out (add more blocks) or scale up (enlarge a block).

Scaling considerations for the Telco Cloud Automation (TCA) platform include the following. For the configuration maximums of VMware products, see VMware Configuration Maximums.

- Maximum number of TCA control-plane (TCA-CP) nodes per TCA Manager
- Maximum number of VI registrations per TCA Manager
- Maximum number of Tanzu Kubernetes Management clusters per TCA Manager and per TCA-CP node
- Maximum number of workload clusters per TCA Manager
- Maximum number of Tanzu Kubernetes Workload clusters per TCA-CP node
- Maximum number of worker nodes in a single node pool
- Maximum number of node pools in a single workload cluster
- Maximum number of worker nodes in a single workload cluster
- Maximum number of Network Functions managed by a single TCA-CP node
- Maximum number of Network Functions managed by a single TCA Manager

Other scaling considerations are based on the Telco Cloud and the vendor RAN architecture:

- Maximum number of RAN hosts per vCenter
- Maximum number of DU workloads to a single or redundant CU
- Maximum number of DU workloads to the vendor management elements

Each product has its own configuration maximums. A design must not exceed 70-80% of any given maximum, so that headroom remains below each limit.
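The 70-80% guideline can be applied mechanically during planning. The following Python sketch computes a planning limit as a percentage of a configuration maximum and flags any planned count above it. Only the 2,500 hosts-per-vCenter figure comes from this section. The dictionary keys and function names are hypothetical, and real maximums should be taken from VMware Configuration Maximums.

```python
# Sketch: flag planned counts that exceed a percentage of a configuration maximum.
# Keys and names are hypothetical; take real maximums from VMware Configuration Maximums.

HEADROOM_PCT = 80  # upper end of the 70-80% guideline; use 70 for a more conservative plan


def planning_limit(maximum: int, headroom_pct: int = HEADROOM_PCT) -> int:
    """Largest count that stays within headroom_pct percent of a maximum (integer math)."""
    return maximum * headroom_pct // 100


def check_plan(maximums: dict[str, int], planned: dict[str, int],
               headroom_pct: int = HEADROOM_PCT) -> list[str]:
    """Return one message for every planned count above its planning limit."""
    violations = []
    for item, count in planned.items():
        limit = planning_limit(maximums[item], headroom_pct)
        if count > limit:
            violations.append(
                f"{item}: planned {count} exceeds {limit} "
                f"({headroom_pct}% of {maximums[item]})"
            )
    return violations


maximums = {"hosts_per_vcenter": 2_500}  # figure cited in this section
print(check_plan(maximums, {"hosts_per_vcenter": 2_200}))
```

With the default 80% threshold, 2,200 hosts in one vCenter is flagged because the planning limit is 2,000. At 70%, the limit drops to 1,750. Integer arithmetic is used so that the limit is never off by one due to floating-point rounding.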

In addition to configuration maximums, the most prominent consideration is the blast radius. Although a single vCenter can manage up to 2,500 ESXi servers, the blast radius of a vCenter outage at that scale is very wide. When the vCenter is down or under maintenance, the connectivity and management of all 2,500 servers are unavailable.
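A quick calculation shows how spreading hosts across more vCenter Servers narrows the blast radius. The Python sketch below assumes a hypothetical fleet of 12,000 hosts, matching the TCA Manager example in this section. It reports how many vCenter Servers are needed and what share of the fleet a single outage affects when the failed vCenter is fully populated. The function name is illustrative only.

```python
def blast_radius(total_hosts: int, hosts_per_vcenter: int) -> tuple[int, int, float]:
    """Return (vCenter Servers needed, worst-case hosts affected, share of the fleet)."""
    vcenters = -(-total_hosts // hosts_per_vcenter)  # ceiling division
    # Worst case: the failed vCenter is fully populated (or holds the whole fleet).
    hosts_affected = min(hosts_per_vcenter, total_hosts)
    return vcenters, hosts_affected, hosts_affected / total_hosts


for per_vc in (2_500, 1_500):
    n, hosts, share = blast_radius(12_000, per_vc)
    print(f"{per_vc} hosts/vCenter: {n} vCenter Servers, "
          f"one outage affects {hosts} hosts ({share:.1%})")
```

In this example, limiting each vCenter to 1,500 hosts, as in the sample block structure in this section, caps a single outage at 12.5% of the fleet instead of about 20.8%. The trade-off is managing eight vCenter Servers instead of five.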

The scale considerations are different for each deployment. Detailed discussions with the CSP and the RAN vendors are required to determine the appropriate sizing and to identify scaling constraints.

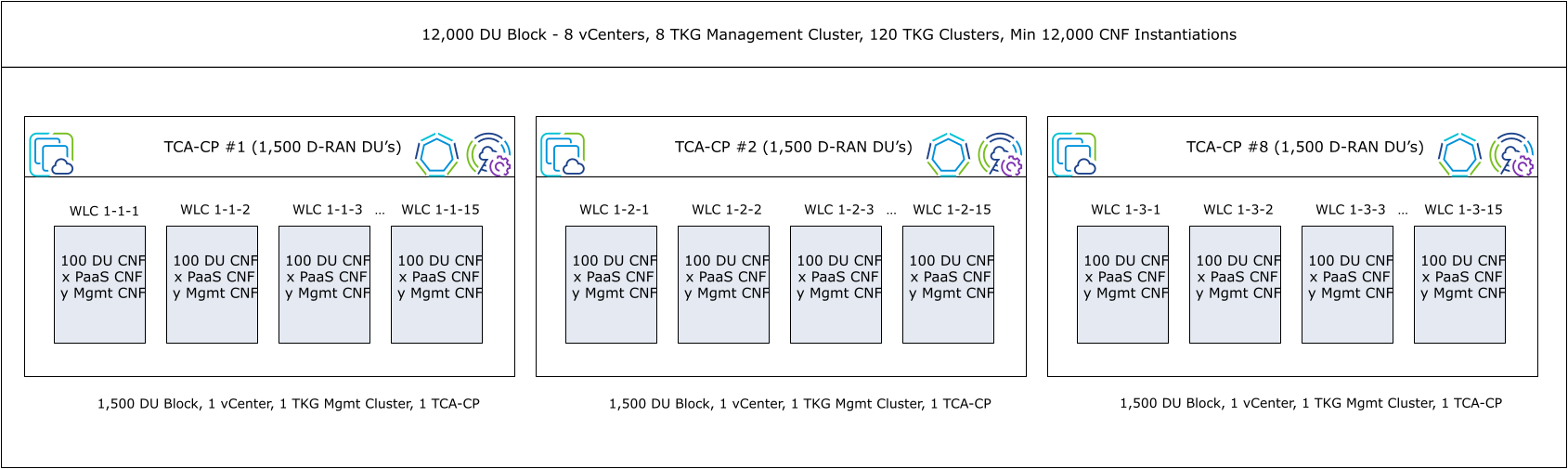

The RAN can be divided into logical blocks. A sample structure of these blocks, with different maximums and constraints, is as follows:

- Each Tanzu Kubernetes Workload cluster can host up to 100 DUs in D-RAN deployment mode, with one worker node (one DU) per ESXi host.
- Each Tanzu Kubernetes Management cluster can have up to 15 workload clusters.
- There is a single Tanzu Kubernetes Management cluster per TCA-CP or vCenter.

This structure creates a block of up to 1,500 DU nodes (100 DUs × 15 workload clusters) per TCA-CP node, with 1,500 ESXi hosts per vCenter. The block can then be scaled out by adding more 1,500-DU blocks until a higher-level limit is reached. For example, eight blocks per TCA Manager accommodate up to 12,000 DU nodes (8 × 1,500) in a single TCA Manager. To support larger RAN deployments, the sizes of the individual scale elements can be adjusted within the configuration maximums.
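The block arithmetic can be captured in a small model so that alternative limits are easy to try. In this Python sketch, the defaults mirror the sample structure above: 100 DUs per workload cluster, 15 workload clusters per management cluster, and one management cluster per TCA-CP. The class and function names are hypothetical, and the defaults are sample values rather than product maximums.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RanBlock:
    """One scale block: a TCA-CP node, its vCenter, and its management cluster."""
    dus_per_workload_cluster: int = 100           # D-RAN: one DU worker node per ESXi host
    workload_clusters_per_mgmt_cluster: int = 15
    mgmt_clusters_per_block: int = 1              # one management cluster per TCA-CP/vCenter

    @property
    def dus(self) -> int:
        """DU nodes (and therefore ESXi hosts) in one block."""
        return (self.dus_per_workload_cluster
                * self.workload_clusters_per_mgmt_cluster
                * self.mgmt_clusters_per_block)


def dus_per_tca_manager(block: RanBlock, blocks: int) -> int:
    """Total DU nodes when a TCA Manager manages `blocks` identical blocks."""
    return block.dus * blocks


def blocks_needed(block: RanBlock, target_dus: int) -> int:
    """Smallest number of blocks that can hold target_dus DU nodes."""
    return -(-target_dus // block.dus)  # ceiling division


block = RanBlock()
print(block.dus)                      # 1500
print(dus_per_tca_manager(block, 8))  # 12000
print(blocks_needed(block, 5_000))    # 4
```

Changing a single default, for example `RanBlock(workload_clusters_per_mgmt_cluster=10)`, shows how a smaller block trades scale per TCA-CP for a narrower blast radius.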

Instantiating a RAN DU cluster requires more than the DU Network Function alone. Depending on the overall RAN DU requirements and the vendor requirements, additional PaaS and management components may be needed. These components must be factored into CNF scaling and dimensioning.

When planning the dimensioning and scale requirements for RAN deployments, note that each Tanzu Kubernetes workload cluster requires resources for its control-plane nodes. These control-plane nodes can reside in the Domain or Site management cluster, or in a vSphere cluster managed by the same vCenter. The elements of a single Tanzu Kubernetes workload cluster, such as its control-plane and worker nodes, must not be deployed across multiple vCenter Servers.
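Both rules in this paragraph lend themselves to an automated pre-deployment check. The Python sketch below uses a hypothetical plan structure. It flags any workload cluster whose control-plane and worker nodes reference more than one vCenter Server, and it totals the control-plane VMs that need capacity. The default of three control-plane nodes per cluster is an assumption (a common highly available layout), not a value from this document.

```python
from dataclasses import dataclass, field


@dataclass
class WorkloadClusterPlan:
    """Hypothetical placement plan for one Tanzu Kubernetes workload cluster."""
    name: str
    control_plane_vcenter: str
    worker_vcenters: list[str] = field(default_factory=list)
    control_plane_nodes: int = 3  # assumption: three-node HA control plane


def spans_multiple_vcenters(plan: WorkloadClusterPlan) -> bool:
    """True if any node of the cluster is placed on a different vCenter Server."""
    return len({plan.control_plane_vcenter, *plan.worker_vcenters}) > 1


def control_plane_vms(plans: list[WorkloadClusterPlan]) -> int:
    """Control-plane VMs that need capacity in the management or vSphere cluster."""
    return sum(p.control_plane_nodes for p in plans)


# 15 valid clusters of 100 workers each, plus one that wrongly spans two vCenters.
plans = [WorkloadClusterPlan(f"du-wc-{i:02d}", "vc-01", ["vc-01"] * 100) for i in range(15)]
plans.append(WorkloadClusterPlan("du-wc-bad", "vc-01", ["vc-01", "vc-02"]))
print([p.name for p in plans if spans_multiple_vcenters(p)])  # ['du-wc-bad']
print(control_plane_vms(plans))                              # 48
```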

When deploying many RAN nodes concurrently, be aware of the per-host and per-datastore clone limits: a maximum of eight clone operations can run concurrently per host or per datastore. For a large concurrent deployment, deploy the OVA template to each host and automate the deployment so that no more than eight clone operations run concurrently.
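The clone limit can be enforced in deployment automation with one semaphore per host and one per datastore. The following Python asyncio sketch is illustrative only. The host, datastore, and VM names are hypothetical, and `asyncio.sleep` stands in for the actual clone request to vCenter, which this document does not specify. The sketch also records the peak number of concurrent clones per host and datastore so that the limit can be verified.

```python
import asyncio
from collections import defaultdict

MAX_CONCURRENT_CLONES = 8  # per-host and per-datastore limit cited in this section


class CloneThrottle:
    """Caps concurrent clone operations per ESXi host and per datastore."""

    def __init__(self, limit: int = MAX_CONCURRENT_CLONES) -> None:
        # Semaphores are created lazily, on first use inside the running event loop.
        self._hosts = defaultdict(lambda: asyncio.Semaphore(limit))
        self._datastores = defaultdict(lambda: asyncio.Semaphore(limit))
        self._active = defaultdict(int)
        self.peak = defaultdict(int)

    async def clone(self, vm: str, host: str, datastore: str) -> str:
        keys = (f"host:{host}", f"ds:{datastore}")
        # Every task takes the host slot before the datastore slot, so the
        # fixed acquisition order cannot produce a circular wait.
        async with self._hosts[host], self._datastores[datastore]:
            for key in keys:
                self._active[key] += 1
                self.peak[key] = max(self.peak[key], self._active[key])
            try:
                await asyncio.sleep(0.01)  # placeholder for the real clone request
            finally:
                for key in keys:
                    self._active[key] -= 1
        return vm


async def deploy(plan: list[tuple[str, str, str]]) -> CloneThrottle:
    """Start every clone at once; the throttle queues anything above the limit."""
    throttle = CloneThrottle()
    await asyncio.gather(*(throttle.clone(vm, host, ds) for vm, host, ds in plan))
    return throttle


if __name__ == "__main__":
    # 40 hypothetical DU VMs across two hosts, each cloning to its local datastore.
    plan = [(f"du-{i:02d}", f"esxi-{i % 2}", f"ds-{i % 2}") for i in range(40)]
    throttle = asyncio.run(deploy(plan))
    print(dict(throttle.peak))  # every value stays at or below 8
```

Because each host clones from its own local copy of the template in this example, the per-host and per-datastore limits are reached together. With a shared datastore, the datastore semaphore becomes the bottleneck instead.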