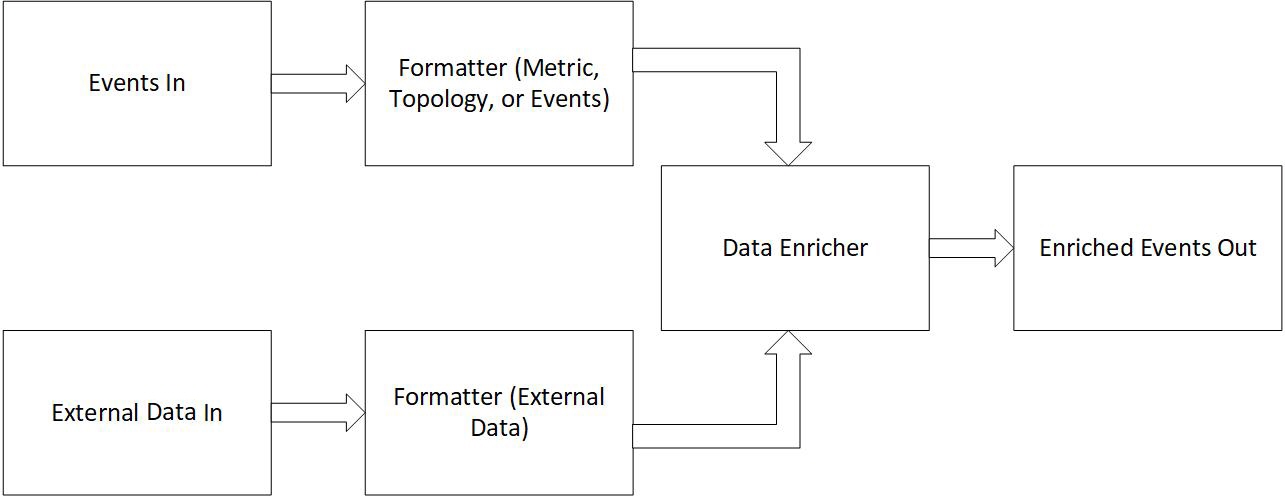

Data enrichment streams pull in data from external sources and use it to enrich other metrics or events. All metrics, events, and external data objects are assumed to arrive on a Kafka topic.

The diagram above shows how events and external data interact.

The data enricher works with any type of object, but by default it assumes that the objects are:

- External Data: Objects with a key and a data field. The key is a unique identifier string, while the data field is a key-value map with all the enrichment data.

- Events: The enrichment stream can handle Metric, Topology, or Event types.
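The default object shapes described above can be sketched as follows. This is a minimal illustration, not the enricher's actual implementation: the `Event` class, its `join_key` and `attributes` fields, and the `enrich` function are hypothetical names. Only the external data shape (a string key plus a key-value data map) comes from the description above.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ExternalData:
    """External data object: a unique string key plus a map of enrichment data."""
    key: str
    data: Dict[str, str]


@dataclass
class Event:
    """Hypothetical event shape; `join_key` and `attributes` are illustrative names."""
    join_key: str
    attributes: Dict[str, str] = field(default_factory=dict)


def enrich(event: Event, store: Dict[str, ExternalData]) -> Event:
    """Return a copy of the event with any matching external data merged in."""
    external = store.get(event.join_key)
    if external is None:
        # No external data for this key: pass the event through unchanged.
        return Event(event.join_key, dict(event.attributes))
    # External data fields are added to (and may override) the event attributes.
    return Event(event.join_key, {**event.attributes, **external.data})


store = {"device-1": ExternalData("device-1", {"site": "Boston", "owner": "netops"})}
enriched = enrich(Event("device-1", {"metric": "cpu"}), store)
print(enriched.attributes)
```

In this sketch an event with no matching key is passed through unenriched. Whether the real stream drops, delays, or forwards such events is not stated here.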

The enricher has two modes of operation: Replicated and Partitioned. In replicated mode, the external data objects are replicated across the cluster so that a copy is present on each node. In partitioned mode, the external data objects are spread out across the cluster and must be partitioned in the same way as the events, so that each event is enriched on the node that holds its external data. Partitioned mode scales better when the amount of external data is large, because each node stores only part of it.
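The difference between the two modes can be illustrated with a small simulation. This is a sketch under stated assumptions: the cluster size, the `node_for` helper, and the use of CRC32 as the hash are all hypothetical stand-ins, not the partitioner the enricher or Kafka actually uses.

```python
import zlib
from typing import Dict, List

NUM_NODES = 3  # hypothetical cluster size


def node_for(key: str, num_nodes: int = NUM_NODES) -> int:
    """Map a key to a node with a stable hash (CRC32 is an illustrative choice)."""
    return zlib.crc32(key.encode("utf-8")) % num_nodes


def replicate(external: Dict[str, dict], num_nodes: int = NUM_NODES) -> List[Dict[str, dict]]:
    """Replicated mode: every node holds a full copy of the external data."""
    return [dict(external) for _ in range(num_nodes)]


def partition(external: Dict[str, dict], num_nodes: int = NUM_NODES) -> List[Dict[str, dict]]:
    """Partitioned mode: each external object lives only on the node its key maps to."""
    nodes: List[Dict[str, dict]] = [{} for _ in range(num_nodes)]
    for key, data in external.items():
        nodes[node_for(key, num_nodes)][key] = data
    return nodes


external = {f"device-{i}": {"site": f"site-{i}"} for i in range(6)}
replicated = replicate(external)
partitioned = partition(external)

# An event routed with the same function lands on the node that owns its data.
event_key = "device-4"
print(event_key in partitioned[node_for(event_key)])  # True

# Total objects stored across the cluster: 18 when replicated, 6 when partitioned.
print(sum(len(n) for n in replicated), sum(len(n) for n in partitioned))
```

The second printed line shows why partitioned mode suits large external data sets: total storage stays constant as nodes are added, whereas replicated storage grows with the node count.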

Changing the Existing Stream

To change an existing stream, follow this sequence:

- Undeploy the default stream.

- Update the key as properties [dataSource].

- Save and deploy the enrichment stream.

- Add the enrichment input data to the dataInput topic of edge-kafka.

- Create a collector (for example, Viptela) corresponding to the dataSource given in the dataInput topic.

- Check the reports.
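As a rough illustration of the step that adds enrichment input data, the sketch below prepares one external data object as a key and value pair ready to be published. The JSON encoding and the `build_record` helper are assumptions made for this example. The serialization the stream actually expects is not specified here, so check it against your deployment before use.

```python
import json
from typing import Dict, Tuple


def build_record(key: str, data: Dict[str, str]) -> Tuple[bytes, bytes]:
    """Serialize one external data object as a (key, value) pair of bytes.

    The JSON layout is an assumption for illustration, not a documented format.
    """
    if not key:
        raise ValueError("external data needs a non-empty key")
    value = json.dumps({"key": key, "data": data}, sort_keys=True)
    return key.encode("utf-8"), value.encode("utf-8")


record_key, record_value = build_record("device-1", {"site": "Boston"})
print(record_key, record_value)
# A Kafka client of your choice would then publish this pair to the
# dataInput topic on edge-kafka.
```

Using the object's key as the Kafka record key keeps all updates for one object in the same partition, which matters in partitioned mode.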