The Telco Edge is a collection of distributed virtual infrastructure deployments that can be used to run Telco-specific workloads, such as VNFs, and other user applications. This Edge Reference Architecture provides the flexibility to accommodate differences in the nature of the workloads and applications, the position in the network, the size of the deployment, and the software-defined infrastructure model.

The mobile network is also transforming into a mixture of highly distributed network functions coupled with some centralized control plane and management plane functions. 5G services typically comprise a mixture of low-latency, high-throughput, and high-user-density applications. This requires deployment of both applications and network functions at the edge. The implication for the Telco network is that it evolves from a purely centralized model of service delivery to a highly distributed one. There is also an emerging shift toward employing third-party IaaS, PaaS, and SaaS offerings from public cloud providers. Together, these changes require a more sophisticated service delivery model.

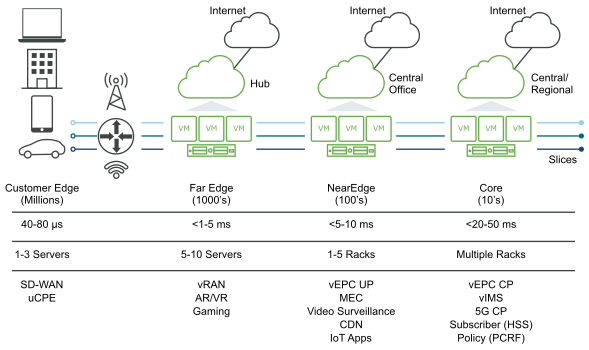

The preceding diagram shows the reference environment for a Telco network extended with edges, using a tiered approach with clear functional abstractions and interactions.

Customer Edge

On the left of the reference environment in the preceding figure is the Customer Edge, which comprises millions of end-points (especially with the requirements for IoT). The Customer Edge is on customer premises, or it may be a wireless device. The capacity and capability of the Customer Edge depend on the use cases. SD-WAN is one use case for such Customer Edges; others include services such as virtual firewalls. The Customer Edge is not discussed in this Reference Architecture.

Far Edge

Customer Edges connect at the “last mile” to cell towers or wireline aggregation points that are called Far Edges. The number of servers and the types of Telco network functions, such as virtualized RAN (vRAN), and applications, such as AR/VR, are constrained by the deployment location and its power, cooling, and network limits. An Internet “breakout” allows applications deployed on the Far Edge to access the Internet directly, without backhauling the traffic to the Near Edge or to a regional or core location.

Near Edge

The next level of the hierarchy is the Near Edge, which aggregates traffic from multiple Far Edges and generally has fewer constraints related to capacity. It has a larger number of servers with higher capacity to run applications. A repurposed central office in the wireline scenario is an example of a Near Edge deployment location. A Near Edge can contain multiple racks in a typical deployment. Latencies from the user equipment to the Near Edge are in the range of 5-10 milliseconds, but can vary depending on the deployment.

Content Delivery Network (CDN) and MEC applications are usually instantiated at the Near Edge. The Telco VNFs that are instantiated at the Near Edge include vEPC user plane functions (UPFs) that require higher performance and lower latency. An Internet breakout is also present in this deployment.

The aggregation functionality can involve a separate management plane installation to manage Far Edges. In some cases, the Near Edge is only used to run applications, while the management functionality for both Near and Far Edges is instantiated in a Core data center.

Core Data Center

The final level of the hierarchy is the Core, which acts as a centralized location for aggregating all control and management plane components for a given region. This deployment is similar to the current centralized model used in Telco networks, where the Core runs VNFs and other applications. In the 5G world, the 5G control plane (CP) functions run in the Core data center and the user plane (UP) functions run in the edges.

For more information on implementing the Core data center and its management components, see the VMware vCloud NFV OpenStack Edition Reference Architecture document.
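The tiered hierarchy described above (Far Edges aggregated by a Near Edge, which in turn connects to a Core data center) can be pictured as a simple tree. The following is a minimal sketch for illustration only; the `Site` class, its fields, and the site names are hypothetical and are not part of any VMware product or API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Site:
    """One deployment location in the tiered reference environment (hypothetical model)."""
    name: str
    tier: str  # "far-edge", "near-edge", or "core"
    # parent is the next aggregation level; None for the Core data center
    parent: Optional["Site"] = field(default=None, repr=False, compare=False)
    children: List["Site"] = field(default_factory=list, repr=False, compare=False)

    def attach(self, child: "Site") -> "Site":
        """Register a lower-tier site that this site aggregates."""
        child.parent = self
        self.children.append(child)
        return child

    def path_to_core(self) -> List[str]:
        """Return the site names from this site up to the Core data center."""
        path = []
        site: Optional["Site"] = self
        while site is not None:
            path.append(site.name)
            site = site.parent
        return path


# Illustrative topology: one Core, one Near Edge, two Far Edges.
core = Site("core-dc", "core")
near = core.attach(Site("near-edge-1", "near-edge"))
far_a = near.attach(Site("far-edge-a", "far-edge"))
far_b = near.attach(Site("far-edge-b", "far-edge"))

print(far_a.path_to_core())  # ['far-edge-a', 'near-edge-1', 'core-dc']
```

The path from a Far Edge to the Core mirrors the aggregation order in the text: traffic that does not use a local Internet breakout is backhauled along this path.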

Reference Environment Requirements for Edge

The edge infrastructure reference environment places strict requirements for service placement and management to achieve optimal performance.

- Federation options

The reference environment topology offers a diverse set of federation options for end-points, private and public clouds, each with distinct ownership and management domains.

- Disaggregated functions

Services are highly disaggregated so that control, data, and management planes can be deployed across the distributed topology. Edge clouds offer the performance advantage of low latency for data plane intensive workloads, while control and management plane components can be centralized with a regional or global scope.

- Functional isolation

With the ability to isolate tenants and provide them with their own resource slices, the reference environment allows for network and service isolation. However, resource management decisions must still be made for shared network functions such as DNS, policy, and authentication. Another facet of 5G technology is the ability to carve out an independent slice of the end-to-end mobile network for a specific use case or enterprise. Each slice has its own end-to-end logical network that includes guarantees, dedicated mobile core elements such as 5G CPF/UPF, and enterprise-specific Telco networking. While the virtual assets are created in the same Telco Cloud infrastructure, it is the responsibility of the Virtualization Infrastructure to provide complete resource isolation with a guarantee for each network slice. This includes compute, network, and storage resource guarantees.

- Service placement

The highly distributed topology allows for flexibility in workload placement. Making decisions based on proximity, locality, latency, analytical intelligence, and other EPA criteria is critical to enabling an intent-based placement model.

- Workload life cycle management

Each cloud is elastic in terms of workload mobility and in how applications are deployed, executed, and scaled. An integrated operations management solution can enable efficient life cycle management to ensure service delivery and QoS.

- Carrier grade characteristics

CSPs deliver services that are often regulated by local governments. Hence, carrier grade aspects of these services, such as high availability and deterministic performance, are also important.

- NFVI life cycle (patching and upgrades)

The platform must be patched and upgraded by using optimized change management approaches for zero to minimal downtime.
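To make the service placement and resource guarantee requirements above more concrete, here is a minimal sketch of an intent-based placement decision. Everything in it is an assumption for illustration: the `PlacementSite` and `Intent` classes, the `place` function, the latency and vCPU figures (only the 8 ms Near Edge value is chosen to fall inside the 5-10 ms range mentioned earlier), and the policy of preferring the most centralized site that still meets the latency budget so that constrained edge capacity is conserved. A real placement engine would weigh many more criteria.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PlacementSite:
    """A candidate location for a workload (hypothetical model)."""
    name: str
    latency_ms: float  # assumed latency from user equipment to this site
    free_vcpus: int    # capacity not yet guaranteed to any workload or slice


@dataclass
class Intent:
    """What the workload needs, expressed as constraints rather than a location."""
    workload: str
    max_latency_ms: float
    vcpus: int


def place(intent: Intent, sites: List[PlacementSite]) -> Optional[PlacementSite]:
    """Choose a site that meets the latency budget and has enough free capacity.

    Assumed policy: among feasible sites, pick the one with the highest
    latency (the most centralized), leaving scarce edge capacity for
    workloads that truly need it. Returns None if no site is feasible.
    """
    feasible = [
        s for s in sites
        if s.latency_ms <= intent.max_latency_ms and s.free_vcpus >= intent.vcpus
    ]
    if not feasible:
        return None
    chosen = max(feasible, key=lambda s: s.latency_ms)
    # Reserve the capacity so that later placements cannot consume the guarantee.
    chosen.free_vcpus -= intent.vcpus
    return chosen


sites = [
    PlacementSite("far-edge", latency_ms=2.0, free_vcpus=16),
    PlacementSite("near-edge", latency_ms=8.0, free_vcpus=64),
    PlacementSite("core", latency_ms=30.0, free_vcpus=256),
]

for intent in [
    Intent("vran", max_latency_ms=3.0, vcpus=8),
    Intent("upf", max_latency_ms=10.0, vcpus=16),
    Intent("5g-control-plane", max_latency_ms=50.0, vcpus=32),
]:
    site = place(intent, sites)
    print(intent.workload, "->", site.name if site else "no feasible site")
```

With these illustrative numbers, the latency-sensitive workload lands on the Far Edge, the user plane function on the Near Edge, and the control plane in the Core, which matches the disaggregation described in the requirements.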