The virtual infrastructure design comprises the design of the software components that form the virtual infrastructure layer. This layer supports running Telco workloads and workloads that maintain the business continuity of services. The virtual infrastructure components include the virtualization platform hypervisor, virtualization management, storage virtualization, network virtualization, and backup and disaster recovery components.

This section outlines the building blocks for the virtual infrastructure, their components, and the networking to tie all the components together.

Compute Design

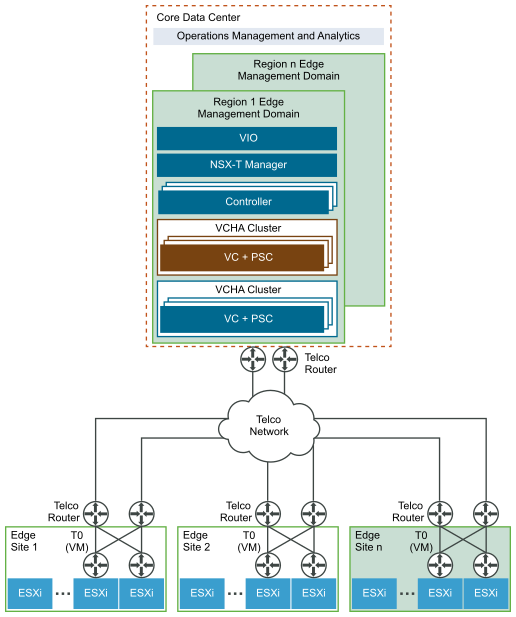

Limit the distance between the core site and the edge sites so that the round-trip time (RTT) stays below 150 ms. In addition, each site is treated as a remote cluster with its own storage; HCI storage with vSAN is recommended. An NSX Edge (pair) must be deployed at each remote site, even though the NSX Manager and Controller reside at the core site, to provide connectivity to the core site and Internet breakout.

The network links between the core site and the edge sites should also be redundant and path-diverse, with no Shared Risk Link Groups (SRLGs) in common between the paths at the transport layer. In addition, a minimum bandwidth of 10 Gbps is required between each edge site and the core site.
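The site connectivity requirements above (RTT below 150 ms, at least 10 Gbps, redundant paths with no shared SRLG) can be expressed as a simple planning check. The following is a minimal sketch, not part of the platform: the `EdgeSiteLink` type, its field names, and the SRLG identifiers are hypothetical and only illustrate how the constraints combine.

```python
from dataclasses import dataclass
from itertools import combinations

MAX_RTT_MS = 150.0          # RTT must stay below this value
MIN_BANDWIDTH_GBPS = 10.0   # minimum bandwidth between an edge site and the core


@dataclass(frozen=True)
class EdgeSiteLink:
    """Hypothetical description of the connectivity from one edge site to the core."""
    site: str
    rtt_ms: float
    bandwidth_gbps: float
    # One frozenset of SRLG identifiers per transport path to the core site.
    path_srlgs: tuple


def check_edge_site_link(link):
    """Return the list of violated requirements (empty if the link complies)."""
    problems = []
    if link.rtt_ms >= MAX_RTT_MS:
        problems.append(f"RTT {link.rtt_ms} ms is not below {MAX_RTT_MS} ms")
    if link.bandwidth_gbps < MIN_BANDWIDTH_GBPS:
        problems.append(
            f"bandwidth {link.bandwidth_gbps} Gbps is below {MIN_BANDWIDTH_GBPS} Gbps"
        )
    if len(link.path_srlgs) < 2:
        problems.append("fewer than two paths: link is not redundant")
    # Path diversity: no pair of paths may have an SRLG in common.
    for (i, a), (j, b) in combinations(enumerate(link.path_srlgs), 2):
        shared = a & b
        if shared:
            problems.append(f"paths {i} and {j} share SRLGs: {sorted(shared)}")
    return problems
```

An empty result means the link meets all three requirements; each string in a non-empty result names one violated requirement.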

Storage Design

This section outlines the building blocks for the virtual infrastructure shared storage design that is based on vSAN. vCloud NFV OpenStack Edition also supports certified third-party shared storage solutions, as listed in the VMware Compatibility Guide.

vSAN is a software feature built into the ESXi hypervisor that allows locally attached storage to be pooled and presented as a shared storage pool for all hosts in a vSphere cluster. This simplifies the storage configuration with a single datastore per cluster for management and VNF workloads. With vSAN, VM data is stored as objects and components. One object consists of multiple components that are distributed across the vSAN cluster based on the policy that is assigned to the object. The policy for the object ensures a highly available storage backend for the cluster workload, with no single point of failure.

vSAN is fully integrated hyper-converged storage software. By clustering server hard disk drives (HDDs) or solid-state drives (SSDs), vSAN presents a flash-optimized, highly resilient, shared storage datastore to ESXi hosts and virtual machines. Capacity, performance, and availability can then be controlled through storage policies on a per-VM basis.
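To illustrate how a storage policy translates into component placement, the sketch below estimates the footprint of one object under a mirroring policy. It assumes the commonly documented vSAN rule for RAID-1 mirroring, where tolerating n failures requires n + 1 replicas and at least 2n + 1 hosts. The "failures to tolerate" setting is not named in this section, and the function name is hypothetical. The estimate ignores witness components and free-space overhead.

```python
def mirroring_footprint(object_gb, failures_to_tolerate):
    """Estimate replicas, minimum hosts, and raw capacity for a mirrored object.

    Assumption: RAID-1 mirroring, where tolerating n failures needs
    n + 1 full replicas placed on at least 2n + 1 hosts.
    """
    if failures_to_tolerate < 0:
        raise ValueError("failures_to_tolerate must be zero or greater")
    replicas = failures_to_tolerate + 1
    return {
        "replicas": replicas,
        "min_hosts": 2 * failures_to_tolerate + 1,
        # Raw capacity consumed by the data replicas only.
        "raw_capacity_gb": object_gb * replicas,
    }
```

For example, a 100 GB object that must survive one failure needs two replicas on a cluster of at least three hosts and consumes about 200 GB of raw capacity.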

Network Design

The vCloud NFV Edge platform consists of infrastructure networks and VM networks. Infrastructure networks are host level networks that connect hypervisors to physical networks. Each ESXi host has multiple port groups configured for each infrastructure network.

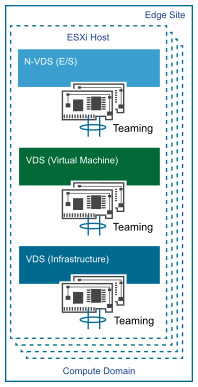

The hosts in each cluster are configured with VMware vSphere® Distributed Switch™ (vDS) devices that provide consistent network configuration across multiple hosts. One vSphere Distributed Switch is used for VM networks and the other one maintains the infrastructure networks. Also, the N-VDS switch is used as the transport for Telco workload traffic.

Infrastructure networks are used by the ESXi hypervisor for vMotion, VMware vSphere Replication, vSAN traffic, management, and backup. The VM networks are used by VMs to communicate with each other. For each cluster, the separation between infrastructure and VM networks ensures security and provides network resources where needed. This separation is implemented by two vSphere Distributed Switches, one for infrastructure networks and another for VM networks. Each distributed switch has separate uplink connectivity to the physical data center network, completely separating its traffic from other network traffic. The uplinks are mapped to a pair of physical NICs on each ESXi host for optimal performance and resiliency. In addition to the infrastructure networks, a virtual machine network on the vDS is required for the NSX-T Edge for North-South traffic.

VMs can be connected to each other over a VLAN or over Geneve-based overlay tunnels. Both networks are designed according to the requirements of the workloads that are hosted by a specific cluster. The infrastructure vSphere Distributed Switch and networks remain the same regardless of the cluster function. However, the VM networks depend on the networks that the specific cluster requires. The VM networks are created by NSX-T Data Center to provide enhanced networking services and performance to the workloads. The ESXi host’s physical NICs are used as uplinks to connect the distributed switches to the physical network switches. All ESXi physical NICs connect to layer 2 or layer 3 managed switches on the physical network. For redundancy purposes, it is common to use two switches for connecting to the host physical NICs.
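The separation described above (two distributed switches per cluster, each with its own pair of physical NIC uplinks) can be checked against a host's planned layout. This is a minimal sketch under stated assumptions: the `DistributedSwitch` type, the role labels, and the `vmnic` names are hypothetical, and the N-VDS used for Telco workload traffic is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DistributedSwitch:
    """Hypothetical model of one distributed switch as seen from an ESXi host."""
    name: str
    role: str           # "infrastructure" or "vm"
    uplink_nics: tuple  # physical NIC names on the host, e.g. ("vmnic0", "vmnic1")


def check_host_switch_layout(switches):
    """Return the list of layout problems (empty if the separation holds)."""
    problems = []
    roles = sorted(s.role for s in switches)
    if roles != ["infrastructure", "vm"]:
        problems.append("expected exactly one infrastructure and one vm switch")
    owner = {}  # physical NIC name -> name of the switch that uses it
    for s in switches:
        # Each switch maps its uplinks to a pair of distinct physical NICs.
        if len(set(s.uplink_nics)) != 2:
            problems.append(f"{s.name}: expected a pair of physical NICs")
        for nic in s.uplink_nics:
            # Uplinks must not be shared between the two switches.
            if nic in owner and owner[nic] != s.name:
                problems.append(f"{nic} is shared by {owner[nic]} and {s.name}")
            owner.setdefault(nic, s.name)
    return problems
```

A layout passes only when the infrastructure and VM switches each own two distinct physical NICs and no NIC appears on both switches.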

The infrastructure networks used in the edge site include:

- ESXi Management Network. The network for the ESXi host management traffic.
- vMotion Network. The network for the VMware vSphere® vMotion® traffic.
- vSAN Network. The network for the vSAN shared storage traffic.