The placement of workloads is typically a subset of the VNF onboarding process and is a collaborative effort between the VNF vendor and the CSP. A prescriptive set of steps must be followed to package and deploy a VNF. The VMware Ready for NFV program is a good vehicle for pre-certifying VNFs and their onboarding compliance with the vCloud NFV OpenStack Edition platform, which helps ensure smooth deployment in the CSP environment.

Before a VNF is onboarded, the VNF vendor must provide the CSP with all the prerequisites for the successful onboarding of the VNF. This includes information such as the VNF format, the number of required networks, East-West and North-South network connectivity, routing policy, security policy, IP ranges, and performance requirements.
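The prerequisites listed above can be captured in a structured record so that gaps are caught before onboarding starts. The following is a minimal sketch only: the class `VnfPrerequisites`, its field names, and the checks in `missing_items` are hypothetical and are not part of any VMware or OpenStack API.

```python
from dataclasses import dataclass, field
from ipaddress import ip_network
from typing import Dict, List


@dataclass
class VnfPrerequisites:
    """Hypothetical record of what the VNF vendor hands to the CSP."""
    vnf_name: str
    vnf_format: str                      # illustrative value, for example "ova"
    required_networks: int
    east_west_networks: List[str]
    north_south_networks: List[str]
    ip_ranges: List[str]                 # CIDR notation
    routing_policy: str = ""
    security_policy: str = ""
    performance_requirements: Dict[str, str] = field(default_factory=dict)

    def missing_items(self) -> List[str]:
        """Return a list of human-readable gaps; an empty list means complete."""
        problems: List[str] = []
        declared = len(self.east_west_networks) + len(self.north_south_networks)
        if declared != self.required_networks:
            problems.append(
                f"expected {self.required_networks} networks, got {declared}"
            )
        for cidr in self.ip_ranges:
            try:
                ip_network(cidr)         # rejects malformed ranges and set host bits
            except ValueError:
                problems.append(f"invalid IP range: {cidr}")
        if not self.routing_policy:
            problems.append("routing policy not provided")
        if not self.security_policy:
            problems.append("security policy not provided")
        if not self.performance_requirements:
            problems.append("performance requirements not provided")
        return problems
```

A record that returns an empty list from `missing_items()` carries every item named above. Any other result tells the vendor what is still outstanding.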

VNF Onboarding with VMware Integrated OpenStack

After the initial VNF requirements, images, and formats are clarified, a project must be created to deploy the VNF in an operational environment. Projects are the VMware Integrated OpenStack constructs that map to tenants. Administrators create projects and assign users to each project. Permissions are managed through definitions for user, group, and project. Users have a further restricted set of rights and privileges. Users are limited to the projects to which they are assigned, although they can be assigned to more than one project. When a user logs in to a project, they are authenticated by Keystone. Once the user is authenticated, they can perform operations within the project.

Resource Allocation

When building a project for the VNF, the administrator must set the initial quota limits for the project. Quotas are the operational limits that configure the amount of system resources that are available per project, and they can be enforced at the project and user level. For fine-grained resource allocation and control, the quota of the resources that are available to a project can be further divided using Tenant vDCs. A Tenant vDC provides resource isolation and guaranteed resource availability for each tenant. When a user logs in to a project, they see an overview of the project including the resources that are provided for them, the resources they have consumed, and the remaining resources.

Resource allocation at the Edge data centers leverages the same mechanism as in the Core data center. Tenant vDCs are created at the Edge data center to carve out resources for the tenant Edge workloads and are assigned to the respective project for the tenant, ensuring a consistent level of fine-grained resource allocation and control across the infrastructure.
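The quota accounting described in this section (provided, consumed, and remaining resources per project) can be pictured with a small model. This is an illustrative sketch, not the VMware Integrated OpenStack implementation: the class `ProjectQuota` and the resource names `cores`, `ram_mb`, and `instances` are assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ProjectQuota:
    """Hypothetical per-project quota tracker."""
    limits: Dict[str, int]
    used: Dict[str, int] = field(default_factory=dict)

    def remaining(self, resource: str) -> int:
        """Resources still available under the project limit."""
        return self.limits[resource] - self.used.get(resource, 0)

    def consume(self, resource: str, amount: int) -> None:
        """Reserve resources, refusing any request that exceeds the quota."""
        if amount > self.remaining(resource):
            raise ValueError(
                f"quota exceeded for {resource}: "
                f"requested {amount}, remaining {self.remaining(resource)}"
            )
        self.used[resource] = self.used.get(resource, 0) + amount

    def overview(self) -> Dict[str, Dict[str, int]]:
        """Limit, used, and remaining values for every resource."""
        return {
            name: {
                "limit": limit,
                "used": self.used.get(name, 0),
                "remaining": self.remaining(name),
            }
            for name, limit in self.limits.items()
        }


if __name__ == "__main__":
    quota = ProjectQuota(limits={"cores": 16, "ram_mb": 32768, "instances": 4})
    quota.consume("cores", 8)
    print(quota.overview())
```

The same structure applies whether the quota is carved out at the Core or the Edge data center, because both use the same allocation mechanism.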

VNF Networking

Based on specific VNF networking requirements, a tenant can provision East-West connectivity, security groups, firewalls, micro-segmentation, NAT, and LBaaS using the VMware Integrated OpenStack user interface or command line. VNF North-South connectivity is established by connecting tenant networks to external networks through NSX-T Data Center Tier-0 routers that are deployed in Edge nodes. External networks are created by administrators and a variety of VNF routing scenarios are possible.

After the VNFs are deployed, their routing, switching, and security policies must be configured. Many infrastructure services are available, and they can be configured in different ways. This document discusses a few of these options in the later sections.

Tenant networks are accessible by all Tenant vDCs within the project in the same data center. Therefore, the implementation of East-West connectivity between VNF-Cs in the same Tenant vDC in the Core data center and the connectivity between VNF-Cs in Tenant vDCs in the Edge data center belonging to the same project is identical. Tenant networks are implemented as logical switches within the project. The North-South network is a tenant network that is connected to the telecommunications network through an N-VDS Enhanced for data-intensive workloads or by using an N-VDS Standard through an NSX Edge Cluster. VNFs utilize the North-South network of their data center when connectivity to remote data centers is required, such as when workloads in the Core data center need to communicate with those in the Edge data center and vice versa.

VMware Integrated OpenStack exposes a rich set of API calls to provide automation. The deployment of VNFs can be automated by using a Heat template. With API calls, the upstream VNFM and NFVO can automate all aspects of the VNF life cycle.
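As an illustration of Heat-based automation, the sketch below builds a small Heat Orchestration Template (HOT) as a Python dictionary and serializes it to JSON. The resource types `OS::Neutron::Net`, `OS::Neutron::Subnet`, and `OS::Nova::Server` are standard Heat resource types. The function name `build_vnf_stack_template` and the image, flavor, and CIDR values are placeholders assumed for the example, and a real VNF would normally need more resources than shown here.

```python
import json
from typing import Any, Dict


def build_vnf_stack_template(
    vnf_name: str, image: str, flavor: str, cidr: str
) -> Dict[str, Any]:
    """Return a minimal HOT document: one tenant network and one VNF-C."""
    return {
        "heat_template_version": "2016-10-14",
        "description": f"Sketch: tenant network and one VNF-C for {vnf_name}",
        "resources": {
            "vnf_net": {
                "type": "OS::Neutron::Net",
                "properties": {"name": f"{vnf_name}-net"},
            },
            "vnf_subnet": {
                "type": "OS::Neutron::Subnet",
                "properties": {
                    "network": {"get_resource": "vnf_net"},
                    "cidr": cidr,
                },
            },
            "vnf_server": {
                "type": "OS::Nova::Server",
                # Boot only after the subnet exists so the port gets an address.
                "depends_on": "vnf_subnet",
                "properties": {
                    "name": f"{vnf_name}-vnfc-0",
                    "image": image,      # placeholder image name
                    "flavor": flavor,    # placeholder flavor name
                    "networks": [{"network": {"get_resource": "vnf_net"}}],
                },
            },
        },
    }


if __name__ == "__main__":
    template = build_vnf_stack_template(
        "demo-vnf", "demo-image", "m1.medium", "192.168.10.0/24"
    )
    # JSON is a subset of YAML, so this output can be stored as a template file.
    print(json.dumps(template, indent=2))
```

Generating the template from code rather than by hand lets an upstream VNFM or NFVO parameterize the same structure for each tenant or site.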

VNF Onboarding

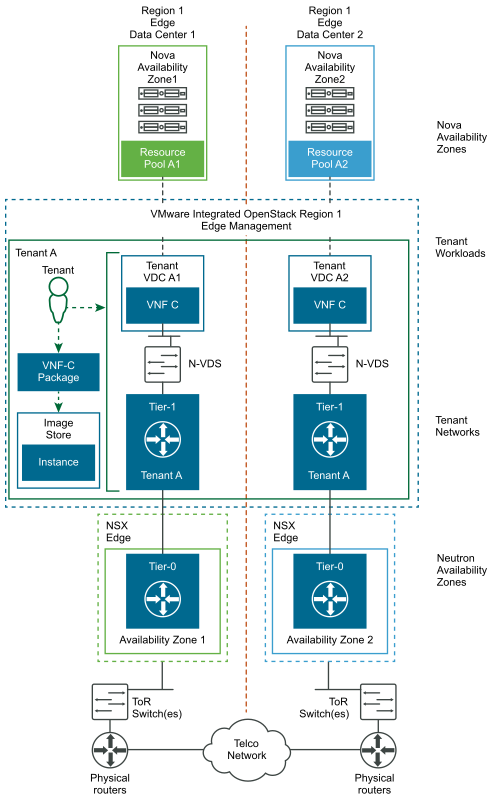

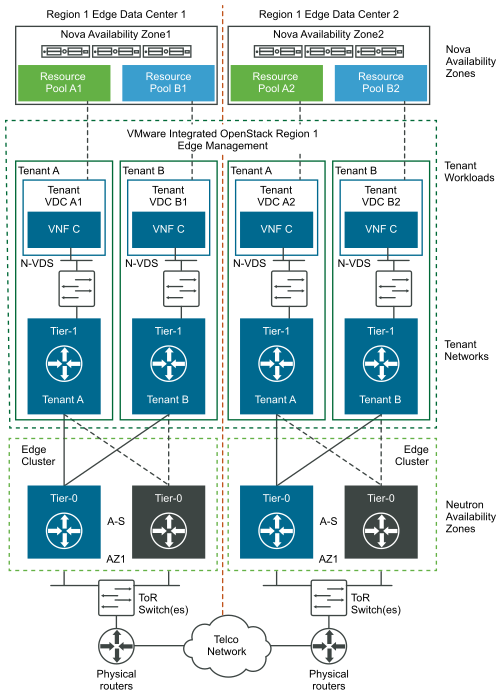

The VNF workload onboarding introduced in OSE 3.1 is still valid in this Edge reference architecture, with the exception that compute nodes are now remotely located at an edge site. All the functional VNF workload onboarding principles for VIO remain the same.

Edge sites contain VNFs that run on VIO tenant networks, which can potentially connect to other tenant networks at the Core data center where other VNF components may be deployed. The VIO instances themselves are deployed only at the Core data center and are in line with vCenter Server and its associated FCAPS components (vROps, vRNI, and vRLI). From a virtual infrastructure management perspective, vCenter Server in the Core data center is the only component talking to the compute nodes in the Edge data center for workload placement and state management. The VIO instances in the Core data center relay virtual infrastructure requests to their respective vCenter Server and NSX-T Data Center VIM components through the use of Nova and Neutron plugins respectively. These VIM components then carry out the tasks on behalf of VIO, such as provisioning tenant networks and workload resource management.

VNF Placement

After the VNF is onboarded, the tenant administrator deploys the VNF to either the Core data center or the Edge data center depending on the defined policies and workload requirements.

The DRS, NUMA, and Nova Schedulers ensure that the initial placement of the workload meets the target host aggregate and acceleration configurations defined in the policy. Dynamic workload balancing ensures the policies are respected when there is resource contention. The workload balancing can be manual, semi-supervised, or fully automated.

After the host aggregates are defined and configured, policies for workload placement should be defined for the workload instances. The following diagram describes the workload placement architecture for the edge sites with VIO.

Flavor Specification for Instances

Flavors are templates with a predefined or custom resource specification that are used to instantiate workloads. A flavor can be configured with additional Extra Specs metadata parameters for workload placement.
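The relationship between flavor Extra Specs and host aggregates can be sketched as a simple matching step. The example below is a simplified model loosely based on how OpenStack Nova matches extra specs scoped with `aggregate_instance_extra_specs:` against aggregate metadata. It supports exact-match values only, and the metadata keys `site` and `datapath`, the flavor names, and the host names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Scope prefix used by Nova for extra specs that target aggregate metadata.
SCOPE = "aggregate_instance_extra_specs:"


@dataclass
class Flavor:
    name: str
    vcpus: int
    ram_mb: int
    disk_gb: int
    extra_specs: Dict[str, str] = field(default_factory=dict)


@dataclass
class HostAggregate:
    name: str
    metadata: Dict[str, str]
    hosts: List[str]


def candidate_hosts(flavor: Flavor, aggregates: List[HostAggregate]) -> List[str]:
    """Return hosts whose aggregate metadata satisfies every scoped extra spec."""
    wanted = {
        key[len(SCOPE):]: value
        for key, value in flavor.extra_specs.items()
        if key.startswith(SCOPE)
    }
    hosts: List[str] = []
    for aggregate in aggregates:
        if all(aggregate.metadata.get(k) == v for k, v in wanted.items()):
            hosts.extend(aggregate.hosts)
    return hosts


if __name__ == "__main__":
    aggregates = [
        HostAggregate("core-standard", {"site": "core"}, ["core-esx-01"]),
        HostAggregate(
            "edge-accelerated",
            {"site": "edge", "datapath": "enhanced"},
            ["edge-esx-01", "edge-esx-02"],
        ),
    ]
    edge_flavor = Flavor(
        "vnf.edge.dataplane", 8, 16384, 40,
        {SCOPE + "site": "edge", SCOPE + "datapath": "enhanced"},
    )
    print(candidate_hosts(edge_flavor, aggregates))
```

A flavor without scoped extra specs matches every aggregate in this model, so placement constraints apply only to the workloads that declare them.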

Automation

To meet the operational policies and SLAs for workloads, closed-loop automation is necessary across the shared cloud infrastructure environment. This domain can host functions such as an NFVO that is responsible for service blueprinting, chaining, and orchestration across multiple cloud infrastructure environments. Next to the NFVO are the global SDN control functions that are responsible for stitching and managing physical and overlay networks for cross-site services. Real-time performance monitoring can be integrated into the SDN functions to dynamically optimize network configurations, routes, capacity, and so on.

Capacity and demand planning, SLA violations, performance degradations, and issue isolation capabilities can be augmented with the analytics-enabled reference architecture. The analytics-enabled architecture provisions a workflow automation framework to provide closed-loop integrations with NFVO and VNFM for just-in-time optimizations. A successful cloud automation strategy implies full programmability across other domains. For more information, refer to the API Documentation for Automation.
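The closed-loop idea can be reduced to a single evaluation step: compare monitored values against SLA thresholds and emit the actions to hand to the orchestration layer. The sketch below is purely illustrative. The class `SlaRule`, the metric names, and the action names are hypothetical and do not correspond to any specific analytics, NFVO, or VNFM interface.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SlaRule:
    """Hypothetical rule: request `action` when `metric` exceeds `threshold`."""
    metric: str
    threshold: float
    action: str


def evaluate(samples: Dict[str, float], rules: List[SlaRule]) -> List[str]:
    """Return the actions whose rules are violated by the current samples.

    Metrics that are missing from `samples` are treated as not violated.
    """
    actions: List[str] = []
    for rule in rules:
        value = samples.get(rule.metric)
        if value is not None and value > rule.threshold:
            actions.append(rule.action)
    return actions


if __name__ == "__main__":
    rules = [
        SlaRule("cpu_util_pct", 80.0, "scale_out"),
        SlaRule("packet_loss_pct", 0.1, "reroute"),
    ]
    print(evaluate({"cpu_util_pct": 91.0, "packet_loss_pct": 0.0}, rules))
```

In a full loop, this evaluation would run on each monitoring interval, and the resulting actions would be passed to the NFVO or VNFM through their APIs.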