Once the VNF is onboarded, its placement is determined by the defined policies and by the target host aggregates in which the VNF is to be deployed.

Before determining how the workloads are placed in the Resource Pod, the following initial considerations need to be taken into account, based on the traffic profile and characteristics of the VNF.

-

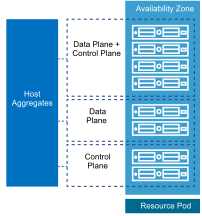

Is it an accelerated and non-accelerated workload? For the purposes of this discussion, assume that accelerated workloads are Data Plane, while non-accelerated are Control Plane functions.

-

Does the control plane workload need a dedicated host infrastructure or can they be co-located with the data plane functions?

To optimize resource consumption across the hosts in the resource pools and achieve the required performance across workloads, the shared infrastructure needs to be designed and configured as follows:

- Group the Nova compute nodes, which are vCenter Server clusters, into host aggregates with similar capability profiles.
- Define OpenStack Flavors with optimization policies.
- Define host affinity policies.
- Define DRS placement policies.
- Allow the Nova schedulers to place initial workloads on a specific compute node and DRS to select a specific ESXi host.
- Allow DRS to optimize workloads at runtime.

Host Aggregate Definition

Host aggregates in the VMware Integrated OpenStack region have to be configured to meet the workload characteristics. The diagram below shows the three potential options to consider.

- A host aggregate is a set of Nova compute nodes. A compute node in VMware Integrated OpenStack refers to a vCenter Server cluster consisting of homogeneous ESXi hosts that are grouped together by capability. A host aggregate can consist of just one vCenter Server cluster. A host aggregate in VMware Integrated OpenStack can be considered an elastic vCenter Server cluster that offers a similar SLA.
- When using vSAN, a minimum of four hosts is recommended for each vCenter Server cluster. Operational factors need to be considered for each cluster when selecting the number of hosts.
- Configure the workload acceleration parameters for NUMA vertical alignment as discussed in the Workload Acceleration section.
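As a rough illustration of grouping clusters by capability, the following minimal sketch models host aggregates as plain Python objects and selects the ones whose metadata matches a workload's requirements. It does not call any OpenStack API. The aggregate names, cluster names, and the `workload` metadata key are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HostAggregate:
    """A named set of Nova compute nodes (vCenter Server clusters in this design)."""
    name: str
    clusters: List[str]
    metadata: Dict[str, str] = field(default_factory=dict)


def matching_aggregates(aggregates, required):
    """Return the aggregates whose metadata contains every required key/value pair."""
    return [
        agg for agg in aggregates
        if all(agg.metadata.get(key) == value for key, value in required.items())
    ]


# Hypothetical aggregates and metadata, for illustration only.
aggregates = [
    HostAggregate("data-plane", ["cluster-dp-01", "cluster-dp-02"],
                  {"workload": "accelerated"}),
    HostAggregate("control-plane", ["cluster-cp-01"],
                  {"workload": "non-accelerated"}),
]

selected = matching_aggregates(aggregates, {"workload": "accelerated"})
print([agg.name for agg in selected])
```

Matching on metadata rather than on aggregate names keeps the requirement independent of how many clusters an aggregate currently contains, which fits the view of an aggregate as an elastic cluster.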

The DRS, NUMA, and Nova schedulers ensure that the initial placement of the workload meets the target host aggregate and acceleration configurations defined in the policy. Dynamic workload balancing ensures that the policies are respected when there is resource contention. Workload balancing can be manual, semi-supervised, or fully automated.

Once the host aggregates are defined and configured, policies for workload placement should be defined for the workload instances.

Flavor Specification for Instances

Flavors are templates with a predefined or custom resource specification that are used to instantiate workloads. A Flavor can be configured with additional Extra Specs metadata parameters for workload placement. The following parameters can be employed for workload acceleration by using the N-VDS Enhanced switch:

- Huge Pages. Huge pages can provide significant, and for some workloads required, performance improvements. OpenStack huge pages can be enabled with a page size of up to 1 GB. The memory page size can be set for huge pages.
- CPU Pinning. VMware Integrated OpenStack supports virtual CPU pinning. When running latency-sensitive applications inside a VM, virtual CPU pinning can be used to eliminate the extra latency that is imposed by virtualization. The flavor extra spec hw:cpu_policy can be set for CPU pinning.
- NUMA Affinity. ESXi has a built-in smart NUMA scheduler that can load balance VMs and align resources from the same NUMA node. This can be achieved by setting the latency sensitivity level to high or by setting the flavor extra spec hw:cpu_policy to dedicated.
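The extra specs described above can be pictured as a simple key/value mapping attached to a flavor. The sketch below is illustrative only. `hw:cpu_policy` is the key named in the text, while `hw:mem_page_size` is the upstream Nova extra spec for page size and is assumed here to apply. The flavor contents and helper functions are hypothetical.

```python
# Extra specs for a hypothetical data plane flavor.
data_plane_specs = {
    "hw:cpu_policy": "dedicated",  # CPU pinning, as named in the text
    "hw:mem_page_size": "1GB",     # assumption: upstream Nova page-size key
}

# A hypothetical control plane flavor with no acceleration settings.
control_plane_specs = {}


def is_pinned(extra_specs):
    """True when the flavor requests dedicated (pinned) virtual CPUs."""
    return extra_specs.get("hw:cpu_policy") == "dedicated"


def page_size(extra_specs):
    """Return the requested memory page size, or None when none is set."""
    return extra_specs.get("hw:mem_page_size")


for name, specs in [("data-plane", data_plane_specs),
                    ("control-plane", control_plane_specs)]:
    print(name, "pinned:", is_pinned(specs), "page size:", page_size(specs))
```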

Host Affinity and Anti-Affinity Policy

The Nova scheduler provides filters that can be used to ensure that VMware Integrated OpenStack instances are automatically placed on the same host (affinity) or separate hosts (anti-affinity).

Affinity or anti-affinity filters can be applied as a policy to a server group. All instances that are members of the same group are subject to the same filters. When an OpenStack instance is created, the server group to which the instance will belong is specified, and the corresponding filter is applied. These server group policies are automatically realized as DRS VM-VM placement rules. DRS ensures that the affinity and anti-affinity policies are maintained both at initial placement and during runtime load balancing.
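The effect of a server group policy can be sketched as a check over where the group's members currently run. This is a simplified model of the rule, not the Nova filter or the DRS implementation. The instance and host names are hypothetical.

```python
def violates_policy(policy, placements):
    """Check a server group's placements against its policy.

    placements maps instance name -> host name for the members of one group.
    """
    hosts = list(placements.values())
    distinct_hosts = len(set(hosts))
    if policy == "affinity":
        # All members must share a single host.
        return distinct_hosts > 1
    if policy == "anti-affinity":
        # No two members may share a host.
        return distinct_hosts < len(hosts)
    raise ValueError(f"unsupported policy: {policy!r}")


# Hypothetical placements for a two-member server group.
same_host = {"vnf-a": "esxi-01", "vnf-b": "esxi-01"}
split_hosts = {"vnf-a": "esxi-01", "vnf-b": "esxi-02"}

print(violates_policy("affinity", same_host))
print(violates_policy("anti-affinity", same_host))
print(violates_policy("anti-affinity", split_hosts))
```

The same check applies at initial placement and after any runtime migration, which is why the text describes the policy as being maintained in both phases.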

DRS Host Groups for Placement

In VMware Integrated OpenStack, Nova compute nodes map to vCenter Server clusters. The cloud administrator can use vSphere DRS settings to control how specific OpenStack instances are placed on hosts in the Compute cluster. In addition to the DRS configuration, the metadata of source images in OpenStack can be modified to ensure that instances generated from those images are correctly identified for placement.

vSphere Web Client provides options to create VM and host groups to contain and manage specific OpenStack instances and to create a DRS rule to manage the distribution of OpenStack instances in a VM group to a specific host group.

VMware Integrated OpenStack lets you modify the metadata of a source image to automatically place instances into VM groups. VM groups are configured in the vSphere Web Client and can be further used to apply DRS rules.
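The chain from image metadata to VM group to host group can be sketched as two lookups. This is a conceptual model only. The metadata key `vm_group` is a placeholder and not the actual property name used by VMware Integrated OpenStack, and the group names, rule table, and host names are hypothetical.

```python
VM_GROUP_KEY = "vm_group"  # placeholder key, not the real image property name

# Hypothetical DRS objects: host groups and VM-group-to-host-group rules.
host_groups = {
    "dp-hosts": ["esxi-01", "esxi-02"],
    "cp-hosts": ["esxi-03"],
}
drs_rules = {"dp-vms": "dp-hosts"}


def allowed_hosts(image_metadata, rules, groups):
    """Return the hosts an instance may run on, or None when no rule applies."""
    vm_group = image_metadata.get(VM_GROUP_KEY)
    if vm_group is None or vm_group not in rules:
        return None
    return groups[rules[vm_group]]


tagged_image = {VM_GROUP_KEY: "dp-vms"}
untagged_image = {}

print(allowed_hosts(tagged_image, drs_rules, host_groups))
print(allowed_hosts(untagged_image, drs_rules, host_groups))
```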