The Three-Pod design completely separates the vCloud NFV functional blocks by using a Management Pod, an Edge Pod, and a Resource Pod for their functions. The initial deployment of a Three-Pod design consists of three vSphere clusters, one cluster per Pod. Clusters are scaled up by adding ESXi hosts, whereas Pods are scaled up by adding clusters. Because management, Edge, and resource functions are separated into individually scalable Pods, CSPs can plan capacity according to the needs of the specific function that each Pod hosts. This provides greater operational flexibility.
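The two scaling axes described above (hosts within a cluster, clusters within a Pod) can be modeled as a small data structure. This is a hypothetical sketch, not a VMware API: the `Cluster` and `Pod` classes, their fields, and names such as `resource-cluster-01` and `esxi-r01` are illustrative assumptions.

```python
from dataclasses import dataclass, field

# Hypothetical model of the Three-Pod scaling rules; not a VMware API.
@dataclass
class Cluster:
    name: str
    hosts: list[str] = field(default_factory=list)

    def add_host(self, esxi_host: str) -> None:
        # A cluster scales up by adding ESXi hosts.
        self.hosts.append(esxi_host)

@dataclass
class Pod:
    role: str  # "management", "edge", or "resource"
    clusters: list[Cluster] = field(default_factory=list)

    def add_cluster(self, cluster: Cluster) -> None:
        # A Pod scales up by adding clusters.
        self.clusters.append(cluster)

    def host_count(self) -> int:
        return sum(len(c.hosts) for c in self.clusters)

def initial_three_pod() -> dict[str, Pod]:
    # Initial deployment: three vSphere clusters, one per Pod.
    return {
        role: Pod(role, [Cluster(f"{role}-cluster-01")])
        for role in ("management", "edge", "resource")
    }

# Scale only the Resource Pod; the Management and Edge Pods are untouched.
pods = initial_three_pod()
pods["resource"].clusters[0].add_host("esxi-r01")
pods["resource"].add_cluster(Cluster("resource-cluster-02", ["esxi-r02"]))
```

Keeping each Pod as its own object mirrors the point of the design: capacity added to one Pod does not change the others.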

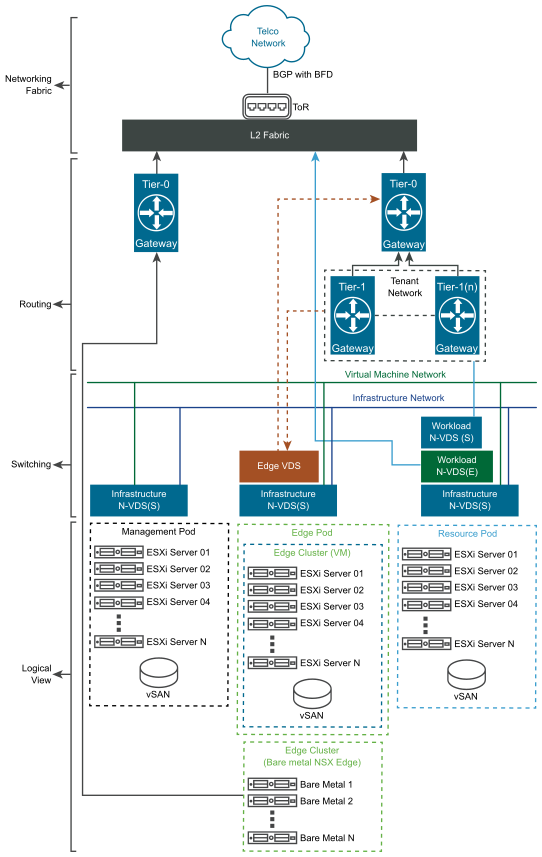

The following diagram depicts the physical representation of the compute and networking connectivity and the logical layers of the switching and routing fabric.

This diagram shows both Edge form factors (VM and bare metal) to demonstrate how each deployment appears. Your deployment will include only one form factor.

The initial deployment of a Three-Pod design is more hardware-intensive than the initial deployment of a Two-Pod design. Each Pod in the design scales up independently of the others. A Three-Pod design contains the same components as a Two-Pod design; only the way the functions are combined to form the solution differs. Regardless of the Pod design used to create the NFVI, VNFs perform the same way.

Logical View

- Management Pod. Hosts all the NFV management components. Its functions include resource orchestration, analytics, business continuity and disaster recovery (BCDR), third-party management, NFV-O, and other ancillary management functions.

- Edge Pod. Hosts the NSX-T Data Center network components, which are the NSX Edge nodes. Edge nodes provide connectivity to the physical infrastructure for North-South traffic and provide services such as NAT and load balancing. Edge nodes can be deployed in a VM or bare metal form factor to meet capacity and performance needs.

- Resource Pod. Provides the virtualized runtime environment, that is, compute, network, and storage, to execute workloads.

Routing and Switching

Before deploying the Three-Pod configuration, it is a best practice to plan a VLAN design that isolates infrastructure, VM, and VIM traffic.
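As a hedged illustration, a VLAN plan can be kept as a simple mapping from traffic type to VLAN ID and checked for collisions before deployment. The traffic names and VLAN IDs below are hypothetical placeholders, not recommendations; only the usable 802.1Q range of 1 to 4094 is a fixed rule.

```python
# Hypothetical VLAN plan: traffic names and IDs are placeholders only.
VLAN_PLAN = {
    "esxi-management": 100,   # infrastructure
    "vmotion": 101,           # infrastructure
    "vsan": 102,              # infrastructure
    "vim-management": 110,    # VIM components
    "overlay-transport": 200, # VM traffic carried in the overlay
    "edge-uplink-1": 300,
    "edge-uplink-2": 301,
}

def validate_vlan_plan(plan: dict[str, int]) -> list[str]:
    """Return a list of problems; an empty list means the basic checks pass."""
    problems = []
    seen: dict[int, str] = {}
    for traffic, vid in plan.items():
        # 802.1Q reserves VLAN IDs 0 and 4095, leaving 1-4094 usable.
        if not 1 <= vid <= 4094:
            problems.append(f"{traffic}: VLAN {vid} outside 1-4094")
        # Two traffic types sharing a VLAN would defeat the isolation goal.
        if vid in seen:
            problems.append(f"{traffic}: VLAN {vid} already used by {seen[vid]}")
        else:
            seen[vid] = traffic
    return problems

print(validate_vlan_plan(VLAN_PLAN))
```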

The N-VDS switch port groups have different requirements based on the Pod profile and networking requirements.

For example, one N-VDS Standard switch can be used in the Management Pod for both VMkernel traffic and VM management traffic. While the switching design is standardized for the Management and Edge Pods, the Resource Pod offers flexibility with the two classes of NSX-T Data Center switches (N-VDS Standard and N-VDS Enhanced). The N-VDS Standard switch offers overlay and VLAN networking, while the N-VDS Enhanced switch provides DPDK-based acceleration to workloads.

N-VDS Enhanced switches are VLAN backed only and do not support overlay networking.
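The switch-selection rules above can be encoded as a small decision function. This is a sketch under stated assumptions: `select_nvds_mode` and its parameters are hypothetical, and restricting N-VDS Enhanced to the Resource Pod follows the description above rather than any product API.

```python
from enum import Enum

class NvdsMode(Enum):
    STANDARD = "N-VDS Standard"
    ENHANCED = "N-VDS Enhanced"

def select_nvds_mode(pod: str, needs_overlay: bool, needs_dpdk: bool) -> NvdsMode:
    """Hypothetical helper: pick a switch class for one switch in one Pod."""
    # Assumption from the text: only the Resource Pod offers both switch classes.
    if needs_dpdk and pod != "resource":
        raise ValueError("only the Resource Pod offers the N-VDS Enhanced switch class")
    # N-VDS Enhanced is VLAN-backed only, so it cannot carry overlay traffic.
    if needs_dpdk and needs_overlay:
        raise ValueError("N-VDS Enhanced does not support overlay networking")
    return NvdsMode.ENHANCED if needs_dpdk else NvdsMode.STANDARD

print(select_nvds_mode("resource", needs_overlay=False, needs_dpdk=True).value)
```

A workload that needs both overlay networking and DPDK acceleration is rejected here because, in this model, a single switch cannot provide both.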

The NSX-T Data Center two-tiered routing fabric provides the separation between the provider routers (Tier-0) and the tenant routers (Tier-1).
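To make the provider/tenant split concrete, the sketch below models a Tier-0 router with Tier-1 routers attached beneath it. The class names, the `attach` and `north_path` methods, and the rule that each Tier-1 has a single Tier-0 uplink are assumptions of this hypothetical model, not the NSX-T API.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical model of the two-tier routing fabric; not the NSX-T API.
@dataclass(eq=False)
class Tier0Router:
    """Provider router, owned by the CSP."""
    name: str
    tier1s: list[Tier1Router] = field(default_factory=list)

@dataclass(eq=False)
class Tier1Router:
    """Tenant router."""
    name: str
    tenant: str
    uplink: Tier0Router | None = field(default=None, repr=False)

    def attach(self, tier0: Tier0Router) -> None:
        # Assumption of this model: each Tier-1 has exactly one Tier-0 uplink.
        if self.uplink is not None:
            raise ValueError(f"{self.name} is already attached to {self.uplink.name}")
        self.uplink = tier0
        tier0.tier1s.append(self)

    def north_path(self) -> list[str]:
        # Tenant traffic leaves through its Tier-1, then the provider Tier-0.
        if self.uplink is None:
            raise ValueError(f"{self.name} has no Tier-0 uplink")
        return [self.name, self.uplink.name]

provider = Tier0Router("t0-provider")
tenant_a = Tier1Router("t1-tenant-a", tenant="tenant-a")
tenant_a.attach(provider)
print(tenant_a.north_path())
```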

The Edge nodes provide the physical connectivity to the CSP's core network and to external networks. Both dynamic and static routing are possible at the Edge nodes.

NSX-T Data Center requires a minimum MTU of 1600 bytes for overlay traffic. The recommended MTU is 9000 bytes.
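The 1600-byte minimum follows from encapsulation overhead. NSX-T Data Center carries overlay traffic in Geneve, and the arithmetic below is a sketch assuming an IPv4 underlay, an untagged inner Ethernet frame, and a configurable Geneve option length (the actual option length depends on the deployment). The function names are hypothetical.

```python
# Geneve encapsulation overhead in bytes, counted against the underlay IP MTU.
# Assumptions: IPv4 outer header, untagged inner frame, no FCS in the count.
INNER_ETHERNET = 14  # inner Ethernet header carried inside the tunnel
OUTER_IPV4 = 20
OUTER_UDP = 8
GENEVE_BASE = 8      # fixed Geneve header; options (if any) come on top

def overhead(geneve_options: int = 0) -> int:
    # Geneve option length is expressed in 4-byte multiples.
    if geneve_options % 4:
        raise ValueError("Geneve option length must be a multiple of 4 bytes")
    return INNER_ETHERNET + OUTER_IPV4 + OUTER_UDP + GENEVE_BASE + geneve_options

def required_underlay_mtu(inner_mtu: int, geneve_options: int = 0) -> int:
    """Underlay MTU needed to carry inner packets of inner_mtu without fragmentation."""
    return inner_mtu + overhead(geneve_options)

def max_inner_mtu(underlay_mtu: int, geneve_options: int = 0) -> int:
    """Largest inner packet that fits in the given underlay MTU."""
    return underlay_mtu - overhead(geneve_options)

print(required_underlay_mtu(1500))  # standard inner MTU, no Geneve options
print(max_inner_mtu(9000))          # recommended jumbo underlay MTU
```

Under these assumptions a 1500-byte inner MTU needs 1550 bytes of underlay MTU before options, so 1600 leaves some headroom for Geneve options, and a 9000-byte underlay leaves about 8950 bytes for the inner packet.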