ETSI classifies NFV workloads into three categories: management, control, and data plane. Experience deploying vCloud NFV in various CSP networks suggests a further division of data plane workloads into two classes: data plane intensive workloads, and workloads that behave like management and control plane workloads. The latter class has been proven to function well on the vCloud NFV OpenStack Edition platform, as described in this reference architecture. Further information regarding these workloads is provided in the VNF Performance in Distributed Deployments section of this document. For data plane intensive VNFs hosted on the vCloud NFV OpenStack Edition platform, specific design considerations are provided in the following section of this document.

Enhanced Platform Awareness (EPA) delivers carrier-grade, low-latency data plane performance. VMware technologies including CPU pinning, NUMA placement, HugePages support, and SR-IOV support allow CSPs to maintain high network performance.

Data Plane Intensive Design Framework

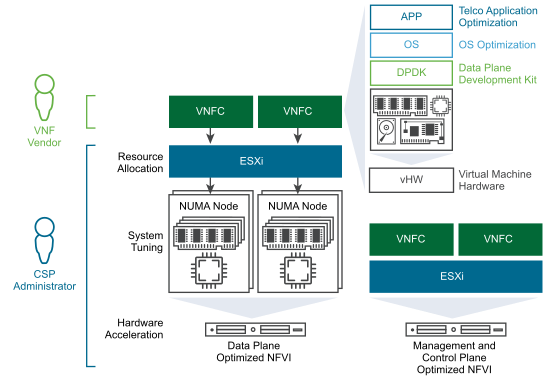

Two parties are involved in the successful deployment and operations of a data plane intensive VNF: the VNF vendor and the NFVI operator. Both parties must be able to understand the performance requirements of the VNF, and share an understanding of the VNF design. They must also be willing to tune the entire stack from the physical layer to the VNF itself, for the demands data plane intensive workloads place on the system. The responsibilities of the two parties are described as follows:

Virtual Network Function Design and Configuration. The vendor supplying the VNF is expected to tune the performance of the VNF components and optimize their software. Data plane intensive workloads benefit from the use of a Data Plane Development Kit (DPDK) to speed up VNFC packet processing and to optimize how packet handling is off-loaded to the virtual NIC. Using the VMware VMXNET3 paravirtualized network interface card (NIC) is a best practice in VNF design for performance-demanding VNFs. VMXNET3 is the most advanced virtual NIC on the VMware platform and has been contributed to the Linux community, making it widely available in Linux distributions.

Once the VNF is created by its supplier, there are several VNFC level configurations that are essential to these types of workloads. Dedicated resource allocation, for the VNFC and the networking-related processes associated with it, can be configured and guaranteed through the use of two main parameters: Latency Sensitivity and System Contexts. Both parameters are discussed in detail in a separate white paper.

Another aspect essential to the performance of a data plane intensive VNF is the number of virtual CPUs required by the VNFC. Modern multiprocessor server architecture groups resources, including memory and PCIe cards, into Non-Uniform Memory Access (NUMA) nodes. Resource usage within a NUMA node is fast and efficient. However, when NUMA boundaries are crossed, traffic must traverse the QPI bridge between the two nodes, which reduces speed and increases latency. For optimal performance, a VNFC that participates in the data plane path should keep its virtual CPUs, memory, and associated physical NIC within a single NUMA node.
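
The single-node guideline can be expressed as a simple fit check. The following Python sketch is illustrative only: the `NumaNode` and `Vnfc` types, their field names, the VNFC names, and the host figures are assumptions made for this example and do not correspond to any VMware or OpenStack API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class NumaNode:
    """Hypothetical description of one NUMA node on a host."""
    node_id: int
    free_cores: int
    free_memory_gb: int
    nic_names: frozenset  # physical NICs attached to this node


@dataclass(frozen=True)
class Vnfc:
    """Hypothetical resource request for one data plane VNFC."""
    name: str
    vcpus: int
    memory_gb: int
    nic_name: str  # physical NIC carrying the VNFC's data plane traffic


def find_single_node_fit(vnfc: Vnfc, nodes: List[NumaNode]) -> Optional[int]:
    """Return the ID of a NUMA node that can hold the VNFC's vCPUs, memory,
    and physical NIC together, or None if no single node can."""
    for node in nodes:
        if (vnfc.nic_name in node.nic_names
                and vnfc.vcpus <= node.free_cores
                and vnfc.memory_gb <= node.free_memory_gb):
            return node.node_id
    return None


# Example two-socket host; all figures are made up for illustration.
host = [
    NumaNode(0, free_cores=10, free_memory_gb=96, nic_names=frozenset({"vmnic0"})),
    NumaNode(1, free_cores=12, free_memory_gb=96, nic_names=frozenset({"vmnic2"})),
]

small = Vnfc("pgw-dp-1", vcpus=8, memory_gb=32, nic_name="vmnic2")
large = Vnfc("pgw-dp-2", vcpus=16, memory_gb=32, nic_name="vmnic2")

print(find_single_node_fit(small, host))  # 1: fits on the node that owns vmnic2
print(find_single_node_fit(large, host))  # None: 16 vCPUs exceed any single node
```

A `None` result signals that the VNFC as sized would have to span NUMA nodes, which is the situation the guideline above advises against.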

Data plane intensive VNFs tend to serve a central role in a CSP network: as a Packet Gateway in a mobile core deployment, a Provider Edge router (PE) in an MPLS network, or a media gateway in an IMS network. As a result, these VNFs are positioned in a centralized location in the CSP network: the data center. With their crucial role, these VNFs are typically static and are used by the central organization to offer services to a large customer base. For example, a virtualized Packet Gateway in a mobile core network will serve a large geographical region as the central termination point for subscriber connections. Once the VNF is deployed, it is likely to remain active for a long duration, barring any NFVI life cycle activities such as upgrades or other maintenance.

This aggregation role translates into a certain sizing requirement. The VNFs must serve many customers, which is the reason for their data plane intensive nature. Such VNFs include many components to allow them to be scaled and managed. These components include at a minimum an OAM function, packet processing functions, VNF-specific load balancing, and often log collection and monitoring. Individual components can also require significant resources to provide large scale services.

The central position of these VNFs, their sizeable scale, and their static nature, all suggest that dedicated resources are required to achieve their expected performance goals. These dedicated resources begin with hosts using powerful servers with high performing network interface cards. The servers are grouped together into a cluster that is dedicated to data plane intensive workloads. Using the same constructs introduced earlier in this document, the data plane intensive cluster is consumed by VMware Integrated OpenStack and is made into a Tenant vDC. VNFs are then onboarded into the VMware Integrated OpenStack image library for deployment.

VMware Integrated OpenStack supports NUMA-aware placement on the underlying vSphere platform. This feature provides low latency and high throughput to Virtual Network Functions (VNFs) that run in telecommunications environments. To achieve low latency and high throughput, the vCPUs, memory, and physical NICs that are used for VM traffic must be aligned on the same NUMA node. The specific teaming policy that must be created depends on the type of deployment.
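
As a rough illustration of what alignment means for a deployed VM, the sketch below checks whether every vCPU, memory allocation, and physical NIC maps to one NUMA node. It assumes the operator has already gathered these mappings from the host by some other means; the function names and input shapes are hypothetical and are not part of VMware Integrated OpenStack or vSphere.

```python
from typing import Dict, Set


def numa_nodes_in_use(vcpu_to_node: Dict[int, int],
                      memory_nodes: Set[int],
                      nic_to_node: Dict[str, int]) -> Set[int]:
    """Collect every NUMA node touched by a VM's vCPUs, memory, and NICs."""
    return (set(vcpu_to_node.values())
            | set(memory_nodes)
            | set(nic_to_node.values()))


def is_numa_aligned(vcpu_to_node: Dict[int, int],
                    memory_nodes: Set[int],
                    nic_to_node: Dict[str, int]) -> bool:
    """True only when all resources sit on exactly one NUMA node.
    Empty input yields False, because nothing has been placed."""
    return len(numa_nodes_in_use(vcpu_to_node, memory_nodes, nic_to_node)) == 1


# vCPUs and memory on node 0, NIC also on node 0: aligned.
aligned = is_numa_aligned({0: 0, 1: 0}, {0}, {"vmnic0": 0})

# Same VM, but its traffic leaves through a NIC on node 1: crosses nodes.
crossing = is_numa_aligned({0: 0, 1: 0}, {0}, {"vmnic2": 1})

print(aligned, crossing)  # True False
```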

With the architecture provided in this section, data plane intensive workloads are assured the resources they require, so they benefit from platform modularity while meeting carrier-grade performance requirements. Specific configuration and VNF design guidelines are detailed in Tuning VMware vCloud NFV for Data Plane Intensive Workloads.