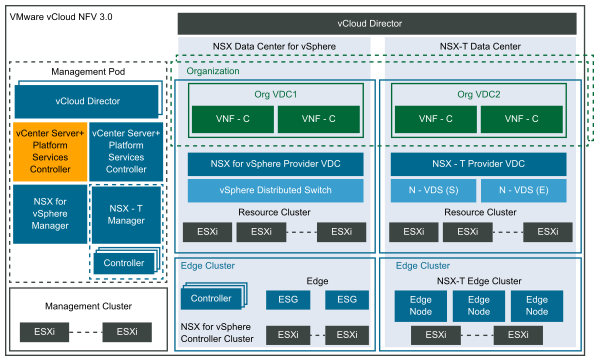

This deployment option allows CSPs to deploy the vCloud NFV 3.0 platform with both the NSX Data Center for vSphere and NSX-T Data Center networking stacks. The CSP can create a dedicated vSphere cluster for each networking stack and map it to vCloud Director logical tenancy constructs. The CSP should also create a dedicated Provider VDC for each networking stack: one for NSX Data Center for vSphere and one for NSX-T Data Center.

| Building Block | Design Objective |
|---|---|
| Management Pod | |
| Resource Pod | |
| Edge Pod | |
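The isolation rule in this option is that each networking stack gets its own vSphere cluster and its own Provider VDC. The following is a minimal sketch that models the rule as a consistency check over a hypothetical inventory. It is not a vCloud Director or vCenter Server API. `NetworkingStack`, `ProviderVDC`, `validate_stack_isolation`, and every cluster and Provider VDC name are illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class NetworkingStack(Enum):
    NSX_V = "NSX Data Center for vSphere"
    NSX_T = "NSX-T Data Center"


@dataclass(frozen=True)
class ProviderVDC:
    # Hypothetical model of a Provider VDC, not a vCloud Director object.
    name: str
    stack: NetworkingStack
    clusters: tuple  # names of the vSphere clusters backing this Provider VDC


def validate_stack_isolation(provider_vdcs):
    """Raise ValueError unless every stack has a Provider VDC and no vSphere
    cluster backs Provider VDCs of two different stacks."""
    cluster_stack = {}
    for pvdc in provider_vdcs:
        for cluster in pvdc.clusters:
            # Record the first stack seen for this cluster, then compare.
            owner = cluster_stack.setdefault(cluster, pvdc.stack)
            if owner is not pvdc.stack:
                raise ValueError(
                    f"cluster {cluster!r} is shared by {owner.value} and {pvdc.stack.value}"
                )
    # Every stack needs at least one dedicated Provider VDC.
    missing = set(NetworkingStack) - {p.stack for p in provider_vdcs}
    if missing:
        names = ", ".join(sorted(s.value for s in missing))
        raise ValueError(f"no Provider VDC for: {names}")


# Hypothetical inventory: one dedicated cluster and Provider VDC per stack.
provider_vdcs = [
    ProviderVDC("pvdc-nsxv", NetworkingStack.NSX_V, ("resource-cluster-nsxv",)),
    ProviderVDC("pvdc-nsxt", NetworkingStack.NSX_T, ("resource-cluster-nsxt",)),
]
validate_stack_isolation(provider_vdcs)  # passes: the stacks are isolated
```

Reusing one cluster name under both Provider VDCs, or dropping either Provider VDC, makes the check raise `ValueError`.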

To deploy clusters based on NSX Data Center for vSphere, use the vCloud NFV 2.0 Reference Architecture design guidelines and principles.

NFVI components such as vCenter Server treat the two stacks as separate entities in terms of host clusters, resource pools, and virtual switches. As the top-level VIM component, vCloud Director allows tenants to consume the resources of either or both stacks by configuring organization VDCs backed by the corresponding Provider VDCs. Each stack has dedicated Resource and Edge Pods for compute and North-South connectivity, respectively.
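The tenancy chain above runs from organization VDC to Provider VDC to networking stack to that stack's Resource and Edge Pods. The sketch below traces the chain as plain lookups. It assumes each organization VDC is backed by exactly one Provider VDC. `OrgVDC`, the lookup tables, and all pod, Provider VDC, and tenant names are hypothetical and do not correspond to any vCloud Director API.

```python
from dataclasses import dataclass

# Hypothetical mapping of each Provider VDC to the networking stack it uses.
PROVIDER_VDC_STACK = {
    "pvdc-nsxv": "NSX Data Center for vSphere",
    "pvdc-nsxt": "NSX-T Data Center",
}

# Hypothetical dedicated pods per stack: Resource Pod for compute,
# Edge Pod for North-South connectivity.
PODS_BY_STACK = {
    "NSX Data Center for vSphere": {"resource": "resource-pod-nsxv", "edge": "edge-pod-nsxv"},
    "NSX-T Data Center": {"resource": "resource-pod-nsxt", "edge": "edge-pod-nsxt"},
}


@dataclass(frozen=True)
class OrgVDC:
    name: str
    provider_vdc: str  # the single Provider VDC backing this organization VDC


def stacks_for_organization(org_vdcs):
    """Return the set of networking stacks an organization consumes."""
    return {PROVIDER_VDC_STACK[vdc.provider_vdc] for vdc in org_vdcs}


def pods_for_org_vdc(vdc):
    """Return the Resource and Edge Pods that serve this organization VDC."""
    return PODS_BY_STACK[PROVIDER_VDC_STACK[vdc.provider_vdc]]


# Tenant A consumes both stacks; tenant B consumes only NSX-T Data Center.
tenant_a = [OrgVDC("tenant-a-vdc1", "pvdc-nsxv"), OrgVDC("tenant-a-vdc2", "pvdc-nsxt")]
tenant_b = [OrgVDC("tenant-b-vdc1", "pvdc-nsxt")]
```

A tenant reaches a second stack by adding an organization VDC backed by that stack's Provider VDC. The stacks themselves stay separate at the cluster and pod level.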