When you use vSphere with Tanzu with vDS networking, HAProxy provides load balancing for developers accessing the Tanzu Kubernetes control plane and for Kubernetes Services of type LoadBalancer. Review the possible topologies that you can implement for the HAProxy load balancer.

Workload Networks on the Supervisor Cluster

To configure a Supervisor Cluster with the vSphere networking stack, you must connect all hosts from the cluster to a vSphere Distributed Switch. Depending on the topology that you implement for the Supervisor Cluster Workload Networks, you create one or more distributed port groups. You assign the port groups as Workload Networks to vSphere Namespaces.

Before you add a host to a Supervisor Cluster, you must add it to all the vSphere Distributed Switches that are part of the cluster.

The Kubernetes control plane VMs on the Supervisor Cluster use three IP addresses from the IP address range that is assigned to the Primary Workload Network. Each node of a Tanzu Kubernetes cluster has a separate IP address assigned from the address range of the Workload Network that is configured with the namespace where the Tanzu Kubernetes cluster runs.
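To make the address budget concrete, the following Python sketch counts how many addresses a Workload Network range provides and how many it needs: three for the Supervisor Cluster control plane VMs when it is the Primary Workload Network, plus one per Tanzu Kubernetes cluster node. The helper names (`ip_range_size`, `required_ips`) and the node counts in the example are hypothetical, and the sketch ignores any other addresses you might reserve in the range.

```python
import ipaddress

# Addresses the Supervisor Cluster control plane consumes on the
# Primary Workload Network: three control plane VMs, one IP each.
SUPERVISOR_CONTROL_PLANE_IPS = 3


def ip_range_size(first: str, last: str) -> int:
    """Return how many addresses an inclusive range such as
    192.168.120.31-192.168.120.40 contains."""
    start = ipaddress.IPv4Address(first)
    end = ipaddress.IPv4Address(last)
    if end < start:
        raise ValueError(f"range end {last} is before range start {first}")
    return int(end) - int(start) + 1


def required_ips(tkc_node_counts: list[int], is_primary: bool) -> int:
    """Addresses a Workload Network must supply: one per Tanzu Kubernetes
    cluster node in the namespaces that use it, plus three if it is the
    Primary Workload Network (hypothetical helper)."""
    base = SUPERVISOR_CONTROL_PLANE_IPS if is_primary else 0
    return base + sum(tkc_node_counts)


if __name__ == "__main__":
    # Hypothetical: one cluster with 1 control plane node and 3 workers
    # in a namespace that uses the Primary Workload Network.
    needed = required_ips([4], is_primary=True)
    available = ip_range_size("192.168.120.31", "192.168.120.40")
    print(f"needed={needed} available={available} fits={needed <= available}")
    # needed=7 available=10 fits=True
```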

Allocation of IP Ranges

Plan for allocating the following IP ranges:

- A range for allocating virtual IPs for HAProxy. HAProxy reserves the IP range that you configure for its virtual servers. For example, if the virtual IP range is 192.168.1.0/24, no host in that range is accessible for traffic other than virtual IP traffic.

  Note: You must not configure a gateway within the HAProxy virtual IP range, because all routes to that gateway will fail.

- An IP range for the nodes of the Supervisor Cluster and Tanzu Kubernetes clusters. Each Kubernetes control plane VM in the Supervisor Cluster has an IP address assigned, for three IP addresses in total. Each node of a Tanzu Kubernetes cluster also has a separate IP address assigned. You must assign a unique IP range to each Workload Network on the Supervisor Cluster that you assign to a namespace.
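A minimal Python sketch of the two virtual IP rules above, using only the standard `ipaddress` module: every address in the VIP CIDR counts as reserved, and a gateway is valid only if it falls outside that CIDR. The function names and the 192.168.2.1 gateway are illustrative assumptions.

```python
import ipaddress


def reserved_vip_count(vip_cidr: str) -> int:
    """HAProxy reserves every address in the VIP range, so count all of
    them, not only the usable host addresses."""
    return ipaddress.ip_network(vip_cidr).num_addresses


def gateway_is_valid(gateway: str, vip_cidr: str) -> bool:
    """A gateway must not fall inside the VIP range: HAProxy claims those
    addresses, so routes to such a gateway would fail."""
    return ipaddress.ip_address(gateway) not in ipaddress.ip_network(vip_cidr)


if __name__ == "__main__":
    vip_range = "192.168.1.0/24"  # the VIP range from the example above
    print(reserved_vip_count(vip_range))               # 256
    print(gateway_is_valid("192.168.1.1", vip_range))  # False: inside the VIPs
    print(gateway_is_valid("192.168.2.1", vip_range))  # True (hypothetical gateway)
```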

An example configuration with one /24 network:

- Network: 192.168.120.0/24

- HAProxy VIPs: 192.168.120.128/25

- 1 IP address for the HAProxy workload interface: 192.168.120.5

Depending on the IPs that are free within the first 128 addresses, you can define IP ranges for Workload Networks on the Supervisor Cluster, for example:

- 192.168.120.31-192.168.120.40 for the Primary Workload Network

- 192.168.120.51-192.168.120.60 for another Workload Network
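The following Python sketch checks the example plan above with the standard `ipaddress` module: the VIP range must sit inside the network, the HAProxy workload interface must be in the network but outside the VIPs, and each Workload Network range must avoid the VIPs and every other allocation. `check_plan` is a hypothetical helper, not part of any vSphere tooling.

```python
from ipaddress import IPv4Address, IPv4Network


def expand(first: str, last: str) -> set[IPv4Address]:
    """Expand an inclusive range such as 192.168.120.31-192.168.120.40."""
    start, end = int(IPv4Address(first)), int(IPv4Address(last))
    return {IPv4Address(i) for i in range(start, end + 1)}


def check_plan(network: str, vips: str, haproxy_if: str,
               workload_ranges: dict[str, tuple[str, str]]) -> list[str]:
    """Return a list of problems; an empty list means the plan is consistent."""
    net, vip_net = IPv4Network(network), IPv4Network(vips)
    iface = IPv4Address(haproxy_if)
    problems = []
    if not vip_net.subnet_of(net):
        problems.append("VIP range is not inside the network")
    if iface not in net or iface in vip_net:
        problems.append("HAProxy workload interface must be in the network but outside the VIPs")
    used = {iface}  # addresses already allocated
    for name, (first, last) in workload_ranges.items():
        addrs = expand(first, last)
        if any(a not in net for a in addrs):
            problems.append(f"{name}: range is outside the network")
        if any(a in vip_net for a in addrs):
            problems.append(f"{name}: range overlaps the HAProxy VIPs")
        if addrs & used:
            problems.append(f"{name}: range overlaps another allocation")
        used |= addrs
    return problems


if __name__ == "__main__":
    example = {
        "Primary Workload Network": ("192.168.120.31", "192.168.120.40"),
        "another Workload Network": ("192.168.120.51", "192.168.120.60"),
    }
    print(check_plan("192.168.120.0/24", "192.168.120.128/25",
                     "192.168.120.5", example))  # []
```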

HAProxy Network Topology

There are two network configuration options for deploying HAProxy: Default and Frontend. The Default configuration has two NICs: one for the Management network and one for the Workload network. The Frontend configuration has three NICs: one each for the Management network, the Workload network, and the Frontend network for clients. The following table describes each network.

| Network | Characteristics |
|---|---|
| Management | The Supervisor Cluster uses the Management network to connect to and program the HAProxy load balancer. |
| Workload | The HAProxy control plane VM uses the Workload network to access the services on the Supervisor Cluster and Tanzu Kubernetes cluster nodes. Note: The Workload network must be on a different subnet than the Management network. Refer to the system requirements. |
| Frontend (optional) | External clients (such as users or applications) accessing cluster workloads use the Frontend network to access backend load-balanced services using virtual IP addresses. |
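The Workload network requirement in the table can be checked mechanically. This Python sketch treats "on a different subnet" as "the two CIDR blocks share no addresses"; the function name and the CIDRs in the example are hypothetical.

```python
from ipaddress import ip_network


def workload_subnet_ok(management_cidr: str, workload_cidr: str) -> bool:
    """Return True if the Workload network is on a different subnet than
    the Management network, i.e. the two CIDR blocks do not overlap."""
    management = ip_network(management_cidr)
    workload = ip_network(workload_cidr)
    return not management.overlaps(workload)


if __name__ == "__main__":
    # Hypothetical CIDRs, chosen only for illustration.
    print(workload_subnet_ok("10.10.0.0/24", "192.168.120.0/24"))  # True
    print(workload_subnet_ok("10.10.0.0/16", "10.10.5.0/24"))      # False: nested
```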

The diagram below illustrates an HAProxy deployment using a Frontend Network topology. The diagram indicates where configuration fields are expected during the installation and configuration process.

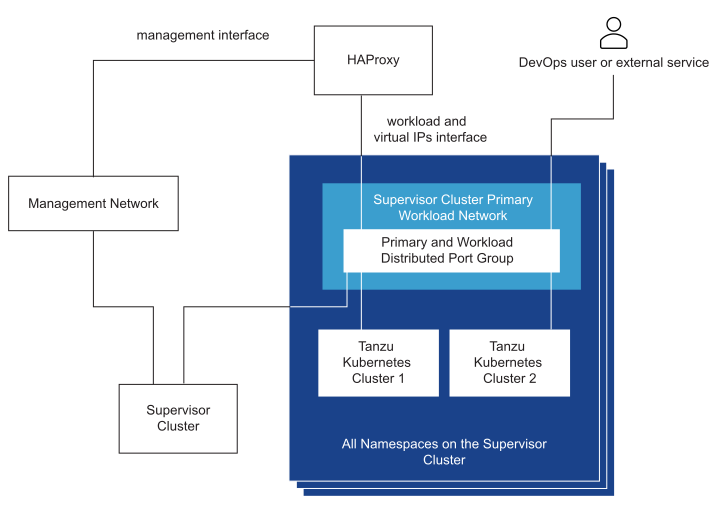

Supervisor Cluster Topology with One Workload Network and HAProxy with Two Virtual NICs

In this topology, you configure a Supervisor Cluster with one Workload Network for the following components:

- Kubernetes control plane VMs

- The nodes of Tanzu Kubernetes clusters.

- The HAProxy virtual IP range where external services and DevOps users connect.

In this configuration, HAProxy is deployed with two virtual NICs (Default configuration): one connected to the Management network and a second one connected to the Primary Workload Network. You must plan for allocating virtual IPs on a separate subnet from the Primary Workload Network.

The traffic flow in this topology is as follows:

- The DevOps user or external service sends traffic to a virtual IP on the Workload Network subnet of the distributed port group.

- HAProxy claims the virtual IP address so that it can load balance the traffic arriving on that IP, and forwards the traffic to either a Tanzu Kubernetes cluster node IP or a control plane VM IP.

- The control plane VM or Tanzu Kubernetes cluster node delivers the traffic to the target pods running inside the Supervisor Cluster or Tanzu Kubernetes cluster, respectively.

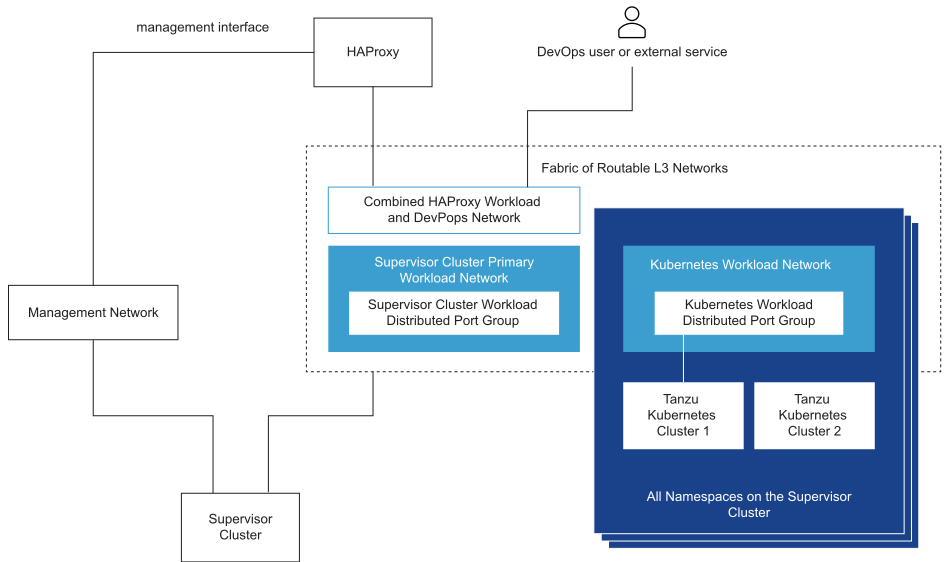

Supervisor Cluster Topology with an Isolated Workload Network and HAProxy with Two Virtual NICs

In this topology, you configure networks for the following components:

- Kubernetes control plane VMs. A Primary Workload Network handles the traffic for Kubernetes control plane VMs.

- Tanzu Kubernetes cluster nodes. A Workload Network that you assign to all namespaces on the Supervisor Cluster connects the Tanzu Kubernetes cluster nodes.

- HAProxy virtual IPs. In this configuration, the HAProxy VM is deployed with two virtual NICs (Default configuration). You can connect the HAProxy VM either to the Primary Workload Network or to the Workload Network that you use for namespaces. You can also connect HAProxy to a VM network that already exists in vSphere and is routable to the Primary and Workload Networks.

The traffic flow in this topology is as follows:

- The DevOps user or external service sends traffic to a virtual IP. Traffic is routed to the network where HAProxy is connected.

- HAProxy claims the virtual IP address so that it can load balance the traffic arriving on that IP, and forwards the traffic to either a Tanzu Kubernetes cluster node IP or a control plane VM IP.

- The control plane VM or Tanzu Kubernetes cluster node delivers the traffic to the target pods running inside the Supervisor Cluster or Tanzu Kubernetes cluster, respectively.

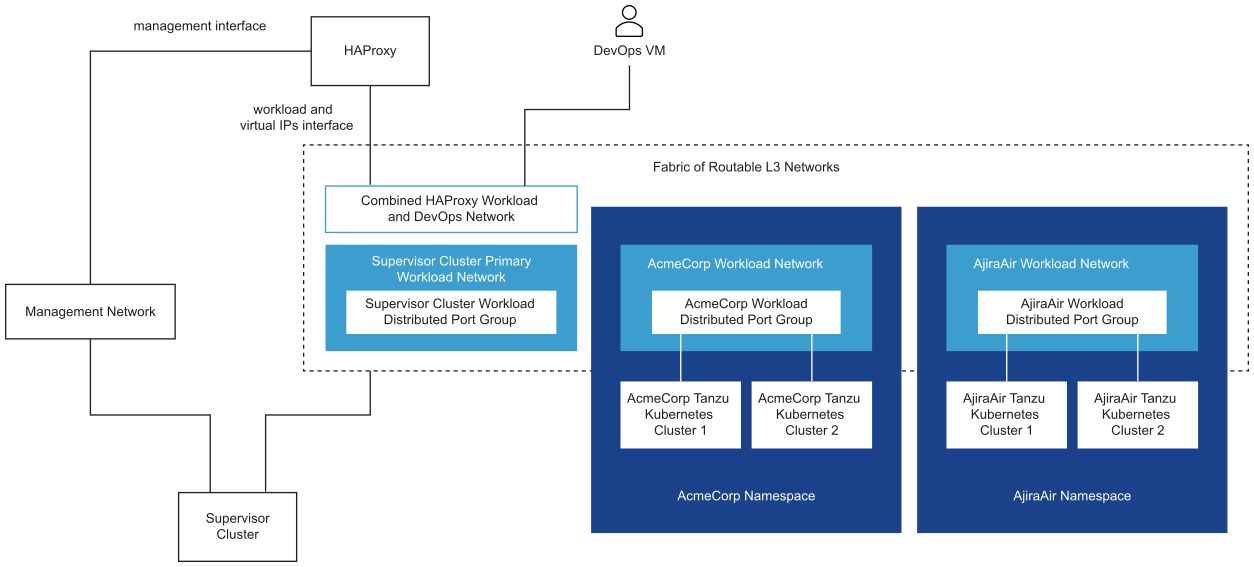

Supervisor Cluster Topology with Multiple Workload Networks and HAProxy with Two Virtual NICs

In this topology, you configure one port group to act as the Primary Workload Network and a dedicated port group to serve as the Workload Network for each namespace. HAProxy is deployed with two virtual NICs (Default configuration), and you can connect it either to the Primary Workload Network or to any of the Workload Networks. You can also use an existing VM network that is routable to the Primary and Workload Networks.
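Because each namespace gets its own port group, separate from the Primary Workload Network, a namespace-to-port-group mapping can be checked for that layout. The Python sketch below flags port groups that are shared between namespaces or that reuse the Primary Workload Network; the function, namespace, and port group names are hypothetical.

```python
from collections import defaultdict


def isolation_violations(primary: str, assignments: dict[str, str]) -> list[str]:
    """Return violations of the 'dedicated Workload Network per namespace'
    layout. `assignments` maps namespace -> port group (hypothetical names)."""
    by_port_group: dict[str, list[str]] = defaultdict(list)
    for namespace, port_group in assignments.items():
        by_port_group[port_group].append(namespace)
    problems = []
    for port_group, namespaces in sorted(by_port_group.items()):
        names = ", ".join(sorted(namespaces))
        if port_group == primary:
            # The Primary Workload Network is reserved for the control plane VMs.
            problems.append(f"{port_group}: Primary Workload Network reused by {names}")
        elif len(namespaces) > 1:
            # Two namespaces on one port group share a layer 2 segment.
            problems.append(f"{port_group}: shared by {names}")
    return problems


if __name__ == "__main__":
    print(isolation_violations("pg-primary", {"ns-a": "pg-a", "ns-b": "pg-a"}))
    # ['pg-a: shared by ns-a, ns-b']
```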

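Supervisor Cluster Topology with Multiple Workload Networks and HAProxy with Three Virtual NICs

In this topology, HAProxy is deployed with three virtual NICs (Frontend configuration): one connected to the Management network, one connected to a Workload Network, and one connected to the Frontend network, which external clients use to reach load-balanced services through virtual IP addresses.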

Selecting Between the Possible Topologies

Before you select a topology, assess the needs of your environment:

- Do you need layer 2 isolation between the Supervisor Cluster and Tanzu Kubernetes clusters?

  - No: use the simplest topology, with one Workload Network serving all components.

  - Yes: use the isolated Workload Network topology, with separate Primary and Workload Networks.

- Do you need further layer 2 isolation between your Tanzu Kubernetes clusters?

  - No: use the isolated Workload Network topology, with separate Primary and Workload Networks.

  - Yes: use the multiple Workload Networks topology, with a separate Workload Network for each namespace and a dedicated Primary Workload Network.

- Do you want to prevent your DevOps users and external services from directly routing to Kubernetes control plane VMs and Tanzu Kubernetes cluster nodes?

  - No: use the two-NIC HAProxy configuration (Default).

  - Yes: use the three-NIC HAProxy configuration (Frontend). This configuration is recommended for production environments.
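The three questions above can be encoded as a small decision function. This Python sketch is one reading of the checklist, assuming the questions are independent and that a need for isolation between Tanzu Kubernetes clusters implies the multiple Workload Networks topology; the `Requirements` fields and `choose_topology` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Answers to the checklist (field names are hypothetical)."""
    isolate_supervisor_from_tkcs: bool  # layer 2 isolation: Supervisor vs. TKCs
    isolate_tkcs_from_each_other: bool  # further layer 2 isolation between TKCs
    block_direct_node_access: bool      # keep clients off control plane VMs and nodes


def choose_topology(req: Requirements) -> tuple[str, str]:
    """Return (Workload Network topology, HAProxy NIC configuration)."""
    if req.isolate_tkcs_from_each_other:
        # A dedicated Workload Network per namespace plus a dedicated Primary.
        network = "multiple Workload Networks"
    elif req.isolate_supervisor_from_tkcs:
        # Separate Primary and Workload Networks.
        network = "isolated Workload Network"
    else:
        # One Workload Network serves all components.
        network = "one Workload Network"
    # The three-NIC (Frontend) configuration is the one recommended for production.
    haproxy = "three NICs (Frontend)" if req.block_direct_node_access else "two NICs (Default)"
    return network, haproxy


if __name__ == "__main__":
    prod = Requirements(isolate_supervisor_from_tkcs=True,
                        isolate_tkcs_from_each_other=True,
                        block_direct_node_access=True)
    print(choose_topology(prod))
    # ('multiple Workload Networks', 'three NICs (Frontend)')
```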

Considerations for Using the HAProxy Load Balancer with vSphere with Tanzu

Keep in mind the following considerations when configuring vSphere with Tanzu with the HAProxy load balancer.

- A support contract is required with HAProxy to get technical support for the HAProxy load balancer. VMware GSS cannot provide support for the HAProxy appliance.

- The HAProxy appliance is a singleton with no possibility for a highly available topology. For highly available environments, VMware recommends that you use either a full installation of NSX or the NSX Advanced Load Balancer.

- You cannot expand the IP address range used for the frontend later, so size the network for all anticipated future growth.
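Because the frontend range cannot be expanded later, it helps to estimate VIP demand up front. This Python sketch assumes one VIP for the Supervisor Cluster API endpoint, one per Tanzu Kubernetes cluster control plane, and one per Service of type LoadBalancer. It also conservatively counts only the usable host addresses in the CIDR. The function names and the growth factor are hypothetical; adjust the assumptions to your environment.

```python
import math
from ipaddress import ip_network


def vips_needed(tkc_clusters: int, lb_services: int) -> int:
    """Assumed VIP demand: 1 for the Supervisor Cluster API endpoint,
    1 per Tanzu Kubernetes cluster control plane, 1 per LoadBalancer Service."""
    return 1 + tkc_clusters + lb_services


def usable_vips(vip_cidr: str) -> int:
    """Conservatively count only host addresses (excludes the network and
    broadcast addresses for prefixes shorter than /31)."""
    return sum(1 for _ in ip_network(vip_cidr).hosts())


def vip_range_fits(vip_cidr: str, tkc_clusters: int, lb_services: int,
                   growth: float = 1.0) -> bool:
    """True if the range covers today's demand scaled by a growth factor."""
    needed = math.ceil(vips_needed(tkc_clusters, lb_services) * growth)
    return needed <= usable_vips(vip_cidr)


if __name__ == "__main__":
    vip_cidr = "192.168.120.128/25"  # VIP range from the example configuration
    print(usable_vips(vip_cidr))                         # 126
    print(vip_range_fits(vip_cidr, 10, 40, growth=2.0))  # True: 102 <= 126
    print(vip_range_fits(vip_cidr, 20, 60, growth=2.0))  # False: 162 > 126
```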