You can customize the Tanzu Kubernetes Grid Service with global settings for key features, including the container network interface (CNI), proxy server, and TLS certificates. Be aware of trade-offs and considerations when implementing global versus per-cluster functionality.

TkgServiceConfiguration v1alpha2 Specification

The TkgServiceConfiguration specification provides fields for configuring the Tanzu Kubernetes Grid Service instance.

```yaml
apiVersion: run.tanzu.vmware.com/v1alpha2
kind: TkgServiceConfiguration
metadata:
  name: tkg-service-configuration-spec
spec:
  defaultCNI: string
  proxy:
    httpProxy: string
    httpsProxy: string
    noProxy: [string]
  trust:
    additionalTrustedCAs:
      - name: string
        data: string
  defaultNodeDrainTimeout: time
```

The TkgServiceConfiguration specification applies to all Tanzu Kubernetes clusters provisioned by the service. If a rolling update is initiated, either manually or by an upgrade, clusters are updated with the changed service specification.
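As a rough illustration of how the fields above fit together, the following Python sketch assembles a TkgServiceConfiguration manifest as a dictionary and prints it as JSON. The function name `build_tkg_service_configuration` and all sample values (proxy URL, CIDRs) are hypothetical. Optional sections are emitted only when supplied, on the assumption that an omitted `proxy` or `trust` stanza simply leaves that feature unconfigured, as the annotated specification in the next section describes.

```python
import json


def build_tkg_service_configuration(default_cni="antrea", proxy=None,
                                    trusted_cas=None, node_drain_timeout=None):
    """Assemble a TkgServiceConfiguration manifest as a plain dict.

    Hypothetical helper: optional sections are added only when supplied,
    so an omitted proxy or trust stanza is left out of the manifest.
    """
    spec = {"defaultCNI": default_cni}  # antrea is the documented default
    if proxy is not None:
        spec["proxy"] = proxy
    if trusted_cas:
        spec["trust"] = {"additionalTrustedCAs": trusted_cas}
    if node_drain_timeout is not None:
        spec["defaultNodeDrainTimeout"] = node_drain_timeout
    return {
        "apiVersion": "run.tanzu.vmware.com/v1alpha2",
        "kind": "TkgServiceConfiguration",
        "metadata": {"name": "tkg-service-configuration-spec"},
        "spec": spec,
    }


if __name__ == "__main__":
    manifest = build_tkg_service_configuration(
        default_cni="calico",
        # Hypothetical proxy endpoint and CIDRs, for illustration only.
        proxy={
            "httpProxy": "http://user:pwd@192.0.2.10:3128",
            "httpsProxy": "http://user:pwd@192.0.2.10:3128",
            "noProxy": ["10.244.0.0/20", "192.0.2.0/24"],
        },
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON manifests as well as YAML, the printed output could be piped to `kubectl apply -f -`; this is one possible workflow, not a documented procedure.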

Annotated TkgServiceConfiguration v1alpha2 Specification

The annotated specification below describes each TkgServiceConfiguration parameter. For examples, see Examples for Configuring the Tanzu Kubernetes Grid Service v1alpha1 API.

```yaml
apiVersion: run.tanzu.vmware.com/v1alpha2
kind: TkgServiceConfiguration
# valid config key must consist of alphanumeric characters, '-', '_' or '.'
metadata:
  name: tkg-service-configuration-spec
spec:
  # defaultCNI is the default CNI for all Tanzu Kubernetes
  # clusters to use unless overridden on a per-cluster basis
  # supported values are antrea, calico, antrea-nsx-routed
  # defaults to antrea
  defaultCNI: string
  # proxy configures a proxy server to be used inside all
  # clusters provisioned by this TKGS instance
  # if implemented, all fields are required
  # if omitted, no proxy is configured
  proxy:
    # httpProxy is the proxy URI for HTTP connections
    # to endpoints outside the clusters
    # takes the form http://<user>:<pwd>@<ip>:<port>
    httpProxy: string
    # httpsProxy is the proxy URI for HTTPS connections
    # to endpoints outside the clusters
    # takes the form http://<user>:<pwd>@<ip>:<port>
    httpsProxy: string
    # noProxy is the list of destination domain names, domains,
    # IP addresses, and other network CIDRs to exclude from proxying
    # must include Supervisor Cluster Pod, Egress, Ingress CIDRs
    noProxy: [string]
  # trust configures additional trusted certificates
  # for the clusters provisioned by this TKGS instance
  # if omitted, no additional certificate is configured
  trust:
    # additionalTrustedCAs are additional trusted certificates
    # can be additional CAs or end certificates
    additionalTrustedCAs:
      # name is the name of the additional trusted certificate
      # must match the name used in the filename
      - name: string
        # data holds the contents of the additional trusted cert
        # PEM Public Certificate data encoded as a base64 string
        data: string
  # defaultNodeDrainTimeout is the total amount of time the
  # controller spends draining a node; default is undefined
  # which is the value of 0, meaning the node is drained
  # without any time limitations; note that `nodeDrainTimeout`
  # is different from `kubectl drain --timeout`
  defaultNodeDrainTimeout: time
```
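The `data` field expects the PEM certificate encoded as a base64 string. A minimal Python sketch of building one `additionalTrustedCAs` entry follows. It assumes that the entire PEM file, including the `BEGIN` and `END` lines, is what gets encoded; the function name `encode_trusted_ca` is hypothetical.

```python
import base64


def encode_trusted_ca(name, pem_text):
    """Build one additionalTrustedCAs entry from PEM certificate text.

    Assumption: the whole PEM block (BEGIN/END lines included) is
    base64-encoded into `data`, not just the inner certificate body.
    """
    if "-----BEGIN CERTIFICATE-----" not in pem_text:
        raise ValueError("expected PEM certificate text")
    # b64encode emits a single line with no wrapping.
    data = base64.b64encode(pem_text.encode("ascii")).decode("ascii")
    return {"name": name, "data": data}
```

For example, `encode_trusted_ca("registry-ca", open("registry-ca.crt").read())` would produce an entry for a hypothetical `registry-ca.crt` file. On Linux, `base64 -w 0 registry-ca.crt` yields the same single-line string.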

Proxy Server Configuration Requirements

- The required

proxyparameters arehttpProxy,httpsProxy, andnoProxy. If you add theproxystanza, all three fields are mandatory. - You can connect to the proxy server using HTTP. HTTPS connections are not supported.

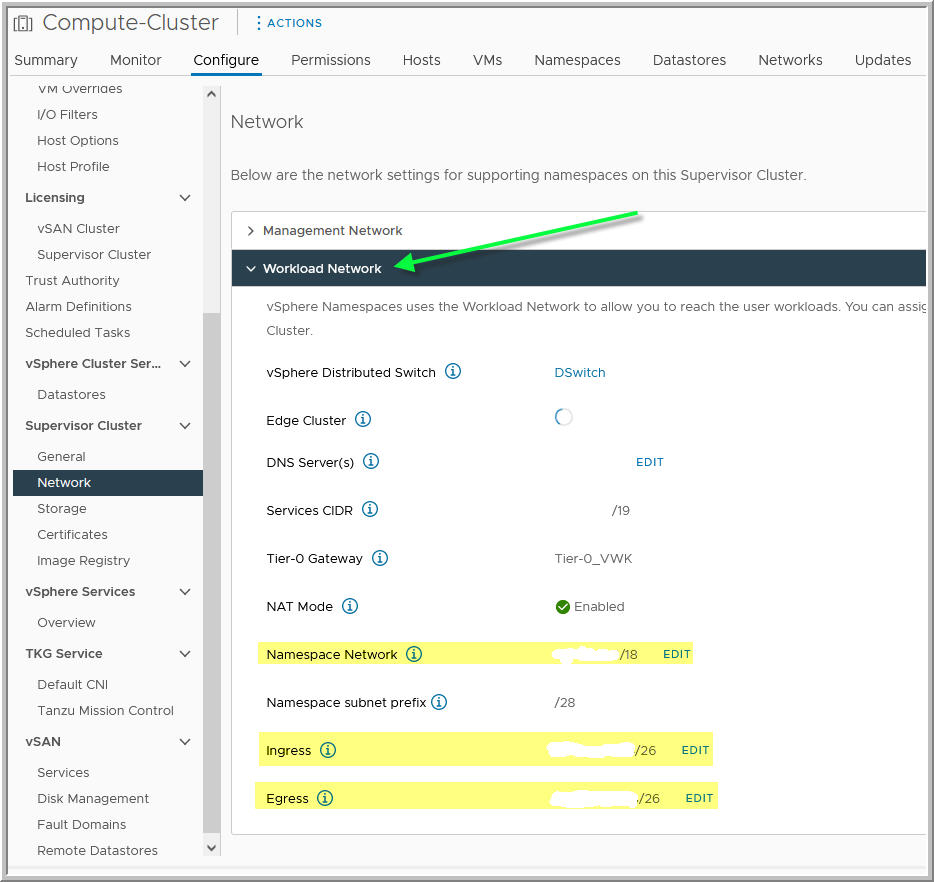

- You obtain the required

spec.proxy.noProxyvalues from the Workload Network. You must not proxy the Namespace Network (formerly called Pods CIDRs), Ingress (formerly called Ingress CIDRs), and Egress (formerly called Egress CIDRs) by including them in thenoProxyfield. Refer to the example images below. - You do not need to include the Services CIDR in the

noProxyfield. Tanzu Kubernetes clusters do not interact with this subnet. - You do not need to include the

network.services.cidrBlocksand thenetwork.pods.cidrBlocksvalues from the Tanzu Kubernetes cluster specification in thenoProxyfield. These subnets are automatically not proxied for you. - You do not need to include

localhostand127.0.0.1in thenoProxyfield. The endpoints are automatically not proxied for you.
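The requirements above can be checked before you apply a configuration. The Python sketch below assumes the `proxy` stanza has already been loaded into a dictionary and that you supply the Namespace Network, Ingress, and Egress CIDRs from the Workload Network yourself. The function name `validate_proxy` is hypothetical, and it checks only the rules listed here. Treating a broader `noProxy` CIDR that covers a workload CIDR as sufficient is an assumption about how exclusions are matched.

```python
import ipaddress
from urllib.parse import urlparse

REQUIRED_FIELDS = ("httpProxy", "httpsProxy", "noProxy")


def _covered(network, excluded):
    """True if `network` equals or falls inside any excluded network."""
    return any(
        network.version == ex.version and network.subnet_of(ex)
        for ex in excluded
    )


def validate_proxy(proxy, workload_cidrs):
    """Return a list of problems found in a spec.proxy stanza (hypothetical)."""
    # If the proxy stanza is present, all three fields are mandatory.
    missing = [f for f in REQUIRED_FIELDS if f not in proxy]
    if missing:
        return [f"missing field: {f}" for f in missing]

    problems = []
    # Connections to the proxy server itself must use HTTP, not HTTPS.
    for field in ("httpProxy", "httpsProxy"):
        if urlparse(proxy[field]).scheme != "http":
            problems.append(f"{field} must use an http:// URI")

    # Collect the CIDR and IP entries; domain names are skipped here.
    excluded = []
    for entry in proxy["noProxy"]:
        try:
            excluded.append(ipaddress.ip_network(entry, strict=False))
        except ValueError:
            continue

    # Namespace Network, Ingress, and Egress CIDRs must not be proxied.
    for cidr in workload_cidrs:
        if not _covered(ipaddress.ip_network(cidr, strict=False), excluded):
            problems.append(f"noProxy is missing workload CIDR {cidr}")
    return problems
```

An empty list means the stanza passed these checks; it says nothing about whether the proxy itself is reachable.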

When To Use Global or Per-Cluster Configuration Options

The TkgServiceConfiguration is a global specification that impacts all Tanzu Kubernetes clusters provisioned by the Tanzu Kubernetes Grid Service instance.

Before you edit the TkgServiceConfiguration specification, be aware of the per-cluster alternatives that might satisfy your use case instead of a global configuration.

| Setting | Global Option | Per-Cluster Option |
|---|---|---|
| Default CNI | Edit the TkgServiceConfiguration spec. See Examples for Configuring the Tanzu Kubernetes Grid Service v1alpha1 API. | Specify the CNI in the cluster specification. For example, Antrea is the default CNI; to use Calico, specify it in the cluster YAML. See Examples for Provisioning Tanzu Kubernetes Clusters Using the Tanzu Kubernetes Grid Service v1alpha1 API. |
| Proxy Server | Edit the TkgServiceConfiguration spec. See Examples for Configuring the Tanzu Kubernetes Grid Service v1alpha1 API. | Include the proxy server configuration parameters in the cluster spec. See Examples for Provisioning Tanzu Kubernetes Clusters Using the Tanzu Kubernetes Grid Service v1alpha1 API. |
| Trust Certificates | Edit the TkgServiceConfiguration spec. There are two use cases: configuring an external container registry and certificate-based proxy configuration. See Examples for Configuring the Tanzu Kubernetes Grid Service v1alpha1 API. | Include custom certificates on a per-cluster basis, or override the globally set trust settings in the cluster specification. See Examples for Provisioning Tanzu Kubernetes Clusters Using the Tanzu Kubernetes Grid Service v1alpha1 API. |

If you configure a global proxy in the TkgServiceConfiguration, that proxy information is propagated to the cluster manifest after the initial deployment of the cluster. The global proxy configuration is added to the cluster manifest only if no proxy configuration fields are present when the cluster is created. In other words, per-cluster configuration takes precedence over, and overwrites, a global proxy configuration. For more information, see Configuration Parameters for the Tanzu Kubernetes Grid Service v1alpha1 API.
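The precedence rule can be expressed as a small function. This Python sketch assumes the TanzuKubernetesCluster `spec` is available as a dictionary and reads per-cluster proxy settings from `settings.network.proxy`, the same path targeted by the patch commands later in this topic. The function name `effective_proxy` is hypothetical, and it models only the documented rule, not the controller's actual implementation.

```python
def effective_proxy(cluster_spec, global_proxy):
    """Return the proxy settings a cluster ends up with (hypothetical model).

    The global proxy applies only when the cluster spec carries no proxy
    settings of its own; otherwise the per-cluster settings take precedence.
    """
    settings = cluster_spec.get("settings") or {}
    network = settings.get("network") or {}
    cluster_proxy = network.get("proxy")
    # None or an empty stanza both count as "no proxy fields present".
    if cluster_proxy:
        return cluster_proxy
    return global_proxy
```

Under this model, clearing the per-cluster `proxy` field makes the cluster fall back to the global value.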

Before editing the TkgServiceConfiguration specification, be aware of the ramifications of applying the setting at the global level.

| Field | Applied | Impact on Existing Clusters If Added/Changed | Per-Cluster Overriding on Cluster Creation | Per-Cluster Overriding on Cluster Update |
|---|---|---|---|---|
| `defaultCNI` | Globally | None | Yes, you can override the global setting on cluster creation | No, you cannot change the CNI for an existing cluster; if you used the globally set default CNI on cluster creation, it cannot be changed |
| `proxy` | Globally | None | Yes, you can override the global setting on cluster creation | Yes, with U2+, you can override the global setting on cluster update |
| `trust` | Globally | None | Yes, you can override the global setting on cluster creation | Yes, with U2+, you can override the global setting on cluster update |
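The override rules in the table can be encoded as a lookup for use in tooling. In this Python sketch, `OVERRIDES` and `can_override` are hypothetical names, and the `supports_update_override` flag is a hypothetical stand-in for the "U2+" requirement; how you determine that in your environment is outside its scope.

```python
# Encodes the table above: whether a per-cluster value may override the
# global TkgServiceConfiguration setting at cluster creation or update.
OVERRIDES = {
    "defaultCNI": {"create": True, "update": False},
    "proxy": {"create": True, "update": True},  # update requires U2+
    "trust": {"create": True, "update": True},  # update requires U2+
}


def can_override(field, phase, supports_update_override=True):
    """Return True if `field` can be overridden per cluster in `phase`.

    `phase` is "create" or "update". `supports_update_override` is a
    hypothetical flag modeling the U2+ requirement for update overrides.
    """
    allowed = OVERRIDES[field][phase]
    if phase == "update":
        # defaultCNI is never updatable; proxy and trust need U2+.
        return allowed and supports_update_override
    return allowed
```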

Propagating Global Configuration Changes to Existing Clusters

Settings made at the global level in the TkgServiceConfiguration may not be automatically propagated to existing clusters. For example, if you make changes to either the proxy or the trust settings in the TkgServiceConfiguration, such changes may not affect clusters that are already provisioned.

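To manually propagate a global change to an existing cluster, patch the Tanzu Kubernetes cluster so that it inherits the changes made to the TkgServiceConfiguration. Each of the following commands uses a JSON merge patch (`--type merge`) to set the per-cluster `proxy` or `trust` field to `null`, which removes that field from the cluster specification.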

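```sh
# Remove the per-cluster proxy settings so the cluster inherits the global proxy configuration
kubectl patch tkc <CLUSTER_NAME> -n <NAMESPACE> --type merge -p "{\"spec\":{\"settings\":{\"network\":{\"proxy\": null}}}}"

# Remove the per-cluster trust settings so the cluster inherits the global trust configuration
kubectl patch tkc <CLUSTER_NAME> -n <NAMESPACE> --type merge -p "{\"spec\":{\"settings\":{\"network\":{\"trust\": null}}}}"
```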
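If you script these patches, building the payload with a JSON library avoids the shell escaping in the commands above. The Python sketch below only constructs the `kubectl` argument list and does not run it; the function name `reinherit_patch_args` is hypothetical.

```python
import json


def reinherit_patch_args(cluster, namespace, field):
    """Build the kubectl argv that clears a per-cluster proxy or trust setting.

    Python None serializes to JSON null; under a JSON merge patch
    (--type merge), null removes the key from the cluster spec.
    """
    if field not in ("proxy", "trust"):
        raise ValueError("field must be 'proxy' or 'trust'")
    patch = {"spec": {"settings": {"network": {field: None}}}}
    return [
        "kubectl", "patch", "tkc", cluster,
        "-n", namespace,
        "--type", "merge",
        # Compact separators give a payload like the one in the commands above.
        "-p", json.dumps(patch, separators=(",", ":")),
    ]
```

Passing the returned list to `subprocess.run(args, check=True)` would run `kubectl` without a shell, so the JSON needs no quote escaping.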