You can back up and restore Tanzu Kubernetes cluster workloads using standalone Velero and Restic. This method is an alternative to using the Velero Plugin for vSphere. Choose standalone Velero over the Velero Plugin for vSphere primarily when you require portability. Restic is required for stateful workloads.

Prerequisites

Back Up a Stateless Application Running on a Tanzu Kubernetes Cluster

Backing up a stateless application running on a Tanzu Kubernetes cluster requires the use of Velero.

Back up the application namespace using the --include-namespaces flag. This example assumes that all application components are in the example namespace.

velero backup create example-backup --include-namespaces example

Backup request "example-backup" submitted successfully. Run `velero backup describe example-backup` or `velero backup logs example-backup` for more details.
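The backup request returns before the backup finishes. To wait for the result from a script, you can poll the phase of the Backup custom resource. The hypothetical helper `wait_for_velero_backup` below is a minimal sketch of that approach. It assumes Velero is installed in the velero namespace, as in the commands in this section. It treats Completed as success and Failed, PartiallyFailed, and FailedValidation as failure. The timeout and interval defaults are arbitrary choices.

```shell
# Hypothetical helper (not part of Velero): wait until a Velero Backup
# reaches a terminal phase.
# Usage: wait_for_velero_backup NAME [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
# Returns 0 for Completed, 1 for a failure phase, 2 on timeout.
wait_for_velero_backup() {
  local name="$1" timeout="${2:-300}" interval="${3:-5}" waited=0 phase=""
  while [ "$waited" -lt "$timeout" ]; do
    # Read .status.phase from the Backup custom resource (assumes namespace velero).
    phase=$(kubectl -n velero get backups.velero.io "$name" \
      -o jsonpath='{.status.phase}' 2>/dev/null)
    case "$phase" in
      Completed)
        echo "backup $name: $phase"
        return 0 ;;
      Failed|PartiallyFailed|FailedValidation)
        echo "backup $name: $phase" >&2
        return 1 ;;
    esac
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "backup $name: timed out after ${timeout}s (last phase: ${phase:-unknown})" >&2
  return 2
}
```

For example, `wait_for_velero_backup example-backup 600` waits up to ten minutes before you run `velero backup describe example-backup`.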

velero backup get

velero backup describe example-backup

Check the Velero bucket on your S3-compatible object store, such as the MinIO server, to confirm that the backup files were written.
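If your object store is MinIO, you can script this check with the MinIO client (mc). The helper below is a hypothetical sketch with these assumptions:

- The mc alias defaults to `minio` and the bucket to `velero`. Substitute the ones you configured.
- Velero stores each backup under `backups/<backup-name>/` in the bucket, including a `velero-backup.json` metadata file.
- If you configured a prefix for the backup storage location, you need to add it to the path.

```shell
# Hypothetical helper: succeed if the Velero metadata file for a backup
# exists in a MinIO bucket.
# Usage: backup_in_bucket BACKUP_NAME [MC_ALIAS] [BUCKET]
# MC_ALIAS ("minio") and BUCKET ("velero") defaults are assumptions.
backup_in_bucket() {
  local name="$1" mc_alias="${2:-minio}" bucket="${3:-velero}"
  # List everything Velero wrote for this backup and look for its metadata file.
  mc ls --recursive "$mc_alias/$bucket/backups/$name/" | grep -q 'velero-backup\.json$'
}
```

For example, `backup_in_bucket example-backup && echo "example-backup is in the bucket"`.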

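Optionally, confirm that the Velero custom resource definitions are installed and inspect the backup as a custom resource.

kubectl get crd

kubectl get backups.velero.io -n velero

kubectl describe backups.velero.io example-backup -n velero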

Restore a Stateless Application Running on a Tanzu Kubernetes Cluster

Restoring a stateless application running on a Tanzu Kubernetes cluster requires the use of Velero.

To test the restore, delete the example application by deleting its namespace.

kubectl delete ns example

namespace "example" deleted

velero restore create --from-backup example-backup

Restore request "example-backup-20200721145620" submitted successfully. Run `velero restore describe example-backup-20200721145620` or `velero restore logs example-backup-20200721145620` for more details.
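Velero generates the restore name by appending a timestamp to the backup name. A script therefore needs to read that name from the message above. The hypothetical helper below is a minimal sketch that extracts the name from the text printed by `velero restore create`. It assumes the message format shown above.

```shell
# Hypothetical helper: read the output of `velero restore create` on stdin
# and print the generated restore name (empty if the message does not match).
restore_name_from_output() {
  sed -n 's/^Restore request "\([^"]*\)" submitted successfully\..*$/\1/p'
}
```

Usage: `restore=$(velero restore create --from-backup example-backup | restore_name_from_output)`, then `velero restore describe "$restore"`. Alternatively, you can avoid parsing altogether by choosing the name yourself, for example `velero restore create example-restore --from-backup example-backup`.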

velero restore describe example-backup-20200721145620

velero restore get

kubectl get ns

kubectl get pod -n example

kubectl get svc -n example
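To check the restored pods from a script rather than by reading the `kubectl get pod` output, you can use the hypothetical helper below. It succeeds only if the namespace contains at least one pod and every pod reports the Running phase. Running does not guarantee that every container is ready. For a stricter check, use `kubectl wait --for=condition=Ready pod --all -n example --timeout=120s`.

```shell
# Hypothetical helper: succeed only if NAMESPACE has at least one pod and
# every pod is in phase Running.
all_pods_running() {
  local ns="$1" phases phase count=0
  phases=$(kubectl get pods -n "$ns" -o jsonpath='{.items[*].status.phase}')
  for phase in $phases; do
    count=$((count + 1))
    if [ "$phase" != "Running" ]; then
      echo "namespace $ns: found a pod in phase $phase" >&2
      return 1
    fi
  done
  # An empty namespace is treated as a failed restore.
  [ "$count" -gt 0 ]
}
```

For example, `all_pods_running example && echo "example restored"`.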

Back Up a Stateful Application Running on a Tanzu Kubernetes Cluster

Backing up a stateful application running on a Tanzu Kubernetes cluster involves backing up both the application metadata and the application data stored on a persistent volume. To do this you need both Velero and Restic.

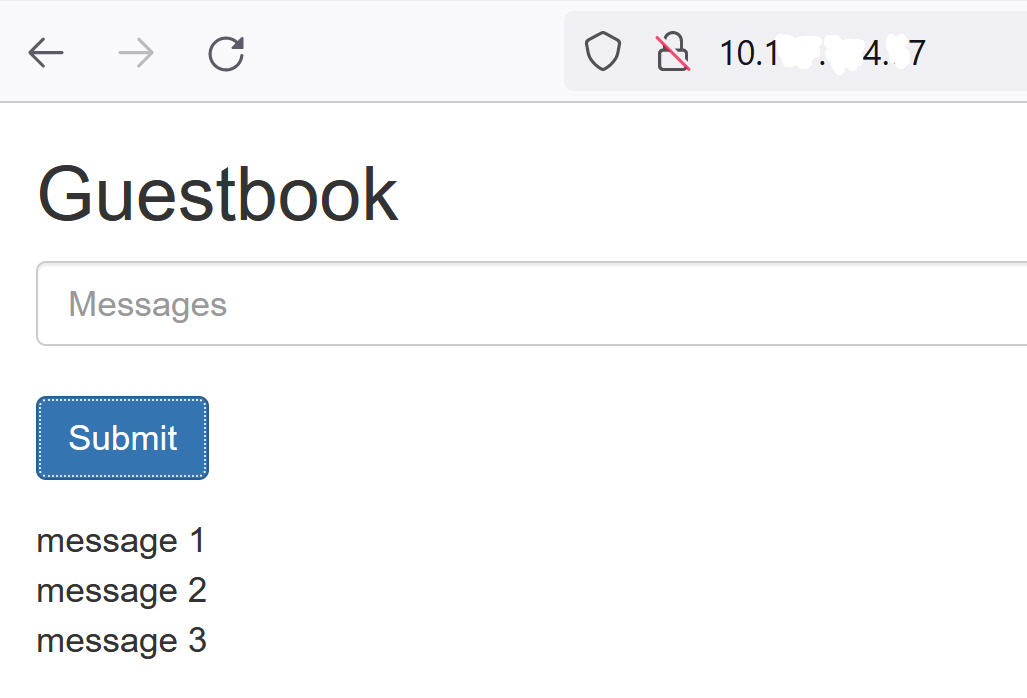

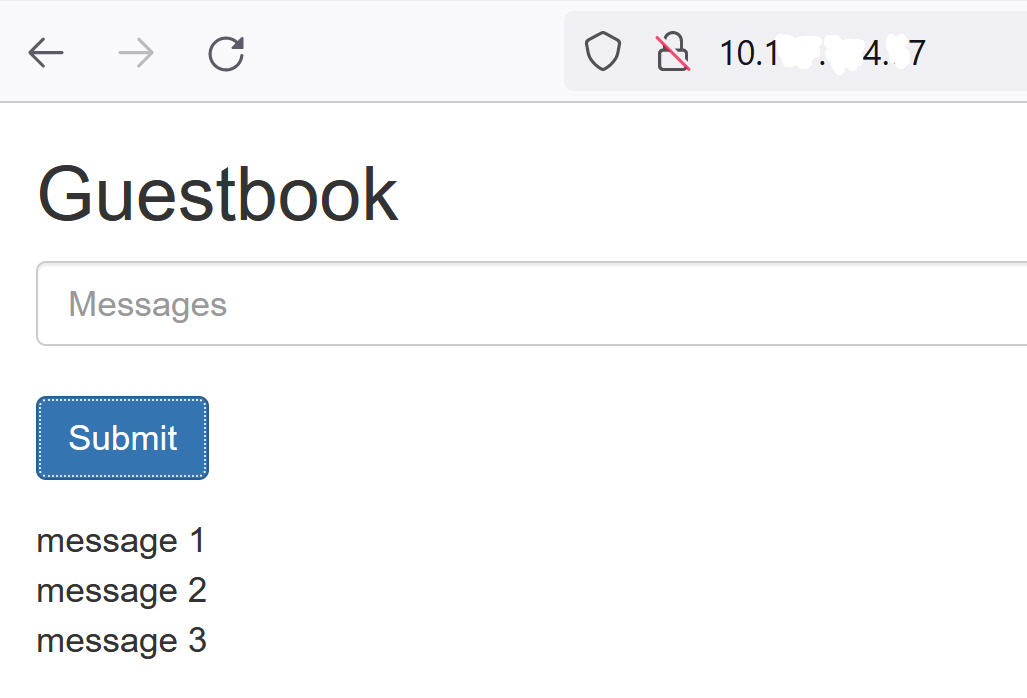

For this example we are going to use the Guestbook application. It is assumed that you have deployed the Guestbook application to a Tanzu Kubernetes cluster. See Tanzu Kubernetes Guestbook Tutorial for guidance.

To demonstrate stateful backup and restore, use the frontend web page to submit some messages to the Guestbook application so that they are persisted. For example:

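Back up the guestbook namespace using the --include-namespaces flag together with pod annotations that tell Restic which volumes to back up.

Alternatively, you can use the --default-volumes-to-restic option when creating the backup. This option automatically backs up all pod volumes, including PVs, using Restic, so the pod annotations are not needed. See https://velero.io/docs/v1.5/restic/ for more information.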
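The alternative in the previous paragraph can be sketched as a small wrapper. The function name is hypothetical. The flag is the one named in the Velero v1.5 documentation linked above. The flag name may differ in later Velero releases, so check `velero backup create --help` for your version.

```shell
# Hypothetical wrapper: back up one namespace and let Velero use Restic for
# every pod volume, with no per-pod annotations (flag as in Velero v1.5).
backup_namespace_with_restic() {
  if [ "$#" -ne 2 ]; then
    echo "usage: backup_namespace_with_restic BACKUP_NAME NAMESPACE" >&2
    return 64
  fi
  velero backup create "$1" --include-namespaces "$2" --default-volumes-to-restic
}
```

For example, `backup_namespace_with_restic guestbook-backup guestbook`.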

kubectl get pod -n guestbook

NAME                                             READY   STATUS    RESTARTS   AGE
guestbook-frontend-deployment-85595f5bf9-h8cff   1/1     Running   0          55m
guestbook-frontend-deployment-85595f5bf9-lw6tg   1/1     Running   0          55m
guestbook-frontend-deployment-85595f5bf9-wpqc8   1/1     Running   0          55m
redis-leader-deployment-64fb8775bf-kbs6s         1/1     Running   0          55m
redis-follower-deployment-84cd76b975-jrn8v       1/1     Running   0          55m
redis-follower-deployment-69df9b5688-zml4f       1/1     Running   0          55m

The persistent volumes are attached to the Redis pods. Because we are backing up these stateful pods with Restic, we need to annotate each stateful pod with the name of its volumeMount.

To find the volumeMount name to use in the annotation, run the following command.

kubectl describe pod redis-leader-deployment-64fb8775bf-kbs6s -n guestbook

In the results you see Containers.leader.Mounts: /data from redis-leader-data. This last token is the volumeMount name to use for the leader pod annotation. For the follower pods it is redis-follower-data. You can also obtain the volumeMount name from the source YAML. In the commands that follow, substitute the pod names from your own cluster.

kubectl -n guestbook annotate pod redis-leader-64fb8775bf-kbs6s backup.velero.io/backup-volumes=redis-leader-data

pod/redis-leader-64fb8775bf-kbs6s annotated

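Verify the annotation on the leader pod.

kubectl -n guestbook describe pod redis-leader-64fb8775bf-kbs6s | grep Annotations

Annotations:  backup.velero.io/backup-volumes: redis-leader-data

Annotate each follower pod in the same way using redis-follower-data, and verify the result. For example:

kubectl -n guestbook annotate pod redis-follower-779b6d8f79-5dphr backup.velero.io/backup-volumes=redis-follower-data

kubectl -n guestbook describe pod redis-follower-779b6d8f79-5dphr | grep Annotations

Annotations:  backup.velero.io/backup-volumes: redis-follower-data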
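Annotating pods one at a time gets tedious when a Deployment has several replicas, such as the two follower pods. The following is a minimal sketch that annotates every pod in a namespace with the names of its PVC-backed volumes. The helper names are hypothetical. The sketch assumes you want Restic to back up only volumes that come from a PersistentVolumeClaim. It uses `--overwrite` so that it can be rerun.

Annotations added directly to pods are lost when their Deployment replaces them. For a lasting setup, put the annotation in the pod template instead.

```shell
# Hypothetical helper: print the comma-separated names of the volumes in POD
# that are backed by a PersistentVolumeClaim (empty if there are none).
pvc_volumes_for_pod() {
  local ns="$1" pod="$2"
  # One line per volume: "<volume-name> <claimName>"; non-PVC volumes have no claimName.
  kubectl get pod "$pod" -n "$ns" \
    -o jsonpath='{range .spec.volumes[*]}{.name}{" "}{.persistentVolumeClaim.claimName}{"\n"}{end}' \
    | awk 'NF == 2 { print $1 }' \
    | paste -sd, -
}

# Hypothetical helper: annotate every pod in NAMESPACE that has PVC-backed
# volumes so that Restic (opt-in mode) backs those volumes up.
annotate_pvc_pods() {
  local ns="$1" pod vols
  for pod in $(kubectl get pods -n "$ns" -o jsonpath='{.items[*].metadata.name}'); do
    vols=$(pvc_volumes_for_pod "$ns" "$pod")
    [ -n "$vols" ] || continue   # skip stateless pods
    kubectl -n "$ns" annotate pod "$pod" --overwrite \
      "backup.velero.io/backup-volumes=$vols"
  done
}
```

For example, `annotate_pvc_pods guestbook` annotates the leader and both follower pods in one pass.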

velero backup create guestbook-backup --include-namespaces guestbook

Backup request "guestbook-backup" submitted successfully. Run `velero backup describe guestbook-backup` or `velero backup logs guestbook-backup` for more details.

velero backup get

NAME               STATUS      ERRORS   WARNINGS   CREATED                         EXPIRES   STORAGE LOCATION   SELECTOR
guestbook-backup   Completed   0        0          2020-07-23 16:13:46 -0700 PDT   29d       default            <none>

velero backup describe guestbook-backup --details

kubectl get backups.velero.io -n velero

NAME               AGE
guestbook-backup   4m58s

kubectl describe backups.velero.io guestbook-backup -n velero
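Besides `velero backup describe guestbook-backup --details`, you can check the Restic side of the backup through the PodVolumeBackup custom resources. Velero creates one of these per backed-up pod volume. The helper below is a hypothetical sketch. It assumes that, as in Velero 1.5:

- each PodVolumeBackup carries the label `velero.io/backup-name`;
- `.spec.volume` records the volume name;
- `.status.phase` records the result.

Check these fields against your Velero version.

```shell
# Hypothetical helper: print "<volume>: <phase>" for each PodVolumeBackup of a
# Velero backup; succeed only if there is at least one and all are Completed.
check_pod_volume_backups() {
  local name="$1"
  kubectl -n velero get podvolumebackups.velero.io \
    -l "velero.io/backup-name=$name" \
    -o jsonpath='{range .items[*]}{.spec.volume}{" "}{.status.phase}{"\n"}{end}' |
    awk '
      NF == 0 { next }
      {
        total++
        print $1 ": " ($2 == "" ? "unknown" : $2)
        if ($2 != "Completed") bad++
      }
      END { exit !(total > 0 && bad == 0) }'
}
```

For example, `check_pod_volume_backups guestbook-backup` should list redis-leader-data and redis-follower-data as Completed.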

Restore a Stateful Application Running on a Tanzu Kubernetes Cluster

Restoring a stateful application running on a Tanzu Kubernetes cluster involves restoring both the application metadata and the application data stored on a persistent volume. To do this you need both Velero and Restic.

This example assumes that you backed up the stateful Guestbook application as described in the previous section.

kubectl delete ns guestbook

namespace "guestbook" deleted

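Verify that the namespace and its persistent volumes have been deleted.

kubectl get ns

kubectl get pvc,pv --all-namespaces

Restore the Guestbook application from the backup.

velero restore create --from-backup guestbook-backup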

Restore request "guestbook-backup-20200723161841" submitted successfully. Run `velero restore describe guestbook-backup-20200723161841` or `velero restore logs guestbook-backup-20200723161841` for more details.

velero restore describe guestbook-backup-20200723161841

Name:         guestbook-backup-20200723161841
Namespace:    velero
Labels:       <none>
Annotations:  <none>
Phase:  Completed
Backup:  guestbook-backup
Namespaces:
  Included:  all namespaces found in the backup
  Excluded:  <none>
Resources:
  Included:        *
  Excluded:        nodes, events, events.events.k8s.io, backups.velero.io, restores.velero.io, resticrepositories.velero.io
  Cluster-scoped:  auto
Namespace mappings:  <none>
Label selector:  <none>
Restore PVs:  auto
Restic Restores (specify --details for more information):
  Completed:  3

velero restore get

NAME                              BACKUP             STATUS      ERRORS   WARNINGS   CREATED                         SELECTOR
guestbook-backup-20200723161841   guestbook-backup   Completed   0        0          2020-07-23 16:18:41 -0700 PDT   <none>

kubectl get ns

NAME        STATUS   AGE
default     Active   16d
guestbook   Active   76s
...
velero      Active   2d2h

kubectl get all -n guestbook

NAME                                  READY   STATUS    RESTARTS   AGE
pod/frontend-6cb7f8bd65-h2pnb         1/1     Running   0          6m27s
pod/frontend-6cb7f8bd65-kwlpr         1/1     Running   0          6m27s
pod/frontend-6cb7f8bd65-snwl4         1/1     Running   0          6m27s
pod/redis-leader-64fb8775bf-kbs6s     1/1     Running   0          6m28s
pod/redis-follower-779b6d8f79-5dphr   1/1     Running   0          6m28s
pod/redis-follower-899c7e2z65-8apnk   1/1     Running   0          6m28s

NAME                         TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
service/guestbook-frontend   LoadBalancer   10.10.89.59      10.19.15.99   80:31513/TCP   65s
service/redis-follower       ClusterIP      10.111.163.189   <none>        6379/TCP       65s
service/redis-leader         ClusterIP      10.111.70.189    <none>        6379/TCP       65s

NAME                                            READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/guestbook-frontend-deployment   3/3     3            3           65s
deployment.apps/redis-follower-deployment       1/2     2            1           65s
deployment.apps/redis-leader-deployment         1/1     1            1           65s

NAME                                                       DESIRED   CURRENT   READY   AGE
replicaset.apps/guestbook-frontend-deployment-56fc5b6b47   3         3         3       65s
replicaset.apps/redis-follower-deployment-6fc9cf5759       2         2         1       65s
replicaset.apps/redis-leader-deployment-7d89bbdbcf         1         1         1       65s

kubectl get pvc,pv -n guestbook

NAME                                         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/redis-leader-claim     Bound    pvc-a2f6e6d4-42db-4fb8-a198-5379a2552509   2Gi        RWO            thin-disk      2m40s
persistentvolumeclaim/redis-follower-claim   Bound    pvc-55591938-921f-452a-b418-2cc680c0560b   2Gi        RWO            thin-disk      2m40s

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                            STORAGECLASS   REASON   AGE
persistentvolume/pvc-55591938-921f-452a-b418-2cc680c0560b   2Gi        RWO            Delete           Bound    guestbook/redis-follower-claim   thin-disk               2m40s
persistentvolume/pvc-a2f6e6d4-42db-4fb8-a198-5379a2552509   2Gi        RWO            Delete           Bound    guestbook/redis-leader-claim     thin-disk               2m40s
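You can check the restored claims from a script instead of by eye. The hypothetical helper below lists the PVCs in a namespace and prints any claim that is not Bound. It succeeds only if the namespace has at least one claim and all of them are Bound.

```shell
# Hypothetical helper: succeed only if NAMESPACE has at least one PVC and all
# of its PVCs are in phase Bound; print any claim that is not.
all_pvcs_bound() {
  local ns="$1"
  kubectl get pvc -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.phase}{"\n"}{end}' |
    awk -v ns="$ns" '
      NF == 0 { next }
      { total++ }
      $2 != "Bound" {
        unbound++
        print "PVC " ns "/" $1 " is " ($2 == "" ? "unknown" : $2)
      }
      END { exit !(total > 0 && unbound == 0) }'
}
```

For example, `all_pvcs_bound guestbook && echo "redis claims restored"`.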

Lastly, access the Guestbook frontend using the external IP of the guestbook-frontend service and verify that the messages you submitted at the beginning of the tutorial are restored. For example:
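The external IP appears in the EXTERNAL-IP column of the `kubectl get all` output above. The hypothetical helper below reads it from the Service status and prints a URL. It assumes the service name guestbook-frontend in the guestbook namespace and port 80, as in that output. Some load balancers publish a hostname rather than an IP; on those, you would read `.hostname` instead of `.ip`.

```shell
# Hypothetical helper: print the frontend URL of the Guestbook LoadBalancer.
# Defaults (namespace "guestbook", service "guestbook-frontend") are assumptions.
frontend_url() {
  local ns="${1:-guestbook}" svc="${2:-guestbook-frontend}" ip
  ip=$(kubectl get svc "$svc" -n "$ns" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
  if [ -z "$ip" ]; then
    echo "service $ns/$svc has no external IP yet" >&2
    return 1
  fi
  echo "http://$ip/"
}
```

For example, `curl -fsS "$(frontend_url)" >/dev/null && echo "frontend reachable"`. Then open the same URL in a browser to confirm that your messages are back.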