To migrate virtual machines with vMotion, the virtual machines must meet certain network, disk, CPU, USB, and other device requirements.

The following virtual machine conditions and limitations apply when you use vSphere vMotion:

- The source and destination management network IP address families must match. You cannot migrate a virtual machine from a host that is registered to vCenter Server with an IPv4 address to a host that is registered with an IPv6 address.

- Using 1 GbE network adapters for the vSphere vMotion network might cause the migration to fail when you migrate virtual machines with large vGPU profiles. Use 10 GbE network adapters for the vSphere vMotion network.

- If virtual CPU performance counters are enabled, you can migrate virtual machines only to hosts that have compatible CPU performance counters.

- You can migrate virtual machines that have 3D graphics enabled. If the 3D Renderer is set to Automatic, virtual machines use the graphics renderer that is present on the destination host. The renderer can be the host CPU or a GPU graphics card. To migrate virtual machines with the 3D Renderer set to Hardware, the destination host must have a GPU graphics card.

- Starting with vSphere 6.7 Update 1, vSphere vMotion supports virtual machines with vGPU.

- vSphere DRS supports initial placement of vGPU virtual machines running vSphere 6.7 Update 1 or later without load balancing support.

- You can migrate virtual machines with USB devices that are connected to a physical USB device on the host. You must enable the devices for vSphere vMotion.

- You cannot use vSphere vMotion to migrate a virtual machine that uses a virtual device backed by a device that is not accessible on the destination host. For example, you cannot migrate a virtual machine with a CD drive backed by the physical CD drive on the source host. Disconnect these devices before you migrate the virtual machine.

- You cannot use vSphere vMotion to migrate a virtual machine that uses a virtual device backed by a device on the client computer. Disconnect these devices before you migrate the virtual machine.

Using vSphere vMotion to Migrate vGPU Virtual Machines

You can use vMotion to perform a live migration of NVIDIA vGPU-powered virtual machines without causing data loss.

To enable vMotion for vGPU virtual machines, you need to set the vgpu.hotmigrate.enabled advanced setting to true. For more information about how to configure the vCenter Server advanced settings, see Configure Advanced Settings in the vCenter Server Configuration documentation.

In vSphere 6.7 Update 1 and vSphere 6.7 Update 2, when you migrate vGPU virtual machines with vMotion and vMotion stun time exceeds 100 seconds, the migration process might fail for vGPU profiles with 24 GB frame buffer size or larger. To avoid the vMotion timeout, upgrade to vSphere 6.7 Update 3 or later.

During the stun time, you are unable to access the VM, desktop, or application. Once the migration is completed, access to the VM resumes and all applications continue from their previous state. For information on frame buffer size in vGPU profiles, refer to the NVIDIA Virtual GPU documentation.

The expected VM stun times (the time when the VM is inaccessible to users during vMotion) and the estimated worst-case stun times are listed in the following tables. The expected stun times were tested over a 10 Gb network with NVIDIA Tesla V100 PCIe 32 GB GPUs:

Expected stun times:

| Used vGPU Frame Buffer (GB) | VM Stun Time (sec) |
|---|---|
| 1 | 2 |
| 2 | 4 |
| 4 | 6 |
| 8 | 12 |
| 16 | 22 |
| 32 | 39 |

Estimated worst-case stun times (sec):

| vGPU Memory | VM Memory 4 GB | VM Memory 8 GB | VM Memory 16 GB | VM Memory 32 GB |
|---|---|---|---|---|
| 1 GB | 5 | 6 | 8 | 12 |
| 2 GB | 7 | 9 | 11 | 15 |
| 4 GB | 13 | 14 | 16 | 21 |
| 8 GB | 24 | 25 | 28 | 32 |
| 16 GB | 47 | 48 | 50 | 54 |
| 32 GB | 91 | 92 | 95 | 99 |

- The configured vGPU profile represents an upper bound to the used vGPU frame buffer. In many use cases, the amount of vGPU frame buffer memory used by the VM at any given time is below the assigned vGPU memory in the profile.

- Both the expected and the estimated worst-case stun times are valid only when you migrate a single virtual machine. If you migrate multiple virtual machines concurrently, for example during a manual vSphere remediation process, the stun times are adversely affected.

- The above estimates assume sufficient CPU, memory, PCIe, and network capacity to achieve 10 Gbps migration throughput.
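The worst-case figures above can be kept as a small lookup table, for example to check a planned migration against a maintenance window. The following is a minimal sketch, not a VMware tool: the names `WORST_CASE_STUN_SEC` and `worst_case_stun_sec` are hypothetical, and the values are copied from the worst-case table with no interpolation for sizes that the table does not list.

```python
# Estimated worst-case stun times in seconds, copied from the table above.
# Outer key: vGPU memory in GB. Inner key: VM memory in GB.
# The dictionary and function names are illustrative, not part of any VMware API.
WORST_CASE_STUN_SEC = {
    1: {4: 5, 8: 6, 16: 8, 32: 12},
    2: {4: 7, 8: 9, 16: 11, 32: 15},
    4: {4: 13, 8: 14, 16: 16, 32: 21},
    8: {4: 24, 8: 25, 16: 28, 32: 32},
    16: {4: 47, 8: 48, 16: 50, 32: 54},
    32: {4: 91, 8: 92, 16: 95, 32: 99},
}


def worst_case_stun_sec(vgpu_gb: int, vm_gb: int) -> int:
    """Return the tabulated worst-case stun time in seconds.

    Raises KeyError for sizes that are not listed in the table,
    because the source gives no rule for interpolating between rows.
    """
    return WORST_CASE_STUN_SEC[vgpu_gb][vm_gb]
```

For example, `worst_case_stun_sec(8, 16)` returns 28, the table entry for an 8 GB vGPU profile and 16 GB of VM memory. These values apply to a single migration with 10 Gbps throughput, as noted in the list above.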

DRS supports initial placement of vGPU VMs running vSphere 6.7 Update 1 and later without load balancing support.

VMware vSphere vMotion is supported only with and between compatible NVIDIA GPU device models and NVIDIA GRID host driver versions as defined and supported by NVIDIA. For compatibility information, refer to the NVIDIA Virtual GPU User Guide.

To check compatibility between NVIDIA vGPU host drivers, vSphere, and Horizon, refer to the VMware Compatibility Matrix.

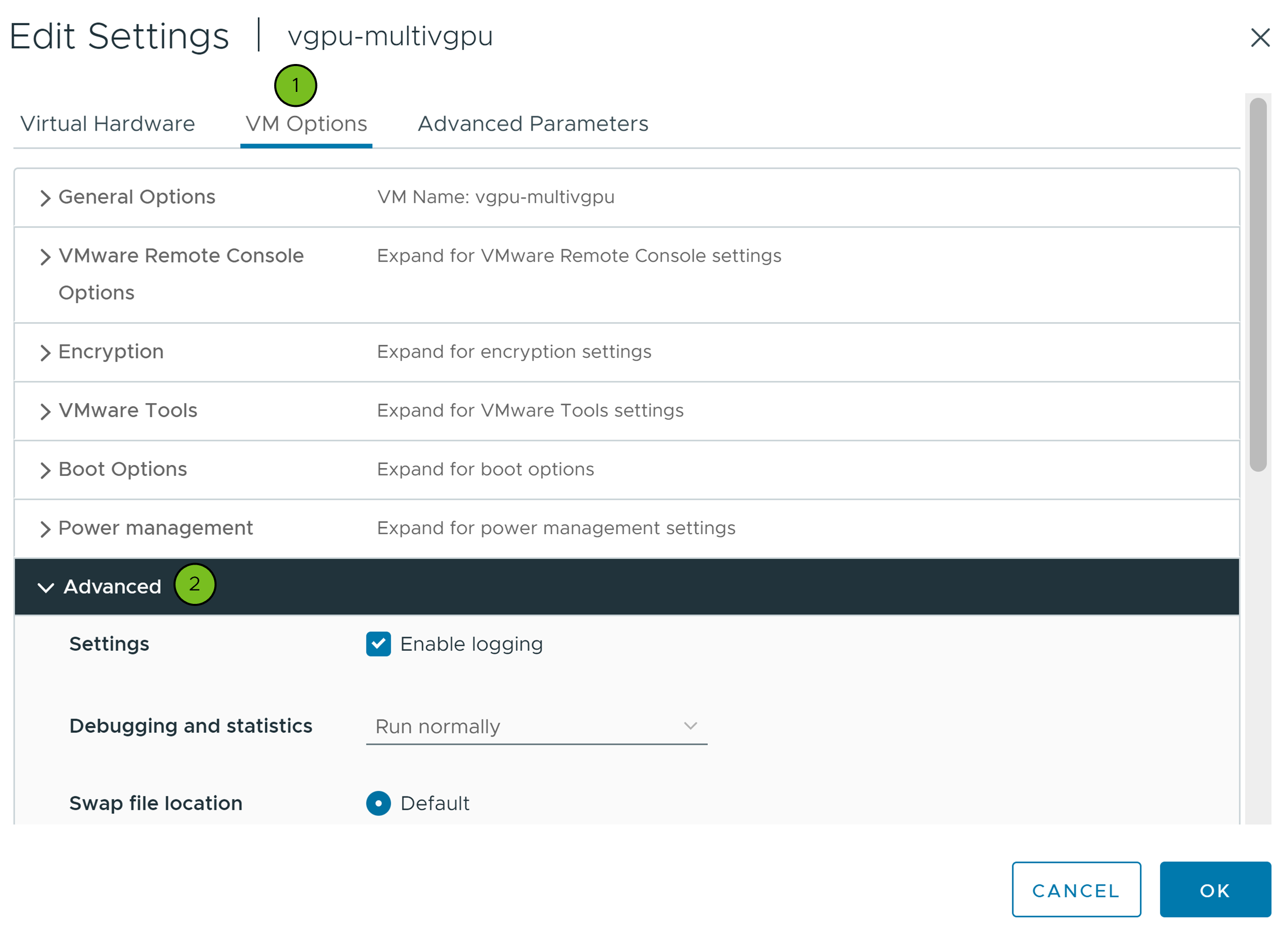

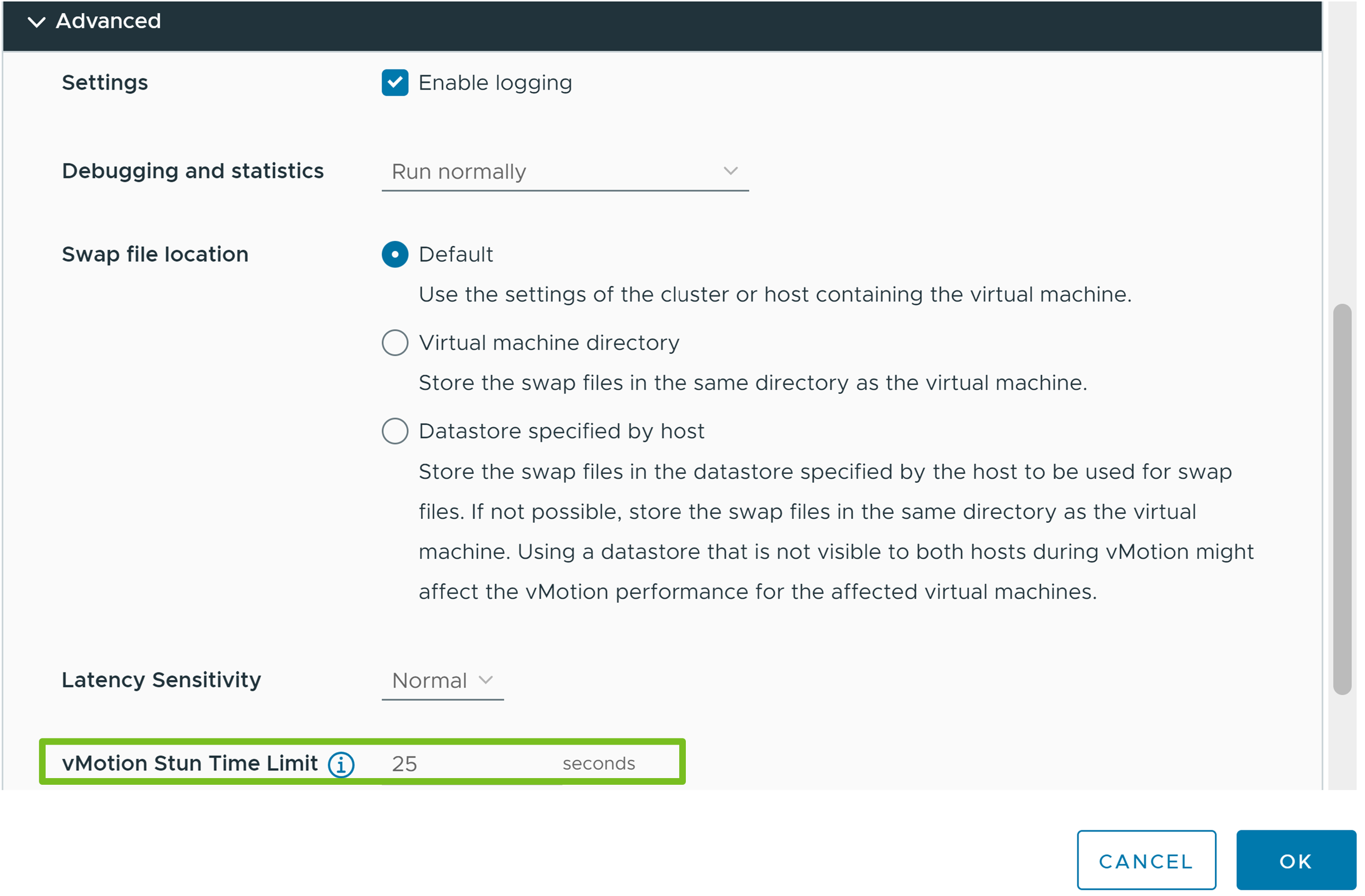

How to Set a Stun Time Limit for Your vGPU Virtual Machines

Learn how to set a stun time limit per virtual machine when you migrate NVIDIA vGPU-powered virtual machines with vSphere vMotion. Setting a stun time limit may prevent vCenter Server from powering on the virtual machine or migrating it to a host and network whose estimated maximum stun time exceeds that limit.

The limit that you set must be higher than the estimated maximum stun time for the current device configuration. In case of multiple PCI devices, the stun time limit that you set must be higher than the sum of the contributions of all PCI devices. Setting a stun time limit that is lower than the estimated maximum stun time may prevent the virtual machine from powering on.

The maximum stun time is calculated on the basis of the bandwidth of the host on which the VM currently runs. The calculation may change at the time of migration if the destination host has lower bandwidth. For example, if a virtual machine is running on a host with a 25 Gbps vMotion NIC, but the destination host has a 10 Gbps vMotion NIC, the maximum stun time calculation at the time of migration is based on the 10 Gbps vMotion NIC.
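The two rules in this section can be sketched as simple checks. This is an illustration under stated assumptions, not the calculation that vCenter Server performs: the function names are hypothetical, and the per-device stun time contributions are treated as inputs because the source does not describe how they are derived.

```python
from typing import Sequence


def limit_is_sufficient(limit_sec: float, device_contributions_sec: Sequence[float]) -> bool:
    """Return True if the limit is strictly higher than the summed per-device estimates.

    device_contributions_sec holds one estimated maximum stun time per PCI device.
    These values are assumed inputs; how they are computed is not covered here.
    """
    return limit_sec > sum(device_contributions_sec)


def governing_bandwidth_gbps(source_gbps: float, destination_gbps: float) -> float:
    """Return the bandwidth that the estimate is based on at migration time.

    Follows the example in the text: when the destination NIC is slower
    than the source NIC, the slower NIC determines the calculation.
    """
    return min(source_gbps, destination_gbps)
```

With two devices that contribute 12 and 21 seconds, a limit of 40 seconds passes the check and a limit of 33 seconds does not, because the limit must be higher than the sum, not equal to it.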

Prerequisites

- Verify that the vCenter Server instance is version 8.0 Update 2.

- Verify that the source and destination ESXi hosts are version 8.0 Update 2.

- Verify that vSphere Cluster Services (vCLS) VMs are in a healthy state. For information about vCLS, see vSphere Cluster Services.

- Verify that the vMotion network is configured through the Quickstart Workflow. For information about configuring the networking options for the vMotion traffic, see How to Configure Your vSphere Cluster by Using the Quickstart Workflow.

- Verify that vMotion is enabled for vGPU virtual machines. The vgpu.hotmigrate.enabled advanced setting must be set to true. For more information about how to configure the vCenter Server advanced settings, see Configure Advanced Settings in the vCenter Server Configuration documentation.

Procedure

Virtual Machines Swap File Location and vSphere vMotion Compatibility

Virtual machine swap file location affects vMotion compatibility in different ways depending on the version of ESXi running on the virtual machine's host.

You can configure ESXi 6.7 or later hosts to store virtual machine swap files with the virtual machine configuration file, or on a local swap file datastore specified for that host.

The location of the virtual machine swap file affects vMotion compatibility as follows:

- For migrations between hosts running ESXi 6.7 and later, vMotion and migrations of suspended and powered-off virtual machines are allowed.

- During a migration with vMotion, if the swap file location on the destination host differs from the swap file location on the source host, the swap file is copied to the new location. This activity can result in slower migrations with vMotion. If the destination host cannot access the specified swap file location, it stores the swap file with the virtual machine configuration file.

See the vSphere Resource Management documentation for information about configuring swap file policies.
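One way to read the swap file rules above is as a small decision function. The sketch below is an interpretation, not VMware's implementation: the function name, the location strings, and the `VM_DIRECTORY` label are hypothetical, and it assumes that a copy is needed whenever the final location differs from the source location.

```python
from typing import Tuple

# Hypothetical label meaning "stored with the virtual machine configuration file".
VM_DIRECTORY = "vm-directory"


def plan_swap_file(source_location: str,
                   destination_location: str,
                   destination_accessible: bool) -> Tuple[str, bool]:
    """Return (final_location, needs_copy) for a migration with vMotion.

    If the destination host cannot access its specified swap file location,
    the swap file is stored with the virtual machine configuration file.
    A copy is assumed whenever the final location differs from the source.
    """
    final_location = destination_location if destination_accessible else VM_DIRECTORY
    needs_copy = final_location != source_location
    return final_location, needs_copy
```

A result with `needs_copy` set to `True` corresponds to the slower migrations that the text describes.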

vSphere vMotion Notifications for Latency Sensitive Applications

Starting with vSphere 8.0, you can notify an application running inside the guest OS of a virtual machine when a vSphere vMotion event starts and finishes. This notification mechanism allows latency sensitive applications to prepare for or delay a vSphere vMotion operation.

For latency sensitive applications, such as VoIP applications and high frequency trading applications, vSphere vMotion and vSphere DRS are usually deactivated. vSphere 8.0 introduces a notification mechanism that lets you notify an application that a vSphere vMotion operation is about to happen, so that the application can take the necessary steps to prepare. vSphere vMotion pauses after generating the start event and waits for the application to acknowledge the start notification before it proceeds.

Enabling the notification mechanism for vSphere vMotion operations might result in an increase of the overall vSphere vMotion time.

How to Configure a Virtual Machine for vSphere vMotion Notifications

By default, the notification mechanism is deactivated. To enable the notification mechanism on a virtual machine, the virtual machine must be of hardware version 20 or above and you must configure the following advanced virtual machine configuration options.

You can use vSphere Web Services API to enable sending notifications to applications running inside the virtual machine, and to specify the maximum period of time in seconds that an application has to prepare for the vMotion operation.

To enable sending notifications to applications running inside the virtual machine, set the vmx.vmOpNotificationToApp.enabled virtual machine property to true.

To specify the maximum period of time in seconds that an application has to prepare for the vMotion operation, use the vmx.vmOpNotificationToApp.timeout virtual machine property. When a vSphere vMotion operation generates a start event, the vMotion operation pauses and waits for an acknowledgment from the application to proceed. vSphere vMotion waits for the notification timeout that you specify.

This property is optional and is not set by default. You can use it to configure a more restrictive timeout for a specific virtual machine. If left unset, the host notification configuration is used.

For more information, see the virtual machine data object properties in the vSphere Web Services API documentation. For information on how to use the vSphere Web Services SDK, see the vSphere Web Services SDK Programming Guide.

How to Configure a Host for vSphere vMotion Notifications

You can use the VmOpNotificationToApp.Timeout advanced host configuration setting to specify a notification timeout that applies to all virtual machines on a host. Use the ConfigManager APIs to set a value for this configuration property. The default notification timeout is 0. In this case, application notifications are generated but the vSphere vMotion operations are not delayed.

If you set a notification timeout on a host and a virtual machine running on the host at the same time, the smaller value is used.
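The interaction between the host-level and virtual machine-level timeouts can be sketched as follows. This is a literal reading of the rules in this topic, not code taken from vSphere: the function name and parameter names are hypothetical.

```python
from typing import Optional


def effective_timeout_sec(host_timeout_sec: int,
                          vm_timeout_sec: Optional[int] = None) -> int:
    """Return the notification timeout that applies to one virtual machine.

    If the virtual machine property is not set (None), the host setting is used.
    If both are set, the smaller value is used.
    """
    if vm_timeout_sec is None:
        return host_timeout_sec
    return min(host_timeout_sec, vm_timeout_sec)
```

For example, with a host timeout of 60 seconds, a virtual machine with no timeout configured waits up to 60 seconds for an acknowledgment, and a virtual machine configured with 30 seconds waits up to 30 seconds.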

For information on how to use the vSphere Web Services SDK, see the vSphere Web Services SDK Programming Guide.

How to Register an Application for vSphere vMotion Notifications

To enable an application to receive notifications for vSphere vMotion events, you must have VMware Tools installed on the virtual machine hosting the application. For more information about installing and configuring VMware Tools, see the VMware Tools documentation.

With vSphere 8.0, you can register only one application per virtual machine. An application that wants to receive vSphere vMotion notifications can use the following guest RPC calls. The application checks for new vMotion events periodically, for example, every 1 or 2 seconds, by using the vm-operation-notification.check-for-event guest RPC call.

For information on how to use guest RPC calls, see the VMware Guest SDK Programming Guide.

| Command | Description |
|---|---|
| vm-operation-notification.register | Registers an application to start receiving notifications for vSphere vMotion events. |
| vm-operation-notification.unregister | Unregisters an application from receiving notifications for vSphere vMotion events. |
| vm-operation-notification.list | Retrieves information about the registered application running on a virtual machine on the host. |
| vm-operation-notification.check-for-event | Retrieves information about the vSphere vMotion event registered at the time of the call. |
| vm-operation-notification.ack-event | Acknowledges a vSphere vMotion start event. |
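The register, poll, acknowledge, and unregister sequence can be sketched as a small loop. This is an illustration only. The `rpc` parameter is a hypothetical callable that sends a guest RPC command with a payload and returns the parsed reply, and the payload and reply field names (`appName`, `uniqueToken`, `eventType`, `operationId`) are assumptions, so check the VMware Guest SDK Programming Guide for the actual message format.

```python
import time
from typing import Any, Callable, Dict

# Hypothetical transport: takes a guest RPC command name and a payload,
# returns the parsed reply. How the call reaches the host is not shown here.
Rpc = Callable[[str, Dict[str, Any]], Dict[str, Any]]


def handle_vmotion_events(rpc: Rpc,
                          prepare: Callable[[], None],
                          app_name: str = "demo-app",
                          poll_interval_sec: float = 1.0,
                          max_polls: int = 10,
                          sleep: Callable[[float], None] = time.sleep) -> int:
    """Register, poll for vMotion events, acknowledge start events, then unregister.

    Returns the number of start events that were acknowledged.
    All payload and reply field names are assumed for illustration.
    """
    registration = rpc("vm-operation-notification.register", {"appName": app_name})
    token = registration.get("uniqueToken")
    acknowledged = 0
    try:
        for _ in range(max_polls):
            event = rpc("vm-operation-notification.check-for-event",
                        {"uniqueToken": token})
            if event.get("eventType") == "start":
                # Let the application get ready before vMotion proceeds.
                prepare()
                rpc("vm-operation-notification.ack-event",
                    {"uniqueToken": token, "operationId": event.get("operationId")})
                acknowledged += 1
            sleep(poll_interval_sec)
    finally:
        # Always unregister, even if polling or prepare() raises.
        rpc("vm-operation-notification.unregister", {"uniqueToken": token})
    return acknowledged
```

The bounded `max_polls` loop keeps the sketch easy to test. A real application would more likely poll for as long as it runs, and would keep the work in `prepare` shorter than the configured notification timeout.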