vSphere with Tanzu supports rolling updates for Supervisors and Tanzu Kubernetes Grid clusters, and for the infrastructure supporting these clusters.

How Supervisors and Tanzu Kubernetes Grid Clusters Are Updated

vSphere with Tanzu uses a rolling update model for Supervisors and Tanzu Kubernetes Grid clusters. The rolling update model ensures that there is minimal downtime for cluster workloads during the update process. Rolling updates include upgrading the Kubernetes software versions and the infrastructure and services supporting the Kubernetes clusters, such as virtual machine configurations and resources, vSphere Namespaces, and custom resources.

Dependency Between Supervisor Updates and Tanzu Kubernetes Grid Cluster Updates

You update the Supervisor and the Tanzu Kubernetes Grid clusters separately. Note, however, that there are dependencies between the two.

Updating a Supervisor will likely trigger a rolling update of the Tanzu Kubernetes Grid clusters deployed there. See Update the Supervisor by Performing a vSphere Namespaces Update.

You may need to update one or more Tanzu Kubernetes Grid clusters before updating a Supervisor if the Tanzu Kubernetes Grid cluster is not compliant with the target Supervisor version. See Verify Tanzu Kubernetes Grid Cluster Compatibility for Upgrade of the Supervisor.

About Supervisor Updates

When you initiate a Supervisor update, the system creates a new control plane VM and joins it to the existing Supervisor control plane. During this phase of the update, the vSphere inventory shows four control plane VMs, because the new, updated VM is added before the out-of-date VM is removed. Objects are migrated from one of the old control plane VMs to the new one, and the old control plane VM is then removed. This process repeats one VM at a time until all control plane VMs are updated. The worker nodes are then updated in a similar rolling fashion. The worker nodes are the ESXi hosts, and the spherelet process on each ESXi host is updated one host at a time.
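The add-then-remove behavior described above can be illustrated with a small simulation. This is only a sketch, not the Supervisor's actual implementation: the function name `rolling_replace`, the VM names, and the starting count of three control plane VMs are assumptions made for illustration (three is inferred from the inventory showing four VMs during the update).

```python
def rolling_replace(control_plane, new_version):
    """Replace control plane VMs one at a time and record the inventory size.

    Hypothetical model: each VM is a dict with a name and a version.
    """
    vms = list(control_plane)
    inventory_sizes = []
    for old_vm in control_plane:
        new_vm = {"name": old_vm["name"] + "-new", "version": new_version}
        vms.append(new_vm)                 # new VM joins the control plane
        inventory_sizes.append(len(vms))   # old and new VMs coexist at this point
        vms.remove(old_vm)                 # old VM is removed after migration
    return vms, inventory_sizes


# Assumed starting state: three control plane VMs on the old version.
old = [{"name": f"cp-{i}", "version": "old"} for i in range(3)]
updated, sizes = rolling_replace(old, "new")
print(sizes)                                # [4, 4, 4]
print([vm["version"] for vm in updated])    # ['new', 'new', 'new']
```

The inventory peaks at four VMs in every iteration and returns to three once the old VM is removed, which matches the behavior described for the vSphere inventory.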

You can choose between the following types of update:

- Update the vSphere Namespaces.

- Update everything, including VMware versions and Kubernetes versions.

Updating vSphere Namespaces

The vSphere Namespaces update workflow consists of the following sequence:

- Upgrade vCenter Server.

- Perform a vSphere Namespaces update (including Kubernetes upgrade).

To perform a vSphere Namespaces update, see Update the Supervisor by Performing a vSphere Namespaces Update.

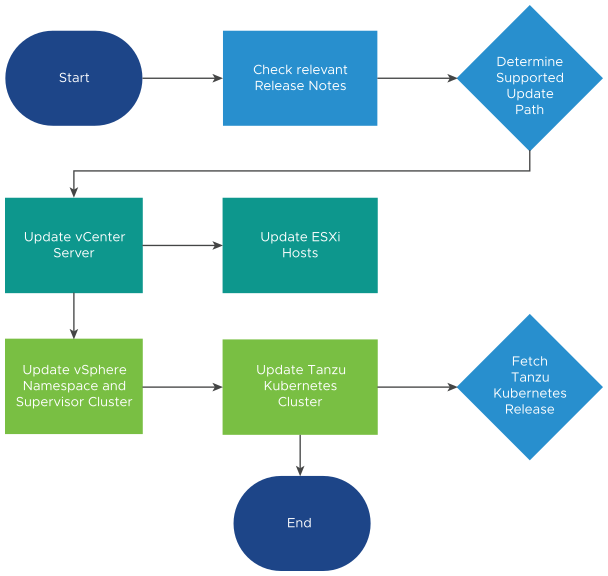

Updating All vSphere with Tanzu Components

You use the update everything workflow to update all vSphere with Tanzu components. This type of update is required when you update across major releases, for example from NSX-T 3.x to 4 and from vSphere 7.x to 8. The workflow sequence is as follows:

- Check the VMware Interoperability Matrix at https://interopmatrix.vmware.com/Interoperability to determine whether vCenter Server and NSX are compatible. vSphere with Tanzu functionality is delivered by the Workload Control Plane (WCP) software, which ships with vCenter Server.

- Upgrade NSX, if compatible.

- Upgrade vCenter Server.

- Upgrade vSphere Distributed Switch.

- Upgrade ESXi hosts.

- Check compatibility of any provisioned Tanzu Kubernetes Grid clusters with the target Supervisor version.

- Update vSphere Namespaces (including the Supervisor Kubernetes version).

- Update Tanzu Kubernetes Grid clusters.
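The workflow above can be tracked as an ordered checklist. The following is a minimal sketch that assumes the steps are performed strictly in the order listed; `UPDATE_SEQUENCE` and `next_step` are hypothetical names and are not part of any VMware tool or API.

```python
# Step labels paraphrase the list above; the names are illustrative only.
UPDATE_SEQUENCE = [
    "Check interoperability of vCenter Server and NSX",
    "Upgrade NSX, if compatible",
    "Upgrade vCenter Server",
    "Upgrade vSphere Distributed Switch",
    "Upgrade ESXi hosts",
    "Check Tanzu Kubernetes Grid cluster compatibility",
    "Update vSphere Namespaces",
    "Update Tanzu Kubernetes Grid clusters",
]


def next_step(completed):
    """Return the next step to perform, or None when the sequence is finished.

    Raises ValueError if the completed steps are not a prefix of the
    sequence, that is, if a step was performed out of order.
    """
    if completed != UPDATE_SEQUENCE[:len(completed)]:
        raise ValueError("steps were completed out of order")
    if len(completed) == len(UPDATE_SEQUENCE):
        return None
    return UPDATE_SEQUENCE[len(completed)]


print(next_step([]))                     # first step in the sequence
print(next_step(UPDATE_SEQUENCE[:2]))    # Upgrade vCenter Server
```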

About Tanzu Kubernetes Grid Cluster Updates

When you update a Supervisor, the infrastructure components supporting the Tanzu Kubernetes Grid clusters deployed on that Supervisor, such as Tanzu Kubernetes Grid 2.0, are likewise updated. Each infrastructure update can include updates for services supporting Tanzu Kubernetes Grid 2.0 (CNI, CSI, CPI), and updated configuration settings for the control plane VMs and worker nodes that can be applied to existing Tanzu Kubernetes Grid clusters. To ensure that your configuration meets compatibility requirements, vSphere with Tanzu performs pre-checks during the rolling update and enforces compliance.

To perform a rolling update of a Tanzu Kubernetes Grid cluster, you update the cluster manifest. See Updating TKG 2 Clusters on Supervisor. Note, however, that when a vSphere Namespaces update is performed, the system immediately propagates updated configurations to all Tanzu Kubernetes Grid clusters. These updates can automatically trigger a rolling update of the Tanzu Kubernetes Grid control plane and worker nodes.

The rolling update process for replacing the cluster nodes is similar to the rolling update of pods in a Kubernetes Deployment. Two distinct controllers are responsible for performing a rolling update of Tanzu Kubernetes Grid clusters: the Add-ons Controller and the TanzuKubernetesCluster controller. Across those two controllers, a rolling update has three key stages: updating add-ons, updating the control plane, and updating the worker nodes. These stages occur in order, with pre-checks that prevent a stage from beginning until the preceding stage has sufficiently progressed. A stage might be skipped if it is determined to be unnecessary. For example, an update might only affect worker nodes and therefore not require any add-on or control plane updates.
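The ordering, gating, and skipping of stages can be sketched as follows. This is an illustrative model only, not the controllers' code: `plan_stages`, `run_update`, and the `stage_ready` callback are hypothetical names, and the pre-check is reduced to a single yes/no answer about the preceding stage.

```python
STAGES = ["add-ons", "control plane", "worker nodes"]


def plan_stages(needs_update):
    """Return the stages to run, in order, skipping those that need no change."""
    return [stage for stage in STAGES if needs_update.get(stage, False)]


def run_update(needs_update, stage_ready):
    """Run stages in order; a pre-check gates each stage on the preceding one.

    stage_ready(stage) is a hypothetical callback that reports whether the
    given stage has progressed far enough for the next stage to begin.
    """
    completed = []
    for stage in plan_stages(needs_update):
        if completed and not stage_ready(completed[-1]):
            break  # preceding stage has not sufficiently progressed
        completed.append(stage)
    return completed


# An update that only affects worker nodes skips the first two stages.
print(run_update({"worker nodes": True}, lambda stage: True))
# ['worker nodes']

# If the control plane has not progressed enough, worker nodes do not start.
everything = {stage: True for stage in STAGES}
print(run_update(everything, lambda stage: stage != "control plane"))
# ['add-ons', 'control plane']
```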

During the update process, the system adds a new cluster node and waits for the node to come online with the target Kubernetes version. The system then marks the old node for deletion, moves to the next node, and repeats the process. The old node is not deleted until all of its pods are removed. For example, if a pod is covered by a PodDisruptionBudget that prevents the node from being fully drained, the node is cordoned off but is not removed until that pod can be evicted. The system upgrades all control plane nodes first, then the worker nodes. During an update, the Tanzu Kubernetes Grid cluster status changes to "updating". After the rolling update process completes, the Tanzu Kubernetes Grid cluster status changes to "running".
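The node replacement loop described above can be modeled in a few lines. This is a simplified sketch under stated assumptions: the node dictionaries, the `role` values, and the `can_evict` callback (standing in for a PodDisruptionBudget check) are all hypothetical, and rescheduling of evicted pods is not modeled.

```python
def rolling_update(nodes, target_version, can_evict):
    """Replace nodes one by one; keep an old node cordoned while pods remain."""
    # Control plane nodes are processed before worker nodes (stable sort).
    ordered = sorted(nodes, key=lambda node: node["role"] != "control-plane")
    result = []
    for old in ordered:
        # A replacement node is added and comes online with the target version.
        result.append({"name": old["name"] + "-new", "role": old["role"],
                       "version": target_version, "state": "ready"})
        # Pods that cannot be evicted keep the old node from being deleted.
        blocked = [pod for pod in old["pods"] if not can_evict(pod)]
        if blocked:
            result.append({"name": old["name"], "role": old["role"],
                           "version": old["version"], "state": "cordoned",
                           "pods": blocked})
    return result


nodes = [
    {"name": "worker-1", "role": "worker", "version": "old", "pods": ["web", "db"]},
    {"name": "cp-1", "role": "control-plane", "version": "old", "pods": []},
]
# Assume a disruption budget currently prevents eviction of the "db" pod.
result = rolling_update(nodes, "new", can_evict=lambda pod: pod != "db")
print([(node["name"], node["state"]) for node in result])
# [('cp-1-new', 'ready'), ('worker-1-new', 'ready'), ('worker-1', 'cordoned')]
```

In this model the old worker node stays in the cluster in a cordoned state because one pod cannot be evicted, while the update has already moved on past it.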

Pods running on a Tanzu Kubernetes Grid cluster that are not governed by a replication controller will be deleted during a Kubernetes version upgrade as part of the worker node drain during the Tanzu Kubernetes Grid cluster update. This is true whether the cluster update is triggered manually or automatically by a vSphere Namespaces update. Pods not governed by a replication controller include pods that are not created as part of a Deployment or ReplicaSet spec. Refer to the topic Pod Lifecycle: Pod lifetime in the Kubernetes documentation for more information.
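Before an update, it can help to identify pods that no controller will recreate. The sketch below assumes pod objects have already been loaded as dictionaries shaped like Kubernetes Pod manifests (for example, from JSON output of a pod listing); the function name `unmanaged_pods` and the sample data are hypothetical. It relies on the standard `metadata.ownerReferences` field, where a managing controller is marked with `controller: true`.

```python
def unmanaged_pods(pods):
    """Return the names of pods that have no controller owner reference."""
    names = []
    for pod in pods:
        owners = pod.get("metadata", {}).get("ownerReferences", [])
        # A pod is managed if any owner reference is flagged as its controller.
        if not any(owner.get("controller") for owner in owners):
            names.append(pod["metadata"]["name"])
    return names


# Hypothetical sample data: one pod owned by a ReplicaSet, one bare pod.
pods = [
    {"metadata": {"name": "web-7d4f9",
                  "ownerReferences": [{"kind": "ReplicaSet", "controller": True}]}},
    {"metadata": {"name": "debug-shell"}},
]
print(unmanaged_pods(pods))  # ['debug-shell']
```

Pods reported by a check like this are the ones that would be deleted, and not recreated, when their worker node is drained.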