How to attach your Kubernetes clusters in VMware Aria Automation for Secure Clouds

VMware Aria Automation for Secure Clouds can monitor your Kubernetes clusters as a separate resource, similar to the cloud providers it already supports. By attaching your Kubernetes clusters, you can take advantage of real-time monitoring for misconfigurations and security violations based on CIS Kubernetes Benchmark standards and build a more complete picture of your organization's security posture in the cloud.

Before you start

Before you try to attach any clusters, review each section here to make sure you have the required system dependencies and a supported Kubernetes version, and to identify your deployment model so you can select the correct process.

System dependencies

The following dependencies are required to successfully add a cluster to VMware Aria Automation for Secure Clouds:

- Access to an environment with kubectl installed.

- The relevant provider CLI.

Local access to your provider CLI is required to set kubectl's current context to your clusters in the public cloud. If you prefer, you can run CLI commands from your provider's cloud shell instead, though you may need to install kubectl there first.
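For managed clusters, setting kubectl's current context is usually a single provider CLI command. The following sketch wraps the standard credential commands for EKS (`aws eks update-kubeconfig`), AKS (`az aks get-credentials`), and GKE (`gcloud container clusters get-credentials`) in a hypothetical helper, `set_cluster_context`, and then prints the resulting context. The helper name and argument order are illustrative assumptions; check your provider's documentation for any extra flags your environment needs.

```bash
# Sketch: point kubectl's current context at a managed cluster.
# Hypothetical helper; the cluster name, region, and resource group are
# placeholders you supply.
#   $1 = provider (eks | aks | gke)
#   $2 = cluster name
#   $3 = region (EKS, GKE) or resource group (AKS)
set_cluster_context() {
  local provider="$1" name="$2" location="$3"
  case "$provider" in
    eks) aws eks update-kubeconfig --name "$name" --region "$location" || return 1 ;;
    aks) az aks get-credentials --name "$name" --resource-group "$location" || return 1 ;;
    gke) gcloud container clusters get-credentials "$name" --region "$location" || return 1 ;;
    *) echo "unsupported provider: $provider" >&2; return 1 ;;
  esac
  # Show the context kubectl will now use for the collector installation.
  kubectl config current-context
}
```

For example, run `set_cluster_context eks my-cluster us-west-2` or `set_cluster_context aks my-cluster my-resource-group`. For a zonal GKE cluster, replace `--region` with `--zone`.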

Supported Kubernetes versions

Version support for Kubernetes is aligned with the active versions of upstream Kubernetes and select vendor distributions. In other words, if you're on a standard (non-experimental) version of Kubernetes that is still maintained and patched by one of the vendor distributions listed in this section, VMware Aria Automation for Secure Clouds supports it as well.

Refer to the support matrix for the Kubernetes distributions that VMware Aria Automation for Secure Clouds currently supports in public cloud and data center environments. The matrix lists the active Kubernetes versions for each distribution.

Some distributions may need additional modification to the installation manifest to onboard correctly. Contact customer support if you require assistance.

Clusters from Kubernetes distributions that are not listed here may not work correctly when added. Please reach out to your product representative if you're interested in getting official support for a different Kubernetes distribution.

Identify your Kubernetes deployment model

VMware Aria Automation for Secure Clouds supports multiple Kubernetes deployment models for cloud and data center environments. In the context of the service, these environments are defined as follows:

- A public cloud environment is one where your Kubernetes clusters are deployed on a natively supported public cloud (AWS, Azure, or GCP). These clusters may be on a managed service offered by the cloud provider or self-managed on cloud provider infrastructure (virtual machines, containers, and so on).

- A data center environment is one where your Kubernetes clusters are deployed somewhere other than the natively supported public clouds. Clusters in a data center environment are considered self-managed in VMware Aria Automation for Secure Clouds.

To see which process you should follow to attach your Kubernetes clusters, review the following deployment scenarios:

- If your Kubernetes clusters are deployed on AKS, EKS, or GKE in their respective public clouds, you should follow the directions for managed service Kubernetes clusters.

- If your Kubernetes clusters are not deployed on AKS, EKS, or GKE but are still hosted in one of those public clouds, you should follow the directions for self-managed Kubernetes clusters in a public cloud.

- If your Kubernetes clusters are deployed in any kind of environment other than the AWS, Azure, or GCP public clouds, you should follow the directions for self-managed Kubernetes clusters in a data center.

How to attach a managed service Kubernetes cluster

You can follow this process for any Kubernetes clusters that are deployed on the AKS, EKS, or GKE platforms.

If your clusters are on a managed service from a cloud provider that isn't natively supported by VMware Aria Automation for Secure Clouds, you can still attach them by creating a Data center cloud account and associating the clusters with it.

Review detected clusters

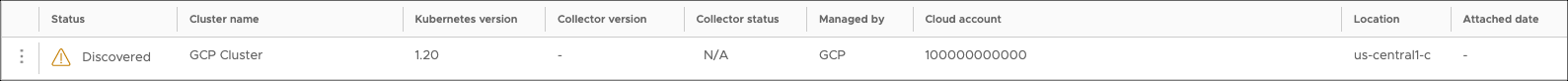

Managed service Kubernetes clusters are automatically detected by VMware Aria Automation for Secure Clouds if they're associated with an existing cloud account. Navigate to Settings > Kubernetes clusters to see the list of detected clusters. Any cluster that has been detected but not yet attached has the Discovered status.

If you have access to more than one context, try viewing this page in each of them. The list updates dynamically to show only clusters associated with cloud accounts scoped to the currently selected context.

If your clusters are deployed on a cloud account that's not currently monitored by the service, follow the appropriate cloud account onboarding process for your provider, then wait a few minutes for the clusters to populate.

Attach a cluster

To attach a cluster, you must install a collector in your Kubernetes environment that sends configuration data back to VMware Aria Automation for Secure Clouds, which can then trigger findings based on active monitoring rules.

To start, click the menu icon to the left of a detected cluster, then click Attach cluster. You can review your cluster information in the dialog window, then click Next.

Your cluster should now be in Pending status. The next step installs the collector, which you can do with either a single YAML file or a Helm chart. Review both methods and decide which is best for your needs.

YAML file installation

Select Single YAML file from the Attach discovered cluster dialog.

Copy the value for COLLECTOR_CLIENT_SECRET to a secure location. The secret isn't displayed again after this screen, so you'll need to detach the cluster and go through these steps again if you lose it.

Copy this command from the UI (include your secret where indicated) and run it in your local or cloud shell:

kubectl create namespace chss-k8s && kubectl create secret generic collector-client-secret --from-literal=COLLECTOR_CLIENT_SECRET='<your_secret>' -n chss-k8s

Download the Kubernetes configuration YAML file supplied by VMware Aria Automation for Secure Clouds. You can click the download icon next to the "View Configuration details (YAML)" link to save it to your local environment. If you're using a provider cloud shell, upload this file through your shell interface before taking the next step.
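As an alternative to pasting your secret into the namespace and secret command above, you can read it into a shell variable first (in bash, for example, `read -rs COLLECTOR_CLIENT_SECRET`) so it doesn't end up in your shell history, then create both objects with a helper like the hypothetical `create_collector_secret` below. It uses the same namespace (`chss-k8s`), secret name, and key as the command from the UI. The `--dry-run=client -o yaml | kubectl apply -f -` pattern is a common way to make the step safe to rerun, not a requirement of the service.

```bash
# Sketch: create the chss-k8s namespace and the collector-client-secret
# Secret from a shell variable instead of a secret pasted into the command.
# Hypothetical helper; assumes COLLECTOR_CLIENT_SECRET is already set.
create_collector_secret() {
  local ns="chss-k8s"
  if [ -z "${COLLECTOR_CLIENT_SECRET:-}" ]; then
    echo "COLLECTOR_CLIENT_SECRET is not set" >&2
    return 1
  fi
  # Render each object client-side and pipe it to apply, so rerunning the
  # function updates existing objects instead of failing with AlreadyExists.
  kubectl create namespace "$ns" --dry-run=client -o yaml | kubectl apply -f - || return 1
  kubectl create secret generic collector-client-secret \
    --from-literal=COLLECTOR_CLIENT_SECRET="$COLLECTOR_CLIENT_SECRET" \
    -n "$ns" --dry-run=client -o yaml | kubectl apply -f -
}
```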

Note: Every YAML configuration file is unique to the specific cluster and collector you're trying to attach. Do not use a different YAML configuration file, even if it's from the same cluster on a previous attempt.

In your CLI, navigate to the directory the YAML file is saved to and run this command:

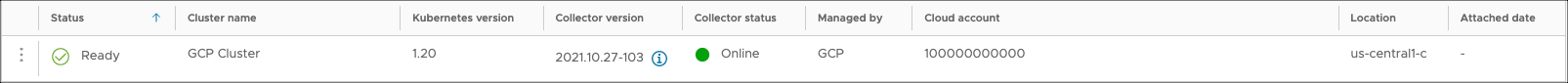

kubectl apply -f <filename.yaml>

Refresh your screen and you should now see your cluster in the Ready status. It may take a few minutes to update.
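To check on the collector from your shell while you wait for the Ready status, you can list its pods and wait for them to become Ready. This sketch assumes the collector workloads run in the `chss-k8s` namespace created earlier (the YAML file defines the actual resources). The `wait_for_collector` name and its 300-second default timeout are illustrative choices.

```bash
# Sketch: list the collector pods, then block until all of them are Ready.
# Hypothetical helper; assumes the collector runs in chss-k8s.
#   $1 = namespace (default chss-k8s)
#   $2 = timeout   (default 300s, an arbitrary example value)
wait_for_collector() {
  local ns="${1:-chss-k8s}" timeout="${2:-300s}"
  kubectl get pods -n "$ns" || return 1
  kubectl wait --for=condition=Ready pod --all -n "$ns" --timeout="$timeout"
}
```

If `kubectl wait` reports that no matching resources were found, the pods may not have been created yet; wait a moment and run it again.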

Helm chart installation

Note: Depending on your shell environment, you may need to install Helm before following these directions.
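If you're not sure which Helm version your shell environment has, you can check before selecting one in the dialog. The hypothetical `helm_major_version` helper below confirms that `helm` is on your `PATH` and extracts the major version from `helm version --short`. The parsing assumes the Helm 3 output format, such as `v3.14.2+gc309b6f`.

```bash
# Sketch: check that Helm is installed and print its major version so you
# can pick the matching Helm version in the dialog. Hypothetical helper.
helm_major_version() {
  if ! command -v helm >/dev/null 2>&1; then
    echo "helm not found; install Helm first" >&2
    return 1
  fi
  local major
  # Keep the digits between the first "v" and the following dot.
  major="$(helm version --short 2>/dev/null |
    sed -n 's/^[^v]*v\([0-9][0-9]*\)\..*/\1/p' | head -n 1)"
  if [ -z "$major" ]; then
    echo "could not parse helm version output" >&2
    return 1
  fi
  printf '%s\n' "$major"
}
```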

Select Helm chart from the Attach discovered cluster dialog.

Select your Helm version.

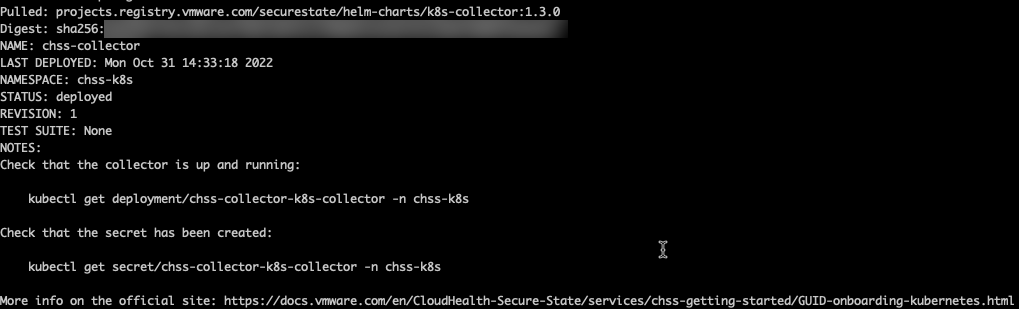

Run the commands provided by the dialog in your shell environment to install the collector. A successful deployment output should resemble the following:

Refresh your screen and you should now see your cluster in the Ready status. It may take a few minutes to update.
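To confirm from your shell that the chart was installed, you can list Helm releases. This sketch assumes the chart installs into the same `chss-k8s` namespace used by the YAML method; if it finds no release there, it falls back to listing releases in all namespaces. The function name is hypothetical.

```bash
# Sketch: show the collector's Helm release. Hypothetical helper; assumes
# the chart was installed into chss-k8s unless another namespace is given.
show_collector_release() {
  local ns="${1:-chss-k8s}"
  if [ -n "$(helm list -n "$ns" -q)" ]; then
    helm list -n "$ns"
  else
    # Nothing found in the expected namespace, so search everywhere.
    echo "no Helm releases in $ns; listing all namespaces" >&2
    helm list --all-namespaces
  fi
}
```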

How to attach a self-managed Kubernetes cluster

Self-managed Kubernetes clusters are any type of cluster that's not deployed on one of the managed Kubernetes services described in the previous section. Some examples of self-managed clusters you can add include:

- Clusters deployed on virtual machines in services like AWS EC2, Azure Compute, or GCP Compute Engine.

- Clusters managed on other Kubernetes distributions like Rancher, Red Hat OpenShift, or VMware Tanzu Kubernetes Grid.

- Clusters deployed in a data center.

How to attach a self-managed Kubernetes cluster in a public cloud

Follow this process if your self-managed Kubernetes clusters are deployed on the AWS, Azure, or GCP public clouds.

Navigate to Settings > Kubernetes clusters.

Click the Attach Self-Managed Cluster button.

Choose a name for your cluster. You can enter any name that isn't currently in use by another cluster for the same cloud account.

Select the cloud account the cluster is associated with, then click Next.

Follow the directions in Attach a cluster to install the collector.

How to attach a self-managed Kubernetes cluster in a data center

Follow this process if your self-managed Kubernetes clusters are not deployed on the AWS, Azure, or GCP public clouds and are instead in a data center or other type of on-premises solution.

A unique step in this procedure is the creation of a Data center cloud account to associate with your Kubernetes clusters. Data center cloud accounts aren't specific to any provider. You can associate one or multiple clusters with a single Data center cloud account.

How you group your clusters affects how you search, explore, and report on their findings, so it's a good idea to plan how you want to organize your clusters in advance. For example, if you want the same project to report on several clusters, group those clusters under one data center cloud account and add that account to the project. Group clusters that you don't want the project to have visibility into under different data center cloud accounts based on their particular relationships (mutual owner, environment, application type, and so on). Considering these factors before you start adding clusters to data center cloud accounts saves time during setup, since the only way to correct a misassociated cluster is to detach it and attach it again.

Navigate to Settings > Cloud accounts.

Select Add Account.

Select Data center, then click Add.

Choose a name and project for your account, add any optional information, then click Save.

Navigate to Settings > Kubernetes clusters.

Click the Attach Self-Managed Cluster button.

Choose a name for your cluster. You can enter any name that isn't currently in use by another cluster for the same cloud account.

For the Cloud Account field, select the data center account you just created, then click Next.

Follow the directions in Attach a cluster to install the collector.

How to manage your Kubernetes clusters

After you've attached your Kubernetes clusters, make sure to maintain them by keeping the collectors updated and detaching clusters once they become outdated. This keeps your deployment in good operational health and gives you access to the latest features for Kubernetes support in VMware Aria Automation for Secure Clouds.

How to update the collector

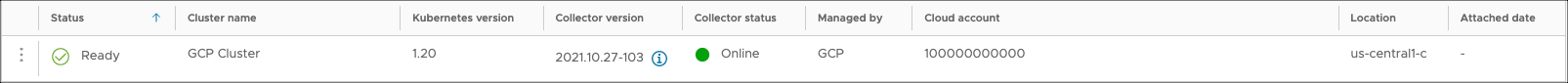

The development team regularly updates the collector for Kubernetes clusters. You can see which clusters need an update by going to the Kubernetes clusters page and reviewing the list of clusters in Ready status. Clusters with outdated collectors have an info icon in the Collector version field that shows you the latest available version. You can also see the total count of collectors that need to be updated at the top of the list.
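To cross-check a collector's version from inside the cluster, you can print the container images its pods are running. This is a sketch under two assumptions: the collector runs in the `chss-k8s` namespace, and its image tag corresponds to the version shown in the Collector version field. The helper name is hypothetical.

```bash
# Sketch: print each pod in the collector namespace with its container
# images, one pod per line (name, tab, images). Hypothetical helper;
# assumes the collector runs in chss-k8s unless another namespace is given.
collector_images() {
  local ns="${1:-chss-k8s}"
  kubectl get pods -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].image}{"\n"}{end}'
}
```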

To update a collector, click the menu icon to the left of your cluster and select Update collector version. You can choose to install the update through a YAML file or Helm chart.

Update with Helm chart

Note: Depending on your shell environment, you may need to install Helm before following these directions.

Select Helm chart from the collector update dialog.

Select your Helm version.

Run the commands provided by the dialog in your shell environment to update the collector.

Refresh your screen and you should now see your cluster with the latest version in the Collector version field. It may take a few minutes to update.

Update with YAML file

Select Single YAML file from the collector update dialog.

Download the Kubernetes configuration YAML file provided in the dialog. You can click the download icon next to the "View Configuration details (YAML)" link to save it to your local environment. If you're using a provider cloud shell, upload this file through your shell interface before taking the next step.

In your CLI, navigate to the directory the YAML file is saved to and run this command:

kubectl apply -f <filename.yaml>

Refresh your screen and you should now see your cluster with the latest version in the Collector version field. It may take a few minutes to update.
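Because an update replaces running collector pods, you may want to wait for the rollout to finish before refreshing the page. This sketch assumes the collector in `chss-k8s` runs as Deployments, DaemonSets, or both, and loops `kubectl rollout status` over each one. The function name and 300-second timeout are illustrative.

```bash
# Sketch: wait for every Deployment and DaemonSet in the collector
# namespace to finish rolling out. Hypothetical helper; assumes the
# collector runs in chss-k8s unless another namespace is given.
wait_for_collector_rollout() {
  local ns="${1:-chss-k8s}" res
  # "-o name" prints entries like deployment.apps/<name>.
  for res in $(kubectl get deployments,daemonsets -n "$ns" -o name); do
    kubectl rollout status "$res" -n "$ns" --timeout=300s || return 1
  done
}
```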

How to detach a cluster

You can detach a cluster in Pending or Ready status after the collector running in the cluster is removed and its Collector Status is Offline. Once the collector is uninstalled and the cluster is detached, it returns to Discovered status. The service stops tracking any findings originating from the detached cluster, similar to disabling a rule.

Run this command in your local or cloud shell to remove the collector.

kubectl delete namespace chss-k8s

Navigate to Settings > Kubernetes clusters.

Locate your cluster and verify the Collector Status is Offline. You may need to wait a few minutes and refresh your screen before the status changes.

Click the menu icon to the left of your cluster and select Detach cluster.

Enter the name of your cluster where prompted and select Detach.

You can reattach the cluster by following the same process as before.
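If you script the collector removal step that comes before detaching, the hypothetical helper below deletes the `chss-k8s` namespace and then confirms it no longer exists, so you know the collector is gone before you check Collector Status in the UI. `kubectl delete` waits for the namespace to finish terminating by default, and `--timeout` bounds that wait; the 300-second value is an arbitrary example.

```bash
# Sketch: remove the collector by deleting its namespace, then verify the
# namespace is gone. Hypothetical helper; defaults to chss-k8s.
remove_collector() {
  local ns="${1:-chss-k8s}"
  # kubectl delete waits for termination by default; --timeout bounds it.
  kubectl delete namespace "$ns" --ignore-not-found --timeout=300s || return 1
  if kubectl get namespace "$ns" >/dev/null 2>&1; then
    echo "namespace $ns still exists" >&2
    return 1
  fi
  echo "namespace $ns removed"
}
```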

How to review findings from your Kubernetes clusters

VMware Aria Automation for Secure Clouds offers two broad types of findings to align your Kubernetes security posture to best practices:

- Findings that are aligned to CIS Kubernetes Benchmark controls and hardening guidance adopted by industry practitioners.

- Findings that are recommended by VMware Aria Automation for Secure Clouds based on best practices promoted by cloud providers.

Findings of the former type are provider-agnostic and can be generated on any type of Kubernetes cluster you deploy, while the latter are specific to the managed Kubernetes service you're using. You can identify provider-specific findings by looking for the managed service (EKS, GKE, or AKS) in the name of the associated rule.

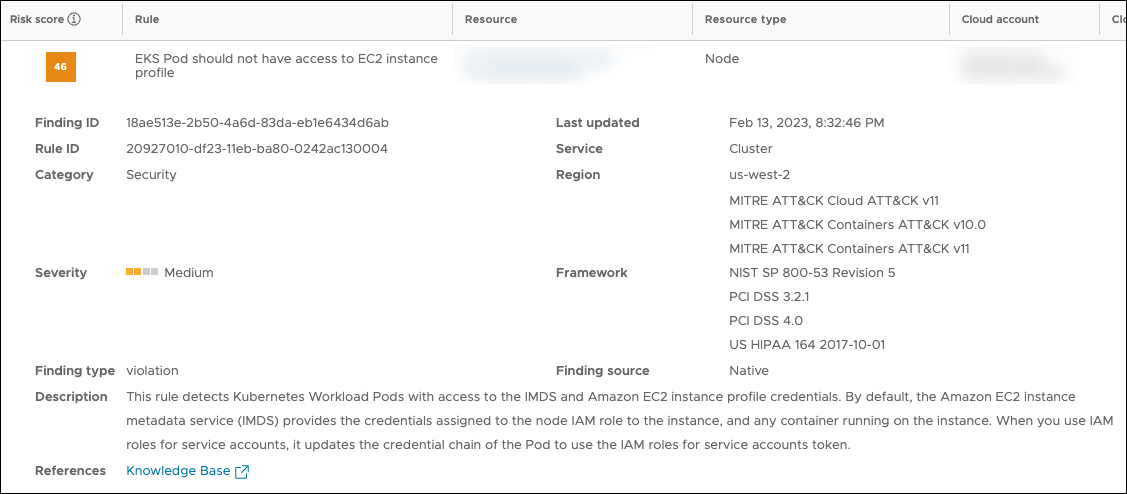

Example of a finding for a provider-managed Kubernetes cluster

Example of a finding for a self-managed Kubernetes cluster

Once your clusters are attached, you can view and filter their findings as you would for any other provider. See the Findings guide for more details.