This topic describes how to deploy application proxy services close to clients to improve performance and security.

Challenge

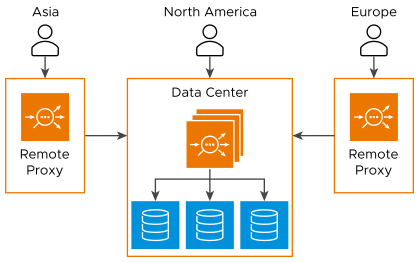

Applications are typically hosted in one or two data centers with clients connecting over the Internet to interact with the apps. For some applications, it is important to minimize the latency. The best way to do this is to move the applications closer to the clients. Since the databases that underpin the apps must remain in the primary data centers, options are limited.

Solution

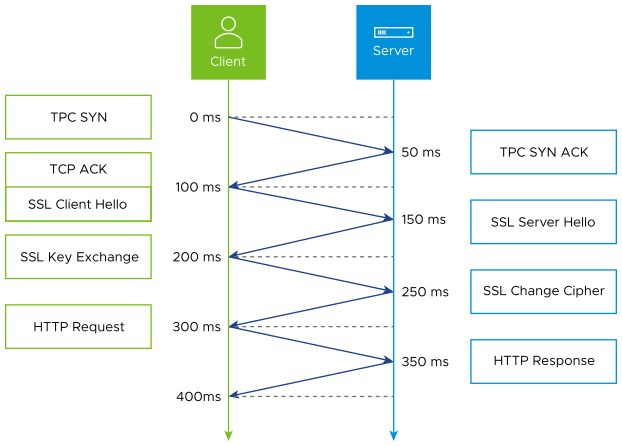

The applications remain unchanged and continue to reside in the primary data centers. Application proxies are deployed close to where the clients reside. Because latency between the clients and the edge proxies is minimal, connection establishment and data transfer are significantly faster. Features such as full TCP and HTTP proxying, SSL termination, caching, compression, and block or allow listing provide better performance for clients and better, distributed security for the applications. In the example latency diagram, with a round-trip time (RTT) of 100 ms, it takes at least 400 ms for a client to receive the first HTML file. When an edge proxy moves content closer to the client and the RTT drops to 30 ms, the first HTML file is received within 120 ms. The time delta grows significantly as additional and larger objects are requested, because they require further TCP ACKs and more round trips.
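The arithmetic behind these figures can be sketched as follows. This is a minimal model, not a measurement: it assumes that fetching the first HTML file costs four round trips, which is the multiplier implied by the 100 ms to 400 ms and 30 ms to 120 ms figures above, and it ignores server processing time and any latency between the edge proxy and the data center. The function name and the `round_trips` parameter are illustrative only.

```python
def time_to_first_file_ms(rtt_ms, round_trips=4):
    """Lower bound on the time to receive the first HTML file.

    round_trips=4 is an assumption inferred from the figures in the text
    (400 ms at a 100 ms RTT); real connections may need more or fewer.
    """
    return rtt_ms * round_trips


direct_ms = time_to_first_file_ms(100)  # client to primary data center
edge_ms = time_to_first_file_ms(30)     # client to nearby edge proxy
saving_ms = direct_ms - edge_ms         # time saved on the first file alone

print(f"direct: {direct_ms} ms, edge: {edge_ms} ms, saved: {saving_ms} ms")
```

Because every additional object adds further round trips, the saving per page is typically larger than the figure for the first file.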

Use Case 1

This use case is relevant for dynamic surges in client demand. For example, a temporary event such as a conference or a sporting event like the Olympics might draw numerous clients to a geographic area.

A temporary data center can be spun up quickly, with a couple of Service Engines provisioned on virtual infrastructure in a public cloud. After the event, the edge proxies can be easily de-provisioned. The primary intent in this variation is acceleration of client experience.

Use Case 2

Another variation is for static clients that regularly access applications. Since these clients are known and trusted, such as remote offices, a proxy can be installed on their premises. When they access the application, their requests are routed through the local proxy, which provides an initial authentication before sending the client requests through an encrypted tunnel back to the central data center over an SD-WAN. The primary intent of this variation is security, with acceleration as a secondary benefit.

Alternatives

For acceleration of the applications, content delivery networks (CDNs) are the most common alternative. CDNs generally require the application's SSL certificates to be handed over to the CDN provider, which is often not viable for the financial industry. They also often carry significant long-term costs.

For the security use case, each connecting client can be configured with a VPN or given a dedicated WAN link.

Architecture

The architecture of the existing data centers remains the same in both use cases. For use case 1, a new data center is brought online. This can be in a public cloud such as AWS or GCP. Two new Service Engines (SEs) are provisioned to provide proxy services. These SEs are managed by the existing Controllers in the primary data center. Each edge proxy location is configured as a separate cloud in the Controller.

Virtual Service: A new virtual service is created for each application to be proxied through the edge proxies. These virtual services can be configured for SSL termination, HTTP caching, access control lists, client authentication, custom DataScripts, or any other feature that might normally be used.

Pool: The proxy virtual services are configured with a pool that contains the virtual service IP addresses of the primary and secondary data centers. With priority load balancing, traffic is sent to the primary data center, and to the secondary only when the primary is down or inaccessible. If multiple active data centers are used, the fastest response load balancing algorithm is preferable.
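The priority behavior described for the pool can be illustrated with a short sketch. It is a conceptual model only, not the Avi Load Balancer implementation or API: the `PoolMember` class, the `select_member` function, the priority values, and the documentation-range IP addresses are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PoolMember:
    name: str
    address: str       # VIP of a data center virtual service (example value)
    priority: int      # assumption: a higher number means more preferred
    healthy: bool = True


def select_member(members):
    """Return the healthy member with the highest priority, or None."""
    candidates = [m for m in members if m.healthy]
    if not candidates:
        return None
    return max(candidates, key=lambda m: m.priority)


pool = [
    PoolMember("primary-dc-vs", "203.0.113.10", priority=10),
    PoolMember("secondary-dc-vs", "198.51.100.10", priority=5),
]

print(select_member(pool).name)  # primary is preferred while it is healthy
pool[0].healthy = False          # simulate the primary going down
print(select_member(pool).name)  # traffic now falls back to the secondary
```

In this model the secondary data center receives traffic only while the primary is marked unhealthy, which mirrors the failover intent described above.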

DC to DC Traffic: Requests forwarded from the edge proxies to the data center virtual services must always be encrypted. Within the proxy virtual service pool, enable server-side SSL so that traffic is encrypted on every hop between the client and the data center.

Object Reuse: Objects such as SSL certificates can be used by any cloud. As a result, updating an SSL certificate (or a similar shared object) updates all edge proxies that use the object.

HTTP Compression: If compression is desired, it must be deactivated on the edge proxies and enabled on the data center virtual service. This way, the compression is only performed once. Once content is compressed at the data center, the compressed content is forwarded back to the clients connected to the edge proxies.

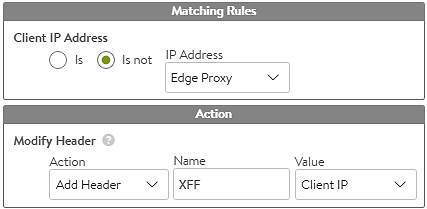

X-Forwarded-For: If application servers require the client IP addresses, the edge proxies must be configured through the HTTP application profile to insert XFF headers. If the virtual service at the primary data center also has XFF header insertion enabled, it will embed the edge proxy’s SNAT IP address. To resolve this, the primary data center virtual service must use a DataScript or HTTP Request policy to selectively add the XFF header based on the source address. If the source is an edge proxy and the XFF already exists, do not add an XFF. If the source is not an edge proxy, add an XFF. For large numbers of edge proxies, put their IP addresses in an IP Group to keep the policy or DataScript cleaner.
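The selective insertion rule for the data center virtual service can be sketched as below. This is plain Python that models the decision, not DataScript or HTTP Request policy syntax. The `EDGE_PROXY_GROUP` list stands in for an IP Group and uses a hypothetical documentation-range subnet. Two behaviors are assumptions because the text does not specify them: a request from an edge proxy that carries no XFF header gets one added, and a request from any other source has the header set to its source address. Header-name matching is also simplified to an exact key.

```python
import ipaddress

# Hypothetical stand-in for an IP Group holding the edge proxies' SNAT addresses.
EDGE_PROXY_GROUP = [ipaddress.ip_network("192.0.2.0/28")]

XFF = "X-Forwarded-For"


def is_edge_proxy(source_ip):
    """True when the source address belongs to the edge proxy group."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in net for net in EDGE_PROXY_GROUP)


def apply_xff(source_ip, headers):
    """Return a copy of the request headers after the selective XFF rule."""
    result = dict(headers)
    if is_edge_proxy(source_ip) and XFF in result:
        # The edge proxy already recorded the real client IP: leave it alone.
        return result
    # Direct client (or, by assumption, an edge proxy request without XFF).
    result[XFF] = source_ip
    return result


via_edge = apply_xff("192.0.2.5", {XFF: "198.51.100.7"})
direct = apply_xff("203.0.113.9", {})
print(via_edge[XFF], direct[XFF])
```

Keeping the edge proxy addresses in one group means that adding a proxy changes only the group contents, not the rule itself.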

Attracting Traffic: On the public Internet, traffic is attracted to the edge proxies through DNS. This can be done with a global load balancer that uses a dynamic algorithm such as geographic location, or with IP Anycast. In private environments, such as in use case 2, DNS can also be used, though static routing might be required instead.
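As a rough illustration of geographic steering, the sketch below answers a lookup with the address of the site nearest to the client. It is a simplified model under stated assumptions, not how any particular global load balancer works: the site names, coordinates, and IP addresses are invented for the example, the client location is assumed to be already known (real systems derive it from a geolocation database), and great-circle distance is used as a stand-in for network proximity.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometers."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


# Hypothetical sites: name -> (virtual service IP, latitude, longitude).
SITES = {
    "edge-paris": ("192.0.2.10", 48.86, 2.35),
    "primary-dc-new-york": ("203.0.113.10", 40.71, -74.01),
}


def resolve(client_lat, client_lon):
    """Return the IP address of the site closest to the client."""
    nearest = min(
        SITES,
        key=lambda name: haversine_km(
            client_lat, client_lon, SITES[name][1], SITES[name][2]
        ),
    )
    return SITES[nearest][0]


print(resolve(51.51, -0.13))   # a client near London
print(resolve(41.88, -87.63))  # a client near Chicago
```

A production deployment would also take site health and load into account before returning an address.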

Return Traffic: By default, Service Engines SNAT traffic at the edge proxy and again at the data center, so responses flow back along the same path. In use case 2, an SD-WAN can be used to create connections from the central data center to each remote location. The Avi Load Balancer sends all responses back to the MAC address that sent the request to the Service Engine.