In the design of the ESXi host configuration for your VMware Cloud Foundation environment, consider the resources, networking, and security policies that are required to support the virtual machines in each workload domain cluster.

Logical Design for ESXi for VMware Cloud Foundation

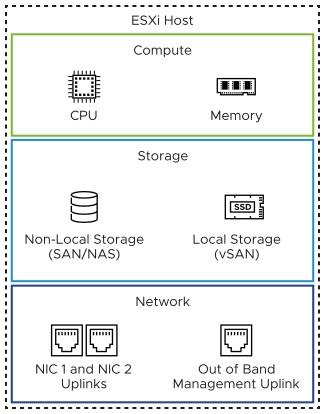

In the logical design for ESXi, you determine how the ESXi hosts integrate at a high level with the other components of the VMware Cloud Foundation instance to provide virtual infrastructure to management and workload components.

To provide the resources required to run the management and workload components of the VMware Cloud Foundation instance, each ESXi host consists of the following elements:

- CPU and memory
- Storage devices
- Out-of-band management interface
- Network interfaces

Sizing Considerations for ESXi for VMware Cloud Foundation

You decide on the number of ESXi hosts per cluster and the number of physical disks per ESXi host.

Standardize the configuration and assembly process for each system so that all components are installed in the same manner on each ESXi host. Standardizing the physical configuration of the ESXi hosts removes variability, which makes the infrastructure easier to manage and support. Deploy ESXi hosts with identical configuration across all cluster members, including storage and networking configurations. For example, consistent PCIe card slot placement, especially for network interface controllers, is essential for accurate mapping of physical network interface controllers to virtual network resources. Identical configurations also keep virtual machine storage components evenly balanced across storage and compute resources.
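To make the uniformity rule concrete, the following is a minimal sketch of how you might flag hosts that deviate from the first host in a cluster. It assumes a hypothetical `HostSpec` record that you populate from your own hardware inventory; the field names, the `find_deviations` helper, and the example values are illustrative only and are not part of any VMware API. The PCIe slot to `vmnic` mapping is compared as a dictionary, so a NIC placed in a different slot shows up as a deviation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HostSpec:
    """Hypothetical record of one ESXi host's physical configuration."""
    fqdn: str
    cpu_model: str
    cpu_cores: int
    memory_gb: int
    capacity_disks: int
    # PCIe slot -> physical NIC name, for example {"slot2": "vmnic0"}.
    nic_slots: Dict[str, str] = field(default_factory=dict)


COMPARED_FIELDS = ("cpu_model", "cpu_cores", "memory_gb", "capacity_disks", "nic_slots")


def find_deviations(hosts: List[HostSpec]) -> Dict[str, List[str]]:
    """Compare each host with the first host and list the fields that differ."""
    if not hosts:
        return {}
    reference = hosts[0]
    deviations: Dict[str, List[str]] = {}
    for host in hosts[1:]:
        diffs = [name for name in COMPARED_FIELDS
                 if getattr(host, name) != getattr(reference, name)]
        if diffs:
            deviations[host.fqdn] = diffs
    return deviations


nics = {"slot2": "vmnic0", "slot3": "vmnic1"}
hosts = [
    HostSpec("esx01.example.com", "cpu-model-a", 32, 512, 4, dict(nics)),
    HostSpec("esx02.example.com", "cpu-model-a", 32, 512, 4, dict(nics)),
    # Same NICs, but the slot-to-vmnic mapping differs from the other hosts.
    HostSpec("esx03.example.com", "cpu-model-a", 32, 512, 4,
             {"slot3": "vmnic0", "slot2": "vmnic1"}),
]
print(find_deviations(hosts))  # {'esx03.example.com': ['nic_slots']}
```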

| Hardware Element | Considerations |
|---|---|
| CPU |  |
| Memory |  |
| Storage |  |
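The CPU part of this sizing decision can be sketched as simple arithmetic. The function below is an illustration under stated assumptions, not the sizing method of the VMware Cloud Foundation Planning and Preparation Workbook. It counts only physical cores (no multithreading gain, as VCF-ESX-RCMD-CFG-003 recommends), uses a vCPU-to-physical-core consolidation ratio that you choose, adds a hypothetical number of spare hosts for failover, and never returns fewer hosts than `min_hosts`, the minimum for the cluster type, which you must look up for your deployment. `hosts_required` and all example numbers are hypothetical.

```python
import math


def hosts_required(vcpus_needed: int, vcpus_per_core: float,
                   sockets_per_host: int, cores_per_socket: int,
                   min_hosts: int, spare_hosts: int = 1) -> int:
    """Estimate the ESXi host count for one cluster from CPU demand only.

    Hypothetical helper. Counts physical cores only: hyperthreads are
    ignored on purpose (VCF-ESX-RCMD-CFG-003).
    """
    physical_cores_needed = math.ceil(vcpus_needed / vcpus_per_core)
    cores_per_host = sockets_per_host * cores_per_socket  # no SMT multiplier
    hosts_for_load = math.ceil(physical_cores_needed / cores_per_host)
    # Add assumed failover capacity, then enforce the cluster-type minimum
    # (VCF-ESX-REQD-CFG-001).
    return max(hosts_for_load + spare_hosts, min_hosts)


# 400 vCPUs at an assumed 4:1 ratio need 100 physical cores; with
# 2 x 16-core sockets that is 4 hosts for load, plus 1 spare.
print(hosts_required(400, 4.0, 2, 16, min_hosts=4))  # 5
# A small load is still raised to the cluster-type minimum.
print(hosts_required(100, 4.0, 2, 16, min_hosts=4))  # 4
```

If you instead counted two hardware threads per core, the same 400-vCPU load would appear to fit on fewer hosts, which is exactly the optimistic assumption that VCF-ESX-RCMD-CFG-003 advises against. Memory and storage would need similar, separate calculations.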

ESXi Design Requirements and Recommendations for VMware Cloud Foundation

The requirements for the ESXi hosts in a workload domain in VMware Cloud Foundation are related to the system requirements of the workloads hosted in the domain. The ESXi requirements cover the number of hosts, server configuration, amount of hardware resources, networking, and certificate management. Related best practices help you design the environment for optimal operation.

ESXi Server Design Requirements

You must meet the following design requirements for the ESXi hosts in a workload domain in a VMware Cloud Foundation deployment.

| Requirement ID | Design Requirement | Requirement Justification | Requirement Implication |
|---|---|---|---|
| VCF-ESX-REQD-CFG-001 | Install no less than the minimum number of ESXi hosts required for the cluster type being deployed. |  | None. |
| VCF-ESX-REQD-CFG-002 | Ensure that each ESXi host matches the required CPU, memory, and storage specification. |  | Assemble the server specification and number of hosts according to the sizing in the VMware Cloud Foundation Planning and Preparation Workbook, which is based on the projected deployment size. |
| VCF-ESX-REQD-SEC-001 | Regenerate the certificate of each ESXi host after assigning the host an FQDN. | Establishes a secure connection with VMware Cloud Builder during the deployment of a workload domain and prevents man-in-the-middle (MiTM) attacks. | You must manually regenerate the certificates of the ESXi hosts before the deployment of a workload domain. |
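Before deployment, you might check these three requirements against a host inventory. The sketch below assumes hypothetical `HostRecord` and `ClusterSpec` records populated from your own inventory and from the planning workbook; the names, fields, thresholds, and the `validate` helper are illustrative. Comparing the certificate common name (CN) with the FQDN is only a simplified proxy for VCF-ESX-REQD-SEC-001: a certificate generated before the host received its FQDN would not normally carry that FQDN, but a real check should also inspect the subject alternative names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HostRecord:
    """Hypothetical inventory entry collected before workload domain deployment."""
    fqdn: str
    cpu_cores: int
    memory_gb: int
    capacity_tb: float
    cert_common_name: str  # subject CN of the host's current TLS certificate


@dataclass
class ClusterSpec:
    """Hypothetical target values, for example copied from the planning workbook."""
    min_hosts: int
    min_cpu_cores: int
    min_memory_gb: int
    min_capacity_tb: float


def validate(hosts: List[HostRecord], spec: ClusterSpec) -> List[str]:
    findings: List[str] = []
    # VCF-ESX-REQD-CFG-001: no fewer hosts than the cluster type requires.
    if len(hosts) < spec.min_hosts:
        findings.append(f"CFG-001: {len(hosts)} hosts, at least {spec.min_hosts} required")
    for h in hosts:
        # VCF-ESX-REQD-CFG-002: every host meets the CPU, memory, and storage spec.
        if (h.cpu_cores < spec.min_cpu_cores or h.memory_gb < spec.min_memory_gb
                or h.capacity_tb < spec.min_capacity_tb):
            findings.append(f"CFG-002: {h.fqdn} is below the required specification")
        # VCF-ESX-REQD-SEC-001 (simplified): a CN that differs from the FQDN
        # suggests the certificate was not regenerated after the FQDN was set.
        if h.cert_common_name.lower() != h.fqdn.lower():
            findings.append(f"SEC-001: {h.fqdn} certificate CN is {h.cert_common_name!r}")
    return findings


spec = ClusterSpec(min_hosts=4, min_cpu_cores=32, min_memory_gb=512, min_capacity_tb=7.68)
hosts = [
    HostRecord("esx01.example.com", 32, 512, 7.68, "esx01.example.com"),
    HostRecord("esx02.example.com", 32, 512, 7.68, "localhost.localdomain"),
    HostRecord("esx03.example.com", 24, 512, 7.68, "esx03.example.com"),
]
for finding in validate(hosts, spec):
    print(finding)
```

With this example data, the check reports the missing fourth host, the certificate on `esx02` that does not match its FQDN, and the undersized CPU configuration on `esx03`.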

ESXi Server Design Recommendations

In your ESXi host design for VMware Cloud Foundation, you can apply certain best practices.

| Recommendation ID | Recommendation | Justification | Implication |
|---|---|---|---|
| VCF-ESX-RCMD-CFG-001 | Use vSAN ReadyNodes with vSAN storage for each ESXi host in the management domain. | Your management domain is fully compatible with vSAN at deployment. For information about the models of physical servers that are vSAN-ready, see vSAN Compatibility Guide for vSAN ReadyNodes. | Hardware choices might be limited. If you plan to use a server configuration that is not a vSAN ReadyNode, your CPU, disks, and I/O modules must be listed on the VMware Compatibility Guide under CPU Series and vSAN Compatibility List aligned to the ESXi version specified in VMware Cloud Foundation 5.2 Release Notes. |
| VCF-ESX-RCMD-CFG-002 | Allocate hosts with uniform configuration across the default management vSphere cluster. | A balanced cluster has these advantages: | You must apply vendor sourcing, budgeting, and procurement considerations for uniform server nodes on a per-cluster basis. |
| VCF-ESX-RCMD-CFG-003 | When sizing CPU, do not consider multithreading technology and associated performance gains. | Although multithreading technologies increase CPU performance, the performance gain depends on running workloads and differs from one case to another. | Because you must provide more physical CPU cores, costs increase and hardware choices become limited. |
| VCF-ESX-RCMD-CFG-004 | Install and configure all ESXi hosts in the default management cluster to boot using a 128-GB device or larger. | Provides hosts that have large memory, that is, greater than 512 GB, with enough space for the scratch partition when using vSAN. | None. |
| VCF-ESX-RCMD-CFG-005 | Use the default configuration for the scratch partition on all ESXi hosts in the default management cluster. |  | None. |
| VCF-ESX-RCMD-CFG-006 | For workloads running in the default management cluster, save the virtual machine swap file at the default location. | Simplifies the configuration process. | Increases the amount of replication traffic for management workloads that are recovered as part of the disaster recovery process. |
| VCF-ESX-RCMD-NET-001 | Place the ESXi hosts in each management domain cluster on a host management network that is separate from the VM management network. | Enables the separation of the physical VLAN between ESXi hosts and the other management components for security reasons. The VM management network is not required for a multi-rack compute-only cluster in a VI workload domain. | Increases the number of VLANs required. |
| VCF-ESX-RCMD-NET-002 | Place the ESXi hosts in each VI workload domain on a separate host management VLAN-backed network. | Enables the separation of the physical VLAN between the ESXi hosts in different VI workload domains for security reasons. | Increases the number of VLANs required. For each VI workload domain, you must allocate a separate management subnet. |
| VCF-ESX-RCMD-SEC-001 | Deactivate SSH access on all ESXi hosts in the management domain by stopping the SSH service and using the default SSH service policy. | Ensures compliance with the vSphere Security Configuration Guide and with security best practices. Deactivating SSH access reduces the risk of security attacks on the ESXi hosts through the SSH interface. | You must activate SSH access manually for troubleshooting or support activities, because VMware Cloud Foundation deactivates SSH on ESXi hosts after workload domain deployment. |
| VCF-ESX-RCMD-SEC-002 | Set the advanced setting |  | You must turn off SSH enablement warning messages manually when performing troubleshooting or support activities. |
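Several of these recommendations lend themselves to automated checks. The sketch below evaluates VCF-ESX-RCMD-SEC-001, VCF-ESX-RCMD-CFG-004, VCF-ESX-RCMD-NET-001, and VCF-ESX-RCMD-NET-002 against hypothetical host facts that you would collect yourself; `ServiceState`, `HostFacts`, `check_host`, and `check_vlans` are illustrative names, not a VMware API. The `ServiceState` fields loosely mirror the key, running state, and startup policy that vSphere reports for host services, with `TSM-SSH` as the SSH service key. Treating the default SSH service policy as `"off"` (start and stop manually) is an assumption to confirm for your ESXi version.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Assumption: the default SSH service policy corresponds to "off"
# (start and stop manually). Verify for your ESXi version.
ASSUMED_DEFAULT_SSH_POLICY = "off"


@dataclass
class ServiceState:
    key: str       # for example "TSM-SSH" for the SSH service
    running: bool
    policy: str    # "on", "off", or "automatic"


@dataclass
class HostFacts:
    """Hypothetical facts collected for one ESXi host."""
    fqdn: str
    boot_device_gb: int
    services: List[ServiceState] = field(default_factory=list)


def check_host(host: HostFacts) -> List[str]:
    findings: List[str] = []
    for svc in host.services:
        # VCF-ESX-RCMD-SEC-001: SSH stopped and left at the default policy.
        if svc.key == "TSM-SSH" and (svc.running or svc.policy != ASSUMED_DEFAULT_SSH_POLICY):
            findings.append(f"SEC-001: {host.fqdn} SSH running={svc.running}, policy={svc.policy}")
    # VCF-ESX-RCMD-CFG-004: boot device of 128 GB or larger.
    if host.boot_device_gb < 128:
        findings.append(f"CFG-004: {host.fqdn} boot device is {host.boot_device_gb} GB")
    return findings


def check_vlans(host_mgmt_vlans: Dict[str, int], vm_mgmt_vlan: int,
                mgmt_domain: str) -> List[str]:
    """host_mgmt_vlans maps a workload domain name to its host management VLAN ID."""
    findings: List[str] = []
    # VCF-ESX-RCMD-NET-001: management domain hosts are not on the VM management VLAN.
    if host_mgmt_vlans.get(mgmt_domain) == vm_mgmt_vlan:
        findings.append(f"NET-001: {mgmt_domain} host management shares VLAN "
                        f"{vm_mgmt_vlan} with VM management")
    # VCF-ESX-RCMD-NET-002: every domain has its own host management VLAN.
    owner: Dict[int, str] = {}
    for domain, vlan in host_mgmt_vlans.items():
        if vlan in owner:
            findings.append(f"NET-002: {domain} shares VLAN {vlan} with {owner[vlan]}")
        else:
            owner[vlan] = domain
    return findings


esx01 = HostFacts("esx01.example.com", 128, [ServiceState("TSM-SSH", False, "off")])
esx02 = HostFacts("esx02.example.com", 64, [ServiceState("TSM-SSH", True, "on")])
print(check_host(esx01))
print(check_host(esx02))

vlans = {"mgmt": 1611, "wld01": 1621, "wld02": 1621}
print(check_vlans(vlans, vm_mgmt_vlan=1610, mgmt_domain="mgmt"))
```

The VLAN IDs and domain names are example values only. Keeping each rule in its own branch makes it easy to map every finding back to the recommendation ID that produced it.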