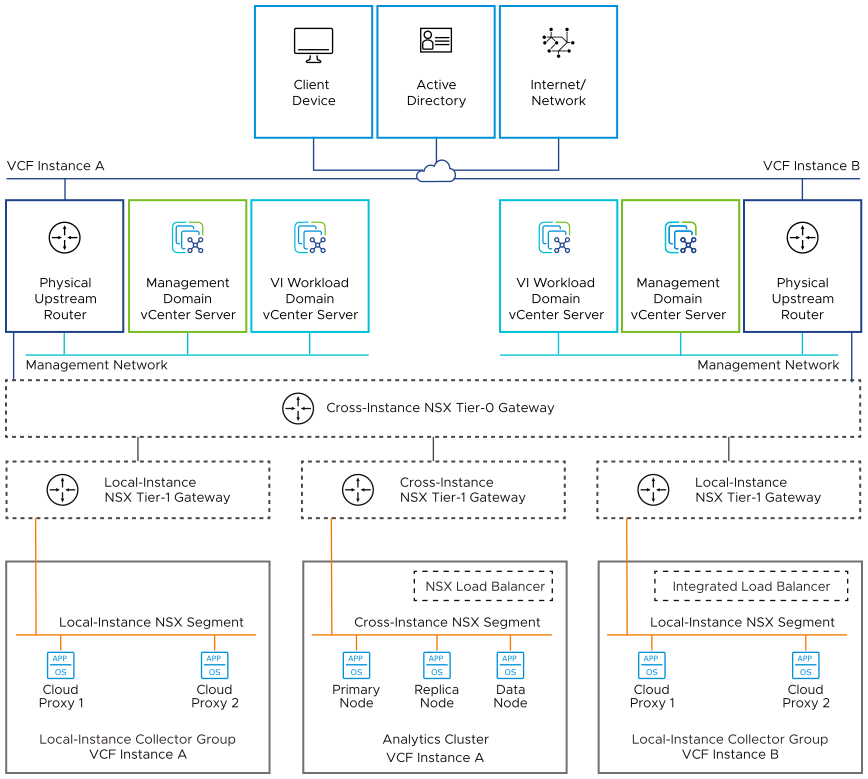

For secure access to the UI and API of VMware Aria Operations, you place the analytics cluster nodes on the cross-instance NSX segment. This configuration also supports accessibility of the analytics cluster nodes from customer routable networks.

Network Segments

The network segments design consists of characteristics and decisions for placement of VMware Aria Operations in the management domain.

For secure access, load balancing, and multi-instance design, you deploy the VMware Aria Operations analytics cluster on the cross-instance NSX segment, and you place the VMware Cloud Proxy appliances on the corresponding local-instance NSX segments.

| Type | Description |
|---|---|
| Overlay-backed NSX segment | The routing of the VLAN-backed management network segment and other networks is dynamic and based on the Border Gateway Protocol (BGP). Routed access to the VLAN-backed management network segment is provided through an NSX Tier-1 and Tier-0 gateway. Recommended option to facilitate scaling out to a multi-instance design that supports disaster recovery. |
| VLAN-backed NSX segment | You must provide two unique VLANs, network subnets, and vCenter Server portgroup names. |

| Decision ID | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| IOM-VAOPS-NET-001 | Place the VMware Aria Operations analytics nodes on the cross-instance NSX network segment. | Provides a consistent deployment model for management applications and a potential to extend to a second VMware Cloud Foundation instance for disaster recovery. | You must use an implementation of NSX to support this network configuration. |
| IOM-VAOPS-NET-002 | Place the VMware Cloud Proxy for VMware Aria Operations appliances on the local-instance NSX network segment. | Supports collection of metrics locally per VMware Cloud Foundation instance. | You must use an implementation in NSX to support this networking configuration. |

Network Segments for Multiple VMware Cloud Foundation Instances

In an environment with multiple VMware Cloud Foundation instances, the VMware Cloud Proxy appliances in each instance are connected to the corresponding local-instance network segment.

| Decision ID | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| IOM-VAOPS-NET-003 | In an environment with multiple VMware Cloud Foundation instances, place the VMware Cloud Proxy for VMware Aria Operations appliances in each instance on the local-instance NSX segment. | Supports collection of metrics locally per VMware Cloud Foundation instance. | You must use an implementation in NSX to support this networking configuration. |
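The placement rules in decisions IOM-VAOPS-NET-001 through IOM-VAOPS-NET-003 can be expressed as a simple inventory check. The following is a minimal sketch, not part of the validated solution: the appliance names, role labels, and instance names are hypothetical, and the inventory is hard-coded rather than read from NSX or vCenter Server.

```python
from dataclasses import dataclass

# Illustrative labels only; these are not product identifiers.
CROSS_INSTANCE = "cross-instance"
LOCAL_INSTANCE = "local-instance"

# Expected segment type per appliance role, following IOM-VAOPS-NET-001 to -003.
EXPECTED_SEGMENT = {
    "analytics-node": CROSS_INSTANCE,
    "cloud-proxy": LOCAL_INSTANCE,
}


@dataclass(frozen=True)
class Appliance:
    name: str          # hypothetical host name
    role: str          # "analytics-node" or "cloud-proxy"
    vcf_instance: str  # VMware Cloud Foundation instance hosting the appliance
    segment_type: str  # segment type the appliance is attached to


def placement_violations(appliances):
    """Return the names of appliances attached to the wrong segment type."""
    return [
        a.name
        for a in appliances
        if EXPECTED_SEGMENT.get(a.role) != a.segment_type
    ]


# Hypothetical inventory: the second proxy is deliberately misplaced.
inventory = [
    Appliance("aria-ops-node-a", "analytics-node", "instance-a", CROSS_INSTANCE),
    Appliance("aria-ops-proxy-a", "cloud-proxy", "instance-a", LOCAL_INSTANCE),
    Appliance("aria-ops-proxy-b", "cloud-proxy", "instance-b", CROSS_INSTANCE),
]

print(placement_violations(inventory))  # ['aria-ops-proxy-b']
```

An appliance with a role that is not listed in `EXPECTED_SEGMENT` is also reported, because the lookup returns `None` for it.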

IP Addressing

| Decision ID | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| IOM-VAOPS-NET-004 | Allocate statically assigned IP addresses and host names from the cross-instance NSX segment to the VMware Aria Operations analytics cluster nodes and the NSX load balancer. | Ensures stability across the SDDC, and makes it simpler to maintain and easier to track. | Requires precise IP address management. |
| IOM-VAOPS-NET-005 | Allocate statically assigned IP addresses and host names from the local-instance NSX segment to the VMware Cloud Proxy for VMware Aria Operations appliances. | Ensures stability across the SDDC, and makes it simpler to maintain and easier to track. | Requires precise IP address management. |

IP Addressing for Multiple VMware Cloud Foundation Instances

In an environment with multiple VMware Cloud Foundation instances, the VMware Cloud Proxy appliances in each instance are assigned IP addresses associated with their corresponding network.

| Decision ID | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| IOM-VAOPS-NET-006 | In an environment with multiple VMware Cloud Foundation instances, allocate statically assigned IP addresses and host names from each local-instance NSX segment to the corresponding VMware Cloud Proxy for VMware Aria Operations appliances in the instance. | Ensures stability across the SDDC, and makes it simpler to maintain and easier to track. | Requires precise IP address management. |
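Because static allocation requires precise IP address management, it can help to verify a planned allocation before deployment. The sketch below uses the Python standard library `ipaddress` module to check that each address falls inside the subnet of its intended segment and that no address is assigned twice. The subnets, host names, and addresses are hypothetical values from documentation address ranges, not values from this design.

```python
import ipaddress

# Hypothetical segment subnets (documentation ranges), for illustration only.
SEGMENTS = {
    "cross-instance": ipaddress.ip_network("192.0.2.0/24"),
    "local-instance-a": ipaddress.ip_network("198.51.100.0/24"),
}

# Hypothetical allocation plan: (host name, static IP, intended segment).
ALLOCATIONS = [
    ("aria-ops-lb-vip", "192.0.2.30", "cross-instance"),
    ("aria-ops-node-a", "192.0.2.31", "cross-instance"),
    ("aria-ops-proxy-a", "198.51.100.31", "local-instance-a"),
    ("aria-ops-proxy-b", "192.0.2.32", "local-instance-a"),  # wrong subnet
]


def check_allocations(allocations, segments):
    """Return a list of human-readable problems found in the allocation plan."""
    problems = []
    seen = {}  # maps each IP address to the first host that claimed it
    for host, addr, segment in allocations:
        ip = ipaddress.ip_address(addr)
        if ip not in segments[segment]:
            problems.append(f"{host}: {addr} is outside {segments[segment]}")
        if ip in seen:
            problems.append(f"{host}: {addr} already assigned to {seen[ip]}")
        seen.setdefault(ip, host)
    return problems


for problem in check_allocations(ALLOCATIONS, SEGMENTS):
    print(problem)
# aria-ops-proxy-b: 192.0.2.32 is outside 198.51.100.0/24
```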

Name Resolution

| Decision ID | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| IOM-VAOPS-NET-007 | Configure forward and reverse DNS records for all VMware Aria Operations nodes and for the NSX load balancer virtual IP address. | All nodes are accessible by using fully qualified domain names instead of by using IP addresses only. | You must provide DNS records for the VMware Aria Operations nodes. |
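One way to confirm that both the forward and the reverse record exist for a node is a round-trip lookup: resolve the fully qualified domain name to an address, then resolve that address back to a name and compare. The following sketch uses `socket.gethostbyname` and `socket.gethostbyaddr` from the Python standard library and covers IPv4 only. The host name and address in the usage example are hypothetical, and the lookups are stubbed so that the example runs without DNS access.

```python
import socket


def dns_round_trip(fqdn, forward=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Return True if fqdn resolves to an IP whose reverse lookup returns fqdn."""
    try:
        address = forward(fqdn)
        ptr_name, _aliases, _addresses = reverse(address)
    except OSError:
        # socket.gaierror and socket.herror are both subclasses of OSError.
        return False
    return ptr_name.rstrip(".").lower() == fqdn.rstrip(".").lower()


# Stubbed lookups with hypothetical records, so the example runs offline.
def stub_forward(name):
    records = {"aria-ops.example.com": "192.0.2.30"}
    if name not in records:
        raise socket.gaierror("no forward record")
    return records[name]


def stub_reverse(address):
    records = {"192.0.2.30": ("aria-ops.example.com", [], ["192.0.2.30"])}
    if address not in records:
        raise socket.herror("no reverse record")
    return records[address]


print(dns_round_trip("aria-ops.example.com", stub_forward, stub_reverse))  # True
print(dns_round_trip("missing.example.com", stub_forward, stub_reverse))   # False
```

Calling `dns_round_trip` with only the host name uses the resolver of the machine that runs the script.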

Load Balancing

A VMware Aria Operations cluster deployment requires a load balancer to manage the connections to VMware Aria Operations. This validated solution uses load-balancing services provided by NSX in the management domain. The load balancer is automatically configured by VMware Aria Suite Lifecycle and SDDC Manager during the deployment of VMware Aria Operations. The load balancer is configured with the following settings.

| Load Balancer Element | Settings |
|---|---|
| Service monitor | |
| Server pool | |
| TCP application profile | |
| Source IP persistence profile | |
| HTTP redirect application profile | |
| Virtual server | |
| HTTP redirect virtual server | |

| Decision ID | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| IOM-VAOPS-NET-008 | To load balance the connections across the VMware Aria Operations analytics cluster members, use the same small-size load balancer that SDDC Manager configures on a dedicated NSX Tier-1 gateway in the management domain for the clustered Workspace ONE Access nodes. | Required to deploy a VMware Aria Operations analytics cluster deployment type with distributed user interface access across members. | You must use the NSX load balancer that is configured by SDDC Manager to support this network configuration. |
| IOM-VAOPS-NET-009 | Do not use a load balancer for the VMware Cloud Proxy for VMware Aria Operations appliances. | | None. |

Time Synchronization

Time synchronization provided by the Network Time Protocol (NTP) is important to ensure that all components within the SDDC are synchronized to the same time source.

| Decision ID | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| IOM-VAOPS-NET-010 | Configure NTP on each VMware Aria Operations node. | VMware Aria Operations depends on time synchronization. | None. |
| IOM-VAOPS-NET-011 | Configure the timezone of VMware Aria Operations to use UTC. | You must use UTC to provide the integration with VMware Aria Automation, because VMware Aria Automation supports only UTC. | If you are in a timezone other than UTC, timestamps appear skewed. |
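The implication of IOM-VAOPS-NET-011 is a display offset, not a loss of accuracy. As a small illustration, assuming a hypothetical operator at a fixed UTC-05:00 offset and an arbitrary example timestamp, the following Python sketch shows that a UTC timestamp and its local rendering refer to the same instant.

```python
from datetime import datetime, timedelta, timezone

# An example timestamp as a UTC-configured appliance would record it.
recorded = datetime(2024, 3, 1, 14, 30, tzinfo=timezone.utc)

# Hypothetical operator time zone at a fixed UTC-05:00 offset.
operator_zone = timezone(timedelta(hours=-5))

# Convert the recorded instant to the operator's local wall-clock time.
local_view = recorded.astimezone(operator_zone)

print(recorded.isoformat())    # 2024-03-01T14:30:00+00:00
print(local_view.isoformat())  # 2024-03-01T09:30:00-05:00
print(recorded == local_view)  # True: same instant, different rendering
```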