When you create a multiple-host SDDC, you have the option to use stretched clusters that span two availability zones (AZs). This Multi-AZ configuration provides data redundancy and SDDC resiliency in the face of AZ failure.

A stretched cluster uses vSAN technology to provide a single datastore for the SDDC that's replicated across both AZs. NSX network segments are also transparently stretched across both AZs. If service is disrupted in either AZ, workloads are brought up in the other one.

The following additional restrictions apply to Multi-AZ SDDCs:

- The linked VPC must have two subnets, one in each AZ occupied by the SDDC.

- An SDDC can contain either standard (single availability zone) clusters or stretched clusters, but not a mix of both.

- You cannot convert a Multi-AZ SDDC to a Single-AZ SDDC.

- You cannot convert a Single-AZ SDDC to a Multi-AZ SDDC.

- You need a minimum of two hosts (one in each AZ) to create a stretched cluster. Hosts must be added and removed in pairs.

- Large-sized SDDC appliances are not supported in SDDCs with two-host stretched management clusters. They require a minimum of six hosts.
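
The host-count rules in this list can be checked before you request a cluster. The following Python sketch is illustrative only: the function name and parameters are hypothetical and are not part of any VMware API. It assumes the six-host minimum for large-sized appliances applies to the total host count of the stretched management cluster.

```python
from typing import List


def validate_stretched_cluster(host_count: int, large_appliances: bool = False) -> List[str]:
    """Return a list of rule violations for a proposed stretched cluster.

    Hypothetical helper that encodes only the host-count restrictions
    listed above. An empty list means no violations were found.
    """
    problems = []
    # A stretched cluster needs at least one host in each of the two AZs.
    if host_count < 2:
        problems.append("a stretched cluster needs at least two hosts (one in each AZ)")
    # Hosts are added and removed in pairs, so the total must be even.
    if host_count % 2 != 0:
        problems.append("hosts must be added and removed in pairs, so the count must be even")
    # Assumption: the six-host minimum is measured on the whole cluster.
    if large_appliances and host_count < 6:
        problems.append("large-sized SDDC appliances require a minimum of six hosts")
    return problems


if __name__ == "__main__":
    for count, large in [(2, False), (2, True), (5, False), (6, True)]:
        print(count, large, validate_stretched_cluster(count, large))
```

A six-host cluster with large-sized appliances returns no violations, while a two-host cluster with large-sized appliances returns one.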

AZ Affinity for Management and Workload VMs

Both AZs in a stretched cluster can be used by your workloads. The system deploys management appliances in the first AZ you select during SDDC deployment. This behavior is a property of the system and cannot be modified.

All VMs and management appliances have a temporary affinity for the AZ in which they are first powered on. If they are migrated to a different AZ, they remain there until a failure or other scenario requires them to be recovered in the other AZ. This behavior also applies to NSX Edge VMs. A failure in the AZ hosting the active and standby NSX Edge VMs forces them to be recovered in the other AZ, taking all SDDC egress and ingress traffic with them.

Networking in Stretched Clusters

SDDC network routing in a stretched cluster is AZ-agnostic. Gateways and Edge routers are unaware of which AZ they are in, or which AZ a destination address is in. Traffic entering or leaving the SDDC network, and traffic routed between gateways, must go through the active Edge VM. Because the placement of the Edge VMs is not bound to a specific AZ, traffic routed through an Edge VM may hairpin between AZs, depending on the AZs where the source, destination, and active Edge VM are located. Your SDDC network design should anticipate the possibility that this traffic can flow through either AZ.
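
To make the hairpin effect concrete, the following Python sketch counts how many times a flow crosses between AZs when it is forced through the active Edge VM. It is a simplified model for design reasoning, not a description of NSX internals. The function name and the AZ labels ("az-a", "az-b") are hypothetical.

```python
from typing import Optional


def az_crossings(source_az: str, edge_az: str, dest_az: Optional[str] = None) -> int:
    """Count inter-AZ hops for a flow that passes through the active Edge VM.

    source_az: AZ of the workload that sends the traffic.
    edge_az:   AZ where the active Edge VM currently runs.
    dest_az:   AZ of the destination workload, or None when the
               destination is outside the SDDC network.
    """
    hops = 0
    # First leg: source workload to the active Edge VM.
    if source_az != edge_az:
        hops += 1
    # Second leg: active Edge VM to a destination inside the SDDC.
    if dest_az is not None and dest_az != edge_az:
        hops += 1
    return hops


if __name__ == "__main__":
    # Source and destination share an AZ, but the active Edge VM is in the other one.
    print(az_crossings("az-a", "az-b", "az-a"))
    # Everything is in the same AZ.
    print(az_crossings("az-a", "az-a", "az-a"))
    # Outbound traffic from a workload in the AZ that does not host the Edge VM.
    print(az_crossings("az-b", "az-a"))
```

In the first call the flow crosses AZs twice even though both endpoints are in the same AZ, which is the hairpin case that the network design needs to anticipate.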

AZ Failure Scenarios

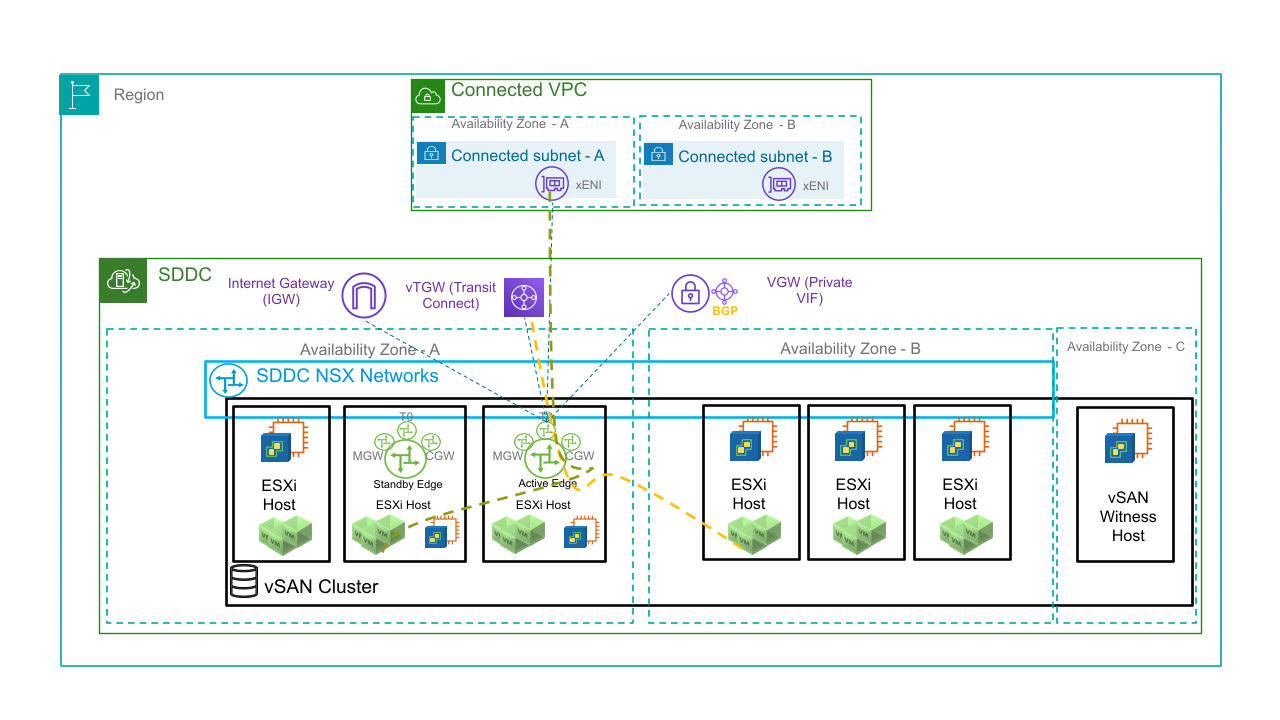

These diagrams illustrate a typical six-host stretched cluster. To ensure network resilience in the face of host failures, the active NSX Edge and standby NSX Edge never run on the same host.

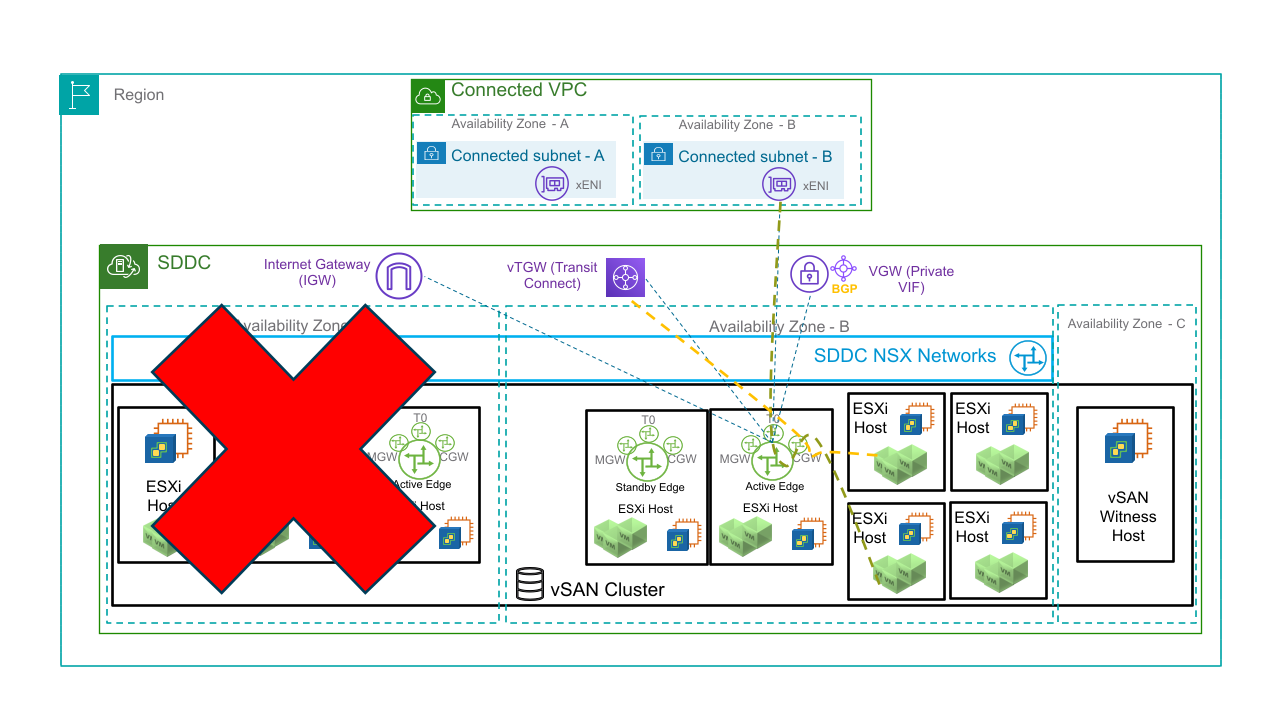

When one AZ fails, as shown here, additional hosts are deployed in the remaining AZ (Availability Zone B in this figure). You are not billed for these hosts. The active and standby NSX Edge VMs are powered up on hosts in the remaining AZ, and traffic that had been routed through the active NSX Edge in the failed AZ is now handled by the active NSX Edge in the remaining AZ. As shown here, this includes traffic from the SDDC's Internet gateway, VMware Transit Connect (SDDC group) traffic, and DX traffic to a private VIF. Connected VPC traffic moves to the ENI attached to the host where the active NSX Edge is running.

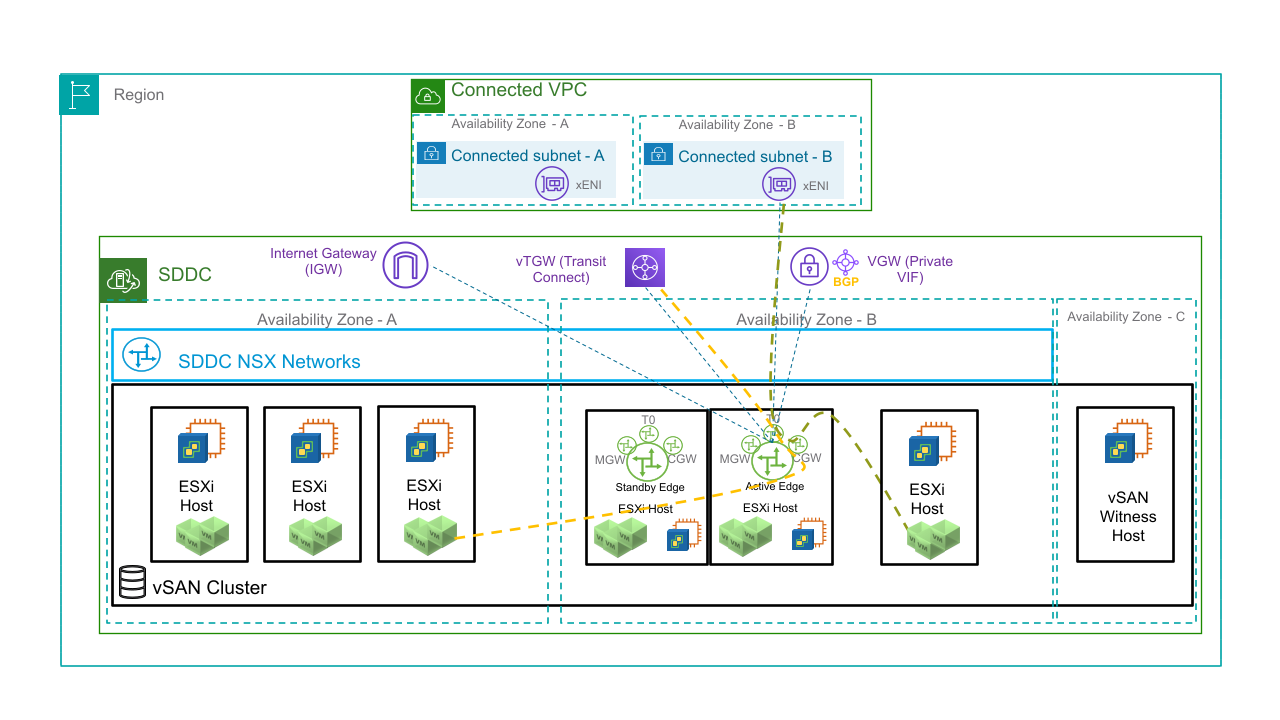

When the failed AZ recovers, the hosts that were temporarily added to the other AZ (Availability Zone B in this figure) are removed and normal operation continues with three hosts in each AZ. The active and standby NSX Edge VMs and management appliances continue to run in the other AZ.

To ensure there is always a cluster majority and avoid a "split-brain" scenario, a vSAN witness node is deployed in a third AZ selected by the system when the SDDC is first deployed. This node is not billable and cannot run workloads or store workload data. It stores all the vSAN metadata required to recover from a site failure and serves as a tiebreaker when one AZ becomes unreachable. The VMware Cloud Tech Zone article Understanding the vSAN Witness Host has more information about this host and the role it plays in your SDDC.
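
The tiebreaker role can be illustrated with a simple majority check across three sites: the two data AZs and the witness. The Python sketch below is a deliberately simplified model. It assumes one vote per site, uses hypothetical site labels, and does not reproduce the voting mechanism that vSAN actually uses.

```python
from typing import Set

# Assumption for illustration: each site holds exactly one vote.
SITES = frozenset({"az-a", "az-b", "witness"})


def has_majority(reachable: Set[str]) -> bool:
    """Return True if the given group of mutually reachable sites forms a majority."""
    votes = len(reachable & SITES)
    return votes > len(SITES) / 2


if __name__ == "__main__":
    # Network partition: az-a is isolated, az-b can still reach the witness.
    print(has_majority({"az-a"}))
    print(has_majority({"az-b", "witness"}))
    # Only the witness is lost: the two data AZs still form a majority.
    print(has_majority({"az-a", "az-b"}))
```

Because there are three votes, at most one side of any partition can hold a majority, which is why two AZs cannot both continue independently with diverging data.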

Further Reading

See NSX Networking Concepts for a high-level discussion of SDDC networking. You can find more information about stretched clusters in the VMware Cloud Tech Zone article VMware Cloud on AWS: Stretched Clusters. For limitations that affect all stretched clusters, see VMware Configuration Maximums.