VMware Cloud on AWS uses NSX to create and manage SDDC networks. NSX provides an agile software-defined infrastructure to build cloud-native application environments.

This guide explains how to manage your SDDC networks using NSX and the VMware Cloud Console Networking and Security Dashboard.

You can connect to NSX Manager in the following ways:

- By using a secure reverse proxy server to connect to a public IP address reachable by any browser that can connect to the Internet.

- By using a private network connection to reach the NSX Manager's private IP address directly. Examples of private connections include a VPN, AWS Direct Connect (private VIF), an SDDC group, or an EC2 instance used as a jump host in the Connected VPC.

- By connecting directly to your cloud provider’s network. AWS Direct Connect provides this kind of connection for VMware Cloud on AWS. See Configure AWS Direct Connect Between Your SDDC and On-Premises Data Center.

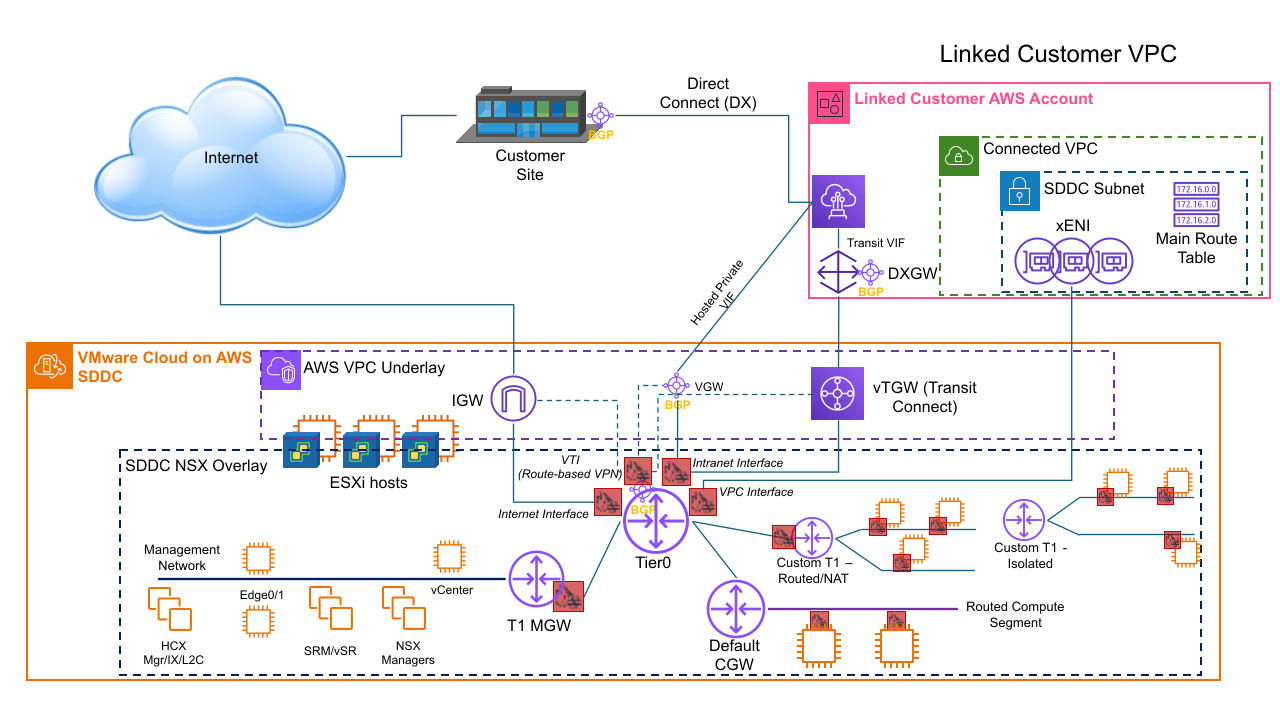

SDDC Network Topology

When you create an SDDC, it includes a Management Network. You specify the Management Network CIDR block when you create the SDDC. It cannot be changed after the SDDC has been created. See Deploy an SDDC from the VMC Console for details.

SDDC networking has two layers:

- The AWS Underlay Network: the AWS VPC subnet that you specify when you deploy the SDDC. It supports the native AWS networking and services that provide connectivity to the SDDC.

- The NSX Overlay Network: the layer in which all SDDC subnets are created and all SDDC appliances and workloads run.

The Compute Network includes the logical segments you create for your workload VMs. You must create compute network segments to meet your workloads' needs, up to the limits listed in VMware Configuration Maximums.

SDDC routing is organized in two tiers:

- Tier-0 handles north-south traffic (traffic leaving or entering the SDDC, or between the Management and Compute gateways). In the default configuration, each SDDC has a single Tier-0 router. If an SDDC is a member of an SDDC group, you can reconfigure the SDDC to add Tier-0 routers that handle SDDC group traffic. See Configure a Multi-Edge SDDC With Traffic Groups.

- Tier-1 handles east-west traffic (traffic between routed network segments within the SDDC). In the default configuration, each SDDC has a single Tier-1 router. You can create and configure additional Tier-1 gateways if you need them. See Add a Custom Tier-1 Gateway to a VMware Cloud on AWS SDDC.
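As a rough illustration of the two tiers, the following Python sketch classifies a flow by the router that handles it. The `classify` function and the `(gateway, segment)` endpoint encoding are hypothetical, and the model covers only the default MGW and CGW, not custom Tier-1 gateways or traffic groups.

```python
from typing import Optional, Tuple

# Hypothetical encoding: (gateway, segment), where gateway is "MGW", "CGW",
# or None for an endpoint outside the SDDC.
Endpoint = Tuple[Optional[str], Optional[str]]


def classify(src: Endpoint, dst: Endpoint) -> str:
    """Name the tier that routes traffic between two endpoints (default topology only)."""
    src_gw, src_seg = src
    dst_gw, dst_seg = dst
    if src_gw is None or dst_gw is None:
        return "north-south (Tier-0)"  # traffic entering or leaving the SDDC
    if src_gw != dst_gw:
        return "north-south (Tier-0)"  # traffic between the Management and Compute gateways
    if src_seg != dst_seg:
        return "east-west (Tier-1)"    # traffic between routed segments on one gateway
    return "same segment (not routed)"
```

For example, a flow between two CGW segments named `app` and `db` is east-west, while a flow from a management VM to a workload VM crosses the Tier-0 router.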

- NSX Edge Appliance: The default NSX Edge Appliance is implemented as a pair of VMs that run in active/standby mode. This appliance provides the platform on which the default Tier-0 and Tier-1 routers run, along with IPsec VPN connections and their BGP routing machinery. In the default configuration, all north-south traffic goes through the default Tier-0 router. To keep east-west traffic off the appliance, a component of each Tier-1 router runs on every ESXi host and handles routing for destinations within the SDDC.

If you need additional bandwidth for the subset of north-south traffic routed to SDDC group members, to a Direct Connect Gateway attached to an SDDC group, to an HCX Service Mesh, or to the Connected VPC, you can reconfigure your SDDC as a Multi-Edge SDDC by creating traffic groups, each of which creates an additional Tier-0 router. See Configure a Multi-Edge SDDC With Traffic Groups for details.

Note: VPN traffic, as well as DX traffic to a private VIF, must pass through the default Tier-0 router and cannot be routed to a non-default traffic group. In addition, because NAT rules always run on the default Tier-0 router, additional Tier-0 routers cannot handle traffic subject to NAT rules. This includes traffic to and from the SDDC's native Internet connection, as well as traffic to the Amazon S3 service, which uses a NAT rule.
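The eligibility rules above can be summarized as a small decision function. This Python sketch is only a model of the stated rules; `Destination`, `tier0_for`, and the traffic group name are hypothetical, and the sketch does not reflect how traffic groups are actually configured (for example, which prefixes are associated with a group).

```python
from enum import Enum, auto
from typing import Optional


class Destination(Enum):
    SDDC_GROUP_MEMBER = auto()
    DXGW_ON_SDDC_GROUP = auto()  # Direct Connect Gateway attached to an SDDC group
    HCX_SERVICE_MESH = auto()
    CONNECTED_VPC = auto()
    VPN = auto()
    DX_PRIVATE_VIF = auto()
    INTERNET = auto()            # the SDDC's native Internet connection
    AMAZON_S3 = auto()


# Traffic to these destinations may be steered to a non-default traffic group.
TRAFFIC_GROUP_ELIGIBLE = {
    Destination.SDDC_GROUP_MEMBER,
    Destination.DXGW_ON_SDDC_GROUP,
    Destination.HCX_SERVICE_MESH,
    Destination.CONNECTED_VPC,
}


def tier0_for(dest: Destination, traffic_group: Optional[str],
              subject_to_nat: bool = False) -> str:
    """Name the Tier-0 router that carries traffic to `dest`."""
    if traffic_group is None or subject_to_nat:
        # No traffic group configured, or NAT applies: NAT runs only on the default Tier-0.
        return "default"
    if dest in TRAFFIC_GROUP_ELIGIBLE:
        return traffic_group
    # VPN, DX private VIF, Internet, and S3 traffic always uses the default Tier-0.
    return "default"
```

With a hypothetical traffic group `tg-1`, traffic to the Connected VPC can use `tg-1`, but the same traffic falls back to the default Tier-0 as soon as a NAT rule applies to it.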

- Management Gateway (MGW): The MGW is a Tier-1 router that handles routing and firewalling for vCenter and other management appliances running in the SDDC. Management gateway firewall rules run on the MGW and control access to management VMs. In a new SDDC, the Internet connection is labeled Not Connected in the Overview tab and remains blocked until you create a Management Gateway Firewall rule allowing access from a trusted source. See Add or Modify Management Gateway Firewall Rules.

- Compute Gateway (CGW): The CGW is a Tier-1 router that handles network traffic for workload VMs connected to routed compute network segments. Compute gateway firewall rules, along with NAT rules, run on the Tier-0 router. In the default configuration, these rules block all traffic to and from compute network segments (see Configure Compute Gateway Networking and Security).
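Both gateways start from a deny-by-default posture. The Python sketch below models that with an ordered rule list in which the first matching rule wins and unmatched traffic is dropped. First-match evaluation is an assumption of this sketch, and `Rule`, `evaluate`, and the `vCenter` destination label are hypothetical names, not part of an NSX or VMware Cloud API.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    name: str
    source: str          # source CIDR, e.g. a trusted on-premises range
    destination: str     # hypothetical destination label such as "vCenter"
    port: Optional[int]  # None matches any port
    action: str          # "ALLOW" or "DROP"

    def matches(self, src_ip: str, destination: str, port: int) -> bool:
        return (
            ipaddress.ip_address(src_ip) in ipaddress.ip_network(self.source)
            and self.destination == destination
            and (self.port is None or self.port == port)
        )


def evaluate(rules: List[Rule], src_ip: str, destination: str, port: int) -> str:
    """Return the action of the first matching rule, or DROP if none matches."""
    for rule in rules:
        if rule.matches(src_ip, destination, port):
            return rule.action
    return "DROP"  # default deny, as in a new SDDC
```

With an empty rule list, every request to vCenter is dropped. Adding a single rule such as `Rule("allow-trusted-https", "203.0.113.0/24", "vCenter", 443, "ALLOW")` admits HTTPS from that trusted range only.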

Routing Between Your SDDC and the Connected VPC

When you create an SDDC, we pre-allocate 17 AWS Elastic Network Interfaces (ENIs) in the selected VPC owned by the AWS account you specify at SDDC creation. We assign each of these ENIs an IP address from the subnet you specify at SDDC creation, then attach each of the hosts in the SDDC cluster Cluster-1 to one of these ENIs. An additional IP address is assigned to the ENI where the active NSX Edge Appliance is running.
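The following Python sketch models the ENI layout described above. The ENI identifiers, the order in which addresses are handed out, and the `allocate_enis` helper are all hypothetical; only the count of 17 ENIs, the one-host-per-ENI attachment, and the extra address for the active NSX Edge come from the text. The sketch also skips the addresses AWS reserves in every subnet (the first four and the last).

```python
import ipaddress
from itertools import islice
from typing import List

ENI_COUNT = 17  # ENIs pre-allocated in the Connected VPC, per the text


def allocate_enis(subnet_cidr: str, hosts: List[str], edge_host: str) -> List[dict]:
    """Model the layout: one IP per ENI, one host per ENI, one extra IP for the edge."""
    if len(hosts) > ENI_COUNT:
        raise ValueError("more hosts than pre-allocated ENIs")
    if edge_host not in hosts:
        raise ValueError("edge_host must be one of the hosts")
    # hosts() already excludes the network and broadcast addresses; skipping .1-.3
    # accounts for the rest of the five addresses AWS reserves in every subnet.
    addresses = islice(ipaddress.ip_network(subnet_cidr).hosts(), 3, None)
    enis = [{"eni": f"eni-{i:02d}", "ips": [str(next(addresses))], "host": None}
            for i in range(ENI_COUNT)]
    for eni, host in zip(enis, hosts):
        eni["host"] = host  # attach each Cluster-1 host to one ENI
    # The ENI on the host running the active NSX Edge gets an additional address.
    edge_eni = next(e for e in enis if e["host"] == edge_host)
    edge_eni["ips"].append(str(next(addresses)))
    return enis
```

For a three-host cluster in a hypothetical `10.2.0.0/24` subnet, the ENIs receive `10.2.0.4` through `10.2.0.20`, fourteen of them remain unattached, and the edge host's ENI also holds `10.2.0.21`.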

This configuration, known as the Connected VPC, supports network traffic between VMs in the SDDC and native AWS instances and services with addresses in the Connected VPC's primary CIDR block. When you create or delete routed network segments connected to the default CGW, the main route table is automatically updated. When Managed Prefix List mode is enabled for the Connected VPC, the main route table and any custom route tables to which you have added the managed prefix list are also updated.

The Connected VPC (or SERVICES) interface is used for all traffic to destinations within the Connected VPC's primary CIDR block. In the default configuration, AWS services or instances that communicate with the SDDC must be in subnets associated with the main route table of the Connected VPC. If AWS Managed Prefix List Mode is enabled (see Enable AWS Managed Prefix List Mode for the Connected Amazon VPC), you can also manually add the managed prefix list to any custom route table in the Connected VPC, so that AWS services and instances using that route table can communicate with SDDC workloads over the SERVICES interface.
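The rule for which Connected VPC route tables can reach SDDC workloads reduces to a short check. In this Python sketch, `RouteTable`, `reaches_sddc`, and the prefix list ID `pl-sddc` are hypothetical; it encodes only the two cases the text describes.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class RouteTable:
    name: str
    is_main: bool  # the Connected VPC's main route table
    prefix_lists: Set[str] = field(default_factory=set)  # prefix list IDs referenced


def reaches_sddc(table: RouteTable, mpl_mode: bool, sddc_prefix_list: str) -> bool:
    """Can subnets using `table` reach SDDC workloads over the SERVICES interface?"""
    if table.is_main:
        return True  # the default configuration routes via the main route table
    # A custom table works only if Managed Prefix List Mode is on and the
    # managed prefix list has been added to that table.
    return mpl_mode and sddc_prefix_list in table.prefix_lists
```

A custom route table that references the prefix list still cannot reach the SDDC while Managed Prefix List Mode is disabled.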

When the NSX Edge appliance in your SDDC is moved to another host, either to recover from a failure or during SDDC maintenance, the IP address allocated to the appliance is moved to the new ENI (on the new host), and the main route table, along with any custom route tables that use a Managed Prefix List, is updated to reflect the change. If you have replaced the main route table or are using a custom route table but have not enabled Managed Prefix List Mode, that update fails and network traffic can no longer be routed between SDDC networks and the Connected VPC. See View Connected VPC Information and Troubleshoot Problems With the Connected VPC for more about how to use the VMware Cloud Console to see the details of your Connected VPC.
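The failure mode described above can be reproduced in a small Python model. `RouteTable`, `move_edge`, and the ENI and prefix list IDs are hypothetical. The model repoints SDDC routes in the original main route table and in custom tables that use the managed prefix list (when Managed Prefix List Mode is on), and reports any other table that still routes SDDC CIDRs to the old ENI.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class RouteTable:
    name: str
    is_main: bool  # True only for the VPC's original main route table
    prefix_lists: Set[str] = field(default_factory=set)
    routes: Dict[str, str] = field(default_factory=dict)  # destination CIDR -> ENI id


def move_edge(tables: List[RouteTable], sddc_cidrs: List[str], new_eni: str,
              mpl_mode: bool, sddc_prefix_list: str) -> List[str]:
    """Repoint SDDC routes at the edge's new ENI; return names of tables left stale."""
    stale = []
    for table in tables:
        updated = table.is_main or (mpl_mode and sddc_prefix_list in table.prefix_lists)
        if updated:
            for cidr in sddc_cidrs:
                table.routes[cidr] = new_eni
        elif any(cidr in table.routes for cidr in sddc_cidrs):
            # A replaced main table, or a custom table without the managed prefix
            # list, keeps its old target, so SDDC-bound traffic from it breaks.
            stale.append(table.name)
    return stale
```

In this model, a custom table with a static route to the old ENI is reported as stale after failover, while the same table stays current once Managed Prefix List Mode is enabled and the prefix list is attached.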

VMware Cloud on AWS provides several facilities to help you aggregate routes to the Connected VPC, other VPCs, and your VMware Managed Transit Gateways. See Enable AWS Managed Prefix List Mode for the Connected Amazon VPC.

For an in-depth discussion of SDDC network architecture and the AWS network objects that support it, read the VMware Cloud Tech Zone article VMware Cloud on AWS: SDDC Network Architecture.

Reserved Network Addresses

These ranges are reserved within the SDDC management subnet, but can be used in your on-premises networks or SDDC compute network segments.

Per RFC 3927, all of 169.254.0.0/16 is a link-local range that cannot be routed beyond a single subnet. However, with the exception of these CIDR blocks, you can use 169.254.0.0/16 addresses for your virtual tunnel interfaces. See Create a Route-Based VPN.
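Before assigning a virtual tunnel interface (VTI) address, you can check it with Python's standard `ipaddress` module. In this sketch, `vti_cidr_ok` is a hypothetical helper, and the reserved blocks are passed in by the caller. The `169.254.200.0/24` value used in the example is a placeholder, not one of the actual reserved blocks, so substitute the ranges from the reserved-address list.

```python
import ipaddress
from typing import Iterable

LINK_LOCAL = ipaddress.ip_network("169.254.0.0/16")  # RFC 3927 link-local range


def vti_cidr_ok(vti_cidr: str, reserved_blocks: Iterable[str]) -> bool:
    """True if `vti_cidr` is IPv4 link-local and overlaps none of `reserved_blocks`."""
    vti = ipaddress.ip_network(vti_cidr)
    if vti.version != 4 or not vti.subnet_of(LINK_LOCAL):
        return False
    return not any(vti.overlaps(ipaddress.ip_network(b)) for b in reserved_blocks)


reserved = ["169.254.200.0/24"]  # placeholder only; copy the real reserved blocks here
```

With that placeholder, `169.254.10.0/30` passes, `169.254.200.4/30` is rejected for overlapping a reserved block, and `10.0.0.0/30` is rejected because it is not link-local.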

Multicast Support in SDDC Networks

In SDDC networks, layer 2 multicast traffic is treated as broadcast traffic on the network segment where the traffic originates. It is not routed beyond that segment. Layer 2 multicast traffic optimization features such as IGMP snooping are not supported. Layer 3 multicast (such as Protocol Independent Multicast) is not supported in VMware Cloud on AWS.
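The delivery rule described above can be modeled in a few lines of Python. The `segment_of` and `mac_of` inventories and the `l2_delivery` helper are hypothetical. The sketch treats any destination MAC with the group (I/G) bit set, which covers both multicast and broadcast, as flooded to every other VM on the sender's segment, and never forwards such frames to another segment.

```python
from typing import Dict, List


def is_group_address(mac: str) -> bool:
    """True for multicast and broadcast MACs (least significant bit of the first octet set)."""
    return bool(int(mac.split(":")[0], 16) & 0x01)


def l2_delivery(src_vm: str, dst_mac: str,
                segment_of: Dict[str, str], mac_of: Dict[str, str]) -> List[str]:
    """Return the VMs that receive a layer 2 frame sent by `src_vm`."""
    segment = segment_of[src_vm]
    peers = sorted(vm for vm, seg in segment_of.items() if seg == segment and vm != src_vm)
    if is_group_address(dst_mac):
        # Flooded like broadcast: no IGMP snooping prunes receivers,
        # and nothing is forwarded beyond the originating segment.
        return peers
    return [vm for vm in peers if mac_of.get(vm) == dst_mac]
```

For example, with `web1` and `web2` on one segment and `db1` on another, a multicast frame from `web1` reaches only `web2`, and a multicast frame from `db1` reaches no one.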

MTU Considerations for Internal and External Traffic

- SDDC group and Direct Connect (DX) traffic share the same interface, so they must use the lower MTU value (8500 bytes) when both connections are in use.

- All VM NICs and interfaces on the same segment need to have the same MTU.

- MTU can differ between segments as long as the endpoints support Path MTU Discovery (PMTUD) and any firewalls in the path permit the ICMP messages that PMTUD relies on.

- The layer 3 (IP) MTU must be less than or equal to the maximum packet size (MTU) supported by the underlying layer 2 connection, minus any protocol overhead. In VMware Cloud on AWS, that layer 2 connection is the NSX segment, which supports layer 3 packets with an MTU of up to 8900 bytes.
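The MTU rules above can be checked mechanically. This Python sketch uses hypothetical helpers, `segment_mtu` and `path_mtu`. The first enforces the same-MTU-per-segment rule and the 8900-byte segment limit. The second returns the smallest hop MTU, which is the value PMTUD converges on when ICMP is allowed along the path.

```python
from typing import Dict, Iterable

NSX_SEGMENT_MAX_MTU = 8900       # largest layer 3 MTU on an NSX segment, per the text
SHARED_SDDC_GROUP_DX_MTU = 8500  # MTU when SDDC group and DX traffic share an interface


def segment_mtu(nic_mtus: Dict[str, int]) -> int:
    """Check that all NICs on one segment share an MTU within the segment limit."""
    if not nic_mtus:
        raise ValueError("no NICs on segment")
    values = set(nic_mtus.values())
    if len(values) != 1:
        raise ValueError(f"mixed MTUs on one segment: {sorted(values)}")
    mtu = values.pop()
    if mtu > NSX_SEGMENT_MAX_MTU:
        raise ValueError(f"MTU {mtu} exceeds the NSX segment limit of {NSX_SEGMENT_MAX_MTU}")
    return mtu


def path_mtu(hop_mtus: Iterable[int]) -> int:
    """Largest packet that crosses every hop unfragmented: the smallest hop MTU."""
    return min(hop_mtus)
```

For a hypothetical path from an 8900-byte segment through the shared SDDC group/DX interface to a 9000-byte on-premises network, `path_mtu([8900, SHARED_SDDC_GROUP_DX_MTU, 9000])` is 8500.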

Understanding SDDC Network Performance

For a detailed discussion of SDDC network performance, see the VMware Cloud Tech Zone Designlet Understanding VMware Cloud on AWS Network Performance.