If you use Kubernetes as your orchestration framework, you can install and deploy the DSM Consumption Operator to enable native, self-service consumption of VMware Data Services Manager from your Kubernetes environment.

Be familiar with basic concepts and terminology related to the DSM Consumption Operator.

Consumption Operator - The operator used to manage resources against the DSM Gateway.

Cloud Administrator - An administrator of a Kubernetes cluster that consumes DSM in a self-service manner from within Kubernetes clusters. The Cloud Administrator is responsible for installing and setting up the Consumption Operator. The Cloud Administrator account maps to a DSM user account within DSM. For information about the DSM user, see About Roles and Responsibilities in VMware Data Services Manager.

Cloud User - A developer who uses a Kubernetes cluster to consume database clusters from DSM in a self-service manner.

DSM Gateway - The gateway implementation based on Kubernetes. It is used within DSM to provide a Kubernetes API for infrastructure policies, Postgres clusters, and MySQL clusters.

Consumption Cluster - The Kubernetes cluster where the consumption operator and custom resources are deployed to use the DSM API for self-service.

Infrastructure Policy - Allows vSphere administrators to define and set limits to specific compute, storage, and network resources that DSM database workloads can use.

Supported DSM Version

The following table maps the Consumption Operator version with the DSM version.

| Consumption Operator | DSM Version |
|---|---|
| 1.0.0 | 2.0.x |
| 1.1.2 | 2.1.x |
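
The mapping above can be encoded as a small lookup for use in a pre-install check. The following is only a sketch: the function name `is_supported` is hypothetical and not part of any DSM tooling, and it assumes that an entry such as `2.0.x` means "any DSM release whose major and minor version are 2.0".

```python
# Compatibility table copied from the documentation above.
SUPPORTED_DSM = {
    "1.0.0": "2.0.x",
    "1.1.2": "2.1.x",
}


def is_supported(operator_version: str, dsm_version: str) -> bool:
    """Return True if dsm_version belongs to the series listed for operator_version.

    Assumption: "2.0.x" matches every DSM version with major.minor == 2.0.
    Operator versions that are not in the table are treated as unsupported.
    """
    series = SUPPORTED_DSM.get(operator_version)
    if series is None:
        return False
    # Compare only the major and minor components, ignoring the patch level.
    return dsm_version.split(".")[:2] == series.split(".")[:2]
```

For example, `is_supported("1.1.2", "2.1.3")` evaluates to `True`, while `is_supported("1.0.0", "2.1.0")` evaluates to `False`.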

Step 1: Requirements

Before you begin installing and deploying the DSM Consumption Operator, ensure that the following requirements are met:

- Helm v3.8 or later is installed.

- You have a DSM provider deployed and configured in your organization. For DSM installation, follow the steps in Installing and Configuring VMware Data Services Manager.

- You have a running Kubernetes cluster.
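
The Helm requirement can be verified before you start. The sketch below parses the output of `helm version --short` (for example `v3.12.0+gc9f554d`) and compares it against the 3.8 minimum. The helper names are hypothetical, and the script assumes that `helm` is on your `PATH`.

```python
import re
import subprocess

MIN_HELM = (3, 8)  # minimum major.minor stated in the requirements above


def parse_helm_version(text: str) -> tuple:
    """Extract (major, minor, patch) from a string such as 'v3.12.0+gc9f554d'."""
    match = re.search(r"v(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version found in {text!r}")
    return tuple(int(part) for part in match.groups())


def helm_is_recent_enough(text: str) -> bool:
    """Return True if the version in text is at least MIN_HELM."""
    return parse_helm_version(text)[:2] >= MIN_HELM


def installed_helm_version() -> str:
    """Run 'helm version --short' and return its output (requires helm on PATH)."""
    result = subprocess.run(
        ["helm", "version", "--short"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()
```

On a workstation with Helm installed, `helm_is_recent_enough(installed_helm_version())` reports whether the requirement is met.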

Step 2: Install the DSM Consumption Operator

This task is performed by the Cloud Administrator.

Prerequisites

As a Cloud Administrator, obtain the following details from the DSM Administrator:

- DSM username and password for the Cloud Administrator.

- DSM provider URL.

- List of infrastructure policies that are supported by the DSM provider and are allowed to be used in the given consumption cluster.

- List of backup locations that are supported by the DSM provider and are allowed to be used in the given consumption cluster.

- TLS certificate for secure communication between the consumption operator and the DSM provider. Save this certificate in a file named 'root_ca' under the directory 'consumption/'. These names are only examples. You can use other names, but make sure to use them consistently in the helm and kubectl commands during the installation.

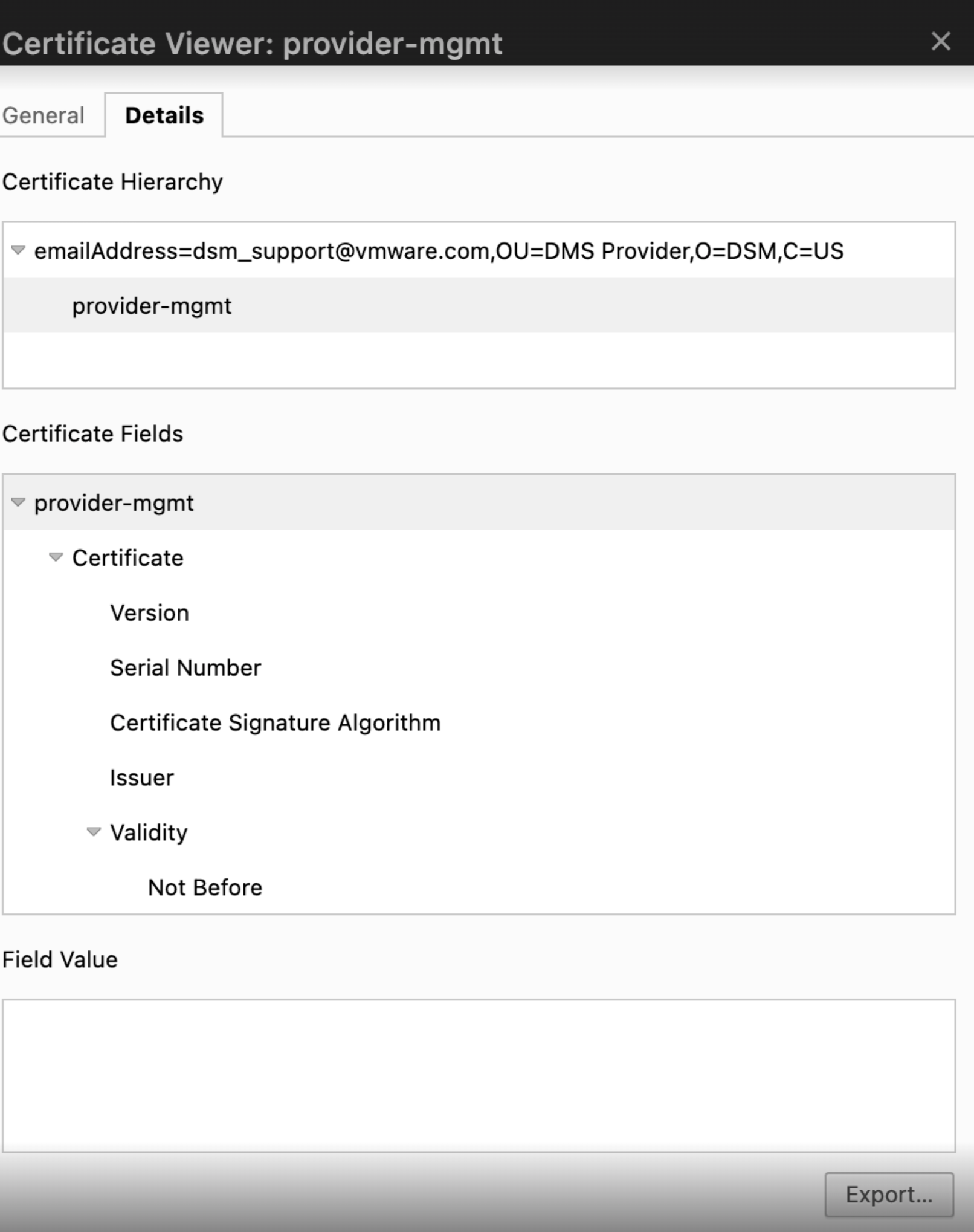

A DSM administrator needs to share the provider VM CA certificate with the Cloud Administrator. Use one of the following methods to get this certificate.

From Chrome Browser:

- Open the DSM UI portal.

- Click the icon to the left of the URL in the address bar.

- In the dropdown list, click the Connection tab > Certificate to open the Certificate Viewer window.

- Click the Details tab, click Export, and save the certificate with the .pem extension locally.

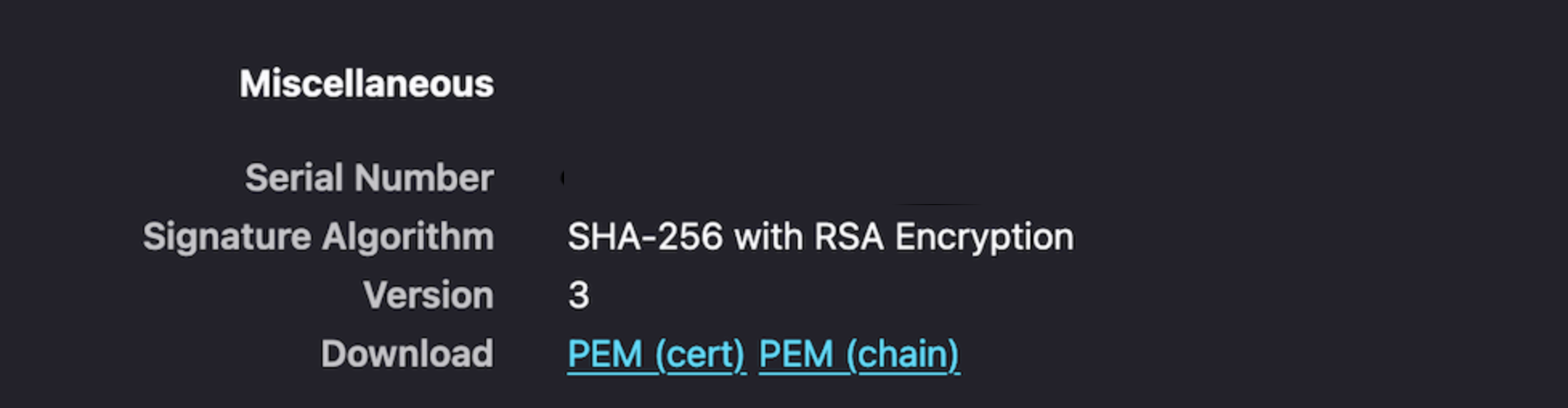

From Firefox Browser:

- Open the DSM UI portal.

- Click the lock icon to the left of the URL in the address bar.

- Click Connection Secure > More Information.

- On the Security window, click View Certificate.

- In the Miscellaneous section, click PEM (cert) to download the CA certificate for the Provider VM.
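
After you export the certificate with either browser, it can be useful to confirm that the saved file really is PEM-encoded before you reference it in later commands. The following sketch uses only the Python standard library. The function names are hypothetical, and the path `consumption/root_ca` is the example name used earlier on this page. The check covers only the PEM framing and base64 encoding. It does not verify that the certificate is the correct CA for your DSM provider.

```python
import ssl
from pathlib import Path

PEM_BEGIN = "-----BEGIN CERTIFICATE-----"
PEM_END = "-----END CERTIFICATE-----"


def count_pem_certificates(pem_text: str) -> int:
    """Count the certificate blocks in pem_text.

    Raises ValueError if a block has no END line or is not valid base64.
    This checks the encoding only, not trust or validity dates.
    """
    count = 0
    rest = pem_text
    while PEM_BEGIN in rest:
        start = rest.index(PEM_BEGIN)
        # str.index raises ValueError when the footer is missing.
        stop = rest.index(PEM_END, start) + len(PEM_END)
        # Strips the header/footer and base64-decodes the body.
        ssl.PEM_cert_to_DER_cert(rest[start:stop])
        count += 1
        rest = rest[stop:]
    return count


def check_ca_file(path: str = "consumption/root_ca") -> int:
    """Read the exported file and return how many certificates it contains."""
    return count_pem_certificates(Path(path).read_text())
```

A return value of `0` from `check_ca_file()` suggests that the export was saved in a different format (for example DER) and should be repeated.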


Procedure

Step 3: Configure a User Namespace

This task is performed by the Cloud Administrator. Once the consumption operator is deployed successfully, the Cloud Administrator can set up Kubernetes namespaces for the Cloud Users so that they can start deploying databases on DSM.

The Cloud Administrator can also have namespace-specific enforcements to allow or disallow the use of InfrastructurePolicies and BackupLocations in different namespaces.

To achieve this policy enforcement, the consumption operator introduces two custom resources, InfraPolicyBinding and BackupLocationBinding. They are simple objects with no spec field. The name of the binding object must match the name of the InfrastructurePolicy or BackupLocation that the namespace is allowed to use.
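
Because a binding carries no spec, its manifest consists only of identifying metadata. The sketch below generates such a manifest as JSON, which `kubectl apply -f` accepts in the same way as YAML. The `apiVersion` value is a placeholder, not the real API group: look up the actual group, version, and kind on your cluster (for example with `kubectl api-resources`) and pass them in. The kind names are taken from the text above, and `binding_manifest` and the sample names are hypothetical.

```python
import json

# ASSUMPTION: placeholder only. Replace with the API group/version that the
# consumption operator's CRDs register on your cluster.
PLACEHOLDER_API_VERSION = "example.invalid/v1"

# Kind names as given in the text above; confirm them against the installed CRDs.
BINDING_KINDS = ("InfraPolicyBinding", "BackupLocationBinding")


def binding_manifest(kind, name, namespace, api_version=PLACEHOLDER_API_VERSION):
    """Build a binding manifest with metadata only (no spec field).

    name must equal the name of the InfrastructurePolicy or BackupLocation
    that the namespace is allowed to use.
    """
    if kind not in BINDING_KINDS:
        raise ValueError(f"unsupported binding kind: {kind}")
    return {
        "apiVersion": api_version,
        "kind": kind,
        "metadata": {"name": name, "namespace": namespace},
    }


def to_json(manifest) -> str:
    """Serialize a manifest so it can be saved to a file for kubectl."""
    return json.dumps(manifest, indent=2)
```

For instance, `to_json(binding_manifest("InfraPolicyBinding", "dev-policy", "dev-team"))` produces a document that allows the hypothetical namespace `dev-team` to use the hypothetical policy `dev-policy`, once the placeholder `apiVersion` is replaced.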

Procedure

Results

As a Cloud Administrator, you can configure multiple namespaces with different infrastructure policies and backup locations in the same consumption cluster.

You can also set up multiple consumption clusters to connect to a single DSM provider.

You can use the same database cluster name in different namespaces or in different Kubernetes clusters. However, in DSM 2.0.x, the same namespace cannot contain a PostgresCluster and a MySQLCluster with the same name.
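
The naming restriction for DSM 2.0.x can be checked ahead of time if you keep an inventory of planned database clusters. This is a minimal sketch under that assumption. The function name and the `(kind, namespace, name)` tuple layout are hypothetical and do not come from the operator.

```python
from collections import defaultdict


def find_name_conflicts(clusters):
    """Return sorted (namespace, name) pairs that are used by more than one kind.

    clusters is an iterable of (kind, namespace, name) tuples, for example
    ("PostgresCluster", "dev-team", "orders").
    """
    kinds_by_key = defaultdict(set)
    for kind, namespace, name in clusters:
        kinds_by_key[(namespace, name)].add(kind)
    return sorted(key for key, kinds in kinds_by_key.items() if len(kinds) > 1)
```

Reusing a name across namespaces is not reported. Only a PostgresCluster and a MySQLCluster that share both namespace and name are flagged.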

Step 4: Create a Database Cluster

This task is performed by Cloud Users.

Procedure

Step 5: Connect to the Database Cluster

Use this procedure to connect to the database cluster.