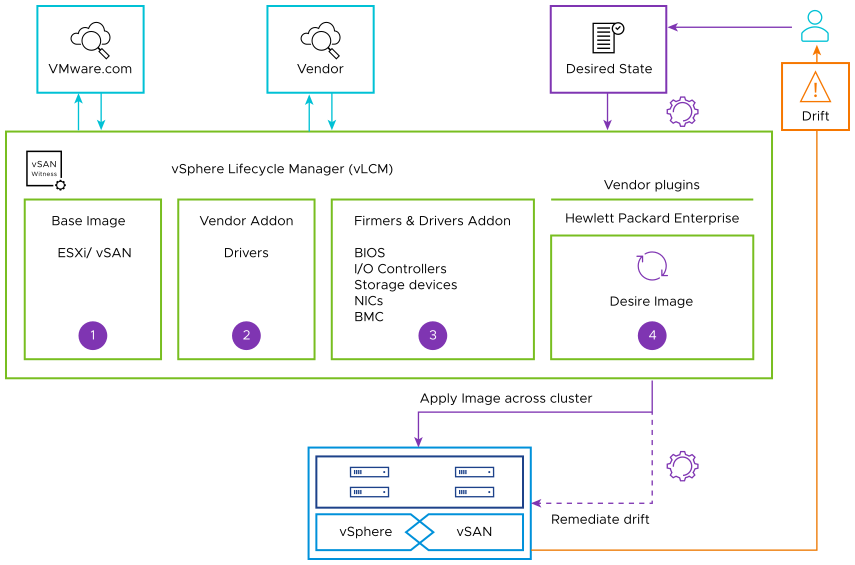

vSphere Lifecycle Manager

VMware vSphere Lifecycle Manager enables centralized and simplified lifecycle management for ESXi hosts using images and baselines. Lifecycle management refers to the process of installing software, maintaining it through updates/upgrades, and decommissioning it. In the context of maintaining a vSphere environment, the clusters and hosts in particular, lifecycle management refers to tasks such as installing ESXi and firmware on new hosts and updating or upgrading the ESXi version and firmware when required.

vSphere Lifecycle Manager uses a desired-state model for all lifecycle operations:

- Monitors compliance drift.

- Remediates back to the desired state.

It is built to manage hosts at the cluster level, including:

- Hypervisor

- Drivers

- Firmware

A modular framework supports vendor firmware plugins.
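
The desired-state model described above can be illustrated with a minimal Python sketch. It is not how vSphere Lifecycle Manager is implemented; the `SoftwareState` class, the `compliance_drift` function, and all version strings are hypothetical names and placeholder values chosen for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class SoftwareState:
    """Hypothetical, simplified view of what runs on one host."""
    hypervisor: str                               # ESXi version (placeholder string)
    drivers: dict = field(default_factory=dict)   # driver name -> version
    firmware: str = ""                            # firmware bundle version


def compliance_drift(desired, actual):
    """Return {item: (desired_value, actual_value)} for every mismatch.

    An empty dict means the host matches the desired state.
    """
    drift = {}
    if desired.hypervisor != actual.hypervisor:
        drift["hypervisor"] = (desired.hypervisor, actual.hypervisor)
    if desired.firmware != actual.firmware:
        drift["firmware"] = (desired.firmware, actual.firmware)
    for name, version in desired.drivers.items():
        found = actual.drivers.get(name)  # None if the driver is missing
        if found != version:
            drift["driver:" + name] = (version, found)
    return drift


# Placeholder versions, not real release numbers.
desired = SoftwareState("hv-2", {"nic": "1.4"}, "fw-2.1")
host = SoftwareState("hv-1", {"nic": "1.4"}, "fw-2.1")
drift = compliance_drift(desired, host)
```

In this sketch, monitoring corresponds to calling `compliance_drift` for each host in a cluster, and remediation corresponds to bringing every reported item back to the desired value.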

vSphere Lifecycle Manager Overview:

vSphere Lifecycle Manager is a service that runs in vCenter Server and uses the embedded vCenter Server PostgreSQL database. No additional installation is required to start using this feature. Upon deploying the vCenter Server appliance, the vSphere Lifecycle Manager user interface becomes automatically enabled in the HTML-based vSphere Client.

Baselines and baseline groups or Lifecycle Manager images can be leveraged for host patching and host upgrade operations. VM hardware and VMware Tools versioning for VMs are included.

Lifecycle Manager can work in an environment that has access to the Internet, directly or through a proxy server. It can also work in a secured network without access to the Internet. In such cases, the Update Manager Download Service (UMDS) can be used to download updates to the vSphere Lifecycle Manager depot, or updates can be imported manually.

vSphere Lifecycle Manager Operations:

The basic Lifecycle Manager operations are related to maintaining an environment that is up-to-date and ensuring smooth and successful updates and upgrades of the ESXi hosts.

| Operation | Description |
|---|---|
| Compliance Check | An operation of scanning ESXi hosts to determine their level of compliance with a baseline attached to the cluster or with the image that the cluster uses. The compliance check does not alter the object. |
| Remediation Pre-Check | An operation that is performed before remediation to ensure the good health of a cluster and that no issues occur during the remediation process. |
| Remediation | An operation of applying software updates to the ESXi hosts in a cluster. During remediation, software is installed on the hosts. Remediation makes a non-compliant host compliant with the baselines attached to the cluster or with the image that the cluster uses. |
| Staging | An operation that is available only for clusters managed with baselines or baseline groups. When patches or extensions are staged to an ESXi host, VIBs are downloaded to the host without applying them immediately. Staging makes the patches and extensions available locally on the hosts. |
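
The way these operations relate to one another can be sketched in a few lines of Python. This is an illustrative model only: the `plan_operations` function and the step names are hypothetical, and treating any pre-check issue as a reason to stop is an assumption made for the sketch, not documented product behavior.

```python
def plan_operations(is_compliant, precheck_issues):
    """Return the ordered list of operations to run for one cluster.

    is_compliant     -- result of the (read-only) compliance check
    precheck_issues  -- list of problems reported by the pre-check
    """
    steps = ["compliance_check"]      # scanning never alters the hosts
    if is_compliant:
        return steps                  # nothing to remediate
    steps.append("remediation_pre_check")
    if precheck_issues:
        return steps                  # assumption: resolve issues before remediating
    steps.append("remediation")       # software is installed on the hosts here
    return steps
```

For a non-compliant cluster with a clean pre-check, `plan_operations(False, [])` returns all three steps in order. Staging is left out because it applies only to clusters managed with baselines or baseline groups.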

Several components make up Lifecycle Manager and work together to deliver the functionality and coordinate the major lifecycle management operations that it provides. The depot is an important component in the architecture because it contains all software updates that are used to create baselines and images. Lifecycle Manager can only be used if the depot is populated with components, add-ons, base images, and legacy bulletins and patches.

Secure Hashing and Signature Verification in vSphere Lifecycle Manager:

vCenter Server performs an automatic hash check on all software that vSphere Lifecycle Manager downloads from online depots or from a UMDS-created depot. Similarly, it performs an automatic checksum verification on all software that is manually imported into the depot. The hash check verifies the SHA-256 checksum of the downloaded software to ensure its integrity. During remediation, before vSphere Lifecycle Manager installs any software on a host, the ESXi host checks the signature of the installable units to verify that they were not corrupted or altered during the download.

When an ISO image is imported into the vSphere Lifecycle Manager depot, vCenter Server performs an MD5 hash check on the ISO image to validate its MD5 checksum. During remediation, before the ISO image is installed, the ESXi host verifies the signature inside the image. In addition, if an ESXi host is configured with UEFI Secure Boot, the ESXi host performs full signature verification of each package that is installed on the host every time the host boots.
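
An administrator who wants to run the same kind of checksum comparison by hand before importing a file can do so with Python's standard `hashlib` module. The sketch below is a generic file-checksum check, not vCenter Server's implementation, and the function names `file_digest` and `verify_checksum` are hypothetical. It covers only the hash check; the signature verification performed by the ESXi host is a separate mechanism and is not shown.

```python
import hashlib


def file_digest(path, algorithm="sha256", chunk_size=1024 * 1024):
    """Compute the hex digest of a file, reading it in chunks."""
    hasher = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        # Read fixed-size chunks so large ISO files do not need to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def verify_checksum(path, expected_hex, algorithm="sha256"):
    """Return True if the file's digest matches the published checksum."""
    return file_digest(path, algorithm) == expected_hex.strip().lower()
```

Passing `algorithm="md5"` gives the MD5 comparison described for ISO images, while the default matches the SHA-256 check used for depot downloads.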

vSphere Lifecycle Manager vSAN Integration:

vSAN 7 (U3 and greater) provides support for managing the lifecycle of the vSAN witness host appliance for vSAN stretched cluster and 2-node topologies. Once a stretched cluster or 2-node cluster that meets the criteria is managed by Lifecycle Manager, the witness host appliance is also managed. Hosts and witness appliances are updated in the recommended order to maintain availability.

General Tips for Upgrades/Patching vSphere

Beyond the act of using vSphere Lifecycle Manager, these are some best practices and tips for ensuring success during patching and upgrades. These include technical concepts, as well as people and process improvements.

Patching vCenter Server doesn't impact workloads, and vMotion can move workloads seamlessly (infrastructure permitting) so that ESXi can be patched.

Ensure the vCenter Server Appliance (VCSA) root and vCenter Single Sign-On administrator account passwords are stored correctly and that the accounts are not locked out. By default, the VCSA root account locks itself after 90 days. Prior to patching, verify that these accounts work correctly, recovering the passwords if needed (which may require a restart of vCenter Server), then change them after patching/upgrading.

Ensure that time settings are correct on the appliance. Many issues on systems can be traced to incorrect time synchronization.

Ensure that vCenter Server’s file-based backup and restore is configured and generating scheduled output. This can be configured through the Virtual Appliance Management Interface (VAMI) on port 5480/TCP on the VCSA.

Take a snapshot of the VCSA prior to the update, preferably from the ESXi host client after the VCSA has been shut down gracefully and cleanly. Snapshots have performance impacts, so delete the snapshot once the upgrade has been verified.

If it has been many months since a system has been restarted, it is recommended to restart it as-is, and let it restore to good health. Otherwise, any pre-existing problems become more difficult to troubleshoot.

If vSphere HA has been configured with custom isolation addresses (for example, das.isolationaddress), ensure that no isolation address is set to the vCenter Server address; otherwise, a vCenter Server outage during patching could trigger an HA failover.

Where possible, minimize the number of plugins installed in vCenter Server. Modern zero-trust security architecture practices discourage connecting systems in these ways, to make life harder for attackers. Fewer items installed means fewer compatibility checks are necessary.

Minimize additional installed VIBs on ESXi, and use "stock" VMware ESXi versions instead of OEM customized ones, whenever possible. This helps avoid issues with VIB version conflicts that can arise from vendor packages. vSphere Lifecycle Manager makes it easy to add OEM driver packages and additional software.

Use Distributed Resource Scheduler (DRS) groups and affinity rules to keep vCenter Server on a particular ESXi host. Then, when issues arise, the VCSA can be found easily using the ESXi host client. Ensure that a management workstation can get to the host client interface on that ESXi host.

Don't forget about Platform Services Controllers (PSCs). They are considered part of vCenter Server and all PSCs that replicate together should be updated before patching vCenter Server. Ensure that NTP, DNS, and all the other considerations above are checked and valid for the PSCs, too.

vCenter Server should always be updated before ESXi, so the overall order for vSphere is: PSCs, then vCenter Servers, then ESXi hosts.

After updates, clear browser cache to ensure that the latest vSphere Client components download properly.
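
Two of the tips above lend themselves to a small planning script: the update order (PSCs, then vCenter Servers, then ESXi hosts) and the check that no vSphere HA isolation address points at vCenter Server. The following Python sketch is illustrative only. It works on plain lists supplied by the administrator rather than querying vSphere, and the function names, component names, and addresses are hypothetical (the addresses come from the 192.0.2.0/24 documentation range).

```python
# Lower number = updated earlier, following the order given in the tips.
UPDATE_ORDER = {"psc": 0, "vcenter": 1, "esxi": 2}


def sort_for_update(components):
    """Sort (name, kind) pairs into PSC -> vCenter Server -> ESXi order.

    sorted() is stable, so items of the same kind keep their input order.
    """
    return sorted(components, key=lambda item: UPDATE_ORDER[item[1]])


def isolation_address_conflicts(isolation_addresses, vcenter_addresses):
    """Return isolation addresses that are also vCenter Server addresses."""
    return sorted(set(isolation_addresses) & set(vcenter_addresses))


inventory = [
    ("esx-01", "esxi"),
    ("vc-01", "vcenter"),
    ("psc-01", "psc"),
    ("esx-02", "esxi"),
]
plan = sort_for_update(inventory)
conflicts = isolation_address_conflicts(
    ["192.0.2.1", "192.0.2.10"],   # values configured as isolation addresses
    ["192.0.2.10"],                # address of vCenter Server
)
```

A non-empty `conflicts` list means the HA isolation addresses should be changed before vCenter Server is patched.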

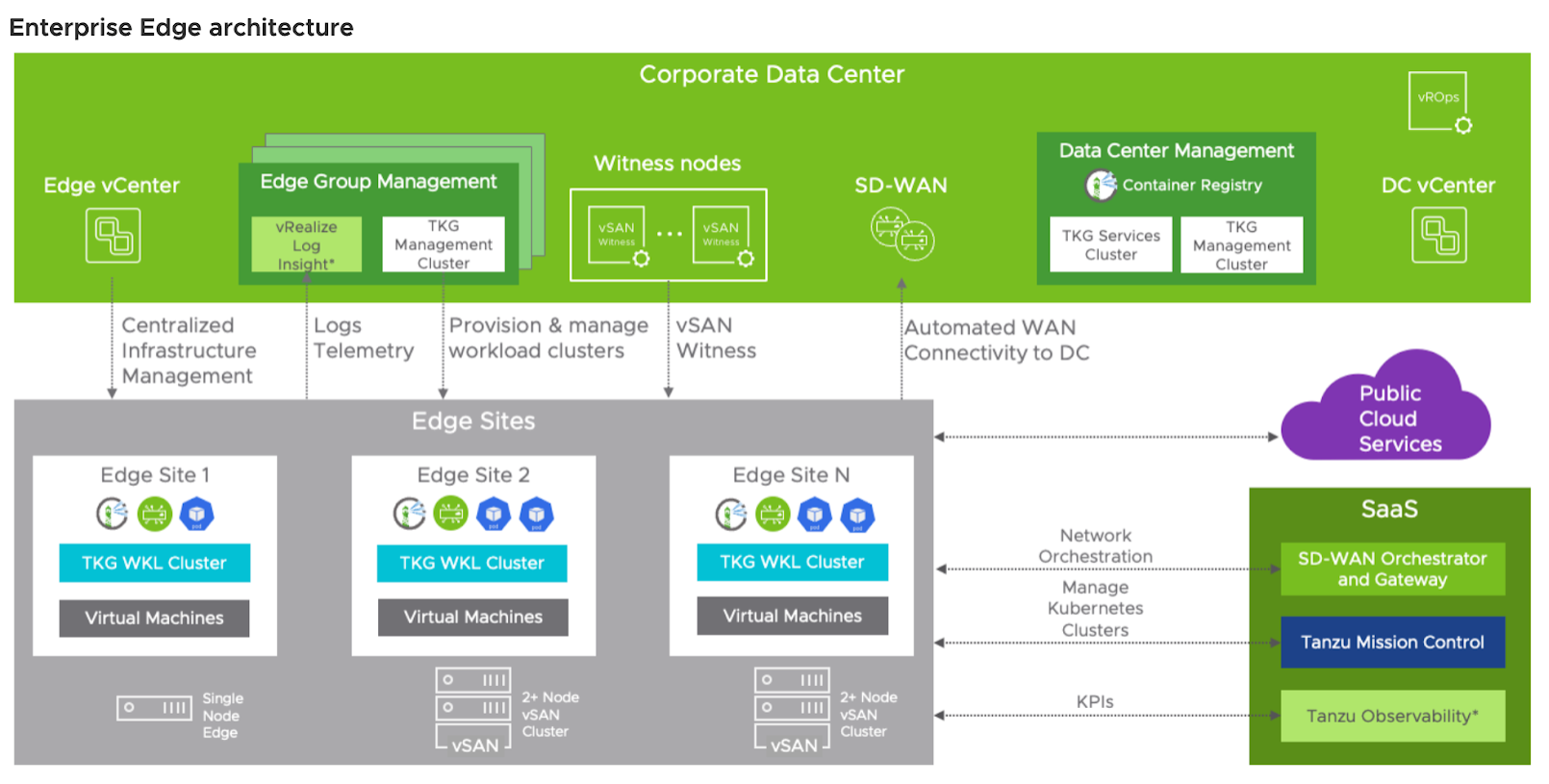

Edge Compute Stack 3.0

VMware Edge Compute Stack is an edge-optimized runtime and orchestration platform for frictionless management of edge apps and infrastructure across many sites with limited resources. It runs operational workloads, including real-time applications, and its simplified operations reduce complexity and cost. The flexible platform supports ever-changing edge computing and application needs. The purpose of this document is to provide a high-level overview of the current Edge Compute architecture, along with an in-depth explanation of the solution components and design recommendations for them.

VMware Edge Compute Stack (ECS) is a purpose-built platform that is designed to help organizations achieve their business goals by leveraging a range of powerful VMware technologies. This comprehensive platform consists of infrastructure and operation components, as well as enterprise-ready edge native application solutions. Additionally, it offers a variety of optional components for integrated networking, security, and monitoring, providing businesses with a single, centralized solution for their IT and OT needs.

By leveraging the ECS, organizations can enjoy improved efficiency, agility, and scalability, as well as reduced operational costs and complexity. With the ability to easily deploy, manage, and monitor their infrastructure and applications at the edge, the ECS empowers businesses to achieve their goals faster and more efficiently, while also delivering a more seamless and reliable experience for end-users.

Components

The current release of VMware ECS is built on the foundational VMware vSphere hyper-converged infrastructure (HCI) technologies and VMware Tanzu for workload management and Kubernetes operations.

- vCenter - Central management for vSphere environment used to manage data center and Edge hosts.

- vSphere ESXi – Hypervisor and a virtualization platform that abstracts computing, storage, and networking on server hosts.

- Tanzu Kubernetes – Suite of Kubernetes-related products that helps users run and manage multiple Kubernetes clusters. Tanzu Kubernetes Grid, or TKG, is an upstream conformant Kubernetes runtime from VMware used to deploy and run Kubernetes clusters in public and private clouds.

- Tanzu Mission Control - Centralized Kubernetes Cluster Management platform delivered as SaaS for consistently operating Kubernetes and modern applications across multiple teams and cloud infrastructures.

- VMware Edge Cloud Orchestrator (VECO) telemetry – Provides visibility into application traffic to or from the workloads hosted on ECS. VECO telemetry functionality is currently delivered by Edge Intelligence (EI), an AIOps solution that leverages big data and machine learning to accelerate problem resolution, improve visibility and insight, and provide proactive alerting.