NSX Advanced Load Balancer Service Engines (SEs) can run into CPU, memory, or packets-per-second (PPS) exhaustion while performing application delivery tasks. To increase the capacity of a load-balanced virtual service, NSX Advanced Load Balancer must increase the resources dedicated to that virtual service.

The NSX Advanced Load Balancer Controller can migrate a virtual service to an unused SE, or scale out the virtual service across multiple SEs for even greater capacity. This allows multiple active SEs to concurrently share the workload of a single virtual service.

NSX Advanced Load Balancer Data Plane Scaling Methods

NSX Advanced Load Balancer supports three techniques to scale data plane performance:

Vertical scaling of individual SE performance

Native horizontal scaling of SEs in a group

BGP-based horizontal scaling of SEs in a group

In vertical scaling, the resources allocated to the virtual machine running the SE are increased manually, and the virtual machine must be rebooted. The physical limits of a single virtual machine restrict this method. For instance, an SE cannot consume more resources than its physical host provides.

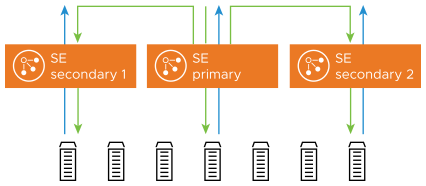

In horizontal scaling, a virtual service is placed on additional Service Engines. The first SE on which the virtual service is placed is called the primary SE and all the additional SEs are called secondary SEs for the virtual service.

With native scaling, the primary SE receives all connections for the virtual service and distributes them across the secondary SEs. As a result, all virtual service traffic is routed through the primary SE. Eventually, the primary SE's packet processing capacity reaches a limit. Although the secondary SEs might have spare capacity, the primary SE cannot forward enough traffic to use it. The packet processing capacity of the primary SE therefore determines how effective native scaling can be.

For instance, once a virtual service is scaled out to four SEs (one primary SE and three secondary SEs), the primary SE's packet processing capacity reaches its limit, so scaling the virtual service out to a fifth Service Engine brings only marginal benefit.
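To make the bottleneck concrete, here is a deliberately simplified Python model. It assumes every SE has the same processing capacity and that the primary SE's forwarding rate is a fixed ceiling on the whole virtual service. The function name and all numbers are hypothetical; real limits depend on traffic mix, SE sizing, and the primary's own share of the work.

```python
# Hypothetical capacity model; the figures below are illustrative, not
# measured NSX Advanced Load Balancer numbers.


def native_scaling_throughput(num_ses: int, se_capacity_pps: float,
                              primary_forward_limit_pps: float) -> float:
    """Estimate aggregate throughput of a natively scaled virtual service.

    Assumes each SE can process se_capacity_pps on its own, but every
    ingress packet first passes through the primary SE, which can handle
    at most primary_forward_limit_pps in total.
    """
    if num_ses < 1:
        raise ValueError("a virtual service needs at least one SE")
    processing_capacity = num_ses * se_capacity_pps
    # The primary SE is the single ingress point, so it caps the total.
    return min(processing_capacity, primary_forward_limit_pps)


if __name__ == "__main__":
    previous = 0.0
    for n in range(1, 7):
        total = native_scaling_throughput(n, se_capacity_pps=1.0e6,
                                          primary_forward_limit_pps=4.2e6)
        gain = total - previous
        print(f"{n} SEs: {total / 1e6:.1f} Mpps (gain {gain / 1e6:.1f})")
        previous = total
```

With these made-up figures, the first four SEs each add 1.0 Mpps, the fifth adds only 0.2 Mpps, and a sixth adds nothing, which mirrors the diminishing return described above.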

To scale beyond native scaling's practical limit of four Service Engines, NSX Advanced Load Balancer supports BGP-based horizontal scaling. This method relies on Route Health Injection (RHI) and Equal-Cost Multi-Path (ECMP) routing, and requires manual intervention to scale the load balancing infrastructure. For more information, see BGP Support for Scaling Virtual Services.
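With BGP-based scaling, each SE advertises a route to the virtual service IP and the upstream router spreads flows across those SEs using ECMP, so no single SE has to forward traffic for the others. The Python sketch below shows generic ECMP-style flow hashing. The SHA-256 hash, the addresses, and the function and class names are assumptions for illustration; this is neither NSX Advanced Load Balancer code nor any particular router's algorithm.

```python
import hashlib
from typing import NamedTuple, Sequence


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str


def ecmp_next_hop(flow: FiveTuple, next_hops: Sequence[str]) -> str:
    """Choose one of several equal-cost next hops for a flow.

    Generic illustration only: real routers use their own, often
    vendor-specific, hash functions and field selections.
    """
    if not next_hops:
        raise ValueError("no equal-cost next hops available")
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    # Same 5-tuple -> same hash -> same SE, so a flow is never split.
    index = int.from_bytes(digest[:8], "big") % len(next_hops)
    return next_hops[index]


# Hypothetical SE interface addresses, each advertising the same VIP via BGP.
ses = ["10.10.1.11", "10.10.1.12", "10.10.1.13", "10.10.1.14", "10.10.1.15"]
flow = FiveTuple("198.51.100.7", "203.0.113.10", 53211, 443, "tcp")
print(ecmp_next_hop(flow, ses))
```

Because the choice depends only on the flow's 5-tuple, every packet of a connection reaches the same SE, while different connections spread across all advertising SEs.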

Both horizontal methods can be used in combination. Native scaling requires no changes to the first SE; instead, it relies on distributing load to the additional SEs. Adding capacity this way requires no changes to the network or the applications.

Native Service Engine Scaling Out

During normal steady-state operation, a single SE can handle all traffic. The MAC address of this SE responds to any Address Resolution Protocol (ARP) requests for the virtual service IP.

As traffic increases beyond the capacity of a single SE, the NSX Advanced Load Balancer Controller can add one or more SEs to the virtual service. The added SEs may already be processing traffic for other virtual services, or they can be newly created for this task. Existing SEs can be added within a couple of seconds, whereas instantiating a new SE VM may take up to several minutes, depending on the time needed to copy the SE image to the virtual machine's host.

Once the new SEs are configured (both for networking and configuration sync), the first SE, known as the primary, will begin forwarding a percentage of inbound client traffic to the new SE. Packets will flow from the client to the MAC address of the primary SE, then be forwarded (at layer 2) to the MAC address of the new SE. This secondary SE will terminate the Transmission Control Protocol (TCP) connection, process the connection and/or request, then load balance the connection/request to the chosen destination server.
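The forwarding step can be pictured with the Python model below. It assumes the primary keeps a per-flow table and assigns each new flow to an SE by weighted random choice, then pins all later packets of that flow to the same SE. NSX Advanced Load Balancer's actual selection algorithm is not described in this section; the class, SE names, and weights are hypothetical.

```python
import random
from dataclasses import dataclass, field


@dataclass
class PrimarySE:
    """Hypothetical model of how a primary SE hands out new flows.

    weights maps each eligible SE (including the primary itself) to the
    relative share of new flows it should receive.
    """
    name: str
    weights: dict[str, float]
    flow_table: dict[tuple, str] = field(default_factory=dict)
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def dispatch(self, flow_key: tuple) -> str:
        """Return the SE that should process a packet of this flow."""
        owner = self.flow_table.get(flow_key)
        if owner is None:
            # New flow: pick an SE according to the configured shares.
            names = list(self.weights)
            owner = self.rng.choices(names, weights=[self.weights[n] for n in names])[0]
            self.flow_table[flow_key] = owner
        # Later packets of the flow stay on the same SE; if the owner is a
        # secondary, the primary forwards the frame to that SE's MAC address.
        return owner


primary = PrimarySE("se-1", {"se-1": 0.4, "se-2": 0.3, "se-3": 0.3})
print(primary.dispatch(("198.51.100.7", 53211, "203.0.113.10", 443)))
```

Pinning flows in a table is what lets the secondary SE terminate the TCP connection: once chosen, it must see every subsequent packet of that connection.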

The secondary SE will source NAT the traffic from its IP address when load balancing the flow to the chosen server. Servers will respond to the source IP of the connection (the SE), ensuring a symmetrical return path from the server to the SE that owns the connection.
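The return-path symmetry follows from the choice of source address. In this Python sketch, the secondary SE opens each server-side connection from its own IP and records which client connection it belongs to, so a server response addressed to that IP can be matched back to the client. The class name, the port range starting at 10000, and the addresses are hypothetical, and port reuse and exhaustion are ignored.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Iterator


@dataclass
class SecondarySE:
    """Hypothetical model of a secondary SE's source NAT toward servers.

    The SE terminates the client connection and opens the server-side
    connection from its own IP, so server responses return to this SE.
    """
    se_ip: str
    _next_port: Iterator[int] = field(default_factory=lambda: count(10000))
    _clients: dict[int, tuple[str, int]] = field(default_factory=dict)

    def open_server_side(self, client: tuple[str, int],
                         server: tuple[str, int]) -> tuple[str, int, str, int]:
        """Return (src_ip, src_port, dst_ip, dst_port) for the server-side connection."""
        port = next(self._next_port)
        self._clients[port] = client
        return (self.se_ip, port, server[0], server[1])

    def client_for_response(self, dst_ip: str, dst_port: int) -> tuple[str, int]:
        """Map a server response (addressed to this SE) back to its client."""
        if dst_ip != self.se_ip:
            raise ValueError("response was not addressed to this SE")
        return self._clients[dst_port]


se = SecondarySE("10.10.1.12")
conn = se.open_server_side(("198.51.100.7", 53211), ("192.168.20.5", 80))
print(conn, "->", se.client_for_response(conn[0], conn[1]))
```

Because the server only ever sees the SE's address as the source, it has no way to reply to anything but the SE that owns the connection.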

For OpenStack with standard Neutron, such behavior presents a security violation. To avoid this, it is recommended to use port security.

If you (the administrator) want direct control over how an SE routes responses to clients, you can use the CLI (or REST API) to set se_tunnel_mode, as shown:

```
> configure serviceenginegroup Default-Group
serviceenginegroup> se_tunnel_mode 1
serviceenginegroup> save
```

Tunnel mode values are:

0 (default) — Automatic, based on customer environment

1 — Enable tunnel mode

2 — Deactivate tunnel mode

After changing the tunnel mode, reboot the SE:

```
> reboot serviceengine
```
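If you script this change, validating the value first avoids pushing an unsupported mode. The Python sketch below encodes the three documented values and builds the same CLI lines used for Default-Group; the enum and function are hypothetical helpers, not part of the product, and it assumes you feed the lines to the CLI yourself. The SE still needs the reboot shown above.

```python
from enum import IntEnum


class SeTunnelMode(IntEnum):
    """se_tunnel_mode values as listed above."""
    AUTOMATIC = 0    # default: chosen automatically for the environment
    ENABLED = 1      # enable tunnel mode
    DEACTIVATED = 2  # deactivate tunnel mode


def tunnel_mode_commands(se_group: str, mode: int) -> list[str]:
    """Return the CLI lines that set se_tunnel_mode on an SE group.

    Raises ValueError for any value other than 0, 1, or 2.
    """
    checked = SeTunnelMode(mode)
    return [
        f"configure serviceenginegroup {se_group}",
        f"se_tunnel_mode {int(checked)}",
        "save",
    ]


for line in tunnel_mode_commands("Default-Group", SeTunnelMode.ENABLED):
    print(line)
```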

Native Service Engine Scaling In

In this mode, NSX Advanced Load Balancer is effectively load balancing the load balancers, which provides a native ability to expand or shrink capacity on the fly.

To scale in, NSX Advanced Load Balancer reverses the process. By default, a secondary SE that is being removed is given 30 seconds for its active connections to complete. At the end of this period, the secondary terminates any remaining connections. Subsequent packets for those connections are then handled by the primary SE or, if the virtual service was distributed across three or more SEs, may hash to any of the remaining SEs. This timeout can be changed with the following SE group CLI command: vs_scalein_timeout <seconds>.
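The scale-in timeline can be sketched in Python as below. To show which connections the timeout affects, the model assumes each connection's natural end time is known in advance, which is not true in practice. The 30-second default comes from the text and corresponds to vs_scalein_timeout; the class, functions, and CRC32 rehash are hypothetical stand-ins.

```python
import zlib
from dataclasses import dataclass

DEFAULT_SCALEIN_TIMEOUT_S = 30  # default drain period from the text


@dataclass
class Connection:
    conn_id: str
    closes_at_s: float  # hypothetical: natural end time, seconds after scale-in starts


def drain(connections: list[Connection],
          timeout_s: float = DEFAULT_SCALEIN_TIMEOUT_S) -> tuple[list[str], list[str]]:
    """Split a scaled-in secondary's connections into those that finish
    within the drain window and those terminated when it expires."""
    finished = [c.conn_id for c in connections if c.closes_at_s <= timeout_s]
    terminated = [c.conn_id for c in connections if c.closes_at_s > timeout_s]
    return finished, terminated


def new_owner(flow_key: str, remaining_ses: list[str]) -> str:
    """Pick the SE that handles later packets of a terminated flow.

    Hypothetical CRC32 hash; the product's real hash is not documented here.
    With only the primary left, every flow maps to it.
    """
    return remaining_ses[zlib.crc32(flow_key.encode()) % len(remaining_ses)]


finished, terminated = drain([Connection("a", 5.0), Connection("b", 45.0)])
print(finished, terminated, [new_owner(c, ["se-1", "se-3"]) for c in terminated])
```

Raising the timeout lets more long-lived connections finish on the departing SE, at the cost of a slower scale-in.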

Distribution

When a virtual service is scaled across multiple Service Engines, the load may not be split evenly. The primary SE must make a load balancing decision to determine which SE handles each new connection and then forward the ingress packets. Because of this extra work, it carries a higher workload than the secondary SEs and may therefore own a smaller percentage of connections. The primary automatically adjusts the percentage of traffic sent to each eligible SE based on available CPU.
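One simple way to picture a CPU-based adjustment is a proportional split, where each eligible SE receives new connections in proportion to its spare CPU. This is a hypothetical Python policy for illustration, not the algorithm NSX Advanced Load Balancer uses, which this section does not specify; the SE names and CPU figures are made up.

```python
def traffic_shares(idle_cpu: dict[str, float]) -> dict[str, float]:
    """Hypothetical CPU-aware split of new connections.

    idle_cpu maps each eligible SE to its spare CPU fraction (0.0 to 1.0).
    Each SE's share is proportional to its spare CPU, so a primary that
    spends CPU on dispatching ends up with a smaller share.
    """
    total = sum(idle_cpu.values())
    if total <= 0:
        raise ValueError("no spare CPU on any eligible SE")
    return {se: idle / total for se, idle in idle_cpu.items()}


# The primary spends CPU on dispatching, so it has less spare capacity.
shares = traffic_shares({"primary": 0.2, "se-2": 0.4, "se-3": 0.4})
print({se: round(share, 2) for se, share in shares.items()})
```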