This section covers virtual service optimization.

The following are the types of virtual service optimization:

Scaling out a virtual service to an additional NSX Advanced Load Balancer Service Engine (SE).

Scaling in a virtual service back to fewer SEs.

Migrating a virtual service from one SE to another SE.

NSX Advanced Load Balancer supports scaling virtual services, which distributes the virtual service workload across multiple SEs to provide increased capacity on demand. This extends the throughput capacity of the virtual service and increases the level of high availability.

Scaling out a virtual service distributes that virtual service to an additional SE. By default, NSX Advanced Load Balancer supports a maximum of four SEs per virtual service when native load balancing of SEs is in play. In BGP environments, the maximum can be increased to 64.

Scaling in a virtual service reduces the number of SEs over which its load is distributed. A virtual service requires at least one SE at all times.
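The limits described above can be expressed as a small guard. This is a minimal sketch, not product code: the constant and function names are hypothetical, and it assumes that 64 is the ceiling for BGP environments and four is the ceiling with native load balancing of SEs.

```python
# Limits taken from the text above; all names here are hypothetical.
MAX_SES_NATIVE = 4   # default maximum with native load balancing of SEs
MAX_SES_BGP = 64     # value the maximum can be raised to in BGP environments
MIN_SES = 1          # a virtual service needs at least one SE


def can_scale_out(current_ses: int, bgp: bool = False) -> bool:
    """Return True if one more SE may be added to the virtual service."""
    limit = MAX_SES_BGP if bgp else MAX_SES_NATIVE
    return current_ses < limit


def can_scale_in(current_ses: int) -> bool:
    """Return True if one SE may be removed without going below the minimum."""
    return current_ses > MIN_SES
```

For example, `can_scale_out(4)` is false under these assumptions, while `can_scale_out(4, bgp=True)` is true.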

Scaling NSX Advanced Load Balancer Virtual Services in VMware/OpenStack with Nuage

For VMware deployments and OpenStack deployments with Nuage, the scaled out traffic behaves as follows:

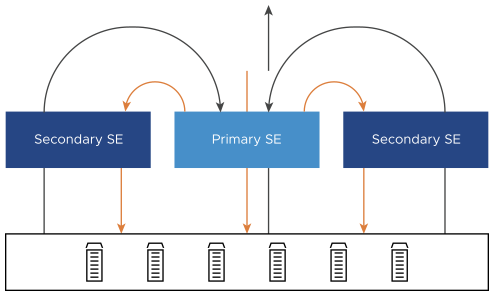

The virtual service IP is GARPed by the primary SE. All inbound traffic from clients will arrive at this SE.

The primary SE will handle a percentage of traffic as expected.

At Layer 2, excess traffic is forwarded to the MAC address of the additional secondary Service Engine(s).

The scaled-out traffic to the secondary SEs is processed as normal. The SEs will change the source IP address of the connection to their own IP address within the server network.

The servers will respond to the source IP address of the traffic, which can be the primary or one of the secondary SEs.

Secondary SEs will forward the response traffic back to the client, bypassing the primary SE.
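The steps above can be traced with a toy model. This is an illustrative sketch only: the `ServiceEngine` class, the addresses, and the round-robin split are assumptions, since the text states only that the primary SE handles a percentage of traffic and forwards the excess.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class ServiceEngine:
    name: str
    mac: str       # Layer 2 address the primary forwards excess traffic to
    snat_ip: str   # the SE's own IP address within the server network


def distribute(connections, primary, secondaries):
    """Map each connection to the SE that processes it.

    Round-robin is an assumption made for illustration.
    """
    engines = cycle([primary, *secondaries])
    return {conn: next(engines) for conn in connections}


def trace(se, primary):
    """Return the hops of one connection processed by `se`."""
    hops = [f"client -> {primary.name} (owns the virtual service IP)"]
    if se is not primary:
        # Excess traffic is handed over at Layer 2.
        hops.append(f"{primary.name} -> {se.name} via MAC {se.mac}")
    hops.append(f"{se.name} -> server (source IP rewritten to {se.snat_ip})")
    hops.append(f"server -> {se.name} (reply to {se.snat_ip})")
    # A secondary SE replies to the client without passing through the primary.
    hops.append(f"{se.name} -> client")
    return hops
```

Calling `trace(secondary, primary)` yields five hops, with the Layer 2 handover as the second hop, while `trace(primary, primary)` yields four.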

Scaling NSX Advanced Load Balancer Virtual Services in OpenStack with Neutron

For OpenStack deployments with native Neutron, the server response traffic sent to the secondary SEs will be forwarded through the primary SE before returning to the original client.

NSX Advanced Load Balancer issues an alert if the average CPU utilization of an SE exceeds the designated limit during a five-minute polling period. Alerts for additional thresholds can be configured for a virtual service. The process of scaling in or scaling out must be initiated by an administrator. The CPU Threshold field defines the minimum and maximum CPU percentages.
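The threshold check can be pictured as follows. This is a hedged sketch, not the product's implementation: the function name, the return labels, and the sample values are hypothetical, and it assumes the samples passed in all belong to one five-minute polling period.

```python
from statistics import mean


def evaluate_cpu(samples, min_cpu, max_cpu):
    """Classify one polling period's average CPU against the two thresholds.

    `samples` are CPU percentages collected during the period;
    `min_cpu` and `max_cpu` stand in for the CPU Threshold values.
    """
    average = mean(samples)
    if average > max_cpu:
        return "above-maximum"   # the case in which an alert is raised
    if average < min_cpu:
        return "below-minimum"
    return "within-range"
```

With hypothetical thresholds of 30 and 80, the samples `[85, 90, 80, 95, 88]` average 87.6 and are classified as `above-maximum`. Acting on the result remains an administrator decision, as stated above.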

Scaling NSX Advanced Load Balancer Virtual Services in Amazon Web Services (AWS)

For deployments in AWS, the scaled-out traffic behaves as follows:

The virtual service IP is GARPed by the primary SE. All inbound traffic from clients will arrive at this SE.

The primary SE will handle a percentage of traffic as expected.

At Layer 2, excess traffic is forwarded to the MAC address of the additional secondary Service Engine(s).

The scaled-out traffic to the secondary SEs is processed as normal. The SEs will change the source IP address of the connection to their own IP address within the server network.

The servers will respond to the source IP address of the traffic, which can be the primary or one of the secondary SEs.

Secondary SEs will forward the response traffic back to the originating client, bypassing the primary SE.

Scaling NSX Advanced Load Balancer Virtual Services in Microsoft Azure Deployments

NSX Advanced Load Balancer deployments in Microsoft Azure leverage the Azure Load Balancer to provide an ECMP-like, Layer 3 scale-out architecture. In this case, the traffic flow is as follows:

The virtual service IP resides on the Azure Load Balancer. All inbound traffic from clients will arrive at the Azure Load Balancer.

The Azure Load Balancer has a backend pool consisting of the NSX Advanced Load Balancer Service Engines.

The Azure Load Balancer balances the traffic to one of the NSX Advanced Load Balancer Service Engines associated with the virtual service IP.

The traffic to the SEs is processed. The SEs will change the source IP address of the connection to their own IP address within the server network.

The servers will respond to the source IP address of the traffic, which can be the primary or one of the secondary SEs.

The SEs forward their response traffic directly back to the originating client, bypassing the Azure Load Balancer.
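An ECMP-like distribution is usually understood as hashing each flow to one member of the backend pool, so that all packets of a connection reach the same SE. The sketch below illustrates that idea only; the `pick_backend` function and the use of SHA-256 are assumptions for illustration and do not describe the hashing that the Azure Load Balancer actually performs.

```python
import hashlib


def pick_backend(flow, backends):
    """Pick one backend SE for a flow by hashing the flow's tuple.

    `flow` is assumed to be (src_ip, src_port, dst_ip, dst_port, protocol).
    The same flow always maps to the same backend while the pool is unchanged.
    """
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]
```

Because the choice depends only on the flow tuple and the pool, no SE needs to act as a primary for inbound traffic in this model.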

Scaling Process

The process used to scale out depends on the level of access ('write' access or 'read access/no access') that NSX Advanced Load Balancer has to the hypervisor orchestrator. The following is the scaling process:

If NSX Advanced Load Balancer is in 'write' access mode with write privileges to the virtualization orchestrator, it automatically creates additional Service Engines when required to share the load. If the Controller runs into an issue while creating a new Service Engine, it waits for a few minutes and then retries on a different host. With native load balancing of SEs in play, the original Service Engine (primary SE) ARPs for the virtual service IP address and processes as much traffic as possible. Some percentage of the traffic arriving at the primary SE is forwarded via Layer 2 to the additional (secondary) Service Engines. When traffic decreases, the virtual service automatically scales back in to the original primary Service Engine.

If NSX Advanced Load Balancer is in 'read access or no access' mode, an administrator must manually create and configure new Service Engines in the virtualization orchestrator. The virtual service can be scaled out only when the Service Engine is both configured for the network and connected to the NSX Advanced Load Balancer Controller.

Existing Service Engines with spare capacity and appropriate network settings may be used for the scale out. Otherwise, scaling out may require either modifying existing Service Engines or creating new Service Engines.
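The decision described in this section can be summarized in a few lines. This is a simplified sketch under stated assumptions: the `AccessMode` enum and `plan_scale_out` function are hypothetical, and preferring an existing SE before creating a new one is an assumption, since the text says only that existing SEs may be used.

```python
from enum import Enum


class AccessMode(Enum):
    WRITE = "write"
    READ = "read"
    NONE = "none"


def plan_scale_out(mode, spare_ses):
    """Describe how an additional SE would be obtained for a scale out.

    `spare_ses` lists existing SEs that have spare capacity, appropriate
    network settings, and a connection to the Controller.
    """
    if spare_ses:
        return f"use existing SE {spare_ses[0]}"
    if mode is AccessMode.WRITE:
        return "Controller creates a new SE automatically"
    # Read access and no access both require manual work in the orchestrator.
    return "administrator creates and configures a new SE manually"
```

For instance, `plan_scale_out(AccessMode.READ, [])` returns the manual path, while `plan_scale_out(AccessMode.WRITE, [])` returns the automatic one.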