This section describes how memory is allocated within a Service Engine (SE), which helps you estimate the number of concurrent connections an SE can support or the amount of memory that can be allocated to features such as HTTP caching.

SEs support 2-256 GB of memory, and the minimum recommended memory for NSX Advanced Load Balancer is 2 GB. Providing more memory greatly increases capacity, and the priority of memory can be adjusted between concurrent connections and performance-optimizing buffers.

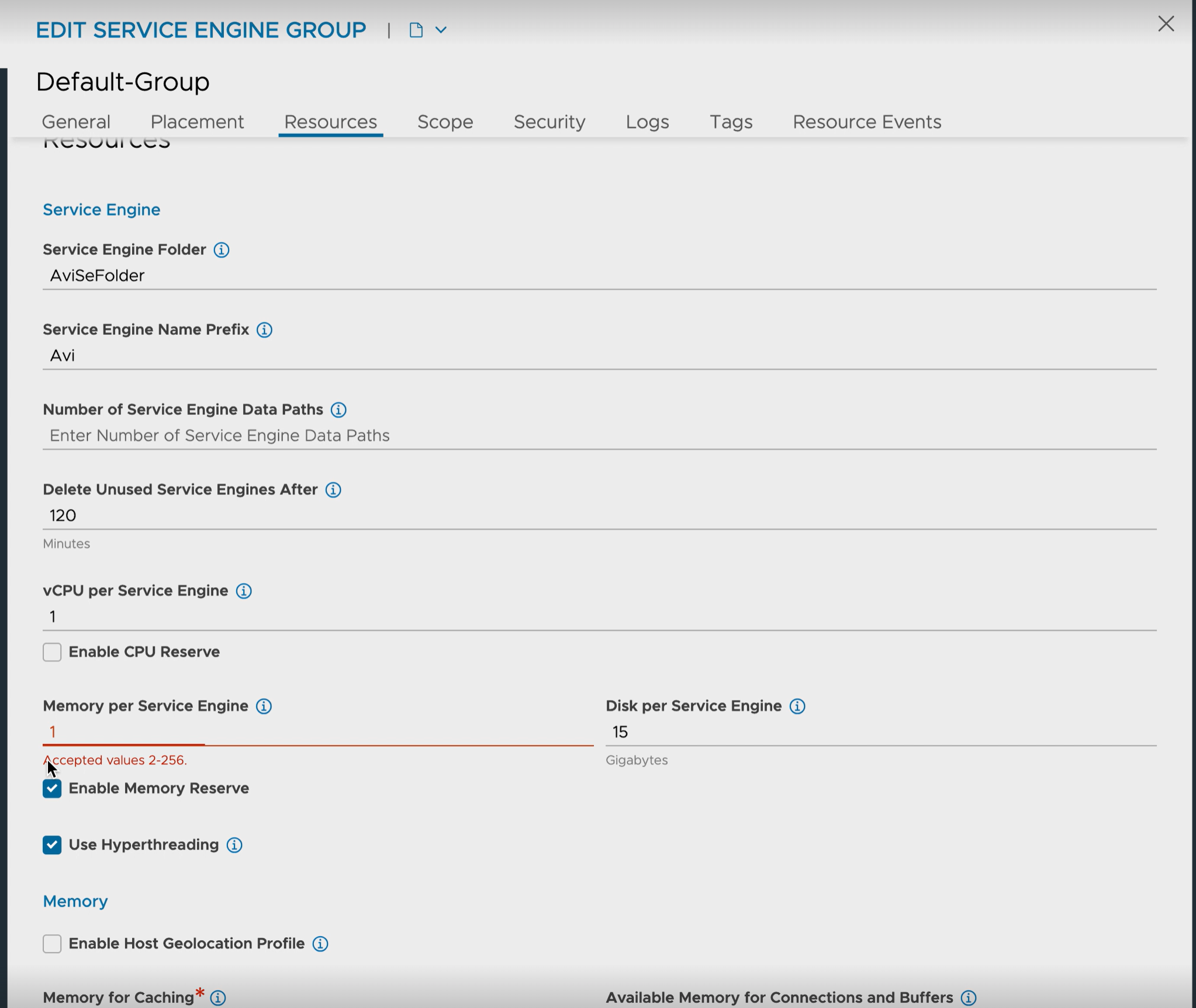

Memory allocation for NSX Advanced Load Balancer SE deployments in write access mode is configured through . Changes to the Memory per Service Engine property affect only newly created SEs. For read or no access modes, memory is configured on the remote orchestrator, such as vCenter. To change the memory of an existing SE, the SE must be powered down first.

Memory Allocation

The following table details the memory allocation for SE:

| Memory Type | Allocation | Description |
|---|---|---|
| Base | 500 MB | Required to turn on the SE (Linux plus basic SE functionality). |
| Local | 100 MB/core | Memory allocated per vCPU core. |
| Shared | Remaining | Remaining memory is split between Connections and Buffers. |

Connections consist of the TCP, HTTP, and SSL connection tables. Memory allocated to connections directly impacts the total concurrent connections that an SE can maintain.

Buffers consist of application layer packet buffers. These buffers are used from Layer 4 through Layer 7 to queue packets and improve network performance. For instance, suppose a client is connected to the NSX Advanced Load Balancer SE at 1 Mbps with high latency, while the server is connected to the SE at 10 Gbps with no latency. The server can transmit its entire response to the SE and proceed to service the next client request. The SE buffers the response and transmits it to the client at the much lower speed, handling any retransmissions without interrupting the server. This memory allocation also covers application-centric features such as HTTP caching and improved compression.

You can maximize the number of concurrent connections by shifting the priority from buffers toward connections. The calculations in this section are based on the default setting, which allocates 50% of the shared memory to connections.
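The split described by the memory allocation table can be sketched as a small calculation. This is a minimal sketch, assuming all sizes are in MB and that `connection_percent` is the share of shared memory given to connection tables (50 by default, per the text); `memory_breakdown` is a hypothetical helper, not part of the product.

```python
# Sketch of the SE memory split: base + local (per vCPU) + shared,
# with shared memory divided between connections and buffers.
# Assumption: all sizes are in MB; names here are hypothetical.
BASE_MB = 500            # Linux plus basic SE functionality
LOCAL_MB_PER_VCPU = 100  # local memory per vCPU core


def memory_breakdown(se_memory_mb: int, vcpus: int, connection_percent: int = 50) -> dict:
    """Return base, local, shared, connection, and buffer memory in MB."""
    if not 0 <= connection_percent <= 100:
        raise ValueError("connection_percent must be between 0 and 100")
    local = LOCAL_MB_PER_VCPU * vcpus
    shared = se_memory_mb - BASE_MB - local
    if shared <= 0:
        raise ValueError("not enough memory for the base and per-core allocations")
    connections = shared * connection_percent / 100
    return {
        "base": BASE_MB,
        "local": local,
        "shared": shared,
        "connections": connections,
        "buffers": shared - connections,  # everything not given to connections
    }


if __name__ == "__main__":
    for name, mb in memory_breakdown(8000, 8).items():
        print(f"{name:>11}: {mb:,.0f} MB")
```

For an 8000 MB SE with 8 vCPUs, this leaves 6700 MB of shared memory, split evenly into 3350 MB for connections and 3350 MB for buffers at the default setting.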

Before NSX Advanced Load Balancer version 30.1.1, the recommended minimum SE memory was 2 GB, but the system still allowed Service Engines to be created with less than 2 GB. Starting with version 30.1.1, NSX Advanced Load Balancer blocks the creation of Service Engines with less than 2 GB of memory. This limitation affects customers running Service Engines with less than 2 GB of memory in any environment.

You must increase SE memory to at least 2 GB before upgrading to version 30.1.1. This applies to both system upgrades and Controller upgrades.

Changing Resources/Flavor for Service Engines

The following table lists the workflow for updating resources (memory) for SE groups running in different HA modes:

| Cloud | Active/Standby | Active/Active | N+M (Minimum Buffer of 1) |
|---|---|---|---|
| No-Access | If distributed, switch over to one SE, turn off the SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP (Service Engine status to be up), and repeat for the other SE. If not distributed, turn off the standby SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, switch over to make it the active SE, and repeat for the new standby SE. | Force deactivate the SE (some virtual services might be HA compromised), wait for deactivation to finish, turn off the SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, and repeat for all SEs with less memory. | Deactivate the SE, wait for migration to finish, turn off the SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, and repeat for all SEs with less memory. |
| vCenter | If distributed, switch over to one SE, turn off the SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, and repeat for the other SE. If not distributed, turn off the standby SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, switch over to make it the active SE, and repeat for the new standby SE. | Force deactivate the SE (some virtual services might be HA compromised), wait for deactivation to finish, turn off the SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, and repeat for all SEs with less memory. | Deactivate the SE, wait for migration to finish, turn off the SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, and repeat for all SEs with less memory. |
| NSX-T | If distributed, switch over to one SE, turn off the SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, and repeat for the other SE. If not distributed, turn off the standby SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, switch over to make it the active SE, and repeat for the new standby SE. | Force deactivate the SE (some virtual services might be HA compromised), wait for deactivation to finish, turn off the SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, and repeat for all SEs with less memory. | Deactivate the SE, wait for migration to finish, turn off the SE from the cloud, increase memory, turn on the SE from the cloud, wait for SE_UP, and repeat for all SEs with less memory. |
| AWS | Change the SE group flavor to one with at least 2 GB memory. If distributed, switch over to one SE, delete the SE from the cloud, wait for the new SE to reach SE_UP, and repeat for the other SE. If not distributed, delete the standby SE from the cloud, wait for the new SE to reach SE_UP, switch over to make it the active SE, and repeat for the new standby SE. | Change the SE group flavor to one with at least 2 GB memory, force deactivate the SE (some virtual services might be HA compromised), wait for deactivation to finish, delete the SE, and repeat for all SEs with less memory. | Change the SE group flavor to one with at least 2 GB memory, deactivate the SE, wait for migration to finish, delete the SE, and repeat for all SEs with less memory. |
| OpenStack | Change the SE group flavor to one with at least 2 GB memory. If distributed, switch over to one SE, delete the SE from the cloud, wait for the new SE to reach SE_UP, and repeat for the other SE. If not distributed, delete the standby SE from the cloud, wait for the new SE to reach SE_UP, switch over to make it the active SE, and repeat for the new standby SE. | Change the SE group flavor to one with at least 2 GB memory, force deactivate the SE (some virtual services might be HA compromised), wait for deactivation to finish, delete the SE, and repeat for all SEs with less memory. | Change the SE group flavor to one with at least 2 GB memory, deactivate the SE, wait for migration to finish, delete the SE, and repeat for all SEs with less memory. |
| GCP | Change the SE group flavor to one with at least 2 GB memory. If distributed, switch over to one SE, delete the SE from the cloud, wait for the new SE to reach SE_UP, and repeat for the other SE. If not distributed, delete the standby SE from the cloud, wait for the new SE to reach SE_UP, switch over to make it the active SE, and repeat for the new standby SE. | Change the SE group flavor to one with at least 2 GB memory, force deactivate the SE (some virtual services might be HA compromised), wait for deactivation to finish, delete the SE, and repeat for all SEs with less memory. | Change the SE group flavor to one with at least 2 GB memory, deactivate the SE, wait for migration to finish, delete the SE, and repeat for all SEs with less memory. |
| Azure | Change the SE group flavor to one with at least 2 GB memory. If distributed, switch over to one SE, delete the SE from the cloud, wait for the new SE to reach SE_UP, and repeat for the other SE. If not distributed, delete the standby SE from the cloud, wait for the new SE to reach SE_UP, switch over to make it the active SE, and repeat for the new standby SE. | Change the SE group flavor to one with at least 2 GB memory, force deactivate the SE (some virtual services might be HA compromised), wait for deactivation to finish, delete the SE, and repeat for all SEs with less memory. | Change the SE group flavor to one with at least 2 GB memory, deactivate the SE, wait for migration to finish, delete the SE, and repeat for all SEs with less memory. |

Concurrent Connections

Most Application Delivery Controller (ADC) benchmark numbers are based on TCP Fast Path or an equivalent, which uses a simple memory table that maps client IP:port to server IP:port. Although TCP Fast Path uses very little memory and therefore allows extremely large numbers of concurrent connections, it is not relevant to the vast majority of real-world deployments, which rely on TCP and application layer proxying. NSX Advanced Load Balancer benchmark numbers are based on full TCP proxy (L4), TCP plus HTTP proxy with buffering and basic caching with DataScript (L7), and the same L7 scenario with Transport Layer Security (TLS) 1.2 between the client and NSX Advanced Load Balancer.

Actual memory consumption per connection can be higher or lower than the numbers listed below. For example, buffered HTTP request headers typically consume 2 KB of memory, but they can consume as much as 48 KB. The numbers below are intended as real-world sizing guidelines.

Memory consumption per connection:

10 KB L4

20 KB L7

40 KB L7 + SSL (RSA or ECC)

To calculate the potential concurrent connections for an SE, use the following formula:

Concurrent L4 connections = ((SE memory - 500 MB - (100 MB * number of vCPU)) * Connection Percent) / Memory per Connection

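For example, an SE with 8 GB (8000 MB) of memory and 8 vCPUs, using the default 50% connection percentage and 10 KB (0.01 MB) per L4 connection, supports ((8000 - 500 - (100 * 8)) * 0.50) / 0.01 = 335k concurrent L4 connections.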

| SE memory | 1 vCPU | 4 vCPU | 32 vCPU |
|---|---|---|---|
| 1 GB | 36k | NA | NA |
| 4 GB | 306k | 279k | NA |
| 32 GB | 2.82m | 2.80m | 2.52m |
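The concurrent-connection formula can be expressed as a short function. This is a sketch under stated assumptions: sizes are decimal (1 GB = 1000 MB, 1 MB = 1000 KB), matching the 8000 MB and 0.01 MB figures in the worked example, and the per-connection costs are the 10/20/40 KB guideline values. Integer arithmetic is used so the 335k example comes out exact. Note that most L4 entries in the table above (for example, 36k for 1 GB with 1 vCPU and 279k for 4 GB with 4 vCPUs) are reproduced by a connection percentage of 90 rather than the default 50, and the 32 GB / 32 vCPU entry does not match either exactly; this is an observation from the arithmetic, not a documented setting. `concurrent_connections` is a hypothetical helper name.

```python
# Sketch of: ((SE memory - 500 MB - 100 MB * vCPUs) * connection percent) / memory per connection
# Assumptions: decimal units (1 MB = 1000 KB) and the guideline per-connection costs below.
PER_CONNECTION_KB = {"L4": 10, "L7": 20, "L7+SSL": 40}


def concurrent_connections(se_memory_mb: int, vcpus: int,
                           connection_percent: int = 50, kind: str = "L4") -> int:
    """Estimate concurrent connections using integer math to avoid float rounding."""
    shared_mb = se_memory_mb - 500 - 100 * vcpus
    if shared_mb <= 0:
        return 0  # nothing left after base and per-core allocations
    connection_kb = shared_mb * 1000 * connection_percent // 100
    return connection_kb // PER_CONNECTION_KB[kind]


if __name__ == "__main__":
    print(concurrent_connections(8000, 8))                         # 335000
    print(concurrent_connections(4000, 4, connection_percent=90))  # 279000
```

Switching `kind` to `"L7"` or `"L7+SSL"` halves or quarters the L4 estimate, because the guideline cost per connection doubles at each step.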

View Allocation through CLI

The show serviceengine <SE Name> memdist command displays a truncated breakdown of the SE's memory distribution. For example, on an SE with one vCPU core and 141 MB allocated to the shared memory's connection table, a huge_pages value of 91 means that there are 91 pages of 2 MB each, so 182 MB is allocated to the shared memory's HTTP cache table. The following sample output comes from a different, larger SE, so its values differ from this example:

    [admin:grr-ctlr2]: > show serviceengine 10.110.111.10 memdist
    +-------------------------+-----------------------------------------+
    | Field                   | Value                                   |
    +-------------------------+-----------------------------------------+
    | se_uuid                 | 10-217-144-19:se-10.217.144.19-avitag-1 |
    | proc_id                 | C0_L4                                   |
    | huge_pages              | 2353                                    |
    | clusters                | 1900544                                 |
    | shm_memory_mb           | 398                                     |
    | conn_memory_mb          | 4539                                    |
    | conn_memory_mb_per_core | 1134                                    |
    | num_queues              | 1                                       |
    | num_rxd                 | 2048                                    |
    | num_txd                 | 2048                                    |
    | hypervisor_type         | 6                                       |
    | shm_conn_memory_mb      | 238                                     |
    | os_reserved_memory_mb   | 0                                       |
    | shm_config_memory_mb    | 160                                     |
    | config_memory_mb        | 400                                     |
    | app_learning_memory_mb  | 30                                      |
    | app_cache_mb            | 1228                                    |
    +-------------------------+-----------------------------------------+
    [admin:grr-ctlr2]: >
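If you collect memdist output from many SEs, a small parser can turn the table into a dictionary. This is a minimal sketch, assuming each data row is printed on its own line in the `| field | value |` form shown in the sample output; `parse_memdist` and `huge_page_memory_mb` are hypothetical helpers, and the 2 MB page size is the one stated in the text above.

```python
# Minimal parser for the ASCII table printed by `show serviceengine <SE Name> memdist`.
# Assumption: one "| field | value |" row per line; borders and prompts are skipped.
HUGE_PAGE_MB = 2  # page size stated in the text


def parse_memdist(output: str) -> dict:
    """Map each field name to its value (int when the value is all digits)."""
    fields = {}
    for raw in output.splitlines():
        line = raw.strip()
        if not line.startswith("|"):
            continue  # skip +----+ borders and CLI prompts
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) != 2 or cells[0] == "Field":
            continue  # skip the header row and anything malformed
        name, value = cells
        fields[name] = int(value) if value.isdigit() else value
    return fields


def huge_page_memory_mb(fields: dict) -> int:
    """Memory backed by huge pages: huge_pages * 2 MB (91 pages -> 182 MB)."""
    return fields["huge_pages"] * HUGE_PAGE_MB
```

For the sample output above, `huge_page_memory_mb(parse_memdist(text))` gives 2353 * 2 = 4706 MB.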

| Field | Description |
|---|---|
| clusters | The total number of packet buffers (mbufs) reserved for an SE. |
|  | The total amount of shared memory reserved for an SE. |
|  | The total amount of memory reserved from the heap for the connections. |
|  | The amount of memory reserved from the heap for the connections per core ( |
|  | The amount of memory reserved from the shared memory for the connections. |
|  | The number of NIC queue pairs. |
|  | The number of RX descriptors. |
|  | The number of TX descriptors. |
|  | The amount of extra memory reserved for non-SE datapath processes. |
|  | The amount of memory reserved from the shared memory for configuration. |
|  | The amount of memory reserved from the heap for configuration. |

The hypervisor_type field refers to the following hypervisor types and their associated values:

| Hypervisor Type | Value |
|---|---|
|  | 0 |
|  | 1 |
|  | 2 |
|  | 3 |
|  | 4 |
|  | 5 |
|  | 6 |
|  | 7 |

View Allocation through API

You can view the total memory allocated to the connection table and the percentage in use by querying the following API endpoints:

https://<IP Address>/api/analytics/metrics/serviceengine/se-<SE UUID>?metric_id=se_stats.max_connection_mem_total returns the total memory available to the connection table. In the following response snippet, 141 MB is allocated.

    "statistics": {
        "max": 141,
    }

https://<IP Address>/api/analytics/metrics/serviceengine/se-<SE UUID>?metric_id=se_stats.avg_connection_mem_usage&step=5 returns the average percent of memory used during the queried time period. In the result snippet below, 5% of the memory was in use.

    "statistics": {
        "min": 5,
        "max": 5,
        "mean": 5
    },
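The two queries above can be scripted. This is a sketch only: `controller` and `se_uuid` are placeholders you supply (the UUID is inserted after the `se-` prefix exactly as in the URL template), authentication is left to whatever HTTP client you use, and because only response snippets are shown here, the parser does not assume a full response layout and simply searches for the first `"statistics"` object. `metrics_url`, `find_statistics`, and `statistics_from_body` are hypothetical helper names.

```python
# Sketch: build the metrics URLs shown above and extract the "statistics" object.
# Assumptions: placeholder controller/UUID values, auth handled elsewhere,
# and an undocumented response layout (so we search for "statistics").
import json
from typing import Any, Optional
from urllib.parse import urlencode


def metrics_url(controller: str, se_uuid: str, metric_id: str,
                step: Optional[int] = None) -> str:
    """Build .../serviceengine/se-<SE UUID>?metric_id=...[&step=...]."""
    params = {"metric_id": metric_id}
    if step is not None:
        params["step"] = str(step)
    path = f"/api/analytics/metrics/serviceengine/se-{se_uuid}"
    return f"https://{controller}{path}?{urlencode(params)}"


def find_statistics(payload: Any) -> Optional[dict]:
    """Depth-first search for the first dict stored under a "statistics" key."""
    if isinstance(payload, dict):
        stats = payload.get("statistics")
        if isinstance(stats, dict):
            return stats
        children = list(payload.values())
    elif isinstance(payload, list):
        children = payload
    else:
        return None
    for child in children:
        found = find_statistics(child)
        if found is not None:
            return found
    return None


def statistics_from_body(body: str) -> Optional[dict]:
    """Parse a raw JSON response body and return its statistics object, if any."""
    return find_statistics(json.loads(body))
```

With the second query, `statistics_from_body(...)["mean"]` would give the average percentage of connection memory in use over the queried period (5 in the snippet above).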

Shared Memory Caching

You can use the app_cache_percent field in the SE group properties to reserve a percentage of the SE memory for Layer 7 caching. The default value is zero, which means that NSX Advanced Load Balancer does not cache any objects. This property takes effect when the SE boots, so the SE must be rebooted or restarted after you change it.

If caching is enabled in a virtual service's application profile when you upgrade NSX Advanced Load Balancer from an earlier version, this field is automatically set to 15, reserving 15% of SE memory for caching. This value is a percentage, not an absolute memory size.

After configuring the feature, restart the SE to enable the configuration.

The memory allocated for caching is capped so that at least 1 GB per core remains available to the SE. If the configured app_cache_percent would violate this condition, the cache receives less memory than the configured percentage of total system memory.

In that case, app cache memory = total memory - (number of cores * 1 GB).

For example, for a 10 GB SE with 9 cores, an app_cache_percent of 15 yields 1 GB of cache instead of 1.5 GB.
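The cap can be sketched as taking the smaller of the configured percentage and the memory left after reserving 1 GB per core. This is a minimal sketch, assuming sizes in GB and that the cap never goes below zero; `effective_app_cache_gb` is a hypothetical helper, not a product function.

```python
# Sketch of the app cache cap: min(configured share, total - 1 GB per core).
# Assumptions: sizes in GB; a negative remainder means no cache memory at all.
def effective_app_cache_gb(total_gb: float, cores: int, app_cache_percent: float) -> float:
    requested = total_gb * app_cache_percent / 100  # what app_cache_percent asks for
    cap = total_gb - cores * 1.0                    # memory left after 1 GB per core
    return max(0.0, min(requested, cap))
```

For the 10 GB, 9-core example, the requested 1.5 GB exceeds the 1 GB remainder, so the function returns 1.0.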

Configuring using CLI

Enter the following commands to configure the app_cache_percent:

    [admin:cntrlr]: > configure serviceenginegroup Serviceenginegroup-name
    [admin:cntrlr]: serviceenginegroup> app_cache_percent 30
    Overwriting the previously entered value for app_cache_percent
    [admin:cntrlr]: serviceenginegroup> save

Configuring using UI

You can also enable this feature using the NSX Advanced Load Balancer UI. Navigate to and click the edit icon of the desired SE group. In the Basic Settings tab, under the Memory Allocation section, enter the percentage of memory to reserve for Layer 7 caching in the Memory for Caching field.

Reduce the Core File Size

The following fields control whether certain shared memory sections are included in the core file. Leaving them disabled helps reduce the core file size:

core_shm_app_learning: Includes shared memory for application learning in the core file.

core_shm_app_cache: Includes shared memory for application cache in the core file.

By default, both options are set to False, so these sections are excluded from the core file. To include them, enable core_shm_app_learning and core_shm_app_cache using the following commands:

    [admin:cntrlr]: serviceenginegroup> core_shm_app_learning
    Overwriting the previously entered value for core_shm_app_learning
    [admin:cntrlr]: serviceenginegroup> core_shm_app_cache
    Overwriting the previously entered value for core_shm_app_cache
    [admin:cntrlr]: serviceenginegroup> save

Restart or reboot the SE for this configuration to take effect.

Per Virtual Service Level Admission Control

Connection Refusals to Incoming Requests on a Virtual Service

Connection refusals on a particular virtual service can be caused by high consumption of packet buffers by that virtual service.

Connection refusals start when the packet buffer usage of a virtual service exceeds 70% of the total packet buffers. This typically indicates a slow client that is causing packet buffers to build up on the virtual service.

This issue can be alleviated by increasing the memory allocated per SE or by identifying and limiting the number of requests by slow clients using a network security policy.

Per virtual service level admission control is deactivated by default. To enable this setting, set the Service Engine Group option per_vs_admission_control to True.

    [admin Controller]: > configure serviceenginegroup <name-of-the-SE-group>
    [admin Controller]: serviceenginegroup> per_vs_admission_control
    Overwriting the previously entered value for per_vs_admission_control
    [admin Controller]: serviceenginegroup> save
    | per_vs_admission_control | True |

Connection refusals stop when packet buffer consumption on the virtual service drops below 50%. The following sample logs show admission control starting and stopping:

    C255 12:46:28.774900 [se_global_calculate_per_vs_mbuf_usage:1561] Packet buffer usage for the Virtual Service: 'vs-http' UUID: 'virtualservice-e20cfff1-173f-4f4c-9028-4ae544116191' has breached the threshold 70.0%, current value is 71.8%. Starting admission control.
    C255 12:49:01.285088 [se_global_calculate_per_vs_mbuf_usage:1575] Packet buffer usage for the Virtual Service: 'vs-http' UUID: 'virtualservice-e20cfff1-173f-4f4c-9028-4ae544116191' is below the threshold 50.0%, current value is 46.7%. Stopping admission control.
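The start and stop thresholds form a hysteresis band: admission control turns on above 70% and stays on until usage falls below 50%, so usage between the two values does not change the current state. The following sketch models only these thresholds, as described in the text and logs; it is not the SE's implementation, and `admission_control_states` is a hypothetical name.

```python
# Sketch of the 70% start / 50% stop hysteresis for per-virtual-service
# admission control. Models the thresholds only, not the SE datapath.
START_THRESHOLD = 70.0  # percent of total packet buffers
STOP_THRESHOLD = 50.0


def admission_control_states(usage_samples, active=False):
    """Yield whether admission control is active after each usage sample (percent)."""
    for usage in usage_samples:
        if not active and usage > START_THRESHOLD:
            active = True   # e.g. 71.8% -> "Starting admission control"
        elif active and usage < STOP_THRESHOLD:
            active = False  # e.g. 46.7% -> "Stopping admission control"
        yield active
```

Feeding it the usage values from the sample logs, with some intermediate readings in between, shows that a reading of 55% or 65% keeps admission control active once it has started.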

The connection refusals and packet throttles due to admission control can be monitored using the se_stats metrics API:

https://<Controller-IP>/api/analytics/metrics/serviceengine/se-<SE-UUID>?metric_id=se_stats.sum_connection_dropped_packet_buffer_stressed,se_stats.sum_packet_dropped_packet_buffer_stressed

For information about resolving intermittent connection refusals on NSX Advanced Load Balancer SEs that correlate with higher traffic volume, see Connection Refusals to Incoming Requests Observed on NSX Advanced Load Balancer Service Engines.