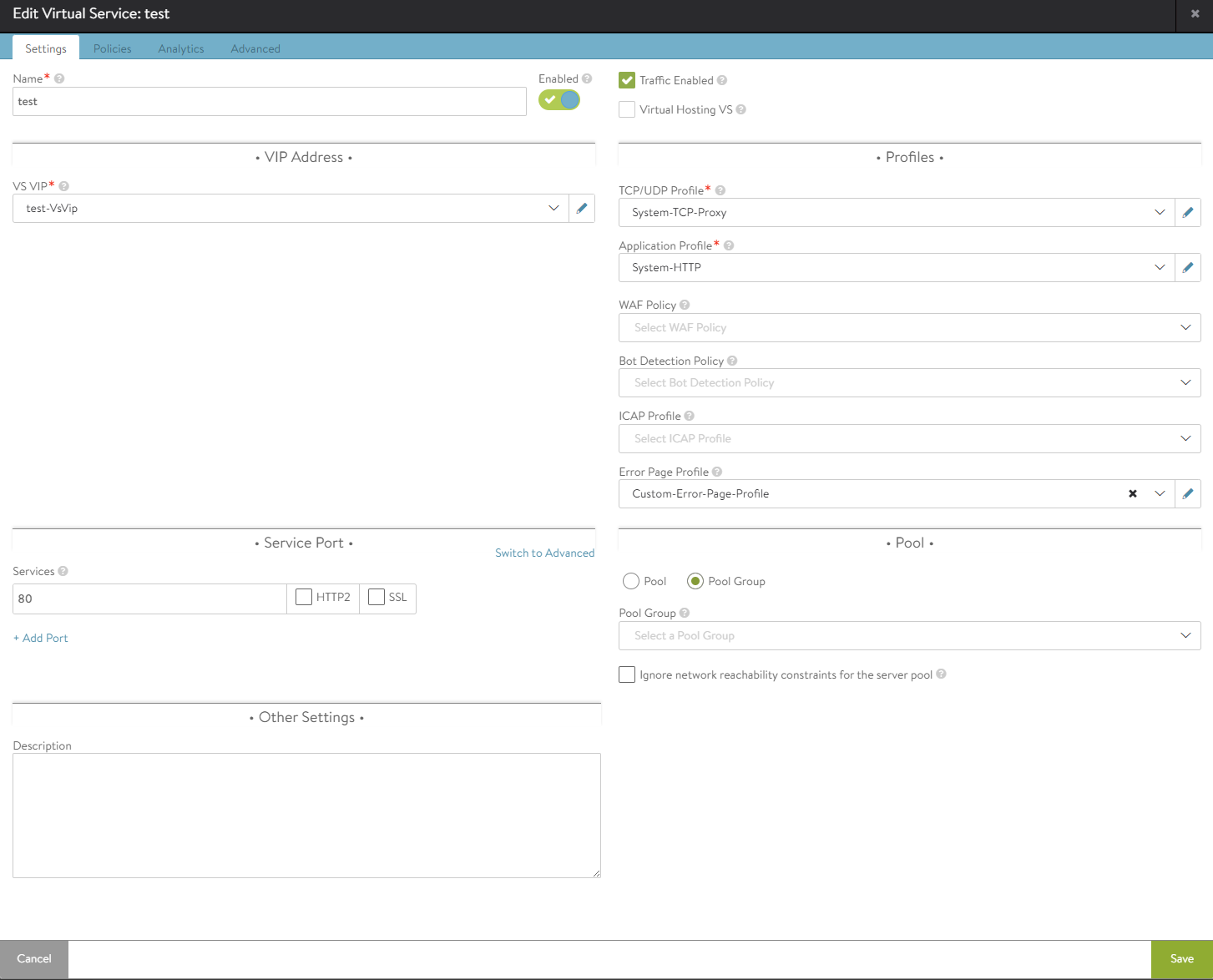

You can attach a pool group to a virtual service in the Advanced Mode of the virtual service window.

Create a virtual service by navigating to . Click the CREATE VIRTUAL SERVICE button and select the Advanced Setup option from the drop-down menu. You can also edit an existing virtual service by clicking the edit icon.

Select the Pool Group option in the Pool section of the Settings tab to attach the previously created pool group to the virtual service.

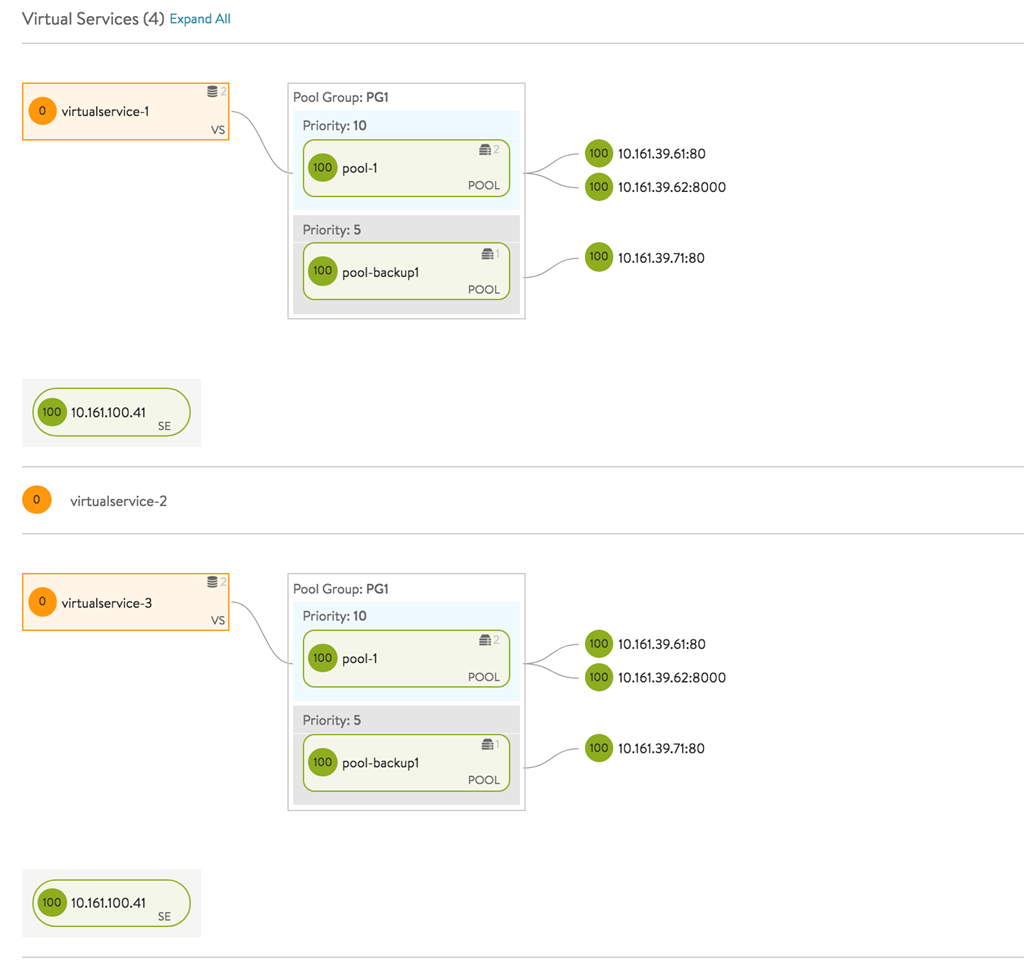

To view the overall setup of the virtual service and pool groups, navigate to and select VS Tree from the drop-down menu.

Caching is not supported for pool groups.

The pool group sharing setup for the virtual service is shown in the following image:

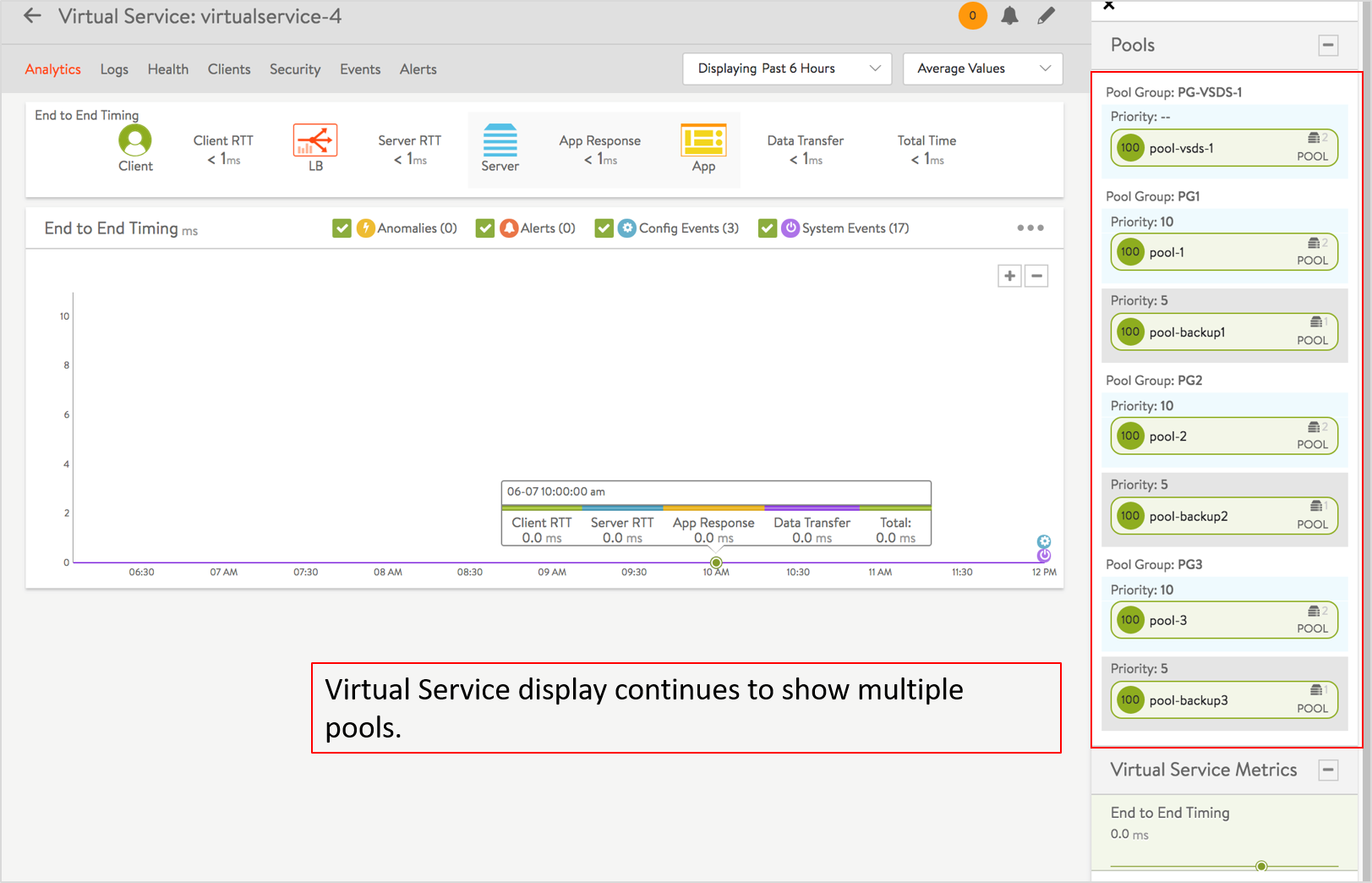

Click a virtual service to view its associated pool groups.

This view shows that a single virtual service can be associated with multiple pool groups.

Use Cases

Priority Pools/Servers

Consider a case where a pool has different kinds of servers: newer, very powerful ones; older, slower ones; and very old, very slow ones. In the diagram, imagine that the blue pools comprise the new, powerful servers, the green pools contain the older, slower ones, and the pink pool contains the very oldest. Note also that the pools have been assigned priorities from high_pri down to low_pri. This arrangement causes NSX Advanced Load Balancer to pick the newer servers in the three blue pools as much as possible, potentially always. Only if no server can be found in any of the highest-priority pools does NSX Advanced Load Balancer send traffic to the slower members, ranked by priority.

One or a combination of the following circumstances triggers the selection of a lower-priority pool:

1. A running server cannot be found.

2. Similar to #1, no server at the given priority level will accept an additional connection; all candidates are saturated.

3. No pool at the given priority level is running the minimum server count configured for it.
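The fallback logic described above can be sketched in a few lines of Python. This is only an illustrative model, not the product's implementation: the `Pool` class, its field names, and the `eligible_pools` helper are hypothetical, and the sketch assumes that a higher priority value is preferred and that a priority level is skipped only when none of its pools is eligible.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pool:
    """Hypothetical stand-in for a pool group member (not a product type)."""
    name: str
    priority: int               # higher value = preferred
    servers_up: int             # servers currently running
    servers_with_capacity: int  # running servers that can accept a connection
    min_servers: int = 0        # minimum server count configured for the pool


def pool_is_eligible(pool: Pool) -> bool:
    # Circumstance 1: a running server cannot be found.
    if pool.servers_up == 0:
        return False
    # Circumstance 2: every running server is saturated.
    if pool.servers_with_capacity == 0:
        return False
    # Circumstance 3: the pool is below its configured minimum server count.
    if pool.servers_up < pool.min_servers:
        return False
    return True


def eligible_pools(pools: List[Pool]) -> List[Pool]:
    """Return the eligible pools at the highest priority level that has any."""
    for priority in sorted({p.priority for p in pools}, reverse=True):
        candidates = [
            p for p in pools if p.priority == priority and pool_is_eligible(p)
        ]
        if candidates:
            return candidates
    return []  # nothing is eligible; the fail action would apply
```

For instance, if every blue pool has no running servers, `eligible_pools` returns the green pools at the next priority level, and the pink pool is used only when both higher levels are exhausted.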

Operational Notes

Keep the priorities spaced apart and leave gaps between them. This makes it easier to add intermediate priorities later.

For the pure priority use case, the ratio of the pool group is optional.

Setting the ratio to zero for a pool results in sending no traffic to this pool.

For each of the pools, normal load balancing is performed. After NSX Advanced Load Balancer selects a pool for a new session, the load balancing method configured for that pool is used to select a server.

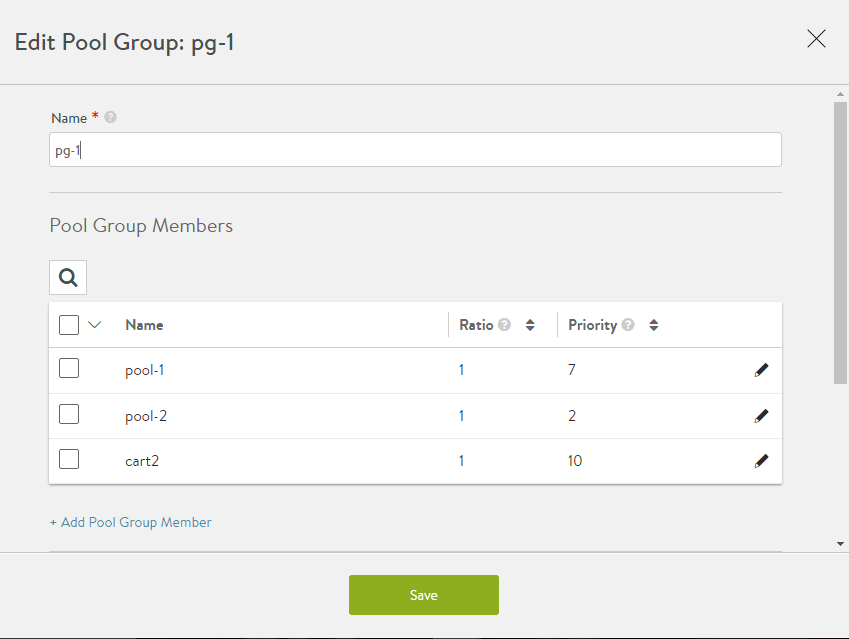

Sample Configuration for a Priority Pool

With only three pools in play, each at a different priority, the values in the Ratio column do not enter into pool selection. The cart2 pool will always be chosen, barring any of the three circumstances described above.

Backup Pools

The pre-existing implementation of backup pools is explained in the Pool Groups section. The existing option of specifying a backup pool as a pool-down/fail action is deprecated. Instead, configure a pool group with two or more pools of varying priorities. The highest-priority pool is chosen as long as a server is available within it (subject to the three circumstances mentioned previously).

Operational Notes

A pool with a higher priority value is deemed better. Traffic is sent to the pool with the highest priority, provided that this pool is up and its minimum number of servers is met.

Keep the priorities spaced apart and leave gaps between them. This makes it easier to add intermediate priorities later.

For each of the group’s pool members, normal load balancing is performed. After NSX Advanced Load Balancer selects a pool for a new session, the load balancing method configured for that pool is used to select a server.

The addition or removal of backup pools does not affect existing sessions on other pools in the pool group.

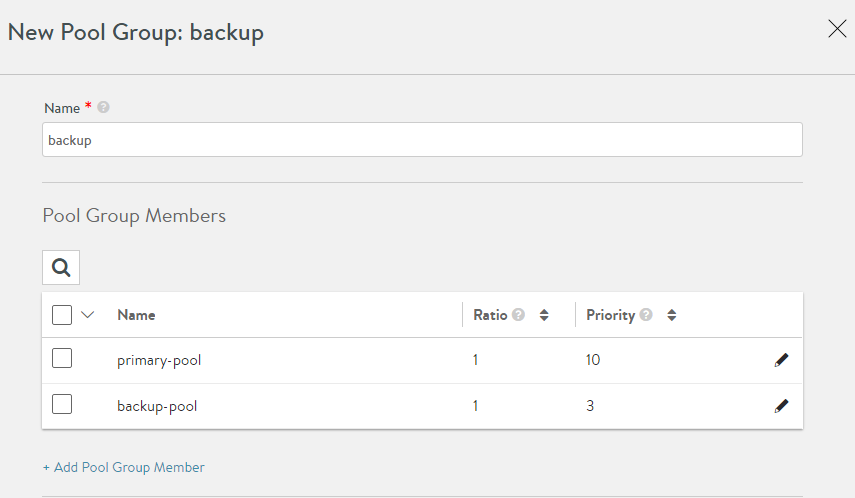

Sample Configuration for a Backup Pool

Create a pool group ‘backup’ with two member pools: primary-pool with a priority of 10, and backup-pool with a priority of 3.

Object details:

    {
        url: "https://10.10.25.20/api/poolgroup/poolgroup-f51f8a6b-6567-409d-9556-835b962c8092",
        uuid: "poolgroup-f51f8a6b-6567-409d-9556-835b962c8092",
        name: "backup",
        tenant_ref: "https://10.10.25.20/api/tenant/admin",
        cloud_ref: "https://10.10.25.20/api/cloud/cloud-3957c1e2-7168-4214-bbc4-dd7c1652d04b",
        _last_modified: "1478327684238067",
        min_servers: 0,
        members: [
            {
                ratio: 1,
                pool_ref: "https://10.10.25.20/api/pool/pool-4fc19448-90a2-4d58-bb8f-d54bdf4c3b0a",
                priority_label: "10"
            },
            {
                ratio: 1,
                pool_ref: "https://10.10.25.20/api/pool/pool-b77ba6e9-45a3-4e2b-96e7-6f43aafb4226",
                priority_label: "3"
            }
        ],
        fail_action: {
            type: "FAIL_ACTION_CLOSE_CONN"
        }
    }
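Note that `priority_label` appears as a string in the object above. The following Python sketch shows one way a script inspecting such an object might find the preferred member. It assumes that every member carries a numeric label, as in this sample, and that labels are meant to be compared numerically; the `preferred_member` helper is hypothetical and not part of any product API.

```python
# Members copied from the sample object above.
members = [
    {
        "ratio": 1,
        "pool_ref": "https://10.10.25.20/api/pool/pool-4fc19448-90a2-4d58-bb8f-d54bdf4c3b0a",
        "priority_label": "10",
    },
    {
        "ratio": 1,
        "pool_ref": "https://10.10.25.20/api/pool/pool-b77ba6e9-45a3-4e2b-96e7-6f43aafb4226",
        "priority_label": "3",
    },
]


def preferred_member(members):
    """Return the member with the numerically highest priority label."""
    # Convert to int first: as plain strings, "3" sorts after "10".
    return max(members, key=lambda m: int(m["priority_label"]))


primary = preferred_member(members)
print(primary["pool_ref"])  # the pool labelled "10"
```

Comparing the labels as raw strings would pick the member labelled "3", which is why the sketch converts them to integers before comparing.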

A/B Pools

NSX Advanced Load Balancer supports the specification of a set of pools that could be deemed equivalent pools, with traffic sent to these pools in a defined ratio.

For instance, a virtual service can be configured with a single priority group containing two pools, A and B. The user can then specify a traffic ratio of 4 for pool A and a traffic ratio of 1 for pool B.
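To make the arithmetic explicit, the share of new traffic each pool receives is its ratio divided by the sum of all ratios in the group. The Python sketch below computes this; the `traffic_shares` function is a hypothetical helper for illustration and assumes that all pools in the group are up and at the same priority.

```python
from fractions import Fraction


def traffic_shares(ratios):
    """Map each pool name to its fraction of new traffic."""
    total = sum(ratios.values())
    if total == 0:
        raise ValueError("at least one pool needs a non-zero ratio")
    return {name: Fraction(ratio, total) for name, ratio in ratios.items()}


shares = traffic_shares({"A": 4, "B": 1})
print(shares["A"], shares["B"])  # 4/5 1/5
```

With a ratio of 4 for A and 1 for B, pool A receives four fifths of new traffic and pool B one fifth. A pool with a ratio of zero gets a share of zero.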

The A/B pool feature, sometimes referred to as blue/green testing, provides a simple way to gradually transition a virtual service’s traffic from one set of servers to another. For instance, to test a major OS or application upgrade in a virtual service’s primary pool (A), a second pool (B) running the upgraded version can be added alongside the primary pool. Then, based on the configuration, a ratio (0-100) of the client-to-server traffic is sent to the B pool instead of the A pool.

Continuing this example, if the upgrade is performing well, the NSX Advanced Load Balancer user can increase the ratio of traffic sent to the B pool. Likewise, if the upgrade is unsuccessful or sub-optimal, the ratio to the B pool can easily be reduced again to test an alternative upgrade.

To finish transitioning to the new pool following a successful upgrade, the ratio can be adjusted to send all traffic to pool B, which then becomes the production pool.

To perform the next upgrade, the process can be reversed. After upgrading pool A, the ratio of traffic sent to pool B can be reduced to test pool A. To complete the upgrade, the ratio of traffic to pool B can be reduced back to zero.
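The gradual transition described above amounts to a sequence of ratio pairs. The following Python sketch generates such a sequence from a list of target percentages for pool B. The `ramp_schedule` function and the step values are hypothetical; applying each step to the pool group and deciding when to advance are left to the operator.

```python
def ramp_schedule(b_percentages):
    """Return one {"A": ratio, "B": ratio} dict per planned step."""
    steps = []
    for b in b_percentages:
        if not 0 <= b <= 100:
            raise ValueError(f"percentage out of range: {b}")
        # The two ratios always sum to 100, so each value reads as a percentage.
        steps.append({"A": 100 - b, "B": b})
    return steps


for step in ramp_schedule([10, 50, 100]):
    print(step)
```

The final step sets the ratio of pool A to zero, at which point pool B receives all new sessions. Reversing the list gives the schedule for moving traffic back to pool A.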

Operational Notes

Setting the ratio to zero for a pool results in sending no traffic to this pool.

For each of the pools, normal load balancing is performed. After NSX Advanced Load Balancer selects a pool for a new session, the load balancing method configured for that pool is used to select a server.

The A/B setting does not affect existing sessions. For instance, setting the ratio sent to B to one and A to zero does not cause existing sessions on pool A to move to B. Likewise, A/B pool settings do not affect persistence configurations.

If one of the pools that has a non-zero ratio goes down, new traffic is equally distributed to the rest of the pools.

For pure A/B use cases, the priority of the pool group is optional.

Pool groups can be applied as the default on the virtual service, or attached to rules, DataScripts, and the service port pool selector.

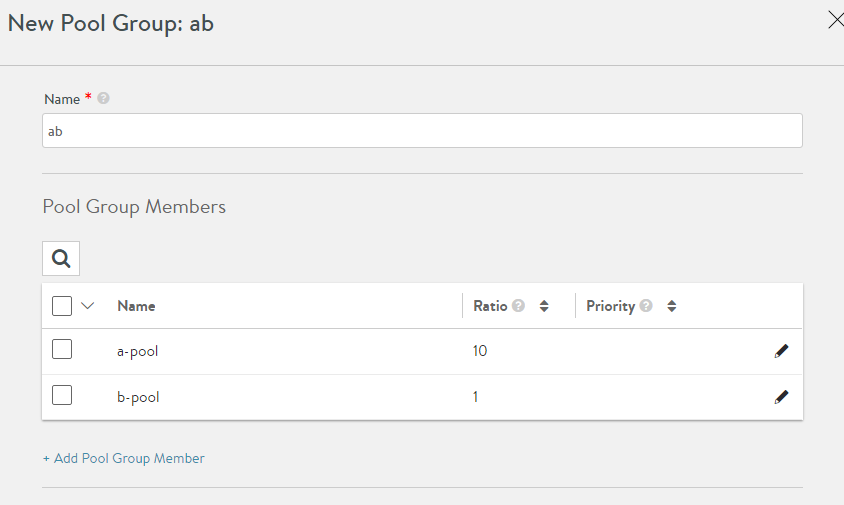

Sample Configuration for an A/B Pool

Create a pool group ‘ab’ with two pools in it, a-pool and b-pool, without specifying any priority.

In this example, the ratios of a-pool and b-pool are set to 10 and 1 respectively, so roughly 9% of the traffic (1 part in 11) is sent to b-pool.

Apply this pool group to the virtual service for which you want A/B functionality.

Object details:

    {
        url: "https:///api/poolgroup/poolgroup-7517fbb0-6903-403e-844f-6f9e56a22633",
        uuid: "poolgroup-7517fbb0-6903-403e-844f-6f9e56a22633",
        name: "ab",
        tenant_ref: "https:///api/tenant/admin",
        cloud_ref: "https:///api/cloud/cloud-3957c1e2-7168-4214-bbc4-dd7c1652d04b",
        min_servers: 0,
        members: [
            {
                ratio: 10,
                pool_ref: "https:///api/pool/pool-c27ef707-e736-4ab6-ab81-b6d844d74e12"
            },
            {
                ratio: 1,
                pool_ref: "https:///api/pool/pool-23853ea8-aad8-4a7a-8e9b-99d5b749e75a"
            }
        ]
    }

Additional Use Cases

Blue/Green Deployment

This is a release technique that reduces downtime and risk by running two identical production environments, only one of which (for instance, blue) is live at any moment and serving all production traffic. In preparation for a new release, deployment and final-stage testing take place in the environment that is not live (for instance, green). Once you are confident in green, all incoming requests go to green instead of blue. Green is now live, and blue is idle. Downtime due to application deployment is eliminated. In addition, if something unexpected happens with the new release on green, rollback to the last version is immediate: just switch back to blue.
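One way to picture the switch is as a pair of pools whose ratios are flipped between 1 and 0, relying on the behaviour that a pool with a ratio of zero receives no traffic. The Python sketch below is only a model under that assumption: the `switch_live` function and the simplified member dicts with a `pool` key are hypothetical, and the source does not prescribe this exact procedure.

```python
def switch_live(members, live_pool):
    """Return a copy of members with ratio 1 for live_pool and 0 for the rest."""
    if live_pool not in {m["pool"] for m in members}:
        raise ValueError(f"unknown pool: {live_pool}")
    return [{**m, "ratio": 1 if m["pool"] == live_pool else 0} for m in members]


members = [{"pool": "blue", "ratio": 1}, {"pool": "green", "ratio": 0}]
after_cutover = switch_live(members, "green")   # green goes live
after_rollback = switch_live(after_cutover, "blue")  # immediate rollback
```

Because the function returns a new list rather than modifying its input, the previous state stays available, which mirrors the idea that the idle environment is kept intact for rollback.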

Canary Upgrades

This upgrade technique is so called because of its similarity to a miner’s canary, which detects toxic gases before any humans are affected. The idea is that when performing system updates or changes, a group of representative servers is updated first and is monitored and tested for some time. Only thereafter are rolling changes made across the remaining servers.
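The ordering described above can be sketched as follows in Python. The `canary_rollout` function, its parameters, and the `upgrade` and `healthy` callbacks are all hypothetical placeholders: how a server is actually upgraded and how its health is judged depend on the environment.

```python
from typing import Callable, List, Sequence


def canary_rollout(
    servers: Sequence[str],
    canary_count: int,
    upgrade: Callable[[str], None],
    healthy: Callable[[str], bool],
) -> List[str]:
    """Upgrade the canaries first; continue only if all of them stay healthy.

    Returns the list of servers that were upgraded.
    """
    canaries = list(servers[:canary_count])
    remaining = list(servers[canary_count:])
    upgraded = []

    for server in canaries:
        upgrade(server)
        upgraded.append(server)

    # Stop here if any canary fails its check; the rest stay untouched.
    if not all(healthy(server) for server in canaries):
        return upgraded

    for server in remaining:
        upgrade(server)
        upgraded.append(server)
    return upgraded
```

In practice the health check would run after an observation period rather than immediately, and the remaining servers would usually be upgraded in batches rather than in a single pass.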