If a cluster of ESXi hosts is registered to a VMware vCenter, you can apply a transport node profile on the VMware vCenter cluster to prepare all hosts that are part of the cluster as NSX transport nodes, or you can prepare each host individually.

Note: (Host in lockdown mode) If your exception list for vSphere lockdown mode includes expired user accounts, NSX installation on vSphere fails. If your host is part of a vLCM-enabled cluster, several users, such as lldp-vim-user, nsx-user, mux-user, and da-user, are created automatically and added to the exception users list on the ESXi host when NSX VIBs are installed. Ensure that you delete all expired user accounts before you begin installation. For more information on accounts with access privileges in lockdown mode, refer to Specifying Accounts with Access Privileges in Lockdown Mode in the vSphere Security Guide. For more details on these NSX user accounts on the ESXi host, refer to the KB article https://ikb.vmware.com/s/article/87795.

Prerequisites

-

Verify all hosts that you want to configure as transport nodes are powered on in VMware vCenter.

-

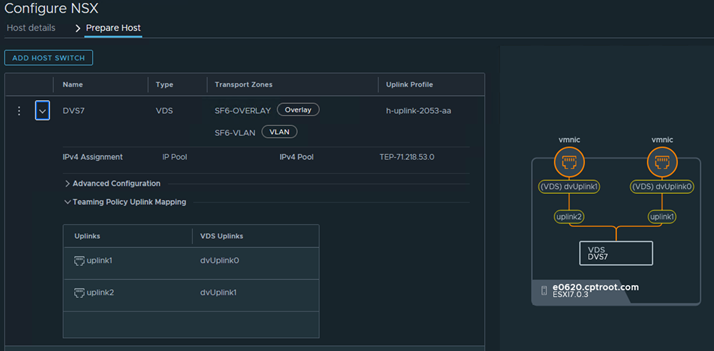

Verify all hosts are members of VDS in VMware vCenter with correct uplinks.

- Verify that the system requirements are met. See System Requirements.

- Verify that VMware vCenter is added as a compute manager to NSX Manager.

- Verify that the NSX Manager cluster is up and stable.

  - UI: Go to .

  - CLI: SSH to one of the NSX Manager nodes as admin and run `get cluster status`.

- The reverse proxy service on all nodes of the NSX Manager cluster must be up and running.

  To verify, run `get service http`. If the service is down, restart it by running `restart service http` on each NSX Manager node. If the service is still down, contact VMware support.

- If you deployed VMware vCenter on a custom or non-default port, apply these rules to NSX Manager:

  - Apply the IPv4 rules on NSX Manager manually before you start host preparation.

    ```shell
    iptables -A INPUT -p tcp -m tcp --dport <CUSTOM_PORT> --tcp-flags FIN,SYN,RST,ACK SYN -j ACCEPT
    iptables -A OUTPUT -p tcp -m tcp --dport <CUSTOM_PORT> --tcp-flags FIN,SYN,RST,ACK SYN -j ACCEPT
    ```

  - Apply the IPv6 table rules on NSX Manager manually before you start host preparation.

    ```shell
    ip6tables -A OUTPUT -o eth0 -p tcp -m tcp --dport <CUSTOM_PORT> --tcp-flags FIN,SYN,RST,ACK SYN -j ACCEPT
    ip6tables -A INPUT -p tcp -m tcp --dport <CUSTOM_PORT> --tcp-flags FIN,SYN,RST,ACK SYN -j ACCEPT
    ```


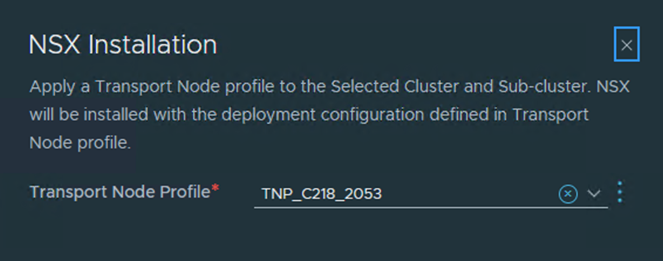

- Verify that a transport node profile is configured. See Add a Transport Node Profile.
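The custom-port rules listed in the prerequisites above can be applied in one step on each NSX Manager node. The following is a minimal sketch, not a VMware-provided tool: the function names `add_port_rule` and `apply_vcenter_port_rules` and the `DRY_RUN` switch are hypothetical. It assumes a root shell on the NSX Manager node with `iptables` and `ip6tables` available and, as in the documented IPv6 rule, `eth0` as the outgoing interface. It checks for an existing identical rule with `iptables -C` before appending with `-A`, so running it twice does not add duplicate rules.

```shell
# POSIX sh sketch (hypothetical helper, not part of NSX).
# Assumes: root shell on an NSX Manager node, iptables/ip6tables installed,
# and eth0 as the outgoing interface for the IPv6 OUTPUT rule.
# Set DRY_RUN=1 to print the commands instead of running them.

NSX_TCP_MATCH='--tcp-flags FIN,SYN,RST,ACK SYN -j ACCEPT'

# add_port_rule <tool> <chain> <port> [extra match args]
# Appends the rule only if an identical rule is not already present:
# "-C" checks whether the rule exists, "-A" appends it.
add_port_rule() {
  tool=$1 chain=$2 port=$3
  shift 3
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$tool -A $chain${*:+ $*} -p tcp -m tcp --dport $port $NSX_TCP_MATCH"
    return 0
  fi
  # NSX_TCP_MATCH is left unquoted on purpose so it splits into arguments.
  "$tool" -C "$chain" "$@" -p tcp -m tcp --dport "$port" $NSX_TCP_MATCH 2>/dev/null ||
    "$tool" -A "$chain" "$@" -p tcp -m tcp --dport "$port" $NSX_TCP_MATCH
}

# apply_vcenter_port_rules <port>: the two IPv4 and two IPv6 rules above.
apply_vcenter_port_rules() {
  port=$1
  # Accept only a decimal TCP port number in the range 1..65535.
  case "$port" in
    ''|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
  esac
  if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
    echo "invalid port: $port" >&2
    return 1
  fi
  add_port_rule iptables INPUT "$port" || return 1
  add_port_rule iptables OUTPUT "$port" || return 1
  add_port_rule ip6tables OUTPUT "$port" -o eth0 || return 1
  add_port_rule ip6tables INPUT "$port" || return 1
}
```

For example, after saving the functions to a file such as `vcenter-port-rules.sh` (a hypothetical name), run `. ./vcenter-port-rules.sh; DRY_RUN=1 apply_vcenter_port_rules 8443` to preview the four commands, then run `apply_vcenter_port_rules 8443` without `DRY_RUN` to apply them. Here `8443` is only an example; substitute the port your VMware vCenter actually uses.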

Procedure

What to do next

Create a logical switch and assign logical ports. See the Advanced Switching section in the NSX Administration Guide.