This topic tells you about the components that form and interact with the Diego system in VMware Tanzu Application Service for VMs (TAS for VMs).

TAS for VMs uses the Diego system to manage app containers. Diego components assume app scheduling and management responsibility from the Cloud Controller.

Diego is a self-healing container management system that attempts to keep the correct number of instances running in Diego Cells so that apps stay available through network failures and crashes. Diego schedules and runs Tasks and Long-Running Processes (LRPs). For more information about Tasks and LRPs, see How the Diego Auction Allocates Jobs.

You can submit, update, and retrieve the desired number of Tasks and LRPs using the Bulletin Board System (BBS) API. For more information, see the BBS Server repository on GitHub.
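The idea of a desired instance count that the system keeps converging toward can be illustrated with a toy model. The sketch below is not the BBS API: `ToyScheduler`, `desire`, `crash`, and `converge` are hypothetical names invented for illustration, and the real system stores far more state.

```python
from dataclasses import dataclass, field


@dataclass
class ToyScheduler:
    """Hypothetical stand-in for a desired-state store; not the BBS API."""
    desired: dict = field(default_factory=dict)  # process name -> desired instance count
    running: dict = field(default_factory=dict)  # process name -> running instance count

    def desire(self, process: str, instances: int) -> None:
        """Record how many instances of a process should be running."""
        self.desired[process] = instances

    def crash(self, process: str, count: int = 1) -> None:
        """Simulate instances of a process disappearing."""
        self.running[process] = max(0, self.running.get(process, 0) - count)

    def converge(self) -> dict:
        """Bring running counts back to desired counts and return the deltas applied."""
        deltas = {}
        for process in set(self.desired) | set(self.running):
            want = self.desired.get(process, 0)
            have = self.running.get(process, 0)
            if want != have:
                deltas[process] = want - have
            if want:
                self.running[process] = want
            else:
                self.running.pop(process, None)
        return deltas


scheduler = ToyScheduler()
scheduler.desire("web", 3)
print(scheduler.converge())  # starts the missing instances
scheduler.crash("web")
print(scheduler.converge())  # replaces the crashed instance
```

The point of the sketch is the loop shape: the desired count is the source of truth, and each convergence pass only computes and applies the difference.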

Learning how Diego runs an app

The following sections describe how Diego handles a request to run an app. This is only one of the processes that happen in Diego. For example, running an app assumes the app has already been staged.

For more information about the staging process, see How Apps are Staged.

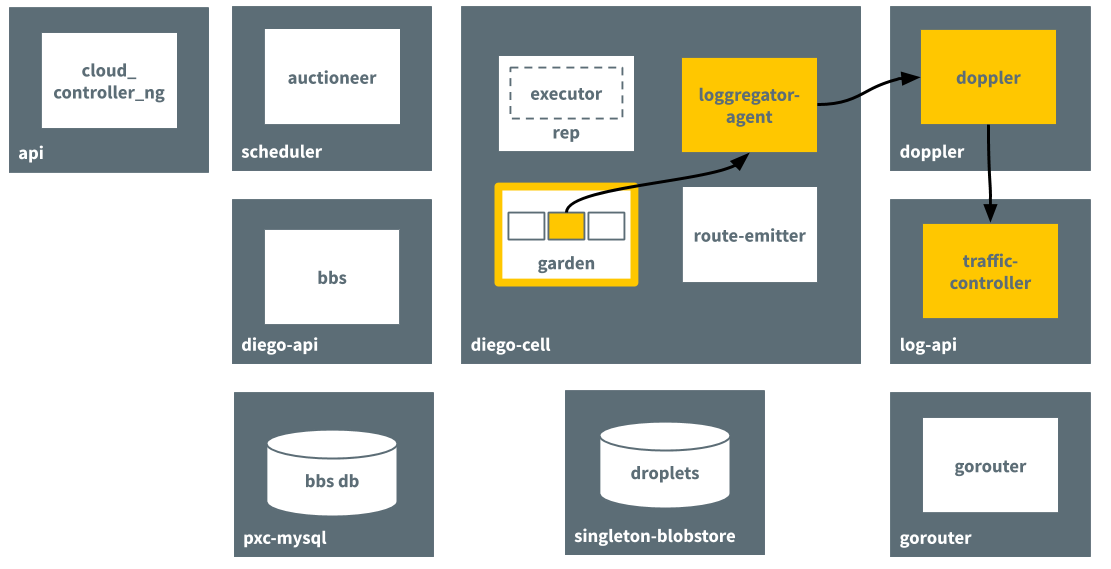

The following illustrations and descriptions do not include all of the components of Diego. For information about each Diego component, see Diego Components.

The architecture discussed in the following steps includes the following high-level blocks:

- api - cloud_controller_ng

- scheduler - auctioneer

- diego-api - bbs

- pxc-mysql - bbs db

- diego-cell - rep/executor, garden, loggregator-agent, route-emitter

- singleton-blobstore - droplets

- doppler - doppler

- log-api - traffic-controller

- gorouter - gorouter

Note: The images below are based on the VM names in an open-source deployment of Cloud Foundry Application Runtime. In TAS for VMs, the processes interact in the same way, but are on different VMs. Correct VM names for each process are in the components sections of this topic.

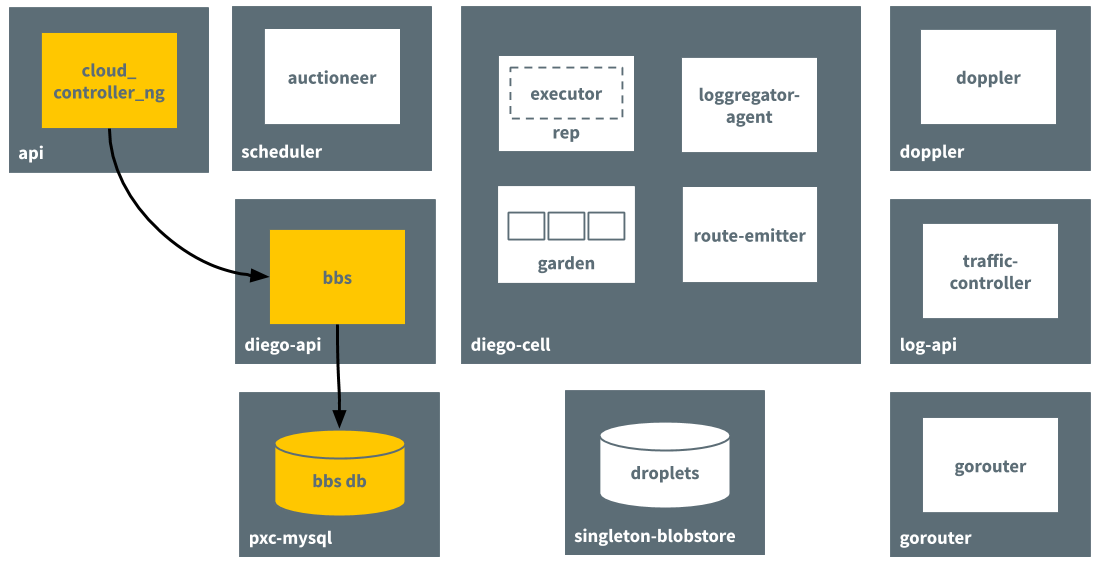

Step 1: Receiving the request to run an app

Cloud Controller passes requests to run apps to the Diego BBS, which stores information about the request in its database.

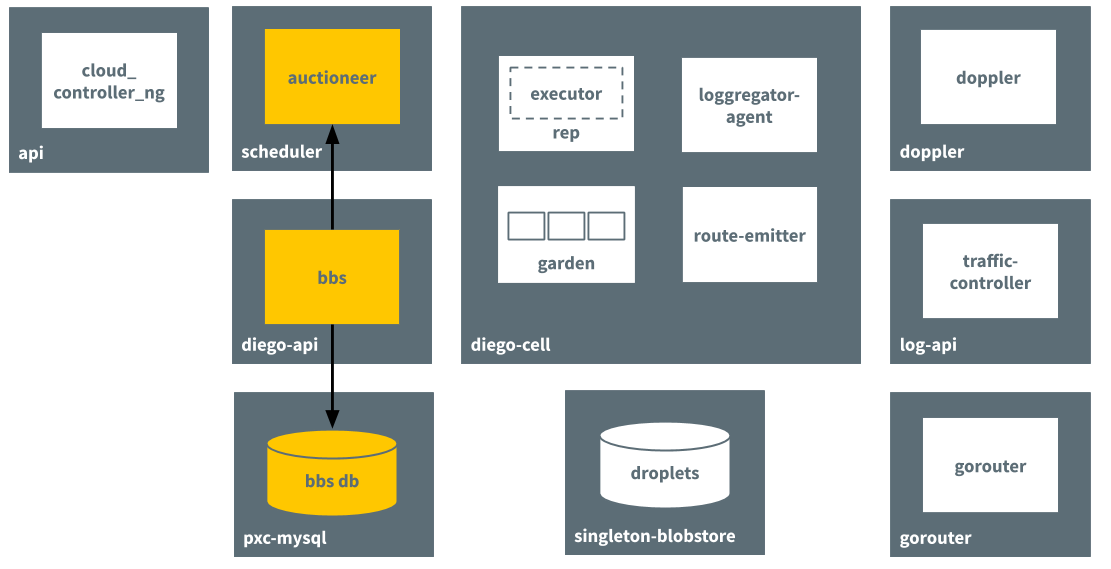

Step 2: Passing the request to the auctioneer process

The BBS contacts the Auctioneer to create an auction based on the desired resources for the app, referencing the information stored in its database.

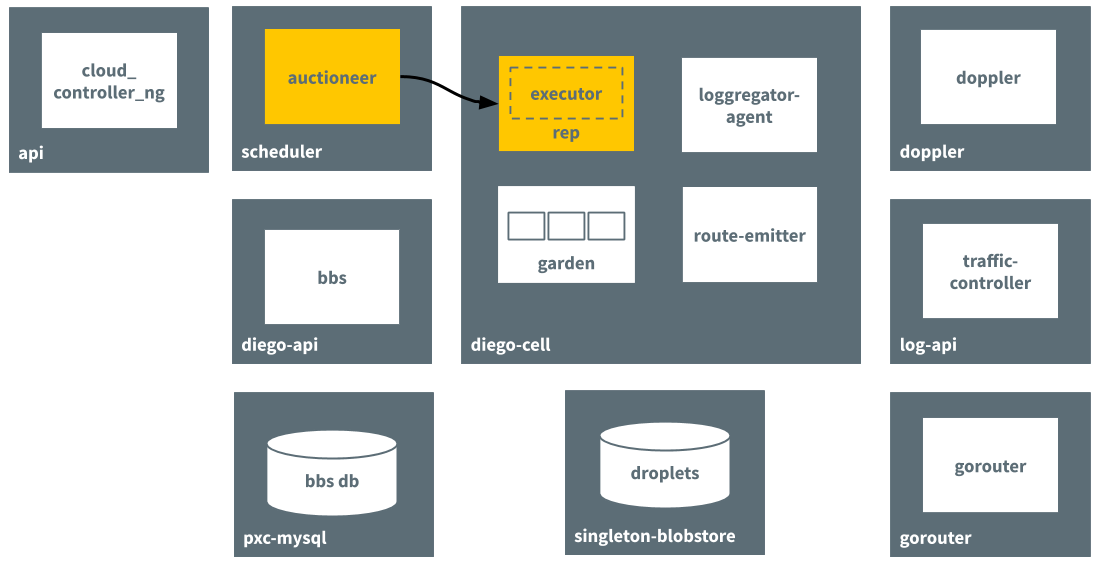

Step 3: Performing the auction

Through an auction, the Auctioneer finds a Diego Cell to run the app on. The Rep job on the Diego Cell accepts the auction request.

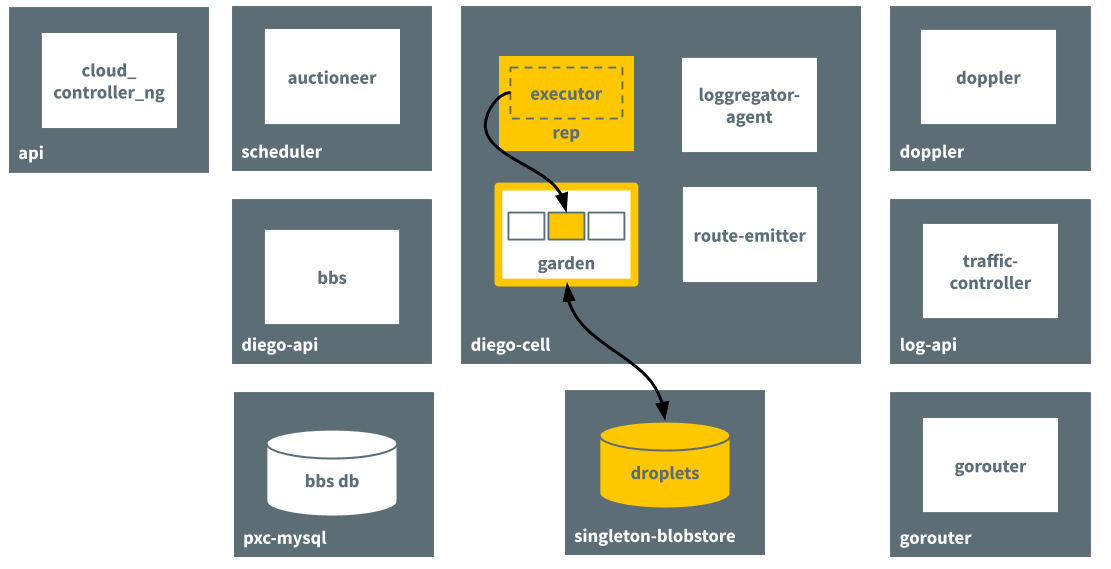
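As a rough illustration of Step 3, the sketch below picks a cell for an app from a list of candidates. It is a deliberately simplified toy: the `Cell` fields and the "most free memory wins" rule are assumptions made for this example, and the actual Diego auction considers more than this.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Cell:
    """Hypothetical view of a Diego Cell's free capacity."""
    name: str
    free_memory_mb: int
    free_disk_mb: int


def run_auction(cells: list, memory_mb: int, disk_mb: int) -> Optional[Cell]:
    """Return the fitting cell with the most free memory, or None if nothing fits.

    Toy scoring rule only; it is not the Auctioneer's real algorithm.
    """
    candidates = [
        c for c in cells
        if c.free_memory_mb >= memory_mb and c.free_disk_mb >= disk_mb
    ]
    if not candidates:
        return None
    winner = max(candidates, key=lambda c: c.free_memory_mb)
    # Reserve the resources on the winning cell.
    winner.free_memory_mb -= memory_mb
    winner.free_disk_mb -= disk_mb
    return winner


cells = [Cell("cell-a", 1024, 4096), Cell("cell-b", 2048, 4096)]
placed = run_auction(cells, memory_mb=512, disk_mb=1024)
print(placed.name if placed else "no cell can run the app")
```

In this toy version the caller plays the Auctioneer, and the returned `Cell` corresponds to the cell whose Rep accepts the work.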

Step 4: Creating the container and running the app

The in-process Executor creates a Garden container in the Diego Cell. Garden downloads the droplet that resulted from the staging process and runs the app in the container.

Step 5: Emitting a route for the app

The route-emitter process emits a route registration message to Gorouter for the new app running on the Diego Cell.
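The effect of a route registration can be sketched as a message that maps an app's URIs to the address of its container. Everything in this example is illustrative: the field names, the JSON encoding, and the `ToyRouter` class are assumptions for the sketch, not the message format or code that the route-emitter and Gorouter use.

```python
import json


def build_route_message(host: str, port: int, uris: list) -> str:
    """Serialize a toy registration message (field names are illustrative)."""
    return json.dumps({"host": host, "port": port, "uris": uris}, sort_keys=True)


class ToyRouter:
    """Hypothetical routing table keyed by URI."""

    def __init__(self) -> None:
        self.table = {}  # uri -> set of (host, port) backends

    def register(self, message: str) -> None:
        """Add the backend in the message to every URI it lists."""
        data = json.loads(message)
        for uri in data["uris"]:
            self.table.setdefault(uri, set()).add((data["host"], data["port"]))

    def backends(self, uri: str) -> list:
        """Return the known backends for a URI in a stable order."""
        return sorted(self.table.get(uri, set()))


router = ToyRouter()
router.register(build_route_message("10.0.1.5", 61001, ["my-app.example.com"]))
print(router.backends("my-app.example.com"))
```

Once the router holds an entry for the URI, incoming requests for that hostname can be forwarded to the container on the Diego Cell.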

Step 6: Sending logs to the Loggregator

The Loggregator agent forwards app logs, errors, and metrics to the TAS for VMs Loggregator.

For more information, see App Logging in TAS for VMs.

Diego components

The following table describes the jobs that are part of the TAS for VMs Diego BOSH release.

| Component | Function |
|---|---|
| Job: auctioneer, VM: diego_brain | |
| Job: bbs, VM: diego_database | |
| Job: file_server, VM: diego_brain | |
| Job: locket, VM: diego_database | |
| Job: rep, VM: diego_cell | |
| Job: route_emitter, VM: diego_cell | |
| Job: ssh_proxy, VM: diego_brain | |

Additional information

The following resources provide more information about Diego components:

- The Diego Release repository on GitHub.

- The Auctioneer repository on GitHub.

- The Bulletin Board System repository on GitHub.

- The File Server repository on GitHub.

- The Rep repository on GitHub.

- The Executor repository on GitHub.

- The Route-Emitter repository on GitHub.

- App SSH, App SSH Overview, and the Diego SSH repository on GitHub.

Maximum recommended Diego Cells

The maximum recommended number of Diego Cells is 250 for each TAS for VMs deployment. By default, there is a hard limit of 256 addresses for vSphere deployments that use Silk for networking. This hard limit is described in the Silk Release documentation on GitHub.

The default CIDR address block for the overlay network is 10.255.0.0/16. Each Diego Cell requires a subnet, and subnets (0-255) for each Diego Cell are allocated out of this network.
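The arithmetic behind the 0-255 subnet range can be checked with Python's standard `ipaddress` module. The sketch assumes one /24 subnet per Diego Cell, which is inferred from the 0-255 range rather than stated in this topic.

```python
import ipaddress

# Default overlay network from this topic.
overlay = ipaddress.ip_network("10.255.0.0/16")

# Assumption: each Diego Cell gets one /24 carved out of the overlay network.
cell_subnets = list(overlay.subnets(new_prefix=24))

print(len(cell_subnets))               # 256
print(cell_subnets[0])                 # 10.255.0.0/24
print(cell_subnets[-1])                # 10.255.255.0/24
print(cell_subnets[0].num_addresses)   # 256
```

Splitting a /16 into /24 blocks leaves 8 bits for the subnet index, so only 256 subnets exist, which is where the ceiling on Cell count comes from.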

TAS for VMs deployments that do not use Silk for networking do not have a hard limit. However, operating a foundation with more than 250 Diego Cells is not recommended for the following reasons:

- Changes to the foundation can take a long time, potentially days or weeks depending on the `max-in-flight` value. For example, if there is a certificate expiring in a week, there might not be enough time to rotate the certificates before expiry. For more information, see Basic Advice in Configuring TAS for VMs for Upgrades.

- A single foundation still has single points of failure, such as the certificates on the platform. The RAM that 250 Diego Cells provides is enough to host many business-critical apps.
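To see why the number of Cells updated in parallel matters so much for large foundations, a back-of-the-envelope estimate helps. The function below is a hypothetical helper, and the 30 minutes per batch is an invented figure for illustration, not a measured or documented value.

```python
import math


def rollout_hours(cells: int, max_in_flight: int, minutes_per_batch: float) -> float:
    """Estimate wall-clock hours to update all cells in sequential batches."""
    if max_in_flight < 1:
        raise ValueError("max_in_flight must be at least 1")
    batches = math.ceil(cells / max_in_flight)
    return batches * minutes_per_batch / 60


# Illustrative numbers only: 250 cells, 30 minutes per batch.
print(rollout_hours(250, max_in_flight=1, minutes_per_batch=30))  # 125.0
print(rollout_hours(250, max_in_flight=5, minutes_per_batch=30))  # 25.0
```

Under these assumed numbers, updating one Cell at a time takes more than five days for a single change, and several changes in sequence can exceed a one-week certificate deadline.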

Components from other releases

The following table describes jobs that interact closely with Diego but are not part of the TAS for VMs Diego BOSH release.

| Component | Function |
|---|---|
| Job: bosh-dns-aliases, VM: all | |
| Job: cc_uploader, VM: diego_brain | |
| Job: database, VM: mysql | |
| Job: loggregator-agent, VM: all | |
| Job: cloud_controller_clock, VM: clock_global | |

App lifecycle binaries

The following platform-specific binaries deploy apps and govern their lifecycle:

- The Builder, which stages a TAS for VMs app. The Builder runs as a Task on every staging request. It performs static analysis on the app code and does any necessary pre-processing before the app is first run.

- The Launcher, which runs a TAS for VMs app. The Launcher is set as the Action on the `DesiredLRP` for the app. It executes the start command with the correct system context, including working directory and environment variables.

- The Healthcheck, which performs a status check on a running TAS for VMs app from inside the container. The Healthcheck is set as the Monitor action on the `DesiredLRP` for the app.
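The relationship between the Launcher, the Healthcheck, and the `DesiredLRP` can be sketched as a small data structure. This is a heavily reduced, hypothetical model: the class names, field names, binary paths, and the `-port=` argument are placeholders chosen for illustration, not the real BBS schema or lifecycle binary flags.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyAction:
    """Hypothetical action: a binary path plus its arguments."""
    path: str
    args: tuple = ()


@dataclass(frozen=True)
class ToyDesiredLRP:
    """Hypothetical, heavily reduced model of a DesiredLRP."""
    process_guid: str
    instances: int
    action: ToyAction   # the Launcher invocation that starts the app
    monitor: ToyAction  # the Healthcheck invocation that checks the app


def build_desired_lrp(process_guid: str, start_command: str,
                      instances: int, port: int) -> ToyDesiredLRP:
    """Wire the Launcher in as the action and the Healthcheck as the monitor."""
    launcher = ToyAction(path="launcher", args=(start_command,))
    healthcheck = ToyAction(path="healthcheck", args=(f"-port={port}",))
    return ToyDesiredLRP(process_guid, instances, launcher, healthcheck)


lrp = build_desired_lrp("example-guid", "bundle exec rackup", instances=2, port=8080)
print(lrp.action.path, lrp.action.args)
print(lrp.monitor.path, lrp.monitor.args)
```

The Builder is absent from this structure because it runs as a Task at staging time rather than as part of the long-running process.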

Current implementations

- The buildpack app lifecycle implements the TAS for VMs buildpack-based deployment strategy. For more information, see the buildpackapplifecycle repository on GitHub.

- The Docker app lifecycle implements a Docker deployment strategy. For more information, see the dockerapplifecycle repository on GitHub.

Additional information

The following resources provide more information about components from other releases that interact closely with Diego:

- The CC-Uploader repository on GitHub.

- The Loggregator Release repository on GitHub.

- The TPS repository on GitHub.