NSX Advanced Load Balancer as L7 Ingress Controller

To use NSX Advanced Load Balancer (NSX ALB) as the ingress controller in a Kubernetes cluster, you must have the NSX ALB Enterprise version. Alternatively, you can use Contour as the ingress controller in your Tanzu Kubernetes Grid clusters.

When integrated through AKO (Avi Kubernetes Operator) in Tanzu Kubernetes Grid, NSX ALB supports three ingress modes: NodePort, ClusterIP, and NodePortLocal. Choose a mode based on your requirements.

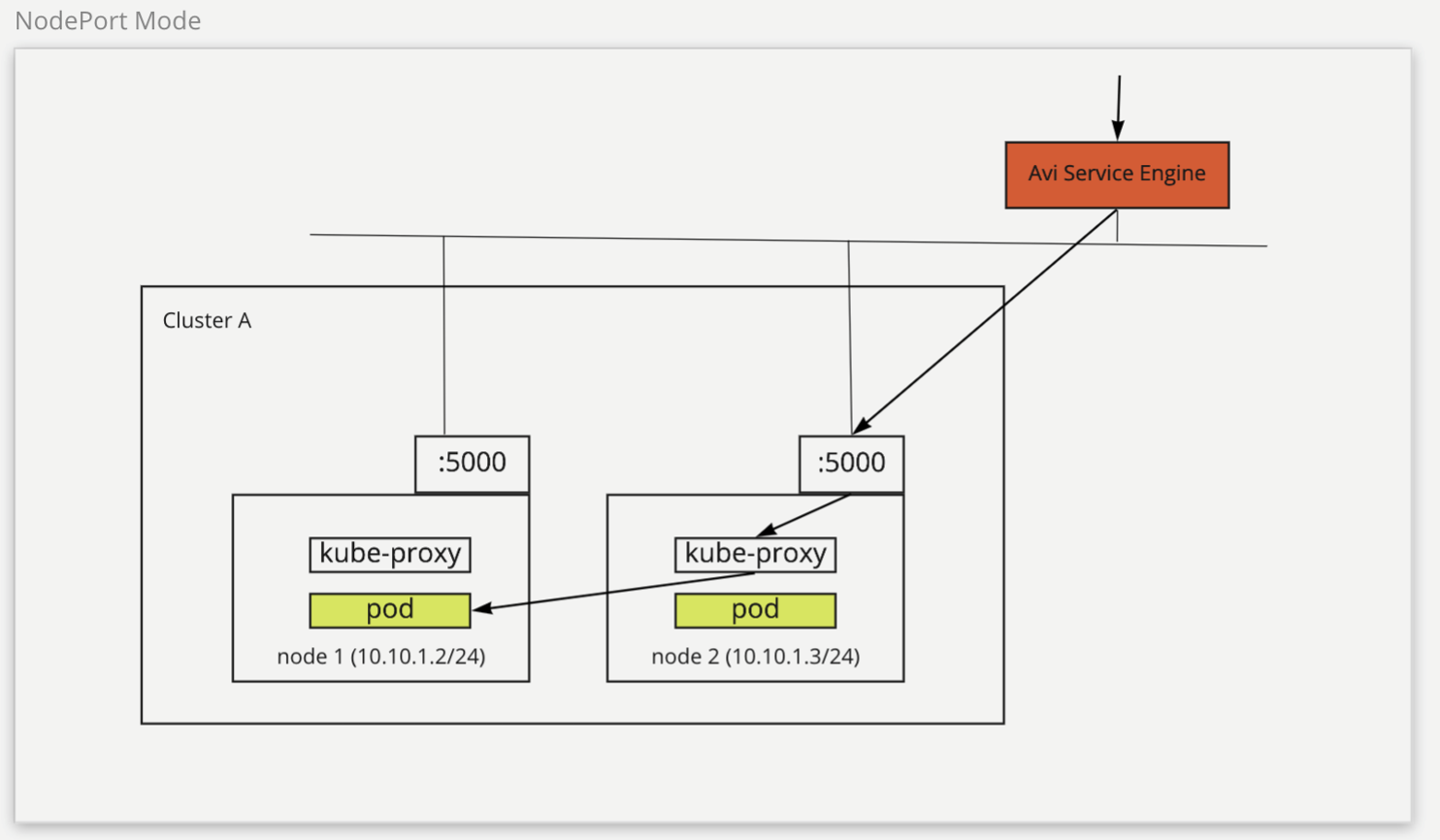

NodePort

When choosing to use the NodePort mode for NSX ALB ingress, the ingress backend service must be NodePort mode. In this type, the network traffic is routed from the client to the NSX ALB SE, and then to the cluster nodes, before it reaches the pods. The NSX ALB SE routes the traffic to the nodes, and kube-proxy helps route the traffic from the nodes to the target pods.

The following diagram describes how the network traffic is routed in the NodePort mode:

The NodePort mode supports sharing an NSX ALB SE between different clusters. However, because this mode requires a NodePort backend service, it exposes a port on the cluster nodes. It also adds an extra hop, because kube-proxy must route the traffic from the node to the pod.
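As a rough illustration of the backend requirement described above, the following manifest sketches a NodePort Service and an Ingress that routes to it. The name web, the host web.example.com, and the port numbers are hypothetical, and the sketch assumes that NSX ALB is the default ingress controller, so no ingress class is specified.

```yaml
# Hypothetical example: all names, hosts, and ports are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  type: NodePort          # NodePort ingress mode requires a NodePort backend service
  selector:
    app: web
  ports:
    - port: 80            # service port referenced by the Ingress below
      targetPort: 8080    # container port on the pods (assumed)
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web
spec:
  rules:
    - host: web.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web
                port:
                  number: 80
```

Because no nodePort value is set, Kubernetes assigns one from its node port range; this is the port that becomes reachable on every node.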

To configure the NodePort ingress mode on the management cluster:

- Create an AKODeploymentConfig CR object as follows:

      apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
      kind: AKODeploymentConfig
      metadata:
        name: install-ako-for-l7-node-port
      spec:
        adminCredentialRef:
          name: avi-controller-credentials
          namespace: tkg-system-networking
        certificateAuthorityRef:
          name: avi-controller-ca
          namespace: tkg-system-networking
        controller: 10.191.192.137
        cloudName: Default-Cloud
        serviceEngineGroup: Default-Group
        clusterSelector:
          matchLabels:
            nsx-alb-node-port-l7: "true"
        controlPlaneNetwork:
          cidr: 10.191.192.0/20
          name: VM Network
        dataNetwork:
          cidr: 10.191.192.0/20
          name: VM Network
        extraConfigs:
          cniPlugin: antrea
          disableStaticRouteSync: true
          ingress:
            defaultIngressController: true # optional, if set to false, you need to specify ingress class in your ingress object
            disableIngressClass: false # required
            serviceType: NodePort # required
            shardVSSize: SMALL
            nodeNetworkList: # required
              - networkName: VM Network
                cidrs:
                  - 10.191.192.0/20

- Apply the changes on the management cluster:

      kubectl --context=MGMT-CLUSTER-CONTEXT apply -f install-ako-for-l7-node-port.yaml

- To enable the NodePort ingress mode on the workload clusters, create a workload cluster configuration YAML file, and add the following field:

      AVI_LABELS: '{"nsx-alb-node-port-l7":"true"}'

- Deploy the workload cluster by using the tanzu cluster create command.

  For more information on deploying workload clusters to vSphere, see Create Workload Clusters.

The NSX ALB ingress service provider is now configured to use the NodePort mode on the workload clusters whose AVI_LABELS match the cluster selector in the install-ako-for-l7-node-port AKODeploymentConfig object.

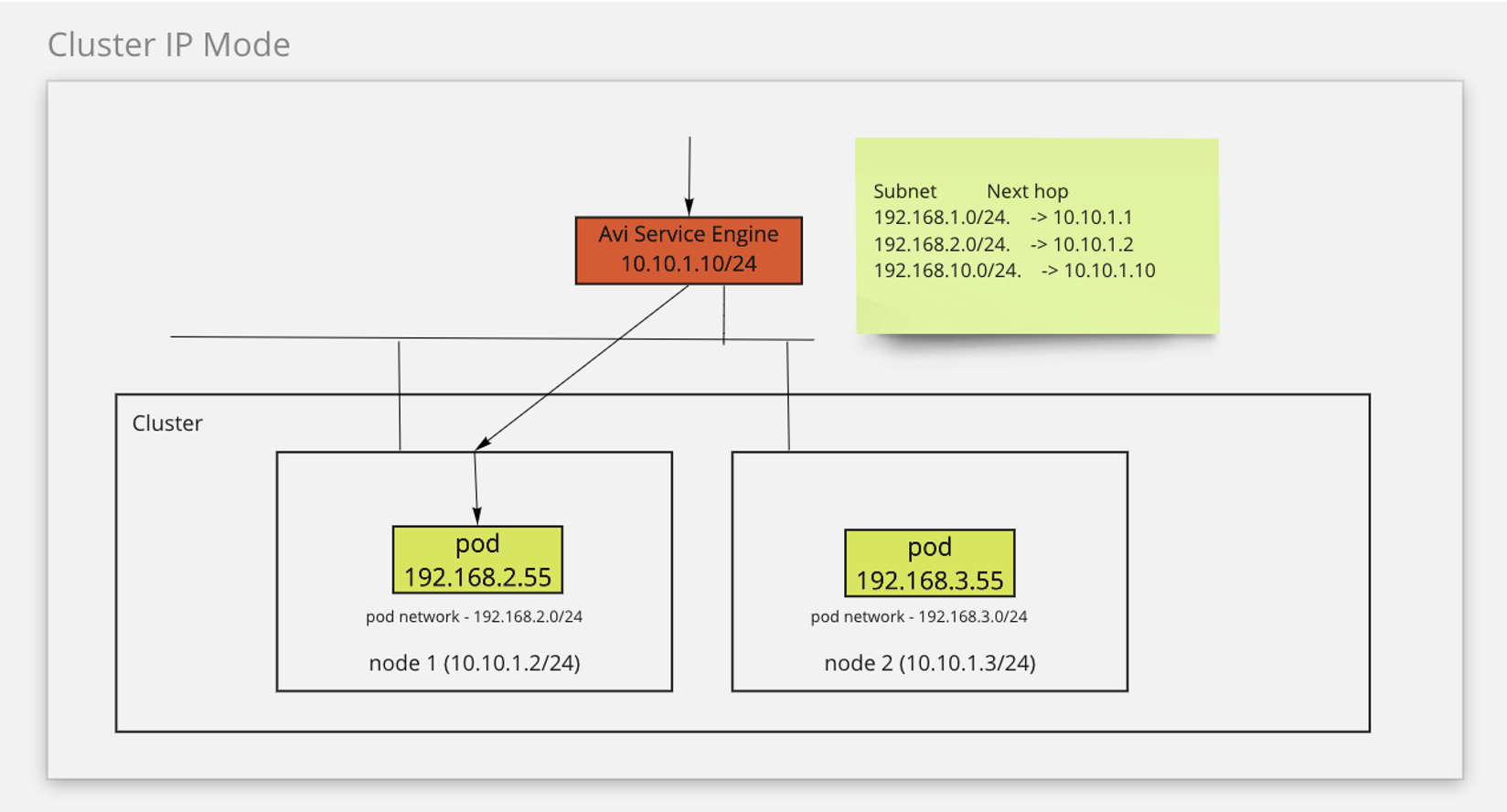

ClusterIP

In the ClusterIP mode, the network traffic is routed from the client to the NSX ALB SE, and then to the pods. This mode requires ClusterIP as the ingress backend service type. The NSX ALB SE routes the traffic to the pods by leveraging the static routes.

The following diagram describes how the network traffic is routed in the ClusterIP mode:

The ClusterIP mode simplifies the network traffic by routing it directly from the SE to the pods. This mode also avoids exposing ports on the cluster nodes. However, in this mode, SEs cannot be shared between clusters because an SE must be attached to a single cluster.
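A minimal sketch of a backend for this mode, assuming a hypothetical application named api. The Service uses the ClusterIP type, so no node port is opened. The ingressClassName value avi-lb is an assumption about the IngressClass that AKO creates; it is needed only when NSX ALB is not the default ingress controller, and the actual name can be checked with kubectl get ingressclass.

```yaml
# Hypothetical example: all names, hosts, and ports are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: api
spec:
  type: ClusterIP         # ClusterIP ingress mode requires a ClusterIP backend service
  selector:
    app: api
  ports:
    - port: 80
      targetPort: 8080    # container port on the pods (assumed)
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: api
spec:
  ingressClassName: avi-lb   # assumed class name; omit if NSX ALB is the default ingress controller
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: api
                port:
                  number: 80
```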

To enable the ClusterIP ingress mode:

- Create an AKODeploymentConfig CR object as follows:

      apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
      kind: AKODeploymentConfig
      metadata:
        name: install-ako-for-l7-cluster-ip
      spec:
        adminCredentialRef:
          name: avi-controller-credentials
          namespace: tkg-system-networking
        certificateAuthorityRef:
          name: avi-controller-ca
          namespace: tkg-system-networking
        controller: 10.191.192.137
        cloudName: Default-Cloud
        serviceEngineGroup: SEG-2 # must be unique, can only be used for one cluster
        clusterSelector:
          matchLabels:
            nsx-alb-cluster-ip-l7: "true"
        controlPlaneNetwork:
          cidr: 10.191.192.0/20
          name: VM Network
        dataNetwork:
          cidr: 10.191.192.0/20
          name: VM Network
        extraConfigs:
          cniPlugin: antrea
          disableStaticRouteSync: false # required
          ingress:
            defaultIngressController: true # optional, if set to false, you need to specify ingress class in your ingress object
            disableIngressClass: false # required
            serviceType: ClusterIP # required
            shardVSSize: SMALL
            nodeNetworkList: # required
              - networkName: VM Network
                cidrs:
                  - 10.191.192.0/20

- Apply the changes on the management cluster:

      kubectl --context=MGMT-CLUSTER-CONTEXT apply -f install-ako-for-l7-cluster-ip.yaml

- To enable the ClusterIP ingress mode on the workload clusters, create a workload cluster configuration YAML file, and add the following field:

      AVI_LABELS: '{"nsx-alb-cluster-ip-l7":"true"}'

- Deploy the workload cluster by using the tanzu cluster create command.

  For more information on deploying workload clusters to vSphere, see Create Workload Clusters.

The NSX ALB ingress service provider is now configured to use the ClusterIP mode on the workload clusters whose AVI_LABELS match the cluster selector in the install-ako-for-l7-cluster-ip AKODeploymentConfig object.

To enable the ClusterIP ingress mode on other workload clusters, you must create a new SE group in Avi Controller, and then create a new AKODeploymentConfig object that specifies the name of the new SE group in the serviceEngineGroup field before you apply the file on the management cluster.
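For example, an AKODeploymentConfig object for a second ClusterIP workload cluster might differ from the earlier ClusterIP example only in the fields shown here. This is a sketch, not a complete object: the object name, the SEG-3 group name, and the label key are hypothetical, and all omitted fields are assumed to stay the same as in that example.

```yaml
# Sketch only: fields not shown are assumed to match the earlier ClusterIP example.
apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
kind: AKODeploymentConfig
metadata:
  name: install-ako-for-l7-cluster-ip-2   # hypothetical name
spec:
  serviceEngineGroup: SEG-3               # hypothetical; must not be used by any other cluster
  clusterSelector:
    matchLabels:
      nsx-alb-cluster-ip-l7-2: "true"     # hypothetical label; set the same key in AVI_LABELS
```

Using a distinct label key keeps the two cluster selectors from matching the same workload cluster.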

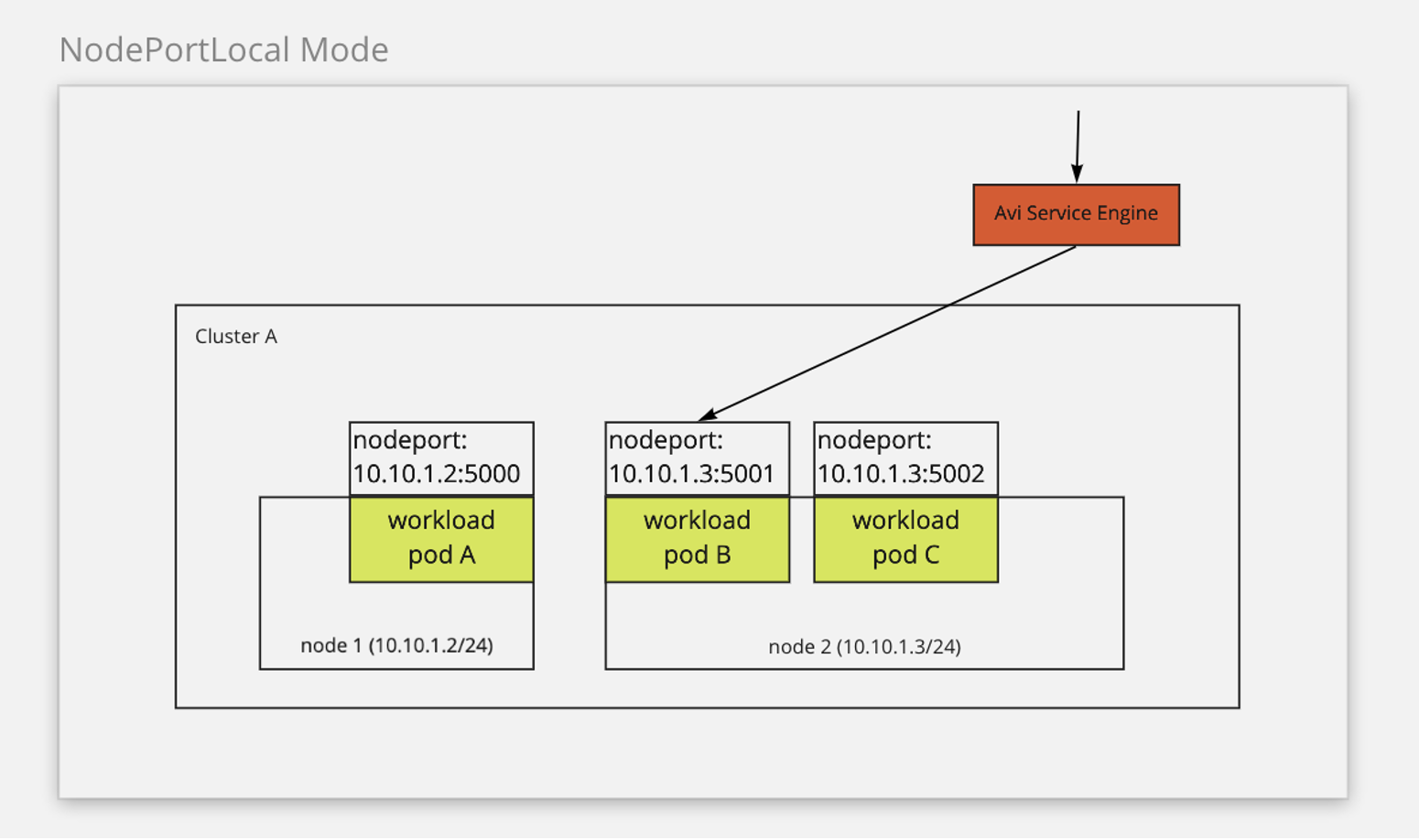

NodePortLocal (For Antrea CNI)

If you use Antrea as the CNI, you can enable the NodePortLocal ingress mode on NSX ALB. It leverages the NodePortLocal feature of Antrea to directly route the traffic from the SE to the pod. In this mode, the network traffic is routed from the client to the NSX ALB SE, and then to the pods. This mode requires ClusterIP as the ingress backend type. Additionally, the ANTREA_NODEPORTLOCAL configuration variable must be set to true in Tanzu Kubernetes Grid. For more information on the NodePortLocal feature in Antrea, see NodePortLocal (NPL) in the Antrea Documentation.

NoteNSX ALB

NodePortLocalingress mode is not supported for management clusters. You cannot run NSX ALB as a service type with ingress modeNodePortLocalfor traffic to the management cluster. You can only configureNodePortLocalingress to workload clusters. Configure management clusters withAVI_INGRESS_SERVICE_TYPEset to eitherNodePortorClusterIP. Default isNodePort.

The following diagram describes how the network traffic is routed in the NodePortLocal mode:

The NodePortLocal mode simplifies the network traffic by routing it directly from the SE to the pods. This mode supports shared SEs for the clusters and prevents the exposure of the ports in a cluster.

- To enable the NodePortLocal ingress mode on the workload clusters, create a workload cluster configuration YAML file, and add the following fields:

      AVI_LABELS: '{"nsx-alb-node-port-local-l7":"true"}'
      ANTREA_NODEPORTLOCAL: "true"

- Deploy the workload cluster by using the tanzu cluster create command.

The NSX ALB ingress service provider is now configured to use the NodePortLocal mode on the workload clusters whose AVI_LABELS match the cluster selector in the install-ako-l7-node-port-local AKODeploymentConfig object.
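The AKODeploymentConfig object referenced above is not shown in this section. A minimal sketch of what it might look like, assuming that it mirrors the NodePort example with serviceType set to NodePortLocal and a cluster selector that matches the AVI_LABELS value used in the steps above. The controller address, network names, CIDRs, and SE group are carried over from the earlier examples as placeholders, and the disableStaticRouteSync value is an assumption.

```yaml
# Sketch under stated assumptions; adjust every value to your environment.
apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
kind: AKODeploymentConfig
metadata:
  name: install-ako-l7-node-port-local    # name as referenced in the text above
spec:
  adminCredentialRef:
    name: avi-controller-credentials
    namespace: tkg-system-networking
  certificateAuthorityRef:
    name: avi-controller-ca
    namespace: tkg-system-networking
  controller: 10.191.192.137              # placeholder from the earlier examples
  cloudName: Default-Cloud
  serviceEngineGroup: Default-Group       # this mode supports shared SEs
  clusterSelector:
    matchLabels:
      nsx-alb-node-port-local-l7: "true"  # matches the AVI_LABELS value above
  controlPlaneNetwork:
    cidr: 10.191.192.0/20
    name: VM Network
  dataNetwork:
    cidr: 10.191.192.0/20
    name: VM Network
  extraConfigs:
    cniPlugin: antrea                     # NodePortLocal requires Antrea
    disableStaticRouteSync: true          # assumed, as in the NodePort example
    ingress:
      defaultIngressController: true
      disableIngressClass: false
      serviceType: NodePortLocal
      shardVSSize: SMALL
      nodeNetworkList:
        - networkName: VM Network
          cidrs:
            - 10.191.192.0/20
```

As with the other modes, an object like this would be applied on the management cluster before the workload cluster is created.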

IPv4/IPv6 Dual-Stack Support

For information about how to create dual-stack clusters, see Create Dual-Stack Clusters with NSX Advanced Load Balancer as L7 Ingress Controller.

NSX ALB L7 Ingress Support in Management Cluster

Only the NodePort ingress mode is supported in the management cluster if you use NSX ALB as the cluster endpoint VIP provider. If you do not use NSX ALB as the cluster endpoint VIP provider, all three ingress modes are supported.

To enable L7 ingress in the management cluster:

- Edit the AKODeploymentConfig file in the management cluster:

      kubectl --context=MGMT-CLUSTER-CONTEXT edit adc install-ako-for-management-cluster

- In the file that opens, modify the spec.extraConfigs.ingress field as shown in the following example:

      extraConfigs:
        cniPlugin: antrea
        disableStaticRouteSync: true
        ingress:
          defaultIngressController: true # optional, if set to false, you need to specify ingress class in your ingress object
          disableIngressClass: false # required
          serviceType: NodePort # required
          shardVSSize: SMALL
          nodeNetworkList: # required
            - networkName: VM Network
              cidrs:
                - 10.191.192.0/20