IPv4/IPv6 Dual-Stack Networking

This topic explains how to configure Tanzu Kubernetes Grid management and workload clusters to use dual-stack IPv4/IPv6 networking for load balancing, ingress control, Node IPAM, control plane endpoint, and other networking applications.

Setting the Primary IP Family

When you configure dual-stack networking for a cluster, you set TKG_IP_FAMILY in its configuration file to either "IPv4,IPv6" or "IPv6,IPv4".

The first item in this pair determines the primary IP family for the cluster, which sets the IP address format for certain core services, for example:

- The Kubernetes API

- The external control plane endpoint address by which external clients communicate with the Kubernetes API

- The default for single-stack services that are created when no IP family is specified
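As a minimal sketch of how the first entry selects the primary family, the following shell function extracts it from a TKG_IP_FAMILY value. The name primary_ip_family is hypothetical; it is an illustration, not part of the Tanzu CLI.

```shell
# primary_ip_family: print the first (primary) family from a TKG_IP_FAMILY value.
# Hypothetical helper for illustration only.
primary_ip_family() {
  local value
  # Normalize case and strip quotes and spaces so that "IPv4,IPv6" and
  # ipv4,ipv6 are treated the same way.
  value=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]' | tr -d '" ')
  # Everything before the first comma is the primary family.
  printf '%s\n' "${value%%,*}"
}

primary_ip_family "IPv6,IPv4"   # prints ipv6
```

A cluster configured this way would use IPv6 for the Kubernetes API and the control plane endpoint, with IPv4 as the secondary family.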

Prerequisites and Limitations

- Before implementing IPv4/IPv6 networking, configure vCenter Server to support both IPv4 and IPv6 connectivity. For information, see Configure vSphere for IPv6 in the vSphere 8 documentation.

- You can configure dual-stack networking on clusters with Ubuntu or Photon OS nodes.

- You cannot deploy a dual-stack management cluster with the installer interface.

- LoadBalancer services provided by NSX Advanced Load Balancer or Kube-VIP do not support dual-stack access. It might be possible with other providers.

- You can use NSX ALB or Kube-VIP as the control plane endpoint provider for a dual-stack cluster.

- Only the core add-on components, such as Antrea, Calico, CSI, CPI, and Pinniped, have been validated for dual-stack support in this release.

Configure Node IPAM with Dual-Stack Networking

To configure a cluster for Node IPAM with dual-stack networking, you need pools of available IP addresses with a gateway address for both IPv4 and IPv6.

In the cluster configuration file, you set NODE_IPAM_IP_POOL_* or MANAGEMENT_NODE_IPAM_IP_POOL_* variables to values that apply to the cluster’s primary IP family, which depends on its TKG_IP_FAMILY configuration setting, as described in Setting the Primary IP Family.

For the other IP pool, you set additional variables that are identical to the ones that you set for the primary IP pool, but with SECONDARY_ added. For example:

- Workload clusters:

      NODE_IPAM_SECONDARY_IP_POOL_APIVERSION: "ipam.cluster.x-k8s.io/v1alpha2"
      NODE_IPAM_SECONDARY_IP_POOL_KIND: "InClusterIPPool"
      NODE_IPAM_SECONDARY_IP_POOL_NAME: ""

- Standalone management clusters:

      MANAGEMENT_NODE_IPAM_SECONDARY_IP_POOL_GATEWAY: ""
      MANAGEMENT_NODE_IPAM_SECONDARY_IP_POOL_ADDRESSES: []
      MANAGEMENT_NODE_IPAM_SECONDARY_IP_POOL_SUBNET_PREFIX: ""
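Because the secondary pool variables for a standalone management cluster ship with empty placeholder values, it can help to confirm that all three are filled in before deploying. The sketch below assumes a flat `NAME: value` configuration file; missing_secondary_pool_vars is a hypothetical helper, not a Tanzu CLI command.

```shell
# missing_secondary_pool_vars: list the secondary Node IPAM variables that a
# management cluster configuration file leaves unset or empty.
# Hypothetical helper; the variable names are the ones shown above.
missing_secondary_pool_vars() {
  local file="$1" suffix name value
  for suffix in GATEWAY ADDRESSES SUBNET_PREFIX; do
    name="MANAGEMENT_NODE_IPAM_SECONDARY_IP_POOL_${suffix}"
    # Print the text after "NAME:" with surrounding whitespace trimmed.
    value=$(sed -n "/^${name}:/{s/^${name}:[[:space:]]*//;s/[[:space:]]*\$//;p;}" "$file")
    # Treat a missing variable, "" and [] as not set.
    case "$value" in
      ''|'""'|'[]') printf '%s\n' "$name" ;;
    esac
  done
}
```

For example, `missing_secondary_pool_vars cluster-config.yaml` prints nothing when all three variables have values, and prints the name of each variable that still needs one otherwise. The file name cluster-config.yaml is a placeholder.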

Create Dual-Stack Clusters with Kube-VIP

You can use Kube-VIP as the control plane endpoint provider for a dual-stack cluster.

To configure dual-stack networking on clusters, follow the procedure in Deploy Management Clusters or Create Workload Clusters, as required.

In the cluster configuration file:

- Set the IP family configuration variable TKG_IP_FAMILY: ipv4,ipv6.

- Optionally, set the service CIDRs and cluster CIDRs.

  Note: There are two CIDRs for each variable. The IP families of these CIDRs follow the order of the configured TKG_IP_FAMILY. The largest CIDR range that is permitted for the IPv4 addresses is /12, and the largest IPv6 SERVICE_CIDR range is /108. If you do not set the CIDRs, the default values are used.
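The size limits in the note can be checked before you deploy. The following is a rough sketch, assuming a comma-separated SERVICE_CIDR value with one IPv4 and one IPv6 range; check_service_cidr is a hypothetical helper and the CIDR values in the usage example are illustrative only.

```shell
# check_service_cidr: check a dual-stack SERVICE_CIDR value against the limits
# in the note above (IPv4 no larger than /12, IPv6 no larger than /108).
# Hypothetical helper for illustration only.
check_service_cidr() {
  local cidr prefix min status=0
  local IFS=','
  for cidr in $1; do
    prefix="${cidr##*/}"          # the number after the slash
    case "$cidr" in
      *:*) min=108 ;;             # contains a colon: treat as IPv6
      *)   min=12 ;;              # otherwise: treat as IPv4
    esac
    if [ "$prefix" -lt "$min" ]; then
      echo "too large: $cidr (minimum prefix length is /$min)"
      status=1
    fi
  done
  return "$status"
}

check_service_cidr "100.64.0.0/13,fd00:100:64::/108" && echo "within limits"
```

A smaller prefix length means a larger range, so a value such as 10.0.0.0/8 is reported as too large.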

Services whose spec sets ipFamilyPolicy to PreferDualStack or RequireDualStack can now be accessed through IPv4 or IPv6.
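To find out whether a Service manifest opts in to dual-stack access, you can look for one of the two policy values in its YAML. This is a minimal sketch that only inspects a local file; uses_dual_stack_policy is a hypothetical helper name.

```shell
# uses_dual_stack_policy: succeed when a Service manifest sets ipFamilyPolicy
# to PreferDualStack or RequireDualStack. Hypothetical helper.
uses_dual_stack_policy() {
  grep -Eq '^[[:space:]]*ipFamilyPolicy:[[:space:]]*(PreferDualStack|RequireDualStack)[[:space:]]*$' "$1"
}
```

For example, `uses_dual_stack_policy service.yaml && echo "dual-stack"` prints the message only for manifests that request dual-stack; a manifest that sets SingleStack or omits the field does not match. The file name service.yaml is a placeholder.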

Note: The end-to-end tests for the dual-stack feature in upstream Kubernetes can fail because a cluster node advertises only its primary IP address (in this case, the IPv4 address) as its IP address.

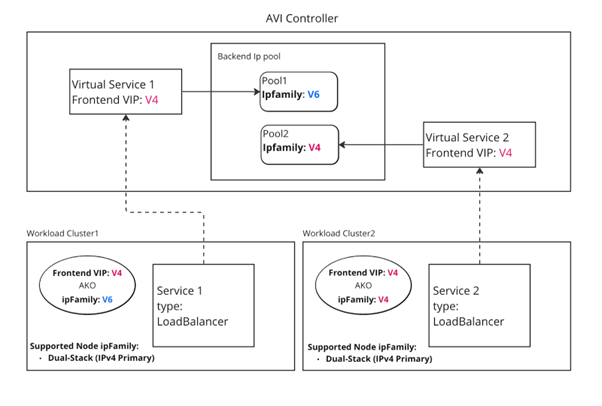

Create Dual-Stack Clusters with NSX Advanced Load Balancer as Cluster Load Balancer Service Provider

NSX ALB can serve as the external load balancer provider for workload clusters with dual-stack networking in a Tanzu Kubernetes Grid deployment. You set the primary IP family to use in the AKO configuration. The IP type of the data network CIDR determines the IP type of the load balancer service frontend VIP. The AKO ipFamily setting determines the IP type of the backend pool of the NSX ALB service.

For general information about how to use NSX ALB as the cluster load balancer service provider, see NSX Advanced Load Balancer as Cluster Load Balancer Service Provider.

To implement dual-stack clusters, you modify the AKODeploymentConfig file for those clusters. For information about how to access the AKODeploymentConfig for a cluster, see View the AKODeploymentConfig CR Object for a Cluster.

Supported Configurations

The NSX ALB load balancer service in a Tanzu Kubernetes Grid deployment supports the dual-stack configurations shown in the table below.

| Cluster IpFamily | AKO IpFamily | Frontend VIP IpFamily | Supported? |
|---|---|---|---|
| IPv4,IPv6 | V4 | V4 | ✔ |
| IPv4,IPv6 | V6 | V4 | ✔ |
| IPv4,IPv6 | V4 | V6 | ✖ |
| IPv4,IPv6 | V6 | V6 | ✖ |
| IPv6,IPv4 | V4 | V4 | ✖ |
| IPv6,IPv4 | V6 | V4 | ✖ |
| IPv6,IPv4 | V4 | V6 | ✖ |
| IPv6,IPv4 | V6 | V6 | ✖ |
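The table reduces to a simple rule: the load balancer service is supported only in an IPv4 primary cluster with an IPv4 frontend VIP, with either AKO ipFamily. As an illustration, the lookup can be written as a shell function; lb_config_supported is a hypothetical name and the function only encodes the rows above.

```shell
# lb_config_supported: succeed when the combination is marked as supported in
# the load balancer table above. Hypothetical helper.
# Arguments: cluster IP family order, AKO ipFamily, frontend VIP IP family.
lb_config_supported() {
  case "$1/$2/$3" in
    "IPv4,IPv6/V4/V4" | "IPv4,IPv6/V6/V4") return 0 ;;
    *) return 1 ;;
  esac
}

if lb_config_supported "IPv4,IPv6" V6 V4; then
  echo "supported"
else
  echo "not supported"
fi
```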

Known Limitations

The following limitations apply when using dual-stack networking in workload clusters with TKG v2.5.

- AKO does not support dual-stack for service type LoadBalancer. The LoadBalancer service has a single VIP.

- NSX ALB does not support creating an IPv6 VIP for L4 service type LoadBalancer.

- AKO only supports one type of IP backend pool per cluster. The AKO ipFamily type must be the same as the IP type of the backend pool.

- AKO cannot communicate with an IPv6 Kubernetes API server. You cannot install AKO in IPv6 single-stack or IPv6 primary dual-stack Kubernetes clusters. For more information, see Support for IPv6 in AKO in the NSX ALB documentation.

- After you create a cluster, you cannot change the primary IP family or toggle an existing cluster between single stack and dual-stack.

Configure AKO to Use an IPv4 Backend in an IPv4 Primary Dual-Stack Cluster

- Edit the AKODeploymentConfig CR object, or create a new customized AKODeploymentConfig file.

  - Set the AKO ipFamily to V4.
  - Set the data network CIDR to IPv4.
  - To apply this AKO configuration to all workload clusters, update install-ako-for-all.

  For example:

      apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
      kind: AKODeploymentConfig
      metadata:
        name: install-ako-on-dual-stack-v4-primary
      spec:
        clusterSelector:
          matchLabels:
            cluster-type: "install-ako-on-dual-stack-v4-primary"
        adminCredentialRef:
          name: avi-controller-credentials
          namespace: tkg-system-networking
        certificateAuthorityRef:
          name: avi-controller-ca
          namespace: tkg-system-networking
        cloudName: Default-Cloud
        controlPlaneNetwork:
          cidr: 10.218.48.0/20
          name: VM Network
        controller: 10.186.103.243
        controllerVersion: 22.1.2
        dataNetwork:
          cidr: 10.218.48.0/20
          name: VM Network
        extraConfigs:
          disableStaticRouteSync: false
          ipFamily: V4
          ingress:
            defaultIngressController: false
            disableIngressClass: true
            nodeNetworkList:
              - networkName: VM Network

- Create the workload cluster from a configuration file.

  To use a custom AKO configuration, set AVI_LABELS in the cluster configuration to match the cluster selector in AKODeploymentConfig.

      CLUSTER_NAME: test-cluster
      TKG_IP_FAMILY: ipv4,ipv6
      AVI_LABELS: '{"cluster-type": "install-ako-on-dual-stack-v4-primary"}'

- After the cluster is created, check the avi-system/avi-k8s-config ConfigMap to make sure that the AKO ipFamily is V4 and the vipNetworkList CIDR is IPv4.

      apiVersion: v1
      data:
        apiServerPort: "8080"
        cloudName: Default-Cloud
        clusterName: test-cluster
        controllerIP: 10.186.103.243
        controllerVersion: 22.1.2
        defaultIngController: "false"
        deleteConfig: "false"
        disableStaticRouteSync: "false"
        fullSyncFrequency: "1800"
        ipFamily: V4
        logLevel: INFO
        nodeNetworkList: '[{"networkName":"VM Network"}]'
        serviceEngineGroupName: Default-Group
        serviceType: NodePort
        useDefaultSecretsOnly: "false"
        vipNetworkList: '[{"networkName":"VM Network","cidr":"10.218.48.0/20"}]'
      kind: ConfigMap

- Create a LoadBalancer object YAML file.

  For example, create a file named loadbalancer.yaml with the following contents. Set spec.ipFamilyPolicy to either RequireDualStack or PreferDualStack.

      apiVersion: v1
      kind: Service
      metadata:
        name: dual
        namespace: test
      spec:
        type: LoadBalancer
        ipFamilies:
          - IPv4
          - IPv6
        ipFamilyPolicy: RequireDualStack
        selector:
          corgi: dual
        ports:
          - port: 80
            targetPort: 80

- Create the LoadBalancer service on the workload cluster.

      kubectl apply -f loadbalancer.yaml

Configure AKO to Use an IPv6 Backend in an IPv4 Primary Dual-Stack Cluster

- Edit the AKODeploymentConfig CR object, or create a new customized AKODeploymentConfig file.

  - Set the AKO ipFamily to V6.
  - Set the data network CIDR to IPv4.
  - To apply this AKO configuration to all workload clusters, update install-ako-for-all.

  For example:

      apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
      kind: AKODeploymentConfig
      metadata:
        name: install-ako-on-dual-stack-v4-primary
      spec:
        clusterSelector:
          matchLabels:
            cluster-type: "install-ako-on-dual-stack-v4-primary"
        adminCredentialRef:
          name: avi-controller-credentials
          namespace: tkg-system-networking
        certificateAuthorityRef:
          name: avi-controller-ca
          namespace: tkg-system-networking
        cloudName: Default-Cloud
        controlPlaneNetwork:
          cidr: 10.218.48.0/20
          name: VM Network
        controller: 10.186.103.243
        controllerVersion: 22.1.2
        dataNetwork:
          cidr: 10.218.48.0/20
          name: VM Network
        extraConfigs:
          disableStaticRouteSync: false
          ipFamily: V6
          ingress:
            defaultIngressController: false
            disableIngressClass: true
            nodeNetworkList:
              - networkName: VM Network

- Create the workload cluster from a configuration file.

  To use a custom AKO configuration, set AVI_LABELS in the cluster configuration to match the cluster selector in AKODeploymentConfig.

      CLUSTER_NAME: test-cluster
      TKG_IP_FAMILY: ipv4,ipv6
      AVI_LABELS: '{"cluster-type": "install-ako-on-dual-stack-v4-primary"}'

- After the cluster is created, check the avi-system/avi-k8s-config ConfigMap to make sure that the AKO ipFamily is V6 and the vipNetworkList CIDR is IPv4.

      apiVersion: v1
      data:
        apiServerPort: "8080"
        cloudName: Default-Cloud
        clusterName: test-cluster
        controllerIP: 10.186.103.243
        controllerVersion: 22.1.2
        defaultIngController: "false"
        deleteConfig: "false"
        disableStaticRouteSync: "false"
        fullSyncFrequency: "1800"
        ipFamily: V6
        logLevel: INFO
        nodeNetworkList: '[{"networkName":"VM Network"}]'
        serviceEngineGroupName: Default-Group
        serviceType: NodePort
        useDefaultSecretsOnly: "false"
        vipNetworkList: '[{"networkName":"VM Network","cidr":"10.218.48.0/20"}]'
      kind: ConfigMap

- Create a LoadBalancer object YAML file.

  For example, create a file named loadbalancer.yaml with the following contents. Set spec.ipFamilyPolicy to either RequireDualStack or PreferDualStack. To use the secondary IP type to route traffic, set the service's spec.ipFamilies to IPv6.

  Example YAML file:

      apiVersion: v1
      kind: Service
      metadata:
        name: dual
        namespace: test
      spec:
        type: LoadBalancer
        ipFamilies:
          - IPv4
          - IPv6
        ipFamilyPolicy: RequireDualStack
        selector:
          corgi: dual
        ports:
          - port: 80
            targetPort: 80

- Create the LoadBalancer service on the workload cluster.

      kubectl apply -f loadbalancer.yaml

Create Dual-Stack Clusters with NSX Advanced Load Balancer as L7 Ingress Controller

NSX ALB can serve as the ingress service for workload clusters with dual-stack networking in a Tanzu Kubernetes Grid deployment. For general information about how to use NSX ALB as an L7 ingress controller, see NSX Advanced Load Balancer as L7 Ingress Controller.

Supported Configurations

The NSX ALB ingress service in a Tanzu Kubernetes Grid deployment supports the dual-stack configurations shown in the table below.

| Cluster IpFamily | AKO IpFamily | Frontend VIP IpFamily | Supported? |
|---|---|---|---|
| IPv4,IPv6 | V4 | V4 | ✔ |
| IPv4,IPv6 | V6 | V4 | ✔ |
| IPv4,IPv6 | V4 | V6 | ✔ |
| IPv4,IPv6 | V6 | V6 | ✔ |
| IPv6,IPv4 | V4 | V4 | ✖ |
| IPv6,IPv4 | V6 | V4 | ✖ |
| IPv6,IPv4 | V4 | V6 | ✖ |
| IPv6,IPv4 | V6 | V6 | ✖ |
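For ingress, every combination of AKO ipFamily and frontend VIP family is supported as long as the cluster is IPv4 primary. A small sketch of that rule follows; ingress_config_supported is a hypothetical name and the function only encodes the rows of this table.

```shell
# ingress_config_supported: succeed when the combination is marked as supported
# in the ingress table above. Hypothetical helper.
# Arguments: cluster IP family order, AKO ipFamily, frontend VIP IP family.
ingress_config_supported() {
  # Only IPv4 primary dual-stack clusters are supported.
  [ "$1" = "IPv4,IPv6" ] || return 1
  case "$2/$3" in
    V4/V4 | V4/V6 | V6/V4 | V6/V6) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `ingress_config_supported "IPv4,IPv6" V4 V6` succeeds, while the same AKO and VIP settings with "IPv6,IPv4" fail.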

Known Limitations

- AKO does not support creating dual-stack ingress services.

- AKO cannot communicate with an IPv6 Kubernetes API server. You cannot install AKO in IPv6 single-stack or IPv6 primary dual-stack Kubernetes clusters.

- NodePortLocal mode is not supported.

For more information, see Support for IPv6 in AKO in the NSX ALB documentation.

Configure AKO to Use IPv4 Frontend VIP and Backend in an IPv4 Primary Dual-Stack Cluster

- Edit the AKODeploymentConfig CR object, or create a new customized AKODeploymentConfig file.

  - Set the AKO ipFamily to V4.
  - Set the data network CIDR to IPv4.
  - To apply this AKO configuration to all workload clusters, update install-ako-for-all.

  For example:

      apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
      kind: AKODeploymentConfig
      metadata:
        name: install-ako-on-dual-stack-v4-primary
      spec:
        clusterSelector:
          matchLabels:
            cluster-type: "install-ako-on-dual-stack-v4-primary"
        adminCredentialRef:
          name: avi-controller-credentials
          namespace: tkg-system-networking
        certificateAuthorityRef:
          name: avi-controller-ca
          namespace: tkg-system-networking
        cloudName: Default-Cloud
        controlPlaneNetwork:
          cidr: 10.218.48.0/20
          name: VM Network
        controller: 10.186.103.243
        controllerVersion: 22.1.2
        dataNetwork:
          cidr: 10.218.48.0/20
          name: VM Network
        extraConfigs:
          disableStaticRouteSync: false
          ipFamily: V4
          ingress:
            defaultIngressController: true
            disableIngressClass: false
            serviceType: NodePort
            shardVSSize: MEDIUM
            nodeNetworkList:
              - networkName: VM Network

- Create the workload cluster from a configuration file.

  To use a custom AKO configuration, set AVI_LABELS in the cluster configuration to match the cluster selector in AKODeploymentConfig.

      CLUSTER_NAME: test-cluster
      TKG_IP_FAMILY: ipv4,ipv6
      AVI_LABELS: '{"cluster-type": "install-ako-on-dual-stack-v4-primary"}'

- After the cluster is created, check the avi-system/avi-k8s-config ConfigMap to make sure that the AKO ipFamily is V4 and the vipNetworkList CIDR is IPv4.

      apiVersion: v1
      data:
        apiServerPort: "8080"
        cloudName: Default-Cloud
        clusterName: test-cluster
        controllerIP: 10.186.103.243
        controllerVersion: 22.1.2
        defaultIngController: "true"
        deleteConfig: "false"
        disableStaticRouteSync: "false"
        fullSyncFrequency: "1800"
        ipFamily: V4
        logLevel: INFO
        nodeNetworkList: '[{"networkName":"VM Network"}]'
        serviceEngineGroupName: Default-Group
        serviceType: NodePort
        shardVSSize: MEDIUM
        useDefaultSecretsOnly: "false"
        vipNetworkList: '[{"networkName":"VM Network","cidr":"10.218.48.0/20"}]'
      kind: ConfigMap

- Create an Ingress object YAML file.

  For example, create a file named ingress.yaml with the following contents.

      ---
      apiVersion: networking.k8s.io/v1
      kind: Ingress
      metadata:
        name: cafe-ingress
      spec:
        rules:
          - host: cafe.avilocal.lol
            http:
              paths:
                - path: /coffee
                  pathType: Prefix
                  backend:
                    service:
                      name: coffee-svc
                      port:
                        number: 5678
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: coffee-svc
        labels:
          app: coffee
      spec:
        ipFamilies:
          - IPv4
          - IPv6
        ipFamilyPolicy: RequireDualStack
        ports:
          - port: 5678
        selector:
          app: coffee
        type: NodePort
      ---
      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: coffee-app
      spec:
        selector:
          matchLabels:
            app: coffee
        replicas: 2
        template:
          metadata:
            labels:
              app: coffee
          spec:
            containers:
              - name: http-echo
                image: hashicorp/http-echo:latest
                args:
                  - "-text=Connection succeeds"

- Create the ingress, service, and deployment on the workload cluster.

      kubectl apply -f ingress.yaml

Configure AKO to Use an IPv6 Frontend VIP and IPv4 Backend in an IPv4 Primary Dual-Stack Cluster

- Edit the AKODeploymentConfig CR object, or create a new customized AKODeploymentConfig file.

  - Set the AKO ipFamily to V4.
  - Set the data network CIDR to IPv6.
  - To apply this AKO configuration to all workload clusters, update install-ako-for-all.

  For example:

      apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
      kind: AKODeploymentConfig
      metadata:
        name: install-ako-on-dual-stack-v4-primary
      spec:
        clusterSelector:
          matchLabels:
            cluster-type: "install-ako-on-dual-stack-v4-primary"
        adminCredentialRef:
          name: avi-controller-credentials
          namespace: tkg-system-networking
        certificateAuthorityRef:
          name: avi-controller-ca
          namespace: tkg-system-networking
        cloudName: Default-Cloud
        controlPlaneNetwork:
          cidr: 10.218.48.0/20
          name: VM Network
        controller: 10.186.103.243
        controllerVersion: 22.1.2
        dataNetwork:
          cidr: 2620:124:6020:c30a::/64
          name: VM Network
        extraConfigs:
          disableStaticRouteSync: false
          ipFamily: V4
          ingress:
            defaultIngressController: true
            disableIngressClass: false
            serviceType: NodePort
            shardVSSize: MEDIUM
            nodeNetworkList:
              - networkName: VM Network

- Create the workload cluster from a configuration file.

  To use a custom AKO configuration, set AVI_LABELS in the cluster configuration to match the cluster selector in AKODeploymentConfig.

      CLUSTER_NAME: test-cluster
      TKG_IP_FAMILY: ipv4,ipv6
      AVI_LABELS: '{"cluster-type": "install-ako-on-dual-stack-v4-primary"}'

- After the cluster is created, check the avi-system/avi-k8s-config ConfigMap to make sure that the AKO ipFamily is V4 and the vipNetworkList CIDR is IPv6.

      apiVersion: v1
      data:
        apiServerPort: "8080"
        cloudName: Default-Cloud
        clusterName: test-cluster
        controllerIP: 10.186.103.243
        controllerVersion: 22.1.2
        defaultIngController: "true"
        deleteConfig: "false"
        disableStaticRouteSync: "false"
        fullSyncFrequency: "1800"
        ipFamily: V4
        logLevel: INFO
        nodeNetworkList: '[{"networkName":"VM Network"}]'
        serviceEngineGroupName: Default-Group
        serviceType: NodePort
        shardVSSize: MEDIUM
        useDefaultSecretsOnly: "false"
        vipNetworkList: '[{"networkName":"VM Network","v6cidr":"2620:124:6020:c30a::/64"}]'
      kind: ConfigMap

- Create an Ingress object YAML file.

  For example, create a file named ingress.yaml with the following contents.

      ---
      apiVersion: networking.k8s.io/v1
      kind: Ingress
      metadata:
        name: cafe-ingress
      spec:
        rules:
          - host: cafe.avilocal.lol
            http:
              paths:
                - path: /coffee
                  pathType: Prefix
                  backend:
                    service:
                      name: coffee-svc
                      port:
                        number: 5678
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: coffee-svc
        labels:
          app: coffee
      spec:
        ipFamilies:
          - IPv4
          - IPv6
        ipFamilyPolicy: RequireDualStack
        ports:
          - port: 5678
        selector:
          app: coffee
        type: NodePort
      ---
      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: coffee-app
      spec:
        selector:
          matchLabels:
            app: coffee
        replicas: 2
        template:
          metadata:
            labels:
              app: coffee
          spec:
            containers:
              - name: http-echo
                image: hashicorp/http-echo:latest
                args:
                  - "-text=Connection succeeds"

- Create the ingress, service, and deployment on the workload cluster.

      kubectl apply -f ingress.yaml
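The ConfigMap check in these procedures can be scripted against a saved copy of the object. The sketch below assumes that the ConfigMap was saved to a local YAML file and relies on the convention visible in the examples in this topic, where vipNetworkList uses "cidr" for an IPv4 network and "v6cidr" for an IPv6 network. ako_config_summary is a hypothetical helper, not an AKO or Tanzu command.

```shell
# ako_config_summary: print the AKO ipFamily and the IP family of the VIP
# network from a saved avi-k8s-config ConfigMap. Hypothetical helper.
ako_config_summary() {
  local file="$1" family vip
  # The value that follows "ipFamily:" on its own line.
  family=$(sed -n 's/^[[:space:]]*ipFamily:[[:space:]]*//p' "$file")
  # "v6cidr" in vipNetworkList marks an IPv6 VIP network; otherwise assume IPv4.
  if grep -q '"v6cidr"' "$file"; then vip=IPv6; else vip=IPv4; fi
  printf 'ipFamily=%s vip=%s\n' "$family" "$vip"
}
```

To use it, save the ConfigMap with `kubectl get configmap avi-k8s-config -n avi-system -o yaml > avi-k8s-config.yaml`, then run `ako_config_summary avi-k8s-config.yaml`. For the IPv6 frontend and IPv4 backend configuration, the expected result is `ipFamily=V4 vip=IPv6`.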

Configure AKO to Use an IPv4 Frontend VIP and IPv6 Backend in an IPv4 Primary Dual-Stack Cluster

- Edit the AKODeploymentConfig CR object, or create a new customized AKODeploymentConfig file.

  - Set the AKO ipFamily to V6.
  - Set the data network CIDR to IPv4.
  - To apply this AKO configuration to all workload clusters, update install-ako-for-all.

  For example:

      apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
      kind: AKODeploymentConfig
      metadata:
        name: install-ako-on-dual-stack-v4-primary
      spec:
        clusterSelector:
          matchLabels:
            cluster-type: "install-ako-on-dual-stack-v4-primary"
        adminCredentialRef:
          name: avi-controller-credentials
          namespace: tkg-system-networking
        certificateAuthorityRef:
          name: avi-controller-ca
          namespace: tkg-system-networking
        cloudName: Default-Cloud
        controlPlaneNetwork:
          cidr: 10.218.48.0/20
          name: VM Network
        controller: 10.186.103.243
        controllerVersion: 22.1.2
        dataNetwork:
          cidr: 10.218.48.0/20
          name: VM Network
        extraConfigs:
          disableStaticRouteSync: false
          ipFamily: V6
          ingress:
            defaultIngressController: true
            disableIngressClass: false
            serviceType: NodePort
            shardVSSize: MEDIUM
            nodeNetworkList:
              - networkName: VM Network

- Create the workload cluster from a configuration file.

  To use a custom AKO configuration, set AVI_LABELS in the cluster configuration to match the cluster selector in AKODeploymentConfig.

      CLUSTER_NAME: test-cluster
      TKG_IP_FAMILY: ipv4,ipv6
      AVI_LABELS: '{"cluster-type": "install-ako-on-dual-stack-v4-primary"}'

- After the cluster is created, check the avi-system/avi-k8s-config ConfigMap to make sure that the AKO ipFamily is V6 and the vipNetworkList CIDR is IPv4.

      apiVersion: v1
      data:
        apiServerPort: "8080"
        cloudName: Default-Cloud
        clusterName: test-cluster
        controllerIP: 10.186.103.243
        controllerVersion: 22.1.2
        defaultIngController: "true"
        deleteConfig: "false"
        disableStaticRouteSync: "false"
        fullSyncFrequency: "1800"
        ipFamily: V6
        logLevel: INFO
        nodeNetworkList: '[{"networkName":"VM Network"}]'
        serviceEngineGroupName: Default-Group
        serviceType: NodePort
        shardVSSize: MEDIUM
        useDefaultSecretsOnly: "false"
        vipNetworkList: '[{"networkName":"VM Network","cidr":"10.218.48.0/20"}]'
      kind: ConfigMap

- Create an Ingress object YAML file.

  For example, create a file named ingress.yaml with the following contents. To use the secondary IP type to route traffic, set the service's spec.ipFamilies to IPv6.

      ---
      apiVersion: networking.k8s.io/v1
      kind: Ingress
      metadata:
        name: cafe-ingress
      spec:
        rules:
          - host: cafe.avilocal.lol
            http:
              paths:
                - path: /coffee
                  pathType: Prefix
                  backend:
                    service:
                      name: coffee-svc
                      port:
                        number: 5678
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: coffee-svc
        labels:
          app: coffee
      spec:
        ipFamilies:
          - IPv4
          - IPv6
        ipFamilyPolicy: RequireDualStack
        ports:
          - port: 5678
        selector:
          app: coffee
        type: NodePort
      ---
      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: coffee-app
      spec:
        selector:
          matchLabels:
            app: coffee
        replicas: 2
        template:
          metadata:
            labels:
              app: coffee
          spec:
            containers:
              - name: http-echo
                image: hashicorp/http-echo:latest
                args:
                  - "-text=Connection succeeds"

- Create the ingress, service, and deployment on the workload cluster.

      kubectl apply -f ingress.yaml

Configure AKO to Use an IPv6 Frontend VIP and IPv6 Backend in an IPv4 Primary Dual-Stack Cluster

- Edit the AKODeploymentConfig CR object, or create a new customized AKODeploymentConfig file.

  - Set the AKO ipFamily to V6.
  - Set the data network CIDR to IPv6.
  - To apply this AKO configuration to all workload clusters, update install-ako-for-all.

  For example:

      apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
      kind: AKODeploymentConfig
      metadata:
        name: install-ako-on-dual-stack-v4-primary
      spec:
        clusterSelector:
          matchLabels:
            cluster-type: "install-ako-on-dual-stack-v4-primary"
        adminCredentialRef:
          name: avi-controller-credentials
          namespace: tkg-system-networking
        certificateAuthorityRef:
          name: avi-controller-ca
          namespace: tkg-system-networking
        cloudName: Default-Cloud
        controlPlaneNetwork:
          cidr: 10.218.48.0/20
          name: VM Network
        controller: 10.186.103.243
        controllerVersion: 22.1.2
        dataNetwork:
          cidr: 2620:124:6020:c30a::/64
          name: VM Network
        extraConfigs:
          disableStaticRouteSync: false
          ipFamily: V6
          ingress:
            defaultIngressController: true
            disableIngressClass: false
            serviceType: NodePort
            shardVSSize: MEDIUM
            nodeNetworkList:
              - networkName: VM Network

- Create the workload cluster from a configuration file.

  To use a custom AKO configuration, set AVI_LABELS in the cluster configuration to match the cluster selector in AKODeploymentConfig.

      CLUSTER_NAME: test-cluster
      TKG_IP_FAMILY: ipv4,ipv6
      AVI_LABELS: '{"cluster-type": "install-ako-on-dual-stack-v4-primary"}'

- After the cluster is created, check the avi-system/avi-k8s-config ConfigMap to make sure that the AKO ipFamily is V6 and the vipNetworkList CIDR is IPv6.

      apiVersion: v1
      data:
        apiServerPort: "8080"
        cloudName: Default-Cloud
        clusterName: test-cluster
        controllerIP: 10.186.103.243
        controllerVersion: 22.1.2
        defaultIngController: "true"
        deleteConfig: "false"
        disableStaticRouteSync: "false"
        fullSyncFrequency: "1800"
        ipFamily: V6
        logLevel: INFO
        nodeNetworkList: '[{"networkName":"VM Network"}]'
        serviceEngineGroupName: Default-Group
        serviceType: NodePort
        shardVSSize: MEDIUM
        useDefaultSecretsOnly: "false"
        vipNetworkList: '[{"networkName":"VM Network","v6cidr":"2620:124:6020:c30a::/64"}]'
      kind: ConfigMap

- Create an Ingress object YAML file.

  For example, create a file named ingress.yaml with the following contents. To use the secondary IP type to route traffic, set the service's spec.ipFamilies to IPv6.

      ---
      apiVersion: networking.k8s.io/v1
      kind: Ingress
      metadata:
        name: cafe-ingress
      spec:
        rules:
          - host: cafe.avilocal.lol
            http:
              paths:
                - path: /coffee
                  pathType: Prefix
                  backend:
                    service:
                      name: coffee-svc
                      port:
                        number: 5678
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: coffee-svc
        labels:
          app: coffee
      spec:
        ipFamilies:
          - IPv4
          - IPv6
        ipFamilyPolicy: RequireDualStack
        ports:
          - port: 5678
        selector:
          app: coffee
        type: NodePort
      ---
      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: coffee-app
      spec:
        selector:
          matchLabels:
            app: coffee
        replicas: 2
        template:
          metadata:
            labels:
              app: coffee
          spec:
            containers:
              - name: http-echo
                image: hashicorp/http-echo:latest
                args:
                  - "-text=Connection succeeds"

- Create the ingress, service, and deployment on the workload cluster.

      kubectl apply -f ingress.yaml