This topic describes the Amazon Web Services (AWS) reference architecture for VMware Tanzu Operations Manager, including VMware Tanzu Application Service for VMs (TAS for VMs) and VMware Tanzu Kubernetes Grid Integrated Edition (TKGI). This architecture builds on the common base architectures described in Platform Architecture and Planning Overview.

For general requirements for running Tanzu Operations Manager and specific requirements for running Tanzu Operations Manager on AWS, see Tanzu Operations Manager on AWS Requirements.

TAS for VMs

This section describes the reference architecture for a TAS for VMs installation on AWS.

Reference architecture diagram

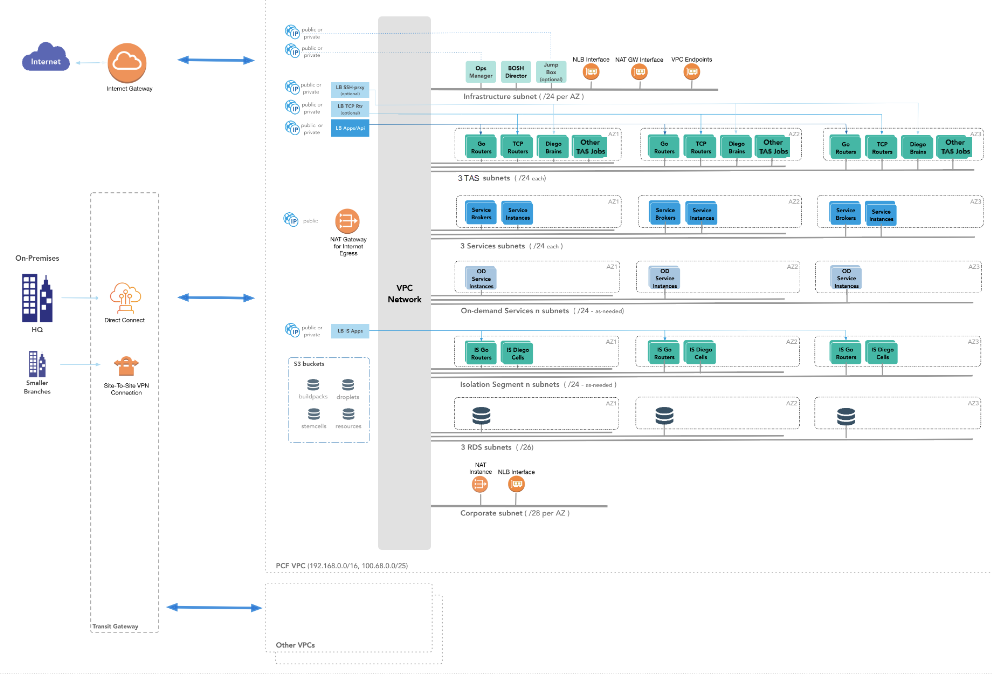

The following diagram illustrates the reference architecture for TAS for VMs on AWS. The diagram shows the flow and components from the HQ, branch offices, and broader internet into the VPC Network. Categories of elements in the VPC Network include:

- Infrastructure subnet (with Tanzu Operations Manager, BOSH Director, Jumpbox, NLB interface, NAT GW Interface, and VPC Endpoints)

- 3 TAS subnets

- 3 Services subnets

- On-demand Services subnets

- Isolation Segment subnets

- 3 RDS subnets

- Corporate subnet (with a NAT instance and an NLB interface)

View a larger version of this diagram.

Networking

These sections provide guidance about networking resources.

DNS

You can use Amazon Route 53 to host your TAS for VMs domains and provide DNS resolution for them.

Load balancing

AWS offers Application Load Balancers (ALBs) and Network Load Balancers (NLBs). VMware recommends NLBs because they are simpler and more secure: all encrypted traffic stays encrypted until it reaches the Gorouters. If you require AWS Certificate Manager (ACM) or more complex Layer 7 routing rules, you can front or replace the NLB with an ALB.

Networks, subnets, and IP spacing planning

When planning networks, subnets, and IP spacing, some things to consider are:

- In AWS, each availability zone (AZ) requires its own subnet; the first five IPs must be reserved.

- Your organization might not have the IP space necessary to deploy TAS for VMs in a consistent manner. To alleviate this, VMware recommends using the `100.64` address space to deploy a NAT instance and router so that the Tanzu Operations Manager deployment can use fewer IP addresses.

- If you want the front end of TAS for VMs to be accessible from your corporate network, or the services running on TAS for VMs to be able to access corporate resources, you must either:

  - provide routable IPs to your VPC

  - use NAT

- If you want a VPC that is only public-facing, no special consideration is necessary for the use of IPs.

- To understand the action required, depending on the type of traffic you want to allow for your Tanzu Operations Manager deployment, see the following table:

  | If you want... | then... |
  |---|---|
  | internet ingress | Create the load balancers with Elastic IPs. |
  | internet egress | Create the NAT Gateway toward the internet. |
  | corporate ingress | Create load balancers on your corporate subnet. |
  | corporate egress (for accessing corporate resources) | Create the NAT instance on your corporate subnet, or if business requirements dictate, make the entire VPC corporate-routable. |

- If you plan to install VMware Tanzu Service Broker for AWS, you might want to create another set of networks in addition to those outlined in the base reference architecture. This set of networks would be used for RDS and other AWS-managed instances.
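The per-AZ subnet and reserved-IP guidance above can be sketched with Python's standard `ipaddress` module. This is a minimal planning aid, not part of any Tanzu tooling: the VPC CIDR, the AZ names, the `/24` subnet size, and the `plan_subnets` helper are all assumptions chosen for illustration.

```python
import ipaddress

# Hypothetical values for illustration only; substitute your own VPC CIDR and AZs.
VPC_CIDR = "100.64.0.0/20"
AZS = ["us-west-2a", "us-west-2b", "us-west-2c"]
RESERVED_PER_SUBNET = 5  # the first five IPs of each subnet, per the guidance above


def plan_subnets(vpc_cidr, azs, new_prefix=24):
    """Assign one subnet per AZ and report its reserved range and remaining IPs."""
    vpc = ipaddress.ip_network(vpc_cidr)
    plan = []
    # zip stops at the shorter input, so only as many subnets as AZs are consumed.
    for az, subnet in zip(azs, vpc.subnets(new_prefix=new_prefix)):
        reserved_first = subnet[0]
        reserved_last = subnet[RESERVED_PER_SUBNET - 1]
        plan.append({
            "az": az,
            "cidr": str(subnet),
            "reserved": f"{reserved_first}-{reserved_last}",
            # Addresses left after the first five are set aside.
            "remaining": subnet.num_addresses - RESERVED_PER_SUBNET,
        })
    return plan


if __name__ == "__main__":
    for row in plan_subnets(VPC_CIDR, AZS):
        print(row)
```

The `reserved` string has the same start-end shape as a reserved IP range, which makes it easy to compare against whatever you enter when defining networks. Treat `remaining` as an upper bound, because other addresses in the subnet may also be unavailable.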

Alternative network layouts

These options are available for choosing your network topology:

| Type of traffic | Options |
|---|---|
| internet ingress | |
| internet egress | |
| peering | |
| corporate peering | |

RDS

Use a single RDS instance for BOSH and TAS for VMs. This instance requires several databases. For more information, see Step 3: Director Config Page in Configuring BOSH Director on AWS.

Blobstore storage accounts

Tanzu Operations Manager requires a bucket for the BOSH blobstore.

TAS for VMs requires these buckets:

- Buildpacks

- Droplets

- Packages

- Resources

These buckets require an associated role for read/write access.
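As a rough sketch of what read/write access for such a role could look like, the following Python builds an IAM policy document for a set of buckets. The bucket names and the `blobstore_policy` helper are hypothetical, and the list of S3 actions is an assumption about what "read/write" covers; check the product documentation for the permissions your installation actually needs.

```python
import json

# Hypothetical bucket names; the source names only the four bucket purposes.
TAS_BUCKETS = [
    "example-tas-buildpacks",
    "example-tas-droplets",
    "example-tas-packages",
    "example-tas-resources",
]


def blobstore_policy(bucket_names):
    """Build an IAM policy document granting read/write access to the buckets."""
    resources = []
    for name in bucket_names:
        resources.append(f"arn:aws:s3:::{name}")    # the bucket itself (listing)
        resources.append(f"arn:aws:s3:::{name}/*")  # the objects inside the bucket
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Assumed minimal read/write action set; adjust to your needs.
                "Action": [
                    "s3:ListBucket",
                    "s3:GetObject",
                    "s3:PutObject",
                    "s3:DeleteObject",
                ],
                "Resource": resources,
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(blobstore_policy(TAS_BUCKETS), indent=2))
```

The printed JSON can be attached to the role as an inline or managed policy. Scoping `Resource` to the named buckets, rather than using a wildcard, keeps the role from reaching unrelated buckets in the account.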

Identity management

For identity management, use Instance Profiles whenever possible. For example, the AWS Config Page of the BOSH Director tile provides a Use AWS Instance Profile option. For more information, see Step 2: AWS Config Page in Configuring BOSH Director on AWS.

TKGI

The following sections describe the reference architecture for a TKGI installation on AWS.

Diagram

The following diagram illustrates the reference architecture for TKGI on AWS. The diagram shows the flow and components from the HQ, branch offices, and the broader internet into the VPC Network. Categories of elements in the VPC Network include:

- Infrastructure subnet (with Tanzu Operations Manager, BOSH Director, Jumpbox, NLB interface, NAT GW Interface, and VPC Endpoints)

- 3 Services subnets

- 3 PKS subnets

- Kubernetes Pods subnet

- Kubernetes Services subnet

- 3 RDS subnets

- Corporate subnet (with a NAT instance and an NLB interface)

View a larger version of this diagram.

Networking

These sections provide guidance about networking resources.

DNS

You can use Amazon Route 53 to host your TKGI domains and provide DNS resolution for them.

Load balancing

You can configure a front-end load balancer to enable public access to the TKGI API VM.

For each TKGI cluster, VMware recommends creating a load balancer for the corresponding primary node or nodes. For more information, see Creating and Configuring an AWS Load Balancer for TKGI Clusters.

For deployed workloads, Kubernetes can automatically create load balancers when applying the service definition to the app instances. For more information, see Deploying and Exposing Basic Workloads.
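To illustrate the kind of service definition that triggers automatic load balancer creation, here is a minimal sketch that generates a Kubernetes `Service` of type `LoadBalancer` as JSON. The service name, label, and ports are hypothetical, and `load_balancer_service` is an illustrative helper rather than anything TKGI provides.

```python
import json


def load_balancer_service(name, app_label, port, target_port):
    """Return a Service manifest that asks the cloud provider for a load balancer."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # type LoadBalancer is what prompts the load balancer to be provisioned.
            "type": "LoadBalancer",
            # Routes traffic to pods carrying this label (hypothetical label key).
            "selector": {"app": app_label},
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical workload: pods labeled app=web listening on port 8080.
    print(json.dumps(load_balancer_service("web", "web", 80, 8080), indent=2))
```

Because `kubectl` accepts JSON manifests as well as YAML, the output can be saved to a file and applied with `kubectl apply -f`.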

Networks, subnets, and IP spacing planning

When planning networks, subnets, and IP spacing, some things to consider are:

- In AWS, each AZ requires its own subnet; the first five IPs must be reserved.

- If you want the front end of TKGI to be accessible from your corporate network, or the services running on TKGI to be able to access corporate resources, you must either:

  - provide routable IPs to your VPC

  - use NAT

- Create the TKGI subnets that host the Kubernetes cluster VMs with enough IP address capacity to accommodate the number of VMs and clusters you expect to deploy. The reference architecture diagram above shows a `/24` CIDR for the TKGI subnets, which allows for about 250 VMs per AZ. If you expect a larger number of clusters for your organization, consider defining larger subnets, such as `/20`, to make room for future growth.
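The sizing arithmetic behind the "about 250 VMs" figure can be checked with a short calculation. This sketch assumes that only the five reserved addresses are subtracted from each subnet; the CIDR values and the `vm_capacity` helper are hypothetical.

```python
import ipaddress

RESERVED_PER_SUBNET = 5  # the first five IPs of each subnet are reserved


def vm_capacity(cidr):
    """Upper bound on VM addresses in a subnet after the reserved IPs are removed."""
    return ipaddress.ip_network(cidr).num_addresses - RESERVED_PER_SUBNET


if __name__ == "__main__":
    # Hypothetical subnet CIDRs, one /24 and one /20.
    for cidr in ("10.0.12.0/24", "10.0.16.0/20"):
        print(f"{cidr}: up to {vm_capacity(cidr)} VMs")
```

A `/24` holds 256 addresses, leaving 251 after the reservation, while a `/20` holds 4096 and leaves 4091, roughly sixteen times the headroom per AZ.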

Alternative network layouts

These options are available for choosing your network topology:

| Type of traffic | Options |
|---|---|
| internet ingress | |
| internet egress | |
| Peering | |
| Corporate peering | |

RDS instance

Use a single RDS instance for BOSH. This instance requires a database. For more information, see Step 3: Director Config Page in Configuring BOSH Director on AWS.

Identity management

To create and manage Kubernetes primary and worker nodes, use Instance Profiles. For more information, see Kubernetes cloud provider in Installing Enterprise TKGI on AWS.

For information about installing the required AWS constructs for TKGI, see Installing Tanzu Operations Manager on AWS.