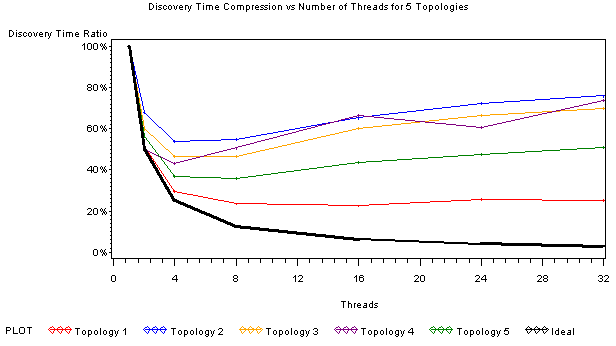

The optimal number of discovery threads varies because it depends on both the latency and the topology of the network. High-latency environments need more threads. For very high latency (for example, 300 ms), as many as 100 discovery threads may be optimal.

Discovery with two threads takes about half as long as discovery with one thread, because the latency for devices is overlapped almost completely. Four threads cut discovery time in half again, and so on, as depicted in Discovery time compression versus number of threads for five topologies.

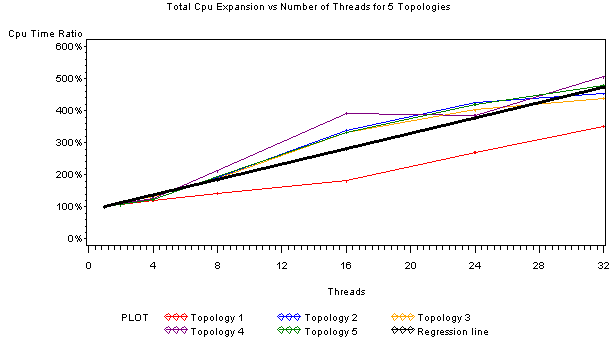
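The halving pattern described above can be sketched as a simple inverse model. This is an idealized illustration rather than a measured result: the function name `ideal_discovery_time` and the 3600-second baseline are hypothetical, and the 1/n scaling is an assumption that holds only while device latency dominates and overlaps almost completely.

```python
def ideal_discovery_time(single_thread_seconds: float, threads: int) -> float:
    """Idealized estimate: doubling the thread count halves discovery time.

    Assumes latency overlaps perfectly across threads, which real
    networks only approximate.
    """
    if threads < 1:
        raise ValueError("threads must be at least 1")
    return single_thread_seconds / threads


# Hypothetical baseline: one thread completes discovery in 3600 seconds.
for n in (1, 2, 4, 8):
    print(n, ideal_discovery_time(3600.0, n))
```

In practice the curve flattens as threads are added, because lock contention and CPU limits eventually outweigh the benefit of overlapping latency.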

As discovery threads are added, the total CPU seconds needed to complete discovery rise because of the increased overhead of lock contention among the discovery threads. The rise in CPU can be significant, depending on how well the hardware platform handles synchronization. The CPU overhead of additional discovery threads varies as depicted in Total CPU expansion versus number of threads for five topologies.

During testing on the Sun M4000 in the test laboratory setup, CPU consumption rose by an average of about 12 percent of the single-thread amount for each additional discovery thread. For example, 32 threads would consume about 4.7 times as much CPU as a single thread. The increase would be smaller in higher-latency environments. These figures demonstrate the potential CPU increase due to discovery multithreading; they should not be regarded as predictive for a customer environment.
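The 4.7 figure follows from treating the observed 12 percent as a linear increment per additional thread, measured against single-thread CPU. The following is a minimal sketch of that arithmetic. The function name `cpu_multiplier` is hypothetical, and the default rate is the laboratory observation quoted above, not a value to expect in any other environment.

```python
def cpu_multiplier(threads: int, per_thread_increase: float = 0.12) -> float:
    """Total CPU relative to a single thread, assuming a linear increase.

    per_thread_increase is the fractional CPU added by each thread
    beyond the first (0.12 is the laboratory observation).
    """
    if threads < 1:
        raise ValueError("threads must be at least 1")
    return 1.0 + per_thread_increase * (threads - 1)


# 31 additional threads at 12 percent each: 1 + 0.12 * 31
print(round(cpu_multiplier(32), 2))  # 4.72
```

Measure the per-thread rate on the target hardware before using a model like this, because the overhead depends on how well the platform handles synchronization.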