This section describes how to create an Enrichment stream.

Note: Instead of creating a new Enrichment stream, it is recommended that you edit the default Enrichment streams to match your Enrichment requirements.

Procedure

- Navigate to Administration > Configuration > Enrichment.

- Click Add.

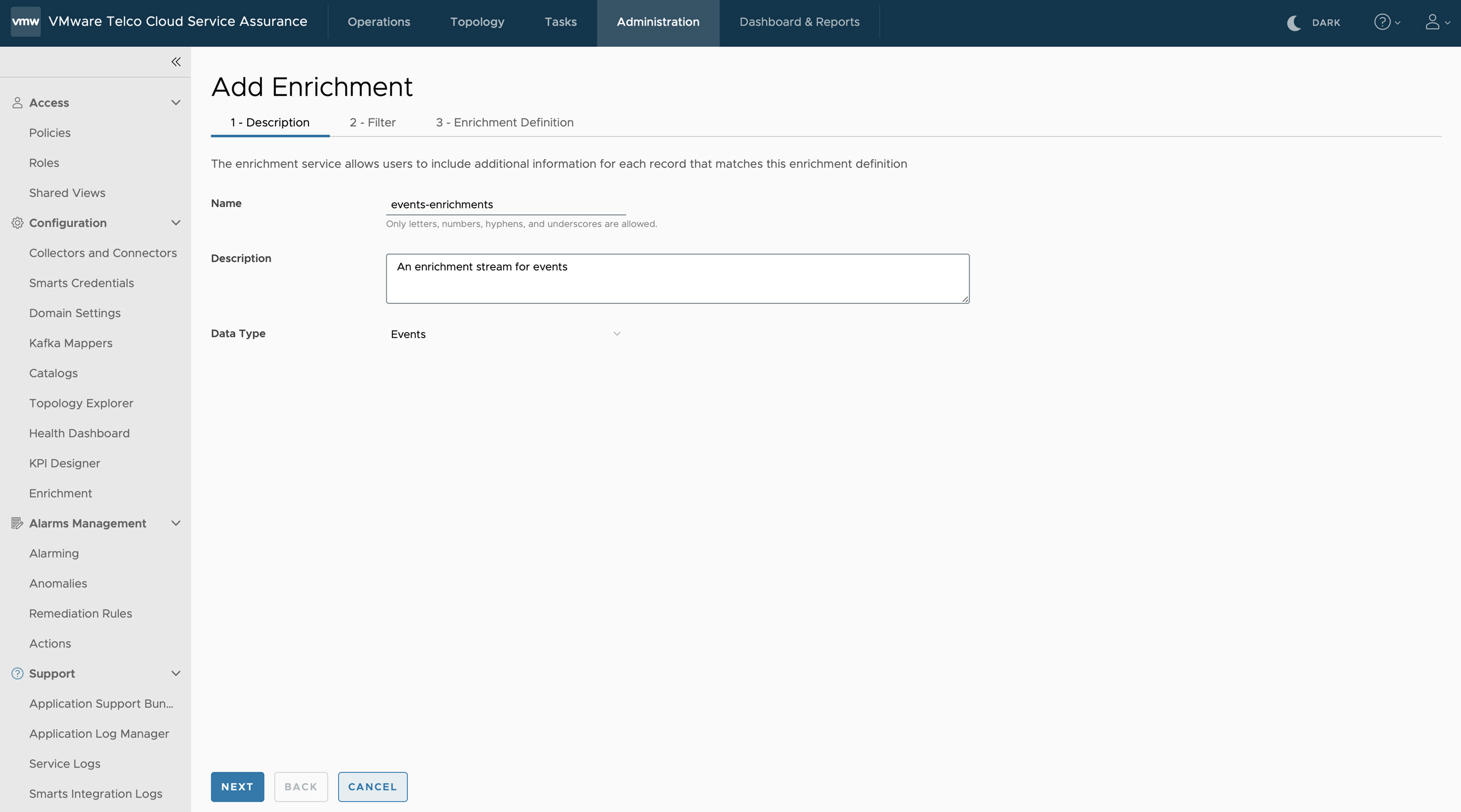

The browser navigates to the Add Enrichment page.

- Under the Description tab, enter the following parameters:

- Name: Required field. Configures the name of the Enrichment. Only letters, numbers, hyphens, and underscores are allowed.

- Description: Required field. Configures the description of the Enrichment. A double quote in the field will be escaped to a single quote.

- Data Type: Required field. Configures the data type of the VMware Telco Cloud Service Assurance record being enriched. Three record types are supported, and you can select only one of them at a time:

- VMware Telco Cloud Service Assurance Event

- VMware Telco Cloud Service Assurance Metric

- VMware Telco Cloud Service Assurance Topology

- Click Next.

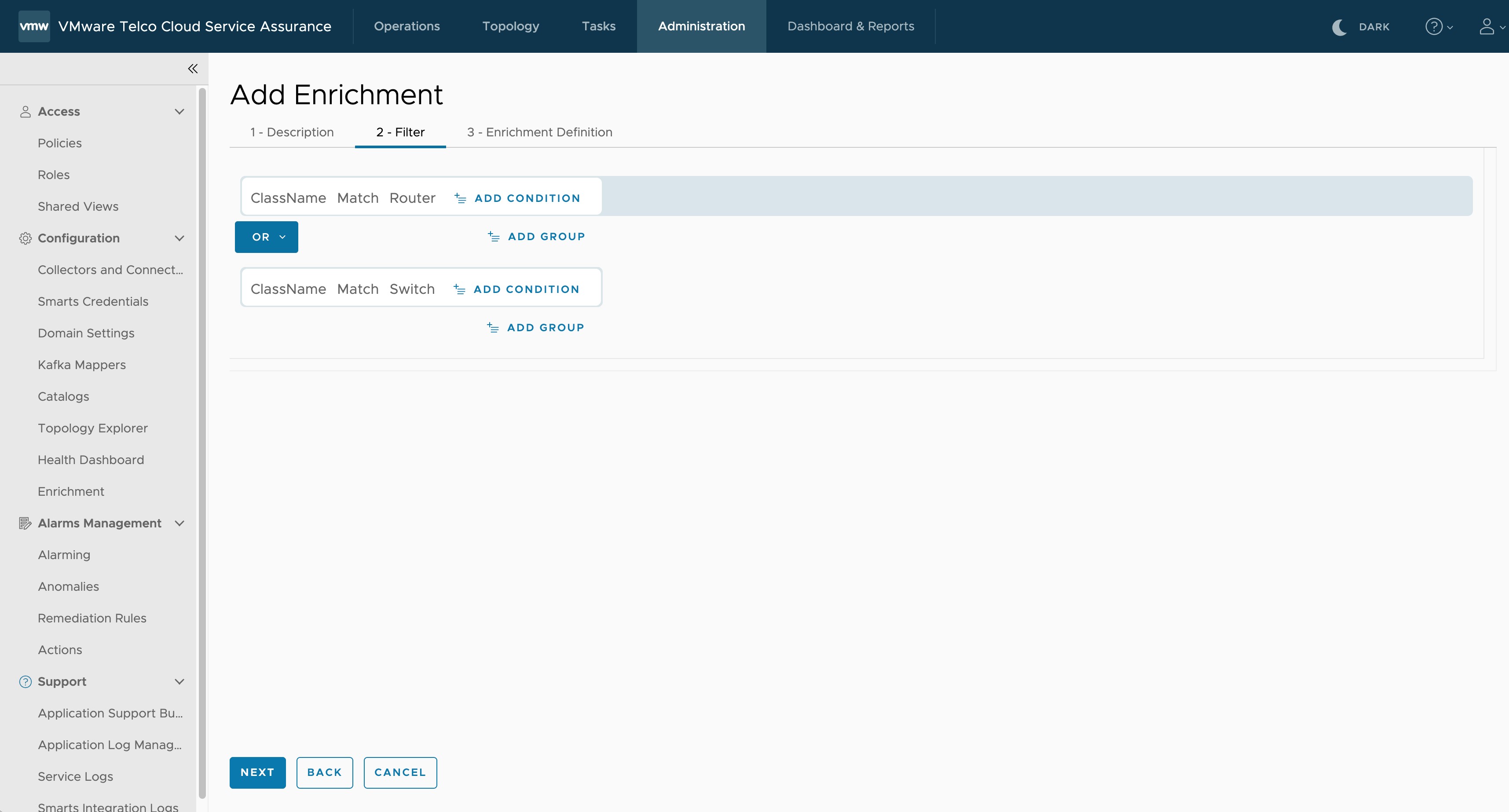
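The Name and Description rules from the Description tab can be checked before you type values into the wizard. The following is a minimal sketch, not the product's own validation: the function names are hypothetical, and "letters" is assumed to mean ASCII letters.

```python
import re

# Assumption: "letters" means ASCII letters A-Z and a-z.
_NAME_PATTERN = re.compile(r"[A-Za-z0-9_-]+")


def is_valid_enrichment_name(name: str) -> bool:
    """Return True if the name uses only letters, numbers, hyphens, and underscores."""
    return _NAME_PATTERN.fullmatch(name) is not None


def normalize_description(text: str) -> str:
    """Mimic the documented behavior: double quotes become single quotes."""
    return text.replace('"', "'")


if __name__ == "__main__":
    print(is_valid_enrichment_name("new_metric_stream"))
    print(is_valid_enrichment_name("new metric stream"))
    print(normalize_description('Adds "location" tags'))
```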

- Under Filter, select the Property, Expression, and enter the Value for the Enrichment definition. The listed key properties are based on the selected data type such as Metrics, Events, or Topology.

- To add multiple filters within the same group, click Add Condition. The AND condition tag is used when you add filters within the same group.

- To add multiple filters, click Add Group. The OR condition tag is used when you add filters from different groups.

Note: Metric and Event Enrichment streams support filters, but the Topology stream does not. If you select Topology as the Data Type under Description, the Filter tab is not displayed.

- Click Next.

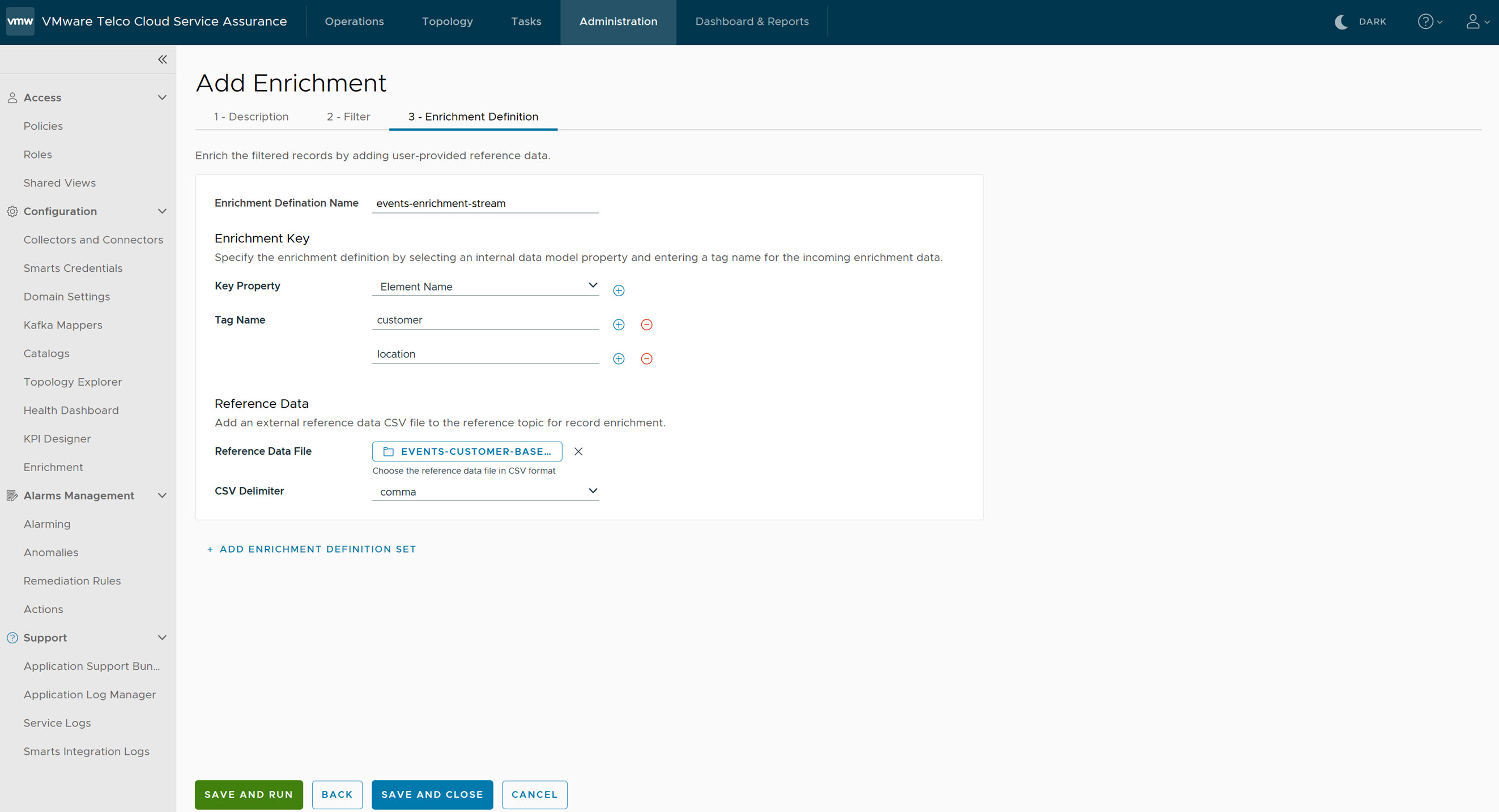
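The way conditions and groups combine in the Filter tab (AND within a group, OR across groups) can be illustrated with a short sketch. This is an illustration of the logic only, under stated assumptions: the expression names `equals` and `contains` and the property names in the sample record are hypothetical, because this section does not list the expressions or properties the UI offers.

```python
# Hypothetical expression names; the UI's actual expression list is not shown here.
OPERATORS = {
    "equals": lambda actual, expected: actual == expected,
    "contains": lambda actual, expected: expected in actual,
}


def condition_holds(record, condition):
    """Evaluate one (property, expression, value) condition against a record."""
    prop, expression, value = condition
    actual = record.get(prop)
    return actual is not None and OPERATORS[expression](actual, value)


def record_passes_filter(record, groups):
    """Conditions inside a group are ANDed; the groups themselves are ORed."""
    return any(
        all(condition_holds(record, condition) for condition in group)
        for group in groups
    )


if __name__ == "__main__":
    # Hypothetical property names and values.
    groups = [
        [("deviceType", "equals", "Router"), ("deviceName", "contains", "DIR")],
        [("deviceType", "equals", "Switch")],
    ]
    print(record_passes_filter({"deviceType": "Router", "deviceName": "Core-DIR-01"}, groups))
    print(record_passes_filter({"deviceType": "Router", "deviceName": "Edge-01"}, groups))
```

In this sketch, a record passes when every condition of at least one group holds, which mirrors adding conditions with Add Condition and alternatives with Add Group.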

- Under the Enrichment Definition tab, provide the following parameter details:

- Enrichment Definition Name: Required field. The name of the Enrichment definition. At least one Enrichment definition is required. To configure multiple Enrichments, click Add Enrichment Definition Set.

- In Enrichment Key, there are two fields to be configured:

- Key Property: Required field. Configures the key using the properties in the internal data model, which is used to match the corresponding external reference data key. The listed key properties are based on the selected data type such as Metrics, Events, or Topology. You can select multiple key properties.

- Tag Name: Required field. Provide a tag name for the Enrichment definition. You can add multiple tag names.

- In Reference Data, there are two fields to be configured:

- Reference Data File: Required field. Select the reference data file in CSV format. The file must have .csv as the file extension. The Enrichment uses the external reference data from the .csv file to enrich the VMware Telco Cloud Service Assurance records. Only letters, numbers, hyphens, underscores, and periods are allowed in the Reference Data File.

- CSV Delimiter: Required field. Select the delimiter type from the drop-down list. The default is comma.

Note:

- The reference data is not persisted. It is sent directly to the Kafka reference topic to be used by a running Enrichment stream. You must add the reference data again each time the Enrichment is stopped and started.

- By default, the maximum file size is 10 MB. An administrator can change the limit by updating the MAX_REFERENCE_DATA_SIZE and MAX_TOTAL_REFERENCE_DATA_SIZE environment variables of the Enrichment service.
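The reference data file constraints described above (allowed file name characters, the .csv extension, and the default size limit) can be checked before upload. The following is a minimal sketch under assumptions: the function name is hypothetical, "letters" is taken to mean ASCII letters, and "10 MB" is read as 10 * 1024 * 1024 bytes, which may differ from how the Enrichment service interprets its limit.

```python
import re

# Assumption: the default "10 MB" limit is interpreted as 10 * 1024 * 1024 bytes.
DEFAULT_MAX_BYTES = 10 * 1024 * 1024

# Letters, numbers, hyphens, underscores, and periods only.
_ALLOWED_FILE_NAME = re.compile(r"[A-Za-z0-9._-]+")


def reference_file_problems(file_name, size_bytes, max_bytes=DEFAULT_MAX_BYTES):
    """Return a list of human-readable problems; an empty list means no issue found."""
    problems = []
    if not _ALLOWED_FILE_NAME.fullmatch(file_name):
        problems.append("file name contains characters that are not allowed")
    if not file_name.endswith(".csv"):
        problems.append("file extension is not .csv")
    if size_bytes > max_bytes:
        problems.append("file is larger than the maximum size")
    return problems


if __name__ == "__main__":
    print(reference_file_problems("site-locations_v1.csv", 2048))
    print(reference_file_problems("site locations.txt", 2048))
```

To apply the check to a real file, the size can be obtained with `os.path.getsize(path)` and the name with `os.path.basename(path)`.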

The following table lists the supported wildcard (regular expression) patterns for key properties in the CSV reference data file.

| Pattern | Description |
| --- | --- |
| `%text%` | Multiple character match: matches any key that contains the given text. For example, `%DIR%` matches any key that contains DIR. |
| `text1_text2` | Single character match: matches any single character between text1 and text2. For example, `Router-DI_` matches Router-DIF, Router-DIZ, or Router-DI2, that is, any key that starts with Router-DI and ends with any single character after DI. |
| `<N1-N2>` | Range: matches any number in the specified range. For example, `172.<16-31>.<0-255>.<0-255>` matches the key 172.18.220.176 and does not match the key 172.14.255.224. Note: N1 must be less than N2. |
| `\|` (pipe) | Pipe: specifies multiple matching fields within a key. See the example that follows this table. |
| `*` (include all) | For example: `Router*DCP`. |

Example of a record that uses the pipe pattern:

`IP*-172.<16-31>.*|IP*-192.168.*|IP*-10.*|172.<16-31>.<0-255>.<0-255>|192.168.<0-255>.<0-255>|10.<0-255>.<0-255>.<0-255>,india`

The example record has two fields: the first field is the datasource, and the second field is the location. In the following sample records, the key can be any of the following datasources.

- IPv6-172.16.1.1

- IPv4-192.168.1.2

- IPv6-10.1.2.3

- 172.17.91.11

- 192.168.22.11

- 33.22.11.3
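To make the wildcard patterns above more concrete, the following sketch translates a pattern into a Python regular expression and tests keys against it. It is one possible reading of the documented patterns, not the Enrichment service's actual matcher. The assumptions are: `%` and `*` both match any run of characters, `_` matches exactly one character, `<N1-N2>` matches an integer in the inclusive range without leading zeros, `|` separates alternatives, and the whole key must match. The function names are hypothetical.

```python
import re

_RANGE = re.compile(r"<(\d+)-(\d+)>")


def _translate_text(text):
    """Translate the non-range part of a pattern into regex syntax."""
    out = []
    for ch in text:
        if ch in "%*":
            out.append(".*")   # assumed: any run of characters
        elif ch == "_":
            out.append(".")    # exactly one character
        else:
            out.append(re.escape(ch))
    return "".join(out)


def pattern_to_regex(pattern):
    """Build a compiled regex for a reference data key pattern."""
    alternatives = []
    for alternative in pattern.split("|"):
        parts, pos = [], 0
        for match in _RANGE.finditer(alternative):
            low, high = int(match.group(1)), int(match.group(2))
            if low >= high:
                raise ValueError("N1 must be less than N2 in <N1-N2>")
            parts.append(_translate_text(alternative[pos:match.start()]))
            # Enumerate the range so the regex itself enforces the bounds.
            numbers = "|".join(str(n) for n in range(low, high + 1))
            parts.append("(?:" + numbers + ")")
            pos = match.end()
        parts.append(_translate_text(alternative[pos:]))
        alternatives.append("(?:" + "".join(parts) + ")")
    return re.compile("|".join(alternatives))


def key_matches(pattern, key):
    """Return True if the whole key matches the pattern."""
    return pattern_to_regex(pattern).fullmatch(key) is not None


if __name__ == "__main__":
    print(key_matches("172.<16-31>.<0-255>.<0-255>", "172.18.220.176"))  # True
    print(key_matches("172.<16-31>.<0-255>.<0-255>", "172.14.255.224"))  # False
    print(key_matches("Router-DI_", "Router-DIF"))                       # True
```

Enumerating each range keeps the sketch short and correct for small ranges such as IP octets; a very large range would produce a very large expression.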

- Click Save & Close to save the current Enrichment configuration and exit the wizard or click Save & Run to save the current Enrichment configuration and start the Enrichment processing. After the Enrichment configuration is saved or canceled, the browser returns to the Enrichment list page.

Note: For a Demo footprint deployment, the Enrichment is saved successfully. For a deployment footprint of 25 K or higher, the Enrichment stream might fail to save and run. In that case, perform the following steps:

- Run the following command from deployer VM.

kubectl exec -i edge-kafka-0 -n kafka-edge -- bash -c "/opt/kafka/bin/kafka-topics.sh --list --bootstrap-server localhost:9093" | grep <your-enrichment-name>

After running the command, you can get the following sample output.

kubectl exec -i edge-kafka-0 -n kafka-edge -- bash -c "/opt/kafka/bin/kafka-topics.sh --list --bootstrap-server localhost:9093" | grep new_metric_stream
Defaulted container "kafka" out of: kafka, kafka-init (init)
new_metric_stream__metric-enricher-stream

- Create a JSON file, such as /tmp/increase-replication-factor.json, with the following content. For the topic value, use the topic name from the output of step 7.a.

{"version":1,
 "partitions":[
  {"topic":"new_metric_stream__metric-enricher-stream","partition":0,"replicas":[0,1,2]},
  {"topic":"new_metric_stream__metric-enricher-stream","partition":1,"replicas":[0,1,2]},
  {"topic":"new_metric_stream__metric-enricher-stream","partition":2,"replicas":[0,1,2]}
 ]}

- Run the following commands to copy the JSON file into the Edge Kafka pod and apply the Kafka replication settings.

kubectl cp /tmp/increase-replication-factor.json kafka-edge/edge-kafka-0:/tmp/increase-replication-factor.json

kubectl exec -i edge-kafka-0 -n kafka-edge -- bash -c "/opt/kafka/bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9093 --reassignment-json-file /tmp/increase-replication-factor.json --execute"
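The reassignment file used in the commands above can also be generated instead of written by hand, which avoids typing the topic name three times. The following is a minimal sketch: the function name is hypothetical, and the defaults of three partitions and replicas 0, 1, and 2 are taken from the sample JSON in this procedure and might need to be adjusted for your topic and brokers.

```python
import json


def build_reassignment(topic, partitions=3, replicas=(0, 1, 2)):
    """Build the reassignment document for every partition of one topic."""
    return {
        "version": 1,
        "partitions": [
            {"topic": topic, "partition": number, "replicas": list(replicas)}
            for number in range(partitions)
        ],
    }


if __name__ == "__main__":
    # Topic name taken from the sample output in this procedure.
    plan = build_reassignment("new_metric_stream__metric-enricher-stream")
    print(json.dumps(plan, indent=2))
```

Redirecting the printed output to /tmp/increase-replication-factor.json produces a file with the same structure as the sample content in the procedure.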

- Run the following command from deployer VM.

- If you followed the instructions in step 7, edit the newly created Enrichment stream, navigate to Enrichment Definition, and upload the reference data CSV file as described in step 6.