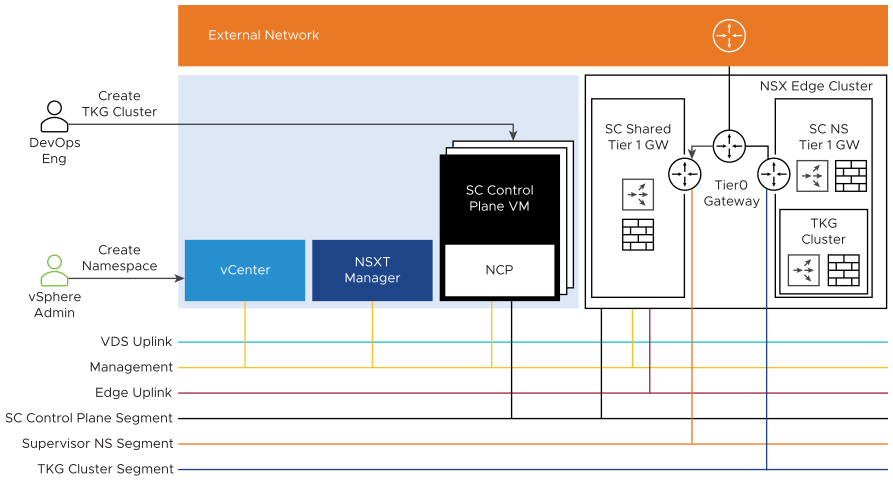

When you install vSphere with Tanzu version 7.0 Update 1c or upgrade the Supervisor Cluster from version 7.0 Update 1 to version 7.0 Update 1c, you upgrade the NSX Container Plug-in (NCP). This in turn migrates the networking topology of the Supervisor Cluster, namespaces, and Tanzu Kubernetes clusters. The migration changes the networking topology from a single tier-1 gateway topology to a topology that has a tier-1 gateway for each namespace within the Supervisor Cluster.

During the upgrade, the NCP configures the NSX resources to support the new topology. The NCP provides a shared network infrastructure for namespaces that make little use of layer-4 and layer-7 load balancing services. This reduces resource consumption on NSX and leaves more resources available for Tanzu Kubernetes clusters.

System namespaces are namespaces used by the core components that are integral to the functioning of the Supervisor Cluster and Tanzu Kubernetes clusters. The shared network resources, which include the tier-1 gateway, load balancer, and SNAT IP, are grouped in a system namespace.

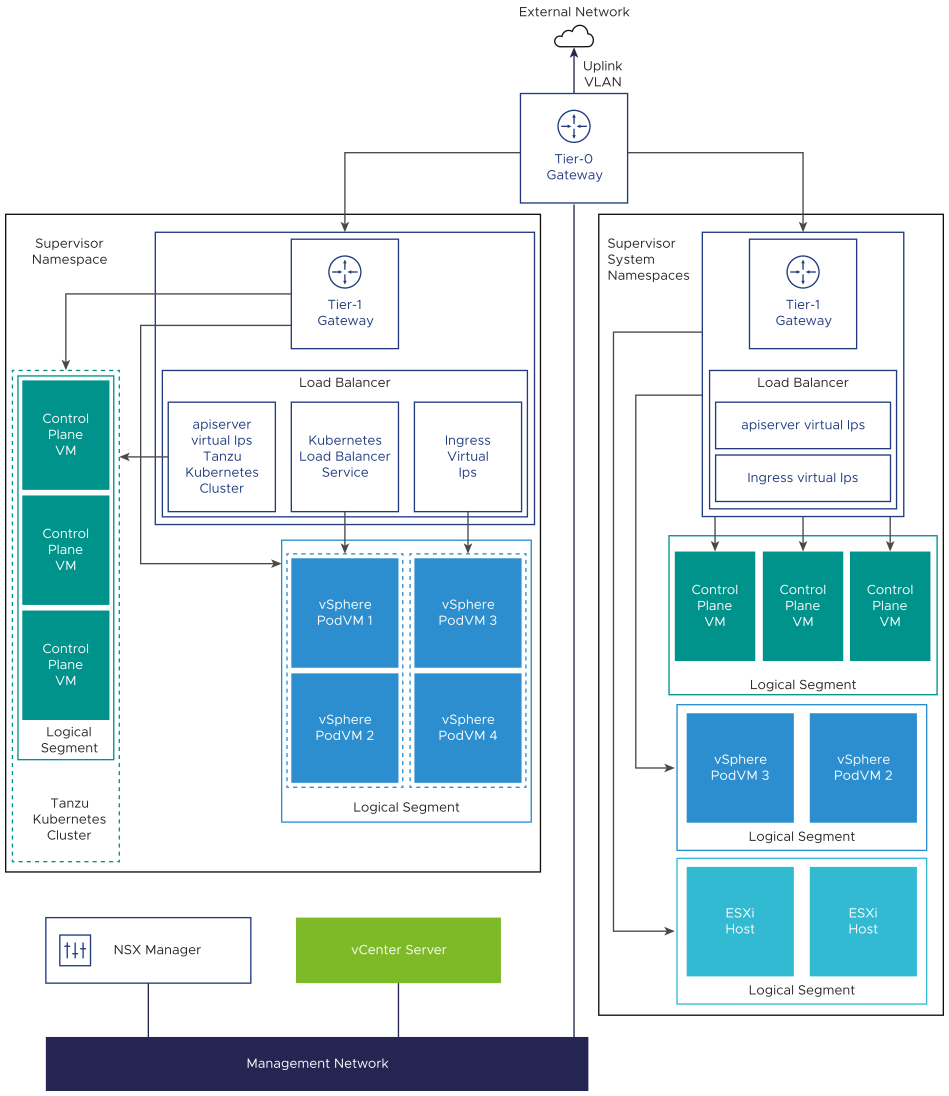

By default, the NCP creates one shared tier-1 gateway for system namespaces, and a tier-1 gateway and load balancer for each namespace. The tier-1 gateway is connected to the tier-0 gateway and a default segment.

The NSX-T load balancer provides load balancing services in the form of virtual servers.

- Each vSphere Namespace has a separate network and a set of networking resources shared by the applications inside the namespace, such as a tier-1 gateway, load balancer service, and SNAT IP address.

- Workloads running in vSphere Pods, regular VMs, or Tanzu Kubernetes clusters that are in the same namespace share the same SNAT IP for north-south connectivity.

- Workloads running in vSphere Pods or Tanzu Kubernetes clusters have the same isolation rule, which is implemented by the default firewall.

- A separate SNAT IP is not required for each Kubernetes namespace. East-west connectivity between namespaces does not use SNAT.
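The SNAT behavior in the list above can be sketched as a small lookup. This is an illustrative model only, not NCP code: the function name `snat_source_ip`, the namespace names, and the addresses (taken from the 192.0.2.0/24 documentation range) are all assumptions made for the example. It also assumes that a destination outside the Supervisor Cluster is represented as `None`.

```python
from typing import Dict, Optional


def snat_source_ip(
    source_namespace: str,
    destination_namespace: Optional[str],
    snat_ips: Dict[str, str],
) -> Optional[str]:
    """Return the SNAT IP applied to the traffic, or None when no SNAT is used.

    destination_namespace is None for north-south traffic, that is, traffic
    leaving the Supervisor Cluster (an assumption of this sketch).
    """
    if destination_namespace is not None:
        # East-west traffic between namespaces is not translated.
        return None
    # North-south traffic: every workload in the namespace shares one SNAT IP.
    return snat_ips[source_namespace]


# Hypothetical namespaces and documentation-range addresses.
snat_ips = {"team-a": "192.0.2.10", "team-b": "192.0.2.11"}

print(snat_source_ip("team-a", None, snat_ips))      # north-south
print(snat_source_ip("team-a", "team-b", snat_ips))  # east-west
```

Two workloads in `team-a`, whether vSphere Pods or Tanzu Kubernetes cluster nodes, would both resolve to the same address for north-south traffic, because the lookup is keyed by namespace rather than by workload.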

The maximum number of namespaces that can run depends on the Edge node size (Medium, Large, or X-Large) and the number of Edge nodes in the NSX Edge cluster. The number of namespaces must be less than 20 times the number of Edge nodes. For example, if the NSX Edge cluster has 10 Edge nodes of Large size, the maximum number of Supervisor Namespaces that can be created is 199.

For more information on the Edge Node size, see the NSX-T Data Center Installation Guide.
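The sizing rule above can be checked with a short calculation. The following is a minimal sketch: the function name `max_supervisor_namespaces` and the `per_node_factor` parameter are made up for illustration, and the effect of the Edge node size is not modeled because the text gives a figure only for the Large size.

```python
def max_supervisor_namespaces(edge_node_count: int, per_node_factor: int = 20) -> int:
    """Largest namespace count that is strictly less than
    per_node_factor times the number of Edge nodes."""
    if edge_node_count < 1:
        raise ValueError("an NSX Edge cluster needs at least one Edge node")
    # "Less than 20 times the number of Edge nodes" means the upper
    # bound itself is excluded, hence the subtraction of 1.
    return per_node_factor * edge_node_count - 1


# The example from the text: 10 Edge nodes of Large size.
print(max_supervisor_namespaces(10))  # 199
```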

Supervisor Cluster Networking

Supervisor clusters have separate segments within the shared tier-1 gateway. For each Tanzu Kubernetes cluster, segments are defined within the tier-1 gateway of the namespace.

Workloads within the same namespace, including vSphere Pods and Tanzu Kubernetes clusters, share a SNAT IP for north-south connectivity. East-west connectivity between namespaces does not use SNAT.

Tanzu Kubernetes Clusters Networking

After the Supervisor Cluster upgrade, when DevOps engineers provision the first Tanzu Kubernetes cluster in a Supervisor Namespace, the cluster will share the same tier-1 gateway and load balancer as the namespace. For each Tanzu Kubernetes cluster that is provisioned in that namespace, a segment is created for that cluster and it is connected to the shared tier-1 gateway in its Supervisor Namespace.

When a Tanzu Kubernetes cluster is provisioned by the Tanzu Kubernetes Grid Service, a single virtual server is created that provides layer-4 load balancing for the Kubernetes API. This virtual server is hosted on the load balancer that the cluster shares with the namespace and is responsible for routing kubectl traffic to the control plane. In addition, for each Kubernetes load balancer service that is created on the cluster, a virtual server is created that provides layer-4 load balancing for that service.
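To make the virtual server accounting concrete, the sketch below lists the virtual servers that one cluster would place on the namespace load balancer: one for the Kubernetes API, plus one per load balancer service. The `VirtualServer` class, the function name, and the cluster and service names are hypothetical and do not correspond to NSX-T or NCP objects.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VirtualServer:
    purpose: str
    layer: int = 4  # both kinds described in the text are layer-4


def virtual_servers_for_cluster(
    cluster_name: str, lb_service_names: List[str]
) -> List[VirtualServer]:
    """List the virtual servers one Tanzu Kubernetes cluster adds to the
    load balancer it shares with its namespace."""
    # One virtual server fronts the Kubernetes API (kubectl traffic).
    servers = [VirtualServer(f"{cluster_name}: Kubernetes API")]
    # One more virtual server per Kubernetes load balancer service.
    servers += [
        VirtualServer(f"{cluster_name}: service {name}")
        for name in lb_service_names
    ]
    return servers


# Hypothetical cluster with two load balancer services.
for vs in virtual_servers_for_cluster("tkc-01", ["web", "api"]):
    print(vs.purpose, "(layer", str(vs.layer) + ")")
```

Because all clusters in a namespace share one load balancer, the total for the namespace is the sum of these lists across its clusters.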