In a vSphere with Tanzu environment, a Supervisor Cluster can use either the vSphere networking stack or VMware NSX® to provide connectivity to Kubernetes control plane VMs, services, and workloads. When a Supervisor Cluster is configured with the vSphere networking stack, all hosts from the cluster are connected to a vSphere Distributed Switch that provides connectivity to Kubernetes workloads and control plane VMs. A Supervisor Cluster that uses the vSphere networking stack requires a load balancer on the vCenter Server management network to provide connectivity to DevOps users and external services. A Supervisor Cluster that is configured with VMware NSX® uses the software-based networks of the solution, as well as an NSX Edge load balancer, to provide connectivity to external services and DevOps users.

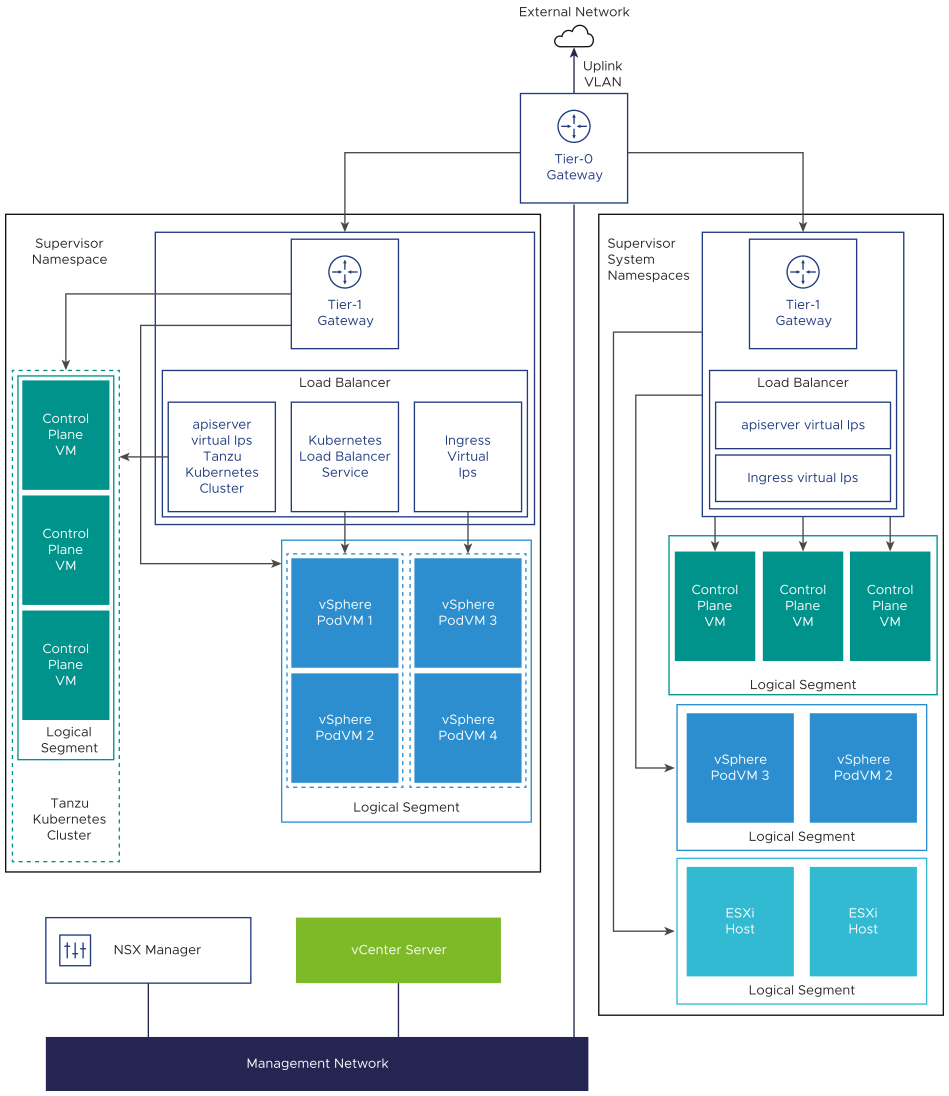

Supervisor Cluster Networking with NSX

VMware NSX® provides network connectivity to the objects inside the Supervisor Cluster and external networks. Connectivity to the ESXi hosts comprising the cluster is handled by the standard vSphere networks.

You can configure the Supervisor Cluster networking manually, either by using an existing NSX deployment or by deploying a new instance of NSX.

See the Product Interoperability Matrix for information about compatibility of vCenter Server and VMware NSX®.

For information about how the network topology changes when you upgrade from vSphere with Tanzu Version 7.0 Update 1 to Version 7.0 Update 2, see Network Topology Upgrade.

- NSX Container Plugin (NCP) provides integration between NSX and Kubernetes. The main component of NCP runs in a container and communicates with NSX Manager and with the Kubernetes control plane. NCP monitors changes to containers and other resources and manages networking resources such as logical ports, segments, routers, and security groups for the containers by calling the NSX API.

By default, NCP creates one shared tier-1 gateway for system namespaces, and a tier-1 gateway and load balancer for each namespace. Each tier-1 gateway is connected to the tier-0 gateway and a default segment.

System namespaces are namespaces used by the core components that are integral to the functioning of the Supervisor Cluster and Tanzu Kubernetes clusters. The shared network resources, which include the tier-1 gateway, load balancer, and SNAT IP, are grouped in a system namespace.

- NSX Edge provides connectivity from external networks to Supervisor Cluster objects. The NSX Edge cluster has a load balancer that provides redundancy for the Kubernetes API servers residing on the control plane VMs and for any application that must be published and accessible from outside the Supervisor Cluster.

- A tier-0 gateway is associated with the NSX Edge cluster to provide routing to the external network. The uplink interface uses either dynamic routing (BGP) or static routing.

- Each vSphere Namespace has a separate network and a set of networking resources shared by the applications inside the namespace, such as a tier-1 gateway, a load balancer service, and an SNAT IP address.

- Workloads running in vSphere Pods, regular VMs, or Tanzu Kubernetes clusters that are in the same namespace share the same SNAT IP for north-south connectivity.

- Workloads running in vSphere Pods or Tanzu Kubernetes clusters have the same isolation rule, which is implemented by the default firewall.

- A separate SNAT IP is not required for each Kubernetes namespace. East-west connectivity between namespaces does not use SNAT.

- The segments for each namespace reside on the vSphere Distributed Switch (VDS) functioning in Standard mode that is associated with the NSX Edge cluster. The segments provide an overlay network to the Supervisor Cluster.

- Supervisor Clusters have separate segments within the shared tier-1 gateway. For each Tanzu Kubernetes cluster, segments are defined within the tier-1 gateway of the namespace.

- The Spherelet processes on each ESXi host communicate with vCenter Server through an interface on the Management Network.
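
The per-namespace resources and the SNAT behavior described in the list above can be illustrated with a small model. The following Python sketch is illustrative only and does not call NSX, NCP, or vCenter Server. All class names, the `t1-`/`lb-` naming scheme, and the IP addresses (taken from the 192.0.2.0/24 documentation range) are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class NamespaceNetwork:
    """Networking resources shared by the workloads in one vSphere Namespace."""
    name: str
    tier1_gateway: str   # hypothetical name; attached to the tier-0 gateway
    load_balancer: str   # hypothetical name
    snat_ip: str         # one SNAT IP shared by every workload in the namespace


@dataclass
class Workload:
    """A vSphere Pod, a regular VM, or a Tanzu Kubernetes cluster node."""
    name: str
    namespace: str


@dataclass
class SupervisorTopology:
    """Toy model: one tier-0 gateway with one tier-1 gateway per namespace."""
    tier0_gateway: str
    namespaces: Dict[str, NamespaceNetwork] = field(default_factory=dict)

    def add_namespace(self, name: str, snat_ip: str) -> NamespaceNetwork:
        # Mirrors the default behavior described above: each namespace gets
        # its own tier-1 gateway, load balancer, and SNAT IP.
        net = NamespaceNetwork(
            name=name,
            tier1_gateway=f"t1-{name}",
            load_balancer=f"lb-{name}",
            snat_ip=snat_ip,
        )
        self.namespaces[name] = net
        return net

    def egress_source_ip(self, src: Workload, dst: Optional[Workload]) -> Optional[str]:
        """Return the SNAT IP applied to traffic leaving `src`.

        `dst=None` stands for an external destination (north-south traffic),
        which uses the namespace SNAT IP. Traffic to another workload inside
        the Supervisor Cluster (east-west) is not translated, so None is returned.
        """
        if dst is not None:
            return None
        return self.namespaces[src.namespace].snat_ip


# Example topology with two namespaces (all values are placeholders).
topology = SupervisorTopology(tier0_gateway="t0-edge")
topology.add_namespace("team-a", snat_ip="192.0.2.10")
topology.add_namespace("team-b", snat_ip="192.0.2.20")

pod = Workload("web-pod", "team-a")
vm = Workload("db-vm", "team-a")
other = Workload("api-pod", "team-b")
```

In this model, `topology.egress_source_ip(pod, None)` and `topology.egress_source_ip(vm, None)` return the same address because both workloads belong to `team-a`, while `topology.egress_source_ip(pod, other)` returns `None` because traffic between namespaces is not translated.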

To learn more about Supervisor Cluster networking, watch the video vSphere 7 with Kubernetes Network Service - Part 1 - The Supervisor Cluster.

Networking Configuration Methods with NSX

- The simplest way to configure the Supervisor Cluster networking is by using the VMware Cloud Foundation SDDC Manager. For more information, see Working with Workload Management in the VMware Cloud Foundation SDDC Manager documentation.

- You can also configure the Supervisor Cluster networking manually by using an existing NSX deployment or by deploying a new instance of NSX. See Install and Configure NSX for vSphere with Tanzu for more information.

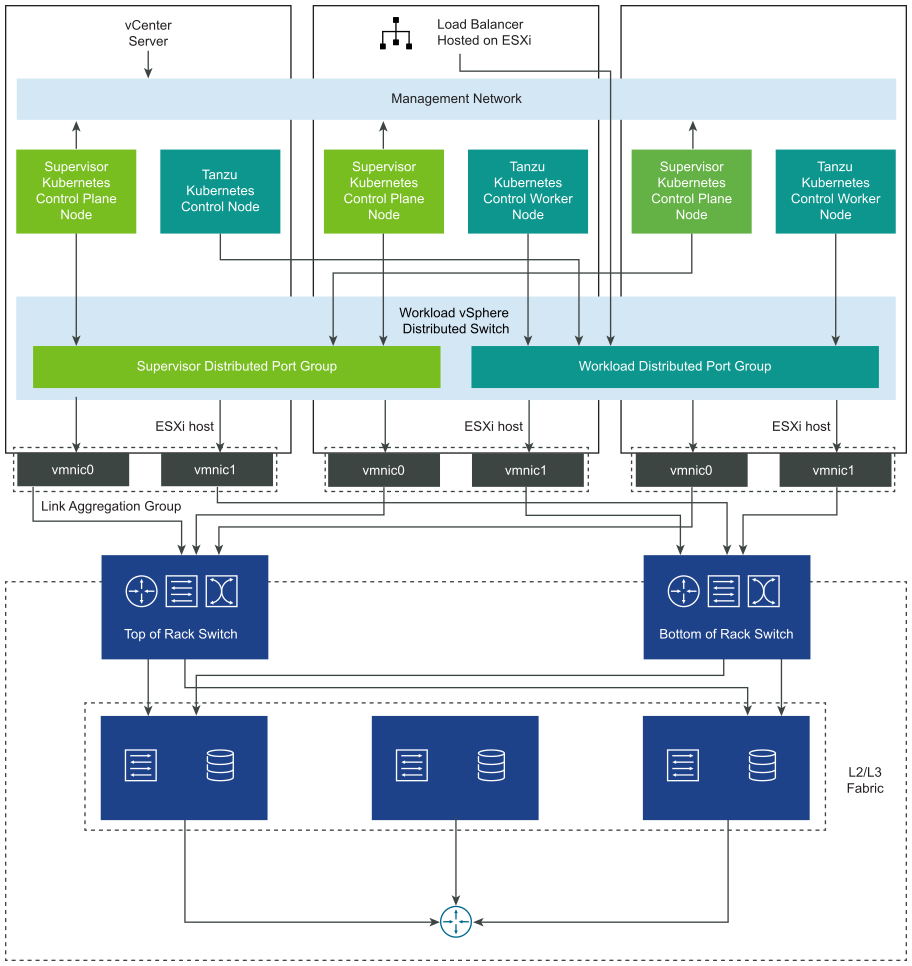

Supervisor Cluster Networking with vSphere Distributed Switch

A Supervisor Cluster that is backed by a vSphere Distributed Switch uses distributed port groups as Workload Networks for namespaces.

Depending on the topology that you implement for the Supervisor Cluster, you can use one or more distributed port groups as Workload Networks. The network that provides connectivity to the Kubernetes control plane VMs is called the Primary Workload Network. You can assign this network to all the namespaces on the Supervisor Cluster, or you can use a different network for each namespace. Tanzu Kubernetes clusters connect to the Workload Network that is assigned to the namespace where the clusters reside.
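
The assignment rules in the preceding paragraph can be sketched as a simple lookup. The Python model below is a minimal illustration, not a vSphere API: the class, its methods, and the port group names are all hypothetical. It assumes that a namespace with no explicitly assigned network is given the Primary Workload Network, which is one of the two options the paragraph describes.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class VdsSupervisorNetworking:
    """Toy model of Workload Network assignment on a VDS-backed Supervisor Cluster."""
    primary_workload_network: str  # port group used by the control plane VMs
    namespace_networks: Dict[str, str] = field(default_factory=dict)

    def assign(self, namespace: str, port_group: Optional[str] = None) -> None:
        # Assumption for this sketch: when no port group is given, the
        # namespace uses the Primary Workload Network.
        self.namespace_networks[namespace] = port_group or self.primary_workload_network

    def network_for_cluster(self, namespace: str) -> str:
        # A Tanzu Kubernetes cluster connects to the Workload Network
        # assigned to the namespace where it resides.
        return self.namespace_networks[namespace]


# Placeholder port group names.
networking = VdsSupervisorNetworking(primary_workload_network="pg-workload-primary")
networking.assign("team-a")                      # shares the primary network
networking.assign("team-b", "pg-workload-b")     # isolated on its own port group
```

Here a cluster in `team-a` resolves to `pg-workload-primary`, and a cluster in `team-b` resolves to `pg-workload-b`.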

A Supervisor Cluster that is backed by a vSphere Distributed Switch uses a load balancer for providing connectivity to DevOps users and external services. You can use the NSX Advanced Load Balancer or the HAProxy load balancer.

For more information, see Configuring vSphere Networking and NSX Advanced Load Balancer for vSphere with Tanzu and Install and Configure the HAProxy Load Balancer.