ESXi can connect to external SAN storage using the Internet SCSI (iSCSI) protocol. In addition to traditional iSCSI, ESXi also supports iSCSI Extensions for RDMA (iSER).

With the iSER protocol, the host uses the same iSCSI framework but replaces the TCP/IP transport with the Remote Direct Memory Access (RDMA) transport.

About iSCSI SAN

iSCSI SAN uses Ethernet connections between hosts and high-performance storage subsystems.

On the host side, the iSCSI SAN components include iSCSI host bus adapters (HBAs) or Network Interface Cards (NICs). The iSCSI network also includes switches and routers that transport the storage traffic, cables, storage processors (SPs), and storage disk systems.

The iSCSI SAN uses a client-server architecture.

The client, called the iSCSI initiator, operates on your ESXi host. It initiates iSCSI sessions by issuing SCSI commands and transmitting them, encapsulated in the iSCSI protocol, to an iSCSI server. The server is known as an iSCSI target. Typically, the iSCSI target represents a physical storage system on the network.

The target can also be a virtual iSCSI SAN, for example, an iSCSI target emulator running in a virtual machine. The iSCSI target responds to the initiator's commands by transmitting required iSCSI data.

iSCSI Multipathing

When transferring data between the host server and storage, the SAN uses a technique known as multipathing. With multipathing, your ESXi host can have more than one physical path to a LUN on a storage system.

Generally, a single path from a host to a LUN consists of an iSCSI adapter or NIC, switch ports, connecting cables, and the storage controller port. If any component of the path fails, the host selects another available path for I/O. The process of detecting a failed path and switching to another is called path failover.
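The failover behavior described above can be modeled in a few lines. The following Python sketch is illustrative only and does not show how ESXi multipathing is implemented. The `Path` and `MultipathLun` classes are hypothetical, and the path names only imitate the ESXi runtime-name style (adapter, channel, target, LUN).

```python
from dataclasses import dataclass


@dataclass
class Path:
    """One physical path from the host to a LUN (hypothetical model)."""
    name: str
    healthy: bool = True


class MultipathLun:
    """Sends I/O down one active path and fails over when that path breaks."""

    def __init__(self, paths):
        if not paths:
            raise ValueError("a LUN needs at least one path")
        self.paths = list(paths)
        self.active = self.paths[0]

    def mark_failed(self, name):
        # A failure in any component (NIC, cable, switch port, SP port) takes the path down.
        for path in self.paths:
            if path.name == name:
                path.healthy = False

    def select_path(self):
        # Keep the active path while it works; otherwise fail over
        # to the first remaining healthy path.
        if self.active.healthy:
            return self.active
        for path in self.paths:
            if path.healthy:
                self.active = path
                return path
        raise RuntimeError("no healthy path to the LUN")


lun = MultipathLun([Path("vmhba64:C0:T0:L0"), Path("vmhba64:C0:T1:L0")])
lun.mark_failed("vmhba64:C0:T0:L0")
print(lun.select_path().name)  # vmhba64:C0:T1:L0
```

This model keeps using the active path until that path is marked as failed. It therefore shows path failover only, not load balancing across several paths.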

For more information on multipathing, see Understanding Multipathing and Failover in the ESXi Environment.

Nodes and Ports in the iSCSI SAN

A single discoverable entity on the iSCSI SAN, such as an initiator or a target, represents an iSCSI node.

Each node has a node name. ESXi uses several methods to identify a node.

- IP Address

- Each iSCSI node can have an IP address associated with it so that routing and switching equipment on your network can establish the connection between the host and storage. This address is like the IP address that you assign to your computer to get access to your company's network or the Internet.

- iSCSI Name

- A worldwide unique name for identifying the node. iSCSI uses the iSCSI Qualified Name (IQN) and Extended Unique Identifier (EUI).

- iSCSI Alias

- A more manageable name for an iSCSI device or port used instead of the iSCSI name. iSCSI aliases are not unique and are intended to be a friendly name to associate with a port.

Each node has one or more ports that connect it to the SAN. iSCSI ports are the endpoints of an iSCSI session.

iSCSI Naming Conventions

iSCSI uses a special unique name to identify an iSCSI node, either target or initiator.

iSCSI names are formatted in two different ways. The most common is the IQN format.

For more details on iSCSI naming requirements and string profiles, see RFC 3721 and RFC 3722 on the IETF website.

- iSCSI Qualified Name Format

The iSCSI Qualified Name (IQN) format takes the form iqn.yyyy-mm.naming-authority:unique name, where:

- yyyy-mm is the year and month when the naming authority was established.

- naming-authority is the reverse syntax of the Internet domain name of the naming authority. For example, the iscsi.vmware.com naming authority can have the iSCSI qualified name form of iqn.1998-01.com.vmware.iscsi. The name indicates that the vmware.com domain name was registered in January of 1998, and iscsi is a subdomain, maintained by vmware.com.

- unique name is any name you want to use, for example, the name of your host. The naming authority must make sure that any names assigned following the colon are unique, such as:

- iqn.1998-01.com.vmware.iscsi:name1

- iqn.1998-01.com.vmware.iscsi:name2

- iqn.1998-01.com.vmware.iscsi:name999

- Extended Unique Identifier Format

The Extended Unique Identifier (EUI) format takes the form eui.16_hex_digits, for example, eui.0123456789ABCDEF.

The 16 hexadecimal digits are the text representation of a 64-bit number in the IEEE EUI-64 (extended unique identifier) format. The top 24 bits are a company ID that IEEE registers with a particular company. The remaining 40 bits are assigned by the entity holding that company ID and must be unique.
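You can check the naming rules above with a short script. The following Python sketch builds and parses IQN names and splits an EUI name into its company ID and the 40-bit value assigned by that company. The helper names are hypothetical. The regular expressions are simplified and do not implement the full RFC 3722 string profile, which covers normalization and the complete allowed character set.

```python
import re

# Simplified patterns; the unique-name part after the colon is optional in IQNs.
IQN_RE = re.compile(r"^iqn\.(\d{4})-(\d{2})\.([a-z0-9.-]+)(?::(.+))?$")
EUI_RE = re.compile(r"^eui\.([0-9A-Fa-f]{16})$")


def build_iqn(year, month, domain, unique_name):
    """Build an IQN by reversing the naming authority's domain name."""
    if not 1 <= month <= 12:
        raise ValueError("month must be 1-12")
    authority = ".".join(reversed(domain.lower().split(".")))
    return f"iqn.{year:04d}-{month:02d}.{authority}:{unique_name}"


def parse_iqn(name):
    """Split an IQN into its date, naming authority, domain, and unique name."""
    m = IQN_RE.match(name)
    if not m:
        raise ValueError(f"not an IQN: {name!r}")
    year, month, authority, unique_name = m.groups()
    if not 1 <= int(month) <= 12:
        raise ValueError("month must be 01-12")
    return {
        "date": f"{year}-{month}",
        "authority": authority,
        # Undo the reversal to recover the registered domain name.
        "domain": ".".join(reversed(authority.split("."))),
        "unique_name": unique_name,
    }


def parse_eui(name):
    """Return (company_id, extension) for an eui. name."""
    m = EUI_RE.match(name)
    if not m:
        raise ValueError(f"not an EUI name: {name!r}")
    value = int(m.group(1), 16)            # the 64-bit EUI-64 number
    company_id = value >> 40               # top 24 bits: IEEE-registered company ID
    extension = value & ((1 << 40) - 1)    # remaining 40 bits: assigned by the company
    return company_id, extension


print(build_iqn(1998, 1, "iscsi.vmware.com", "name1"))  # iqn.1998-01.com.vmware.iscsi:name1
```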

iSCSI Initiators

To access iSCSI targets, your ESXi host uses iSCSI initiators.

The initiator is software or hardware installed on your ESXi host. The iSCSI initiator originates communication between your host and an external iSCSI storage system and sends data to the storage system.

In the ESXi environment, iSCSI adapters configured on your host play the role of initiators. ESXi supports several types of iSCSI adapters.

For information on configuring and using iSCSI adapters, see Configuring iSCSI and iSER Adapters and Storage with ESXi.

- Software iSCSI Adapter

- A software iSCSI adapter is VMware code built into the VMkernel. Using the software iSCSI adapter, your host can connect to the iSCSI storage device through standard network adapters. The software iSCSI adapter handles iSCSI processing while communicating with the network adapter. With the software iSCSI adapter, you can use iSCSI technology without purchasing specialized hardware.

- Hardware iSCSI Adapter

A hardware iSCSI adapter is a third-party adapter that offloads iSCSI and network processing from your host. Hardware iSCSI adapters are divided into two categories.

- Dependent Hardware iSCSI Adapter. Depends on VMware networking, and iSCSI configuration and management interfaces provided by VMware.

This type of adapter can be a card that presents a standard network adapter and iSCSI offload functionality for the same port. The iSCSI offload functionality depends on the host's network configuration to obtain the IP, MAC, and other parameters used for iSCSI sessions. An example of a dependent adapter is the iSCSI licensed Broadcom 5709 NIC.

- Independent Hardware iSCSI Adapter. Implements its own networking and iSCSI configuration and management interfaces.

Typically, an independent hardware iSCSI adapter is a card that either presents only iSCSI offload functionality or iSCSI offload functionality and standard NIC functionality. The iSCSI offload functionality has independent configuration management that assigns the IP, MAC, and other parameters used for the iSCSI sessions. An example of an independent adapter is the QLogic QLA4052 adapter.

Hardware iSCSI adapters might need to be licensed. Otherwise, they might not appear in the vSphere Client or vSphere CLI. Contact your vendor for licensing information.

Using iSER Protocol with ESXi

In addition to traditional iSCSI, ESXi supports the iSCSI Extensions for RDMA (iSER) protocol. When the iSER protocol is enabled, the iSCSI framework on the ESXi host can use the Remote Direct Memory Access (RDMA) transport instead of TCP/IP.

The traditional iSCSI protocol carries SCSI commands over a TCP/IP network between an iSCSI initiator on a host and an iSCSI target on a storage device. The iSCSI protocol encapsulates the commands and assembles the data in packets for the TCP/IP layer. When the data arrives, the iSCSI protocol disassembles the TCP/IP packets so that the SCSI commands can be differentiated and delivered to the storage device.
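The following Python sketch makes the encapsulation step concrete. It packs a SCSI READ(10) command into the 48-byte Basic Header Segment of an iSCSI SCSI Command PDU, following the layout in RFC 3720. The sketch is simplified and assumes no additional header segments, no immediate data, no digests, a single-level LUN below 256, and 512-byte blocks. The function names are hypothetical. The TCP/IP layer then carries the resulting bytes.

```python
import struct

OPCODE_SCSI_COMMAND = 0x01  # iSCSI opcode for a SCSI Command PDU
FLAG_FINAL = 0x80           # F bit: no more PDUs follow for this command
FLAG_READ = 0x40            # R bit: data is expected from the target
ATTR_SIMPLE = 0x01          # task attribute: simple queueing


def read10_cdb(lba, blocks):
    """Build a SCSI READ(10) CDB: opcode 0x28, 32-bit LBA, 16-bit block count."""
    return struct.pack(">BBIBHB", 0x28, 0, lba, 0, blocks, 0)


def scsi_command_bhs(lun, task_tag, cmd_sn, exp_stat_sn, cdb, expected_len):
    """Pack the 48-byte Basic Header Segment of a read-type SCSI Command PDU."""
    if len(cdb) > 16:
        raise ValueError("CDBs longer than 16 bytes need an additional header segment")
    if not 0 <= lun < 256:
        raise ValueError("this sketch encodes only single-level LUNs 0-255")
    lun_field = bytes([0, lun]) + bytes(6)  # simplified single-level LUN encoding
    header = struct.pack(
        ">BB2xB3s8sIIII",
        OPCODE_SCSI_COMMAND,
        FLAG_FINAL | FLAG_READ | ATTR_SIMPLE,
        0,              # TotalAHSLength: no additional header segments
        bytes(3),       # DataSegmentLength: no immediate data
        lun_field,
        task_tag,       # Initiator Task Tag
        expected_len,   # Expected Data Transfer Length in bytes
        cmd_sn,
        exp_stat_sn,
    )
    return header + cdb.ljust(16, b"\x00")  # the CDB occupies bytes 32-47


# Read 8 blocks starting at LBA 2048 from LUN 3, assuming 512-byte blocks.
bhs = scsi_command_bhs(3, task_tag=1, cmd_sn=10, exp_stat_sn=1,
                       cdb=read10_cdb(2048, 8), expected_len=8 * 512)
print(len(bhs))  # 48
```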

iSER differs from traditional iSCSI because it replaces the TCP/IP data transfer model with the Remote Direct Memory Access (RDMA) transport. Using the direct data placement technology of RDMA, the iSER protocol can transfer data directly between the memory buffers of the ESXi host and storage devices. This method eliminates unnecessary TCP/IP processing and data copying, and can also reduce latency and the CPU load on the storage device.

In the iSER environment, iSCSI works exactly as before, but uses an underlying RDMA fabric interface instead of the TCP/IP-based interface.

Because the iSER protocol preserves compatibility with the iSCSI infrastructure, the process of enabling iSER on the ESXi host is similar to the iSCSI process. See Configure iSER with ESXi.

Establishing iSCSI Connections

In the ESXi context, the term target identifies a single storage unit that your host can access. The terms storage device and LUN describe a logical volume that represents storage space on a target. Typically, the terms device and LUN, in the ESXi context, mean a SCSI volume presented to your host from a storage target and available for formatting.

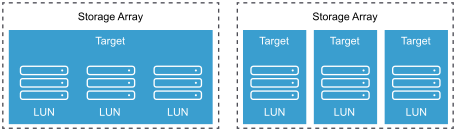

Different iSCSI storage vendors present storage to hosts in different ways. Some vendors present multiple LUNs on a single target. Others present multiple targets with one LUN each.

In these examples, three LUNs are available in each configuration. In the first case, the host detects one target, but that target has three LUNs that can be used. Each of the LUNs represents an individual storage volume. In the second case, the host detects three different targets, each having one LUN.

Host-based iSCSI initiators establish connections to each target. Storage systems with a single target containing multiple LUNs have traffic to all the LUNs on a single connection. With a system that has three targets with one LUN each, the host uses separate connections to the three LUNs.

This information is useful when you are trying to aggregate storage traffic on multiple connections from the host with multiple iSCSI adapters. You can set the traffic for one target to a particular adapter, and use a different adapter for the traffic to another target.
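The following Python sketch roughly illustrates this per-target assignment by distributing target connections across adapters in round-robin order. The round-robin policy, the `plan_sessions` helper, and the target and adapter names are hypothetical. ESXi does not necessarily assign targets this way.

```python
from itertools import cycle


def plan_sessions(targets, adapters):
    """Assign each target to an adapter in round-robin order (hypothetical policy).

    `targets` maps a target name to the LUNs it presents. Because the host
    connects per target, all LUNs behind one target share that connection.
    """
    plan = {}
    for target, adapter in zip(sorted(targets), cycle(adapters)):
        plan.setdefault(adapter, []).append((target, targets[target]))
    return plan


adapters = ["vmhba33", "vmhba34"]
one_target = {"iqn.2024-01.com.example:array": [0, 1, 2]}
three_targets = {
    "iqn.2024-01.com.example:t1": [0],
    "iqn.2024-01.com.example:t2": [0],
    "iqn.2024-01.com.example:t3": [0],
}
print(sorted(plan_sessions(one_target, adapters)))     # ['vmhba33']
print(sorted(plan_sessions(three_targets, adapters)))  # ['vmhba33', 'vmhba34']
```

With one target that holds three LUNs, only one adapter carries traffic. Three single-LUN targets spread across both adapters.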

iSCSI Storage System Types

The types of storage systems that your host supports include active-active, active-passive, ALUA-compliant, and virtual port storage systems.

- Active-active storage system

- Supports access to the LUNs simultaneously through all the storage ports that are available without significant performance degradation. All the paths are always active, unless a path fails.

- Active-passive storage system

- A system in which one storage processor is actively providing access to a given LUN. The other processors act as a backup for the LUN and can actively provide access for I/O to other LUNs. I/O can be successfully sent only to an active port for a given LUN. If access through the active storage port fails, one of the passive storage processors can be activated by the servers accessing it.

- Asymmetrical storage system

- Supports Asymmetric Logical Unit Access (ALUA). ALUA-compliant storage systems provide different levels of access per port. With ALUA, hosts can determine the states of target ports and prioritize paths. The host uses some of the active paths as primary and others as secondary.

- Virtual port storage system

- Supports access to all available LUNs through a single virtual port. Virtual port storage systems are active-active storage devices, but hide their multiple connections through a single port. ESXi multipathing does not make multiple connections from a specific port to the storage by default. Some storage vendors supply session managers to establish and manage multiple connections to their storage. These storage systems handle port failovers and connection balancing transparently. This capability is often called transparent failover.
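For ALUA-compliant systems, you can sketch path prioritization as grouping paths by the access state that each target port group reports. The following Python sketch uses a subset of the ALUA access states defined in the SCSI Primary Commands (SPC) standard. The `rank_paths` helper and the path names are hypothetical. In ESXi, the multipathing plug-ins handle real path selection.

```python
from enum import Enum


class AluaState(Enum):
    """Subset of the target port group access states defined for ALUA."""
    ACTIVE_OPTIMIZED = "active/optimized"
    ACTIVE_NON_OPTIMIZED = "active/non-optimized"
    STANDBY = "standby"
    UNAVAILABLE = "unavailable"


def rank_paths(paths):
    """Split paths into primary and secondary lists by reported ALUA state.

    Active/optimized paths become primary and active/non-optimized paths
    become secondary; standby and unavailable paths carry no I/O here.
    """
    primary = sorted(p for p, s in paths.items() if s is AluaState.ACTIVE_OPTIMIZED)
    secondary = sorted(p for p, s in paths.items() if s is AluaState.ACTIVE_NON_OPTIMIZED)
    return primary, secondary


primary, secondary = rank_paths({
    "vmhba64:C0:T0:L1": AluaState.ACTIVE_OPTIMIZED,
    "vmhba64:C0:T1:L1": AluaState.ACTIVE_NON_OPTIMIZED,
    "vmhba64:C0:T2:L1": AluaState.STANDBY,
})
print(primary, secondary)  # ['vmhba64:C0:T0:L1'] ['vmhba64:C0:T1:L1']
```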

Discovery, Authentication, and Access Control

You can use several mechanisms to discover your storage and to limit access to it.

You must configure your host and the iSCSI storage system to support your storage access control policy.

- Discovery

A discovery session is part of the iSCSI protocol. It returns the set of targets you can access on an iSCSI storage system. The two types of discovery available on ESXi are dynamic and static. Dynamic discovery obtains a list of accessible targets from the iSCSI storage system. Static discovery can access only a particular target by target name and address.

For more information, see Configure Dynamic or Static Discovery for iSCSI and iSER on ESXi Host.

- Authentication

iSCSI storage systems authenticate an initiator by a name and key pair.

ESXi supports the CHAP authentication protocol. To use CHAP authentication, the ESXi host and the iSCSI storage system must have CHAP enabled and have common credentials.

For information on enabling CHAP, see Configuring CHAP Parameters for iSCSI or iSER Storage Adapters on ESXi Host.

- Access Control

Access control is a policy set up on the iSCSI storage system. Most implementations support one or more of three types of access control:

- By initiator name

- By IP address

- By the CHAP protocol

Only initiators that meet all rules can access the iSCSI volume.

Using only CHAP for access control can slow down rescans because the ESXi host can discover all targets, but then fails at the authentication step. iSCSI rescans work faster if the host discovers only the targets it can authenticate.
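The following Python sketch shows how a target might combine the three access control types, where every configured rule must pass. The CHAP response follows RFC 1994 with MD5, which iSCSI selects with `CHAP_A=5`. The hash covers the one-octet identifier, the shared secret, and the challenge. The `AccessPolicy` class, its fields, and the example names and secret are hypothetical and do not describe any particular storage system.

```python
import hashlib
import hmac
import ipaddress
from dataclasses import dataclass, field


def chap_md5_response(identifier, secret, challenge):
    """CHAP response per RFC 1994: MD5 over identifier octet, secret, challenge."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


@dataclass
class AccessPolicy:
    """Hypothetical target-side policy; every rule must pass."""
    allowed_initiators: set
    allowed_networks: list
    chap_secrets: dict = field(default_factory=dict)  # CHAP name -> secret

    def allows(self, initiator, source_ip, chap_name, identifier, challenge, response):
        # Rule 1: initiator name.
        if initiator not in self.allowed_initiators:
            return False
        # Rule 2: source IP address.
        addr = ipaddress.ip_address(source_ip)
        if not any(addr in ipaddress.ip_network(net) for net in self.allowed_networks):
            return False
        # Rule 3: CHAP, comparing the expected and received digests in constant time.
        secret = self.chap_secrets.get(chap_name)
        if secret is None:
            return False
        expected = chap_md5_response(identifier, secret, challenge)
        return hmac.compare_digest(expected, response)


policy = AccessPolicy(
    allowed_initiators={"iqn.1998-01.com.vmware:esx01"},
    allowed_networks=["10.0.0.0/24"],
    chap_secrets={"esx01": b"example-secret-1"},
)
challenge = bytes(range(16))
good = chap_md5_response(7, b"example-secret-1", challenge)
print(policy.allows("iqn.1998-01.com.vmware:esx01", "10.0.0.15", "esx01", 7, challenge, good))  # True
print(policy.allows("iqn.1998-01.com.vmware:esx01", "10.0.1.15", "esx01", 7, challenge, good))  # False
```

Because all rules must pass, the order of the checks in this sketch does not change the result.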

How Virtual Machines Access Data on an iSCSI SAN

ESXi stores a virtual machine's disk files within a VMFS datastore that resides on a SAN storage device. When virtual machine guest operating systems send SCSI commands to their virtual disks, the SCSI virtualization layer translates these commands to VMFS file operations.

When a virtual machine interacts with its virtual disk stored on a SAN, the following process takes place:

- When the guest operating system in a virtual machine reads from or writes to a SCSI disk, it sends SCSI commands to the virtual disk.

- Device drivers in the virtual machine’s operating system communicate with the virtual SCSI controllers.

- The virtual SCSI controller forwards the commands to the VMkernel.

- The VMkernel performs the following tasks.

- Locates an appropriate virtual disk file in the VMFS volume.

- Maps the requests for the blocks on the virtual disk to blocks on the appropriate physical device.

- Sends the modified I/O request from the device driver in the VMkernel to the iSCSI initiator, hardware or software.

- If the iSCSI initiator is a hardware iSCSI adapter, independent or dependent, the adapter performs the following tasks.

- Encapsulates I/O requests into iSCSI Protocol Data Units (PDUs).

- Encapsulates iSCSI PDUs into TCP/IP packets.

- Sends IP packets over Ethernet to the iSCSI storage system.

- If the iSCSI initiator is a software iSCSI adapter, the following takes place.

- The iSCSI initiator encapsulates I/O requests into iSCSI PDUs.

- The initiator sends iSCSI PDUs through TCP/IP connections.

- The VMkernel TCP/IP stack relays TCP/IP packets to a physical NIC.

- The physical NIC sends IP packets over Ethernet to the iSCSI storage system.

- Ethernet switches and routers on the network carry the request to the appropriate storage device.
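You can illustrate the block-mapping step that the VMkernel performs with a simple extent table. The following Python sketch assumes that a virtual disk file is stored as a sorted, contiguous list of extents on a device. This is a hypothetical simplification, not the actual VMFS on-disk layout. The `Extent` class, the `map_request` helper, and the device name are also hypothetical. The sketch splits a request that crosses an extent boundary into separate physical requests.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Extent:
    """A contiguous run of the virtual disk file on one device (hypothetical)."""
    vdisk_start: int  # first virtual-disk block covered by this extent
    length: int       # number of blocks in the extent
    device: str       # device that holds the extent
    lba: int          # first physical block on that device


def map_request(extents, start, count):
    """Translate a virtual-disk block range into (device, lba, count) requests."""
    starts = [e.vdisk_start for e in extents]  # extents must be sorted and contiguous
    out = []
    while count > 0:
        i = bisect_right(starts, start) - 1
        if i < 0 or start >= extents[i].vdisk_start + extents[i].length:
            raise ValueError(f"block {start} is outside the virtual disk")
        e = extents[i]
        offset = start - e.vdisk_start
        n = min(count, e.length - offset)  # stop at the end of this extent
        out.append((e.device, e.lba + offset, n))
        start += n
        count -= n
    return out


extents = [Extent(0, 100, "lun0", 5000), Extent(100, 100, "lun0", 9000)]
print(map_request(extents, 95, 10))  # [('lun0', 5095, 5), ('lun0', 9000, 5)]
```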

Error Correction

To protect the integrity of iSCSI headers and data, the iSCSI protocol offers error-detection methods known as header digests and data digests.

The ESXi host supports both methods, and you can activate them. These methods check the header and SCSI data transferred between iSCSI initiators and targets, in both directions.

Header and data digests provide a noncryptographic data integrity check beyond the integrity checks that other networking layers, such as TCP and Ethernet, provide. The digests check the entire communication path, including all elements that can change the network-level traffic, such as routers, switches, and proxies.
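RFC 3720 specifies CRC32C (the Castagnoli polynomial) as the algorithm for header and data digests. The following Python sketch computes CRC32C with a lookup table and compares the result with a received digest. The function names are hypothetical. The sketch does not model where the digest sits in the PDU or its on-the-wire byte order.

```python
def _crc32c_table():
    """Lookup table for CRC32C (reflected Castagnoli polynomial 0x82F63B78)."""
    table = []
    for byte in range(256):
        crc = byte
        for _ in range(8):
            crc = (crc >> 1) ^ 0x82F63B78 if crc & 1 else crc >> 1
        table.append(crc)
    return table


_TABLE = _crc32c_table()


def crc32c(data):
    """Compute CRC32C over a bytes-like object."""
    crc = 0xFFFFFFFF
    for b in data:
        crc = (crc >> 8) ^ _TABLE[(crc ^ b) & 0xFF]
    return crc ^ 0xFFFFFFFF


def digest_matches(segment, received_digest):
    """Recompute the digest over a header or data segment and compare."""
    return crc32c(segment) == received_digest


print(hex(crc32c(b"123456789")))  # 0xe3069283
```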

The existence and type of the digests are negotiated when an iSCSI connection is established. When the initiator and target agree on a digest configuration, this digest must be used for all traffic between them.
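Digest negotiation follows the iSCSI list-negotiation rule from RFC 3720. The originator offers values such as `CRC32C` and `None` in order of preference. The responder answers with the first offered value that it supports, or with `Reject` if it supports none of them. The following Python sketch models the responder side of that exchange for the `HeaderDigest` or `DataDigest` key. The `negotiate_digest` helper is hypothetical.

```python
def negotiate_digest(offered, supported):
    """Pick the digest for HeaderDigest or DataDigest list negotiation.

    `offered` is the originator's comma-separated list in preference order,
    for example "CRC32C,None"; the responder returns the first value it supports.
    """
    for value in offered.split(","):
        value = value.strip()
        if value in supported:
            return value
    return "Reject"


print(negotiate_digest("CRC32C,None", {"CRC32C", "None"}))  # CRC32C
```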

Enabling header and data digests requires additional processing for both the initiator and the target, and can reduce throughput and increase CPU use.

For information on enabling header and data digests, see Configuring Advanced Parameters for iSCSI on ESXi Host.