Your Cloud Native Storage environment and the virtual machines that participate in the Kubernetes cluster must meet several requirements.

Cloud Native Storage Requirements

- A compatible version of vSphere.

- A compatible version of Kubernetes.

- A Kubernetes cluster deployed on the virtual machines. For details about deploying the vSphere CSI plug-in and running the Kubernetes cluster on vSphere, see the VMware vSphere Container Storage Plug-in Documentation.

Requirements for Kubernetes Cluster Virtual Machines

- Virtual machines with hardware version 15 or later. Install VMware Tools on each node virtual machine.

- Virtual machine hardware recommendations:

  - Set CPU and memory adequately based on workload requirements.

  - Use the VMware Paravirtual SCSI controller for the primary disk on the Node VM.

- All virtual machines must have access to a shared datastore, such as vSAN.

- Set the disk.EnableUUID parameter to TRUE on each node VM. See Configure Kubernetes Cluster in vSphere Virtual Machines.

- To avoid errors and unpredictable behavior, do not take snapshots of CNS node VMs.
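
The node VM requirements above can be expressed as a simple checklist. The following is a minimal sketch, not part of any VMware tooling: the `NodeVM` class and its fields are hypothetical stand-ins for data you would collect from your own inventory, and the check that `disk.EnableUUID` equals `TRUE` assumes that is the value required by the referenced configuration topic.

```python
from dataclasses import dataclass, field


@dataclass
class NodeVM:
    """Hypothetical, simplified description of a Kubernetes node VM."""
    name: str
    hardware_version: int
    tools_installed: bool
    primary_disk_controller: str          # for example "pvscsi" (illustrative label)
    extra_config: dict = field(default_factory=dict)
    has_snapshots: bool = False


def check_node_vm(vm: NodeVM) -> list[str]:
    """Return findings for one node VM; an empty list means nothing was flagged."""
    findings = []
    if vm.hardware_version < 15:
        findings.append("hardware version must be 15 or later")
    if not vm.tools_installed:
        findings.append("VMware Tools is not installed")
    if vm.primary_disk_controller.lower() != "pvscsi":
        # This is a recommendation in the list above, not a hard requirement.
        findings.append("primary disk should use the VMware Paravirtual SCSI controller")
    # Assumption: the parameter must be set to TRUE.
    if str(vm.extra_config.get("disk.EnableUUID", "")).upper() != "TRUE":
        findings.append("disk.EnableUUID is not set to TRUE")
    if vm.has_snapshots:
        findings.append("node VM has snapshots")
    return findings
```

For example, `check_node_vm(NodeVM("node-1", 14, True, "pvscsi", {"disk.EnableUUID": "TRUE"}))` returns a single finding about the hardware version.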

Requirements for CNS File Volume

- Use vSphere version 7.0 or later with a compatible Kubernetes version.

- Use a compatible version of CSI. For information, see the VMware vSphere Container Storage Plug-in Documentation.

- Enable and configure the vSAN file service, including the necessary file service domains, IP pools, and networks. For information, see the Administering VMware vSAN documentation.

- Follow specific guidelines to configure network access from a guest OS in the Kubernetes node to a vSAN file share. See Configuring Network Access to vSAN File Share.

Configuring Network Access to vSAN File Share

To provision ReadWriteMany persistent volumes in your generic vSphere Kubernetes environment, configure the necessary networks, switches, and routers from the Kubernetes nodes to the vSAN file service network.
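
For context, a ReadWriteMany volume is requested through a persistent volume claim whose access mode is `ReadWriteMany`. The sketch below builds such a claim as a plain dictionary using standard Kubernetes API fields and prints it as JSON. The claim name, the storage class name `example-vsan-file-sc`, and the size are placeholders, not values defined by this documentation.

```python
import json


def rwx_pvc_manifest(name: str, storage_class: str, size: str = "1Gi") -> dict:
    """Build a PersistentVolumeClaim manifest that requests ReadWriteMany access."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,  # placeholder storage class name
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    manifest = rwx_pvc_manifest("example-file-pvc", "example-vsan-file-sc")
    print(json.dumps(manifest, indent=2))
```

The JSON output can be saved to a file and applied with your usual Kubernetes tooling once a suitable storage class exists in the cluster.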

Setting Up Network

- On every Kubernetes node, you can use a dedicated vNIC for the vSAN file share traffic. This option is needed only if you want a secure data traffic path for your file volumes.

- If you use a dedicated vNIC, make sure that the traffic through the dedicated vNIC is routable to one or more vSAN file service networks.

- Make sure that only the guest OS on each Kubernetes node can directly access the vSAN file share through the file share IP address. The pods in the node cannot ping or access the vSAN file share by its IP address.

The CNS CSI driver creates a mount point in the guest OS so that only the pods configured to use the CNS file volume can access the vSAN file share.

- Avoid creating an IP address clash between the node VMs and vSAN file shares.
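
One way to catch an IP address clash early is to compare the addresses planned for the node VMs with the addresses in the vSAN file service IP pool. The following is a minimal sketch using Python's standard `ipaddress` module. The function name and the sample addresses are illustrative, and how you obtain the two address lists depends on your environment.

```python
import ipaddress


def find_ip_clashes(node_ips, file_service_ips):
    """Return the addresses that appear in both lists, as strings."""
    nodes = {ipaddress.ip_address(ip) for ip in node_ips}
    shares = {ipaddress.ip_address(ip) for ip in file_service_ips}
    clashes = nodes & shares
    # Sort by IP version first so IPv4 and IPv6 addresses can be mixed safely.
    ordered = sorted(clashes, key=lambda ip: (ip.version, int(ip)))
    return [str(ip) for ip in ordered]


if __name__ == "__main__":
    print(find_ip_clashes(["10.0.0.5", "10.0.0.6"], ["10.0.0.6", "10.0.1.1"]))
```

An empty result means the two lists share no addresses. It does not confirm that routing between the networks is configured correctly.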

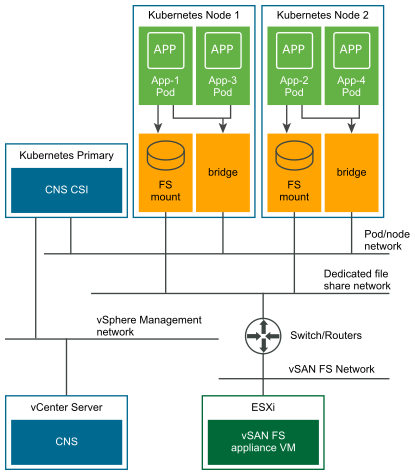

The following illustration is an example of the CNS network configuration with the vSAN file share service.

- The configuration uses separate networks for different items in the CNS environment.

| Network | Description |
| --- | --- |
| vSphere management network | Typically, in a generic Kubernetes cluster, every node has access to this network. |
| Pod or node network | Kubernetes uses this network for the node-to-node or pod-to-pod communication. |
| Dedicated file share network | CNS file volume data traffic uses this network. |
| vSAN file share network | Network where the vSAN file share is enabled and where file shares are available. |

- Every Kubernetes node has a dedicated vNIC for the file traffic. This vNIC is separate from the vNIC used for the node-to-node or pod-to-pod communication. This configuration is only an example and is not mandatory.

- Only those applications that are configured to use the CNS file share have access to vSAN file shares through the mount point in the node guest OS. For example, in the illustration, the following takes place:

  - App-1 and App-2 pods are configured to use a file volume, and have access to the file share through the mount point created by the CSI driver.

  - App-3 and App-4 are not configured with a file volume and cannot access file shares.

- The vSAN file shares are deployed as containers in a vSAN file share appliance VM on the ESXi host. A Kubernetes deployer, which is software or a service that can configure, deploy, and manage Kubernetes clusters, configures the necessary routers and switches so that the guest OS in the Kubernetes node can access the vSAN file shares.

Security Limitations

- The CNS file functionality assumes that anyone who has the CNS file volume ID is an authorized user of the volume. Any user who has the CNS file volume ID can access the data stored in the volume.

- CNS file volume supports only AUTH_SYS authentication, which is based on user IDs. To protect access to the data in the CNS file volume, you must use appropriate user IDs for the containers that access the CNS file volume.

- An unbound ReadWriteMany persistent volume referring to a CNS file volume can be bound by a persistent volume claim created by any Kubernetes user under any namespace. Make sure that only authorized users have access to Kubernetes to avoid security issues.
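
Because AUTH_SYS relies on user IDs, one common way to control the ID that a container presents is the pod-level `securityContext` in the Kubernetes pod specification. The sketch below builds a pod manifest that mounts a file volume claim and runs with an explicit user and group ID. The pod name, claim name, image, mount path, and the ID value 1000 are placeholders, and the right IDs for your file shares depend on your own access policy.

```python
import json


def file_volume_pod(name: str, claim_name: str, uid: int, gid: int) -> dict:
    """Build a Pod manifest that mounts a PVC and runs as a fixed user and group ID."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # The IDs set here apply to all containers in the pod.
            "securityContext": {"runAsUser": uid, "runAsGroup": gid},
            "containers": [
                {
                    "name": "app",
                    "image": "busybox",  # placeholder image
                    "command": ["sleep", "3600"],
                    "volumeMounts": [{"name": "file-vol", "mountPath": "/data"}],
                }
            ],
            "volumes": [
                {
                    "name": "file-vol",
                    "persistentVolumeClaim": {"claimName": claim_name},
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(file_volume_pod("app-1", "example-file-pvc", 1000, 1000), indent=2))
```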

Configuring the CSI Driver to Access vSAN File Service Clusters

Depending on the configuration, the CSI driver can provision file volumes on one or several vSAN clusters where the file service is enabled.

You can restrict access to only specific vSAN clusters where the file service is enabled. When deploying the Kubernetes cluster, configure the CSI driver with access to specific file service vSAN clusters. As a result, the CSI driver can provision the file volumes only on those vSAN clusters.

In the default configuration, the CSI driver uses any file service vSAN cluster available in vCenter Server for the file volume provisioning. The CSI driver does not verify which file service vSAN cluster is accessible while provisioning file volumes.
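
The difference between the default and the restricted configuration can be illustrated with a small model. This sketch only mirrors the behavior described above and is not the CSI driver's implementation. The function name, the cluster names, and the idea of passing an explicit allow list are hypothetical.

```python
def candidate_clusters(file_service_clusters, allowed=None):
    """Return the vSAN clusters eligible for file volume provisioning.

    file_service_clusters: clusters in vCenter Server with the file service enabled.
    allowed: optional list of clusters the driver is restricted to.
    """
    if not allowed:
        # Default behavior: any file service vSAN cluster may be used.
        return list(file_service_clusters)
    allowed_set = set(allowed)
    # Restricted behavior: only the explicitly configured clusters are used.
    return [c for c in file_service_clusters if c in allowed_set]


if __name__ == "__main__":
    clusters = ["cluster-a", "cluster-b", "cluster-c"]  # hypothetical names
    print(candidate_clusters(clusters))
    print(candidate_clusters(clusters, allowed=["cluster-b"]))
```

In the default case the model returns every cluster, including ones the Kubernetes nodes might not be able to reach, which is why restricting the driver to known-accessible clusters can be useful.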