When you enable vSphere IaaS control plane on vSphere clusters and they become Supervisors, a Kubernetes control plane is created inside the hypervisor layer. This layer contains specific objects that make it possible to run Kubernetes workloads within ESXi.

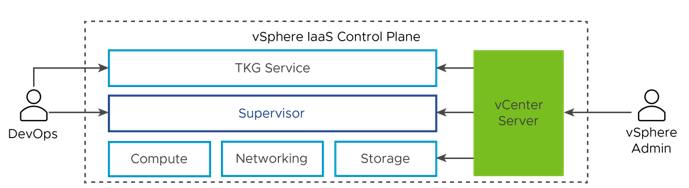

The diagram shows the vSphere IaaS control plane high-level architecture, with TKG on top, the Supervisor in the middle, then ESXi, networking, and storage at the bottom, and vCenter Server managing them.

A Supervisor runs on top of an SDDC layer that consists of ESXi for compute, NSX or VDS networking, and vSAN or another shared storage solution. Shared storage is used for persistent volumes for vSphere Pods and VMs running inside the Supervisor, and pods in a TKG cluster. After a Supervisor is created, as a vSphere administrator you can create vSphere Namespaces within the Supervisor. As a DevOps engineer, you can run workloads consisting of containers running inside vSphere Pods, deploy VMs through the VM service, and create TKG clusters.

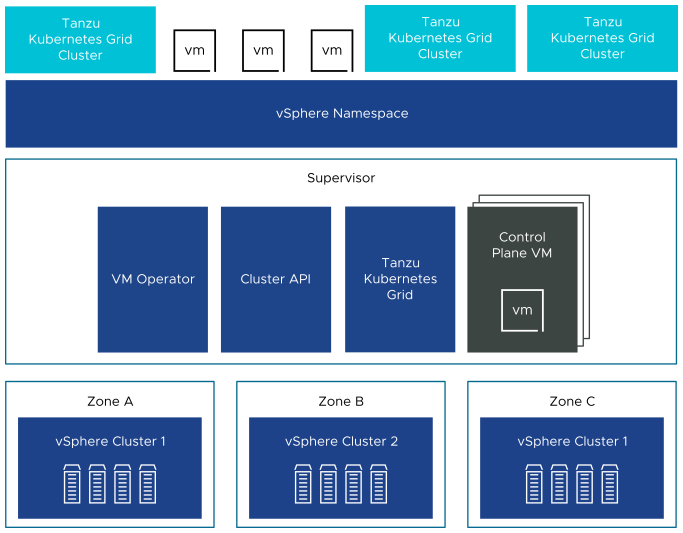

You can deploy a Supervisor on three vSphere Zones to provide cluster-level high availability that protects your Kubernetes workloads against cluster-level failure. A vSphere Zone maps to one vSphere cluster that you can set up as an independent failure domain. In a three-zone deployment, all three vSphere clusters become one Supervisor. You can also deploy a Supervisor on one vSphere cluster, which automatically creates a vSphere Zone and maps it to the cluster, unless you use a vSphere cluster that is already mapped to a zone. In a single-cluster deployment, the Supervisor has only host-level high availability, which is provided by vSphere HA.

On a three-zone Supervisor you can run Kubernetes workloads on TKG clusters and VMs created by using the VM service. A three-zone Supervisor has the following components:

- Supervisor control plane VM. Three Supervisor control plane VMs in total are created on the Supervisor. In a three-zone deployment, one control plane VM resides on each zone. The three Supervisor control plane VMs are load balanced, as each one of them has its own IP address. Additionally, a floating IP address is assigned to one of the VMs, and a fifth IP address is reserved for patching purposes. vSphere DRS determines the exact placement of the control plane VMs on the ESXi hosts that are part of the Supervisor and migrates them when needed.

- TKG and Cluster API. Modules that run on the Supervisor and enable the provisioning and management of TKG clusters.

- Virtual Machine Service. A module that is responsible for deploying and running stand-alone VMs and VMs that make up TKG clusters.
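The five control plane addresses described in the list above (three per-VM addresses, one floating address, and one reserved for patching) can be laid out as a small sketch. This is an illustration only: the helper name `plan_control_plane_ips`, the assumption that the addresses are consecutive, the order in which roles are assigned, and the documentation-range starting address are all hypothetical and not taken from this text.

```python
import ipaddress


def plan_control_plane_ips(start):
    """Hypothetical helper: list five addresses for the Supervisor control plane.

    ASSUMPTION: the five addresses are consecutive from `start`, and roles
    are assigned in the order below. Neither detail comes from this document.
    """
    first = ipaddress.ip_address(start)
    addrs = [str(first + offset) for offset in range(5)]
    return {
        "control_plane_vms": addrs[0:3],    # one address per control plane VM
        "floating_ip": addrs[3],            # assigned to one of the three VMs
        "reserved_for_patching": addrs[4],  # the fifth address
    }


# 192.0.2.0/24 is a documentation-only range, used here as a placeholder.
plan = plan_control_plane_ips("192.0.2.10")
for role, value in plan.items():
    print(role, value)
```

The point of the sketch is only the count: a Supervisor needs five control plane addresses in total, whichever way they are actually allocated.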

In a three-zone Supervisor, a namespace resource pool is created on each vSphere cluster that is mapped to a zone. The namespace spans all three vSphere clusters, one in each zone. The resources used by a namespace on a three-zone Supervisor are taken from all three underlying vSphere clusters in equal parts. For example, if you dedicate 300 MHz of CPU, 100 MHz are taken from each vSphere cluster.
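The equal split in the example above is simple arithmetic, sketched here for clarity. The function name `split_across_zones` and the zone labels are hypothetical; the sketch models only the even division described in the text, not how the Supervisor actually enforces it.

```python
def split_across_zones(total_mhz, zones):
    """Hypothetical helper: divide a namespace CPU allocation evenly.

    Models only the "equal parts" rule from the text; it is not a
    VMware API and ignores any rounding or limits a real system applies.
    """
    share = total_mhz / len(zones)
    return {zone: share for zone in zones}


# Placeholder zone names; the 300 MHz figure is the example from the text.
shares = split_across_zones(300, ["zone-1", "zone-2", "zone-3"])
print(shares)
```

With 300 MHz dedicated to the namespace and three zones, each underlying vSphere cluster contributes 100 MHz.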

A Supervisor deployed on a single vSphere cluster also has three control plane VMs, which reside on the ESXi hosts that are part of the cluster. On a single-cluster Supervisor, you can run vSphere Pods in addition to TKG clusters and VMs. vSphere DRS is integrated with the Kubernetes Scheduler on the Supervisor control plane VMs, so that DRS determines the placement of vSphere Pods. When you, as a DevOps engineer, schedule a vSphere Pod, the request goes through the regular Kubernetes workflow and then to DRS, which makes the final placement decision.

Because of the vSphere Pod support, a single-cluster Supervisor has the following additional components:

- Spherelet. An additional process called Spherelet is created on each host. It is a kubelet that is ported natively to ESXi and allows the ESXi host to become part of the Kubernetes cluster.

- Container Runtime Executive (CRX) component. CRX is similar to a VM from the perspective of Hostd and vCenter Server. CRX includes a paravirtualized Linux kernel that works together with the hypervisor. CRX uses the same hardware virtualization techniques as VMs and it has a VM boundary around it. A direct boot technique is used, which allows the Linux guest of CRX to initiate the main init process without passing through kernel initialization. This allows vSphere Pods to boot nearly as fast as containers.