If ESXi hosts in your vSphere IaaS control plane environment have one or more NVIDIA GRID GPU graphics devices, you can configure VMs to use the NVIDIA GRID virtual GPU (vGPU) technology. You can also configure other PCI devices on an ESXi host to make them available to a VM in passthrough mode.

Deploying a VM with vGPU in vSphere IaaS Control Plane

NVIDIA GRID GPU graphics devices are designed to run complex graphics operations at high performance without overloading the CPU. NVIDIA GRID vGPU shares a single physical GPU among multiple VMs, each of which sees its own vGPU-enabled passthrough device. This sharing delivers high graphics performance while improving cost-effectiveness and scalability.
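To make the sharing model concrete, the following sketch estimates how many VMs can share one physical GPU. It assumes a simplified model in which every vGPU on a GPU uses the same profile and each profile reserves a fixed slice of the GPU's frame buffer. The function name and the 16 GB / 4 GB figures are illustrative only; consult the NVIDIA Virtual GPU Software documentation for the real limits of each profile.

```python
def max_vgpus_per_gpu(gpu_framebuffer_gb: int, profile_framebuffer_gb: int) -> int:
    """Return how many equally sized vGPUs fit on one physical GPU.

    Simplified model (assumption): each vGPU reserves a fixed slice of the
    physical frame buffer, and all vGPUs on the GPU use the same profile.
    """
    if profile_framebuffer_gb <= 0:
        raise ValueError("profile frame buffer must be positive")
    if profile_framebuffer_gb > gpu_framebuffer_gb:
        # The profile does not fit on this GPU at all.
        return 0
    return gpu_framebuffer_gb // profile_framebuffer_gb


if __name__ == "__main__":
    # Hypothetical 16 GB GPU split into 4 GB profiles.
    print(max_vgpus_per_gpu(16, 4))
```

Under these assumptions, choosing a smaller profile lets more VMs share the GPU, at the cost of less frame buffer per VM.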

Considerations

- A three-zone Supervisor does not support VMs with vGPU.

- VMs with vGPU devices that are managed by VM Service are automatically powered off when an ESXi host enters maintenance mode. This might temporarily affect workloads running in the VMs. The VMs are automatically powered on after the host exits maintenance mode.

- DRS distributes vGPU VMs across the cluster's hosts in a breadth-first manner. For more information, see DRS Placement of vGPU VMs in the vSphere Resource Management guide.
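Breadth-first placement means that each new vGPU VM goes to the host that currently runs the fewest vGPU VMs, so the VMs spread across hosts instead of filling one host first. The sketch below illustrates only that idea. It is a simplified model, not the actual DRS algorithm, which also accounts for GPU capacity, vGPU profiles, and other resources; the function and host names are hypothetical.

```python
def place_breadth_first(vms: list[str], hosts: list[str]) -> dict[str, str]:
    """Assign each VM to the host that currently has the fewest vGPU VMs.

    Simplified illustration (assumption): host capacity and vGPU profiles
    are ignored. Ties are broken by the order of hosts in the input list.
    """
    if not hosts:
        raise ValueError("at least one host is required")
    load = {host: 0 for host in hosts}
    placement: dict[str, str] = {}
    for vm in vms:
        # min() returns the first host with the lowest load, keeping input order for ties.
        target = min(hosts, key=lambda h: load[h])
        placement[vm] = target
        load[target] += 1
    return placement


if __name__ == "__main__":
    print(place_breadth_first(["vm1", "vm2", "vm3", "vm4"], ["esx1", "esx2", "esx3"]))
```

With three hosts, the first three VMs land on different hosts and the fourth wraps around to the first host.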

Requirements

To configure NVIDIA vGPU, meet the following requirements:

- Verify that the ESXi host is supported in the VMware Compatibility Guide, and check with the vendor to verify that the host meets the power and configuration requirements.

- Configure the ESXi host graphics settings so that at least one device is in Shared Direct mode. See Configuring Host Graphics in the vSphere Resource Management documentation.

- The content library that you use for VMs with vGPU devices must include images whose boot mode is set to EFI, such as CentOS images.

- Install the NVIDIA vGPU software. NVIDIA provides a vGPU software package that includes the following components:

  - vGPU Manager, which a vSphere administrator installs on the ESXi host. See VMware Knowledge Base article 2033434.

  - Guest VM driver, which a DevOps engineer installs in the VM after deploying and booting the VM. See Install the NVIDIA Guest Driver in a VM in vSphere IaaS Control Plane.

  For more information, see the relevant NVIDIA Virtual GPU Software documentation.
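The requirements above can be read as a pre-flight checklist. The following sketch encodes three of them (a GPU device in Shared Direct mode, an installed vGPU Manager, and at least one EFI image in the content library) against a hypothetical data model. The `Host` and `Image` classes and the `"SharedDirect"` and `"EFI"` strings are illustrative assumptions, not a vSphere API; in practice you read these values from the vSphere Client or your own inventory tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Hypothetical view of an ESXi host's vGPU-related settings."""
    name: str
    # Graphics type of each GPU device, for example "SharedDirect" or "Shared".
    gpu_graphics_types: list[str] = field(default_factory=list)
    vgpu_manager_installed: bool = False


@dataclass
class Image:
    """Hypothetical content library image entry."""
    name: str
    boot_mode: str  # assumed values: "EFI" or "BIOS"


def check_requirements(host: Host, images: list[Image]) -> list[str]:
    """Return a list of unmet requirements; an empty list means all checks pass."""
    problems = []
    if "SharedDirect" not in host.gpu_graphics_types:
        problems.append(f"{host.name}: no GPU device in Shared Direct mode")
    if not host.vgpu_manager_installed:
        problems.append(f"{host.name}: NVIDIA vGPU Manager is not installed")
    # The content library must offer at least one image that boots with EFI.
    if not any(img.boot_mode.upper() == "EFI" for img in images):
        problems.append("content library has no image with EFI boot mode")
    return problems


if __name__ == "__main__":
    host = Host("esx1", ["SharedDirect"], vgpu_manager_installed=True)
    print(check_requirements(host, [Image("centos", "EFI")]))
```

Checks that depend on external sources, such as the VMware Compatibility Guide or vendor power requirements, are deliberately left out of this sketch.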

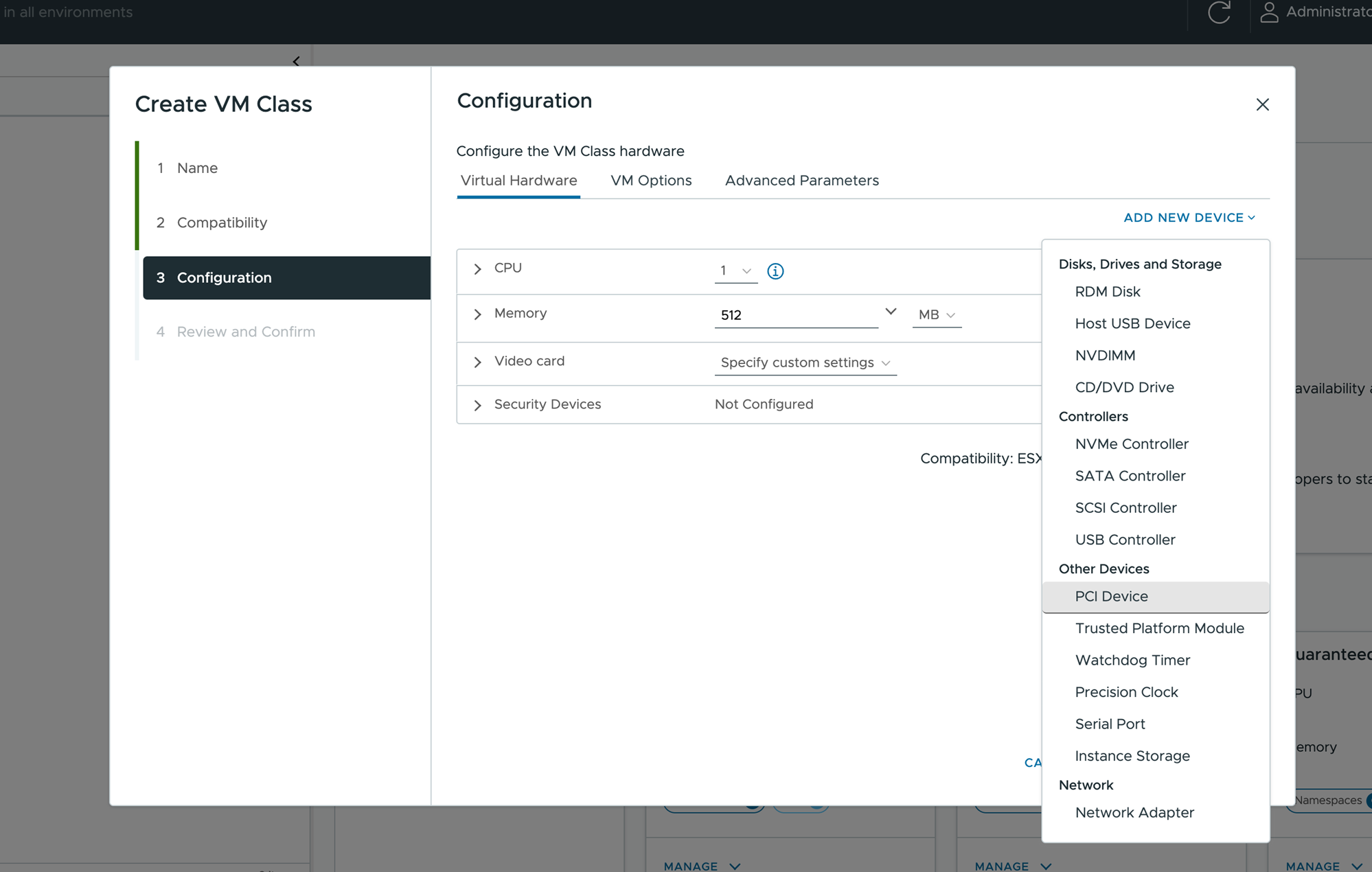

Add a vGPU Device to a VM Class Using the vSphere Client

Create a VM class, or edit an existing one, to add an NVIDIA GRID virtual GPU (vGPU) device.
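A VM class with a vGPU device is ultimately a Kubernetes resource in the Supervisor. The sketch below builds such a manifest as a Python dictionary. It assumes the field layout of the VM Operator `VirtualMachineClass` resource (`vmoperator.vmware.com/v1alpha1`, `spec.hardware.devices.vgpuDevices[].profileName`); field names can differ between API versions, so verify them against your Supervisor. The class name, sizing, and the `grid_t4-4q` profile are hypothetical examples.

```python
import json


def vgpu_vm_class(name: str, cpus: int, memory: str, profile: str) -> dict:
    """Build a VirtualMachineClass manifest with one vGPU device (sketch).

    Field names assume the VM Operator v1alpha1 API; check your Supervisor's version.
    """
    return {
        "apiVersion": "vmoperator.vmware.com/v1alpha1",
        "kind": "VirtualMachineClass",
        "metadata": {"name": name},
        "spec": {
            "hardware": {
                "cpus": cpus,
                "memory": memory,
                # One vGPU device, identified by its NVIDIA profile name.
                "devices": {"vgpuDevices": [{"profileName": profile}]},
            }
        },
    }


if __name__ == "__main__":
    # "grid_t4-4q" is an example profile name; use one that your GPU supports.
    print(json.dumps(vgpu_vm_class("vgpu-class", 4, "16Gi", "grid_t4-4q"), indent=2))
```

The dictionary can be serialized to JSON or YAML for inspection; the vSphere Client remains the supported way to create the class.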

Prerequisites

Procedure

Results

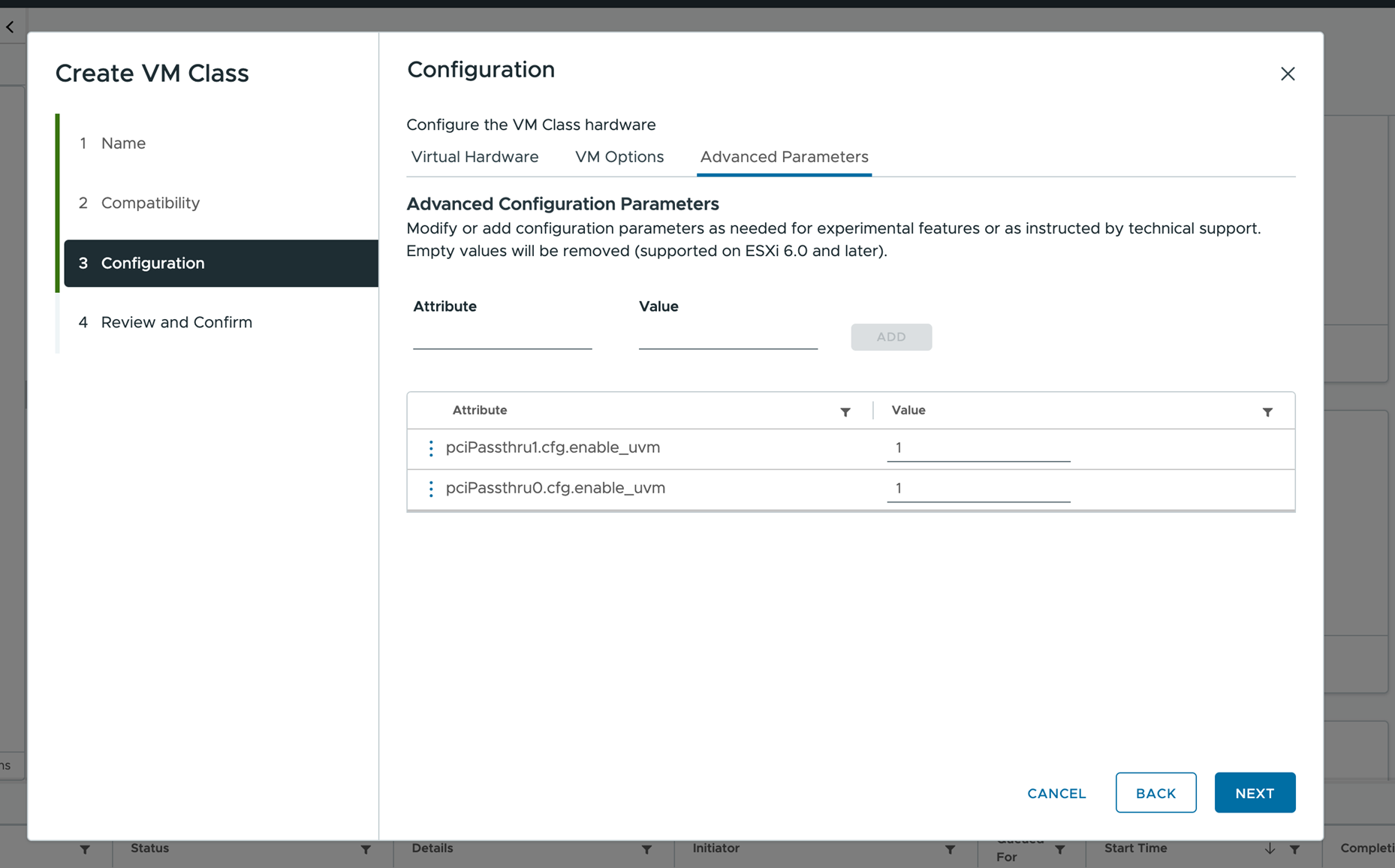

Add a vGPU Device to a VM Class Using Data Center CLI

In addition to the vSphere Client, you can use Data Center CLI (DCLI) commands to add vGPUs and advanced configurations.

For more information about DCLI commands, see Create and Manage VM Classes Using the Data Center CLI.
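When you add a vGPU through DCLI, the device is typically described as a virtual machine configuration specification. The sketch below generates such a specification as JSON, modeled on vSphere API types (`VirtualMachineConfigSpec`, `VirtualDeviceConfigSpec`, `VirtualPCIPassthrough`, and `VirtualPCIPassthroughVmiopBackingInfo` with its `vgpu` property) and the `_typeName` convention of the vSphere JSON format. Treat the exact payload shape that DCLI accepts as an assumption and confirm it in Create and Manage VM Classes Using the Data Center CLI. The profile name is a hypothetical example.

```python
import json


def vgpu_config_spec(profile: str) -> str:
    """Return a JSON VirtualMachineConfigSpec that adds one vGPU device (sketch)."""
    spec = {
        "_typeName": "VirtualMachineConfigSpec",
        "deviceChange": [
            {
                "_typeName": "VirtualDeviceConfigSpec",
                "operation": "add",
                "device": {
                    "_typeName": "VirtualPCIPassthrough",
                    # Temporary negative key for a new device (vSphere API convention).
                    "key": -100,
                    "backing": {
                        # vGPU backing: the device is identified by its profile name.
                        "_typeName": "VirtualPCIPassthroughVmiopBackingInfo",
                        "vgpu": profile,
                    },
                },
            }
        ],
    }
    return json.dumps(spec)


if __name__ == "__main__":
    print(vgpu_config_spec("grid_t4-4q"))
```

Generating the JSON in code avoids hand-quoting errors when the string is passed to a shell command.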

Procedure

Install the NVIDIA Guest Driver in a VM in vSphere IaaS Control Plane

If the VM includes a PCI device configured for vGPU, install the NVIDIA vGPU graphics driver after you create and boot the VM in your vSphere IaaS control plane environment. The driver is required to fully enable GPU operations.

Prerequisites

- Deploy the VM with vGPU. Make sure that the VM YAML file references the VM class that contains the vGPU definition. See Deploy a Virtual Machine in vSphere IaaS Control Plane.

- Verify that you downloaded the vGPU software package from the NVIDIA download site, uncompressed the package, and have the guest driver component ready. For more information, see the relevant NVIDIA Virtual GPU Software documentation.
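The first prerequisite depends on the VM YAML naming the vGPU VM class. The sketch below builds that manifest as a Python dictionary so that the relevant fields are easy to see. It assumes the field layout of the VM Operator `VirtualMachine` resource (`vmoperator.vmware.com/v1alpha1` with `className`, `imageName`, `storageClass`, and `powerState`); field names may differ across API versions. All names passed in the example are hypothetical.

```python
import json


def vgpu_vm_manifest(name: str, namespace: str, class_name: str,
                     image_name: str, storage_class: str) -> dict:
    """Build a VirtualMachine manifest that references a vGPU VM class (sketch).

    Field names assume the VM Operator v1alpha1 API; check your Supervisor's version.
    """
    return {
        "apiVersion": "vmoperator.vmware.com/v1alpha1",
        "kind": "VirtualMachine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Must name the VM class that carries the vGPU definition.
            "className": class_name,
            # Must be a content library image whose boot mode is EFI.
            "imageName": image_name,
            "storageClass": storage_class,
            "powerState": "poweredOn",
        },
    }


if __name__ == "__main__":
    vm = vgpu_vm_manifest("gpu-vm", "dev-ns", "vgpu-class", "centos-efi", "gold")
    print(json.dumps(vm, indent=2))
```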

Note: The version of the driver component must correspond to the version of the vGPU Manager that a vSphere administrator installed on the ESXi host.
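A quick way to catch a mismatch before installation is to compare the vGPU software release that each component came from. The sketch below assumes that "correspond" means both components belong to the same major vGPU software release (for example, `16.1` and `16.2`), and that you know the release number of each package, for example from the download page. This is a simplification: NVIDIA documents the exact compatibility rules, including any cross-release exceptions, in its Virtual GPU Software documentation. The function names are hypothetical.

```python
def release_branch(release: str) -> int:
    """Return the major number of a vGPU software release such as '16.1'."""
    return int(release.strip().split(".")[0])


def components_correspond(guest_driver_release: str, vgpu_manager_release: str) -> bool:
    """Assumption: components correspond when they share a major vGPU release."""
    return release_branch(guest_driver_release) == release_branch(vgpu_manager_release)


if __name__ == "__main__":
    print(components_correspond("16.2", "16.1"))
    print(components_correspond("17.0", "16.1"))
```

Comparing release numbers rather than raw driver version strings avoids false mismatches, because host, Linux guest, and Windows guest drivers in one release can carry different version numbers.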

Procedure

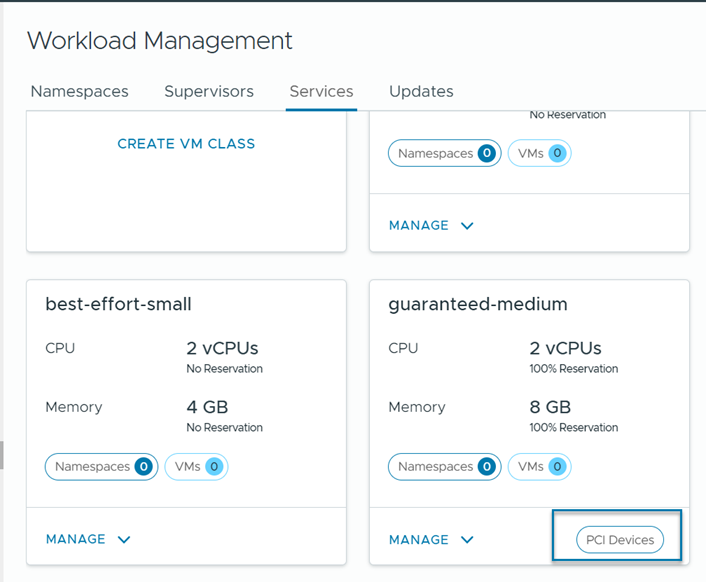

Deploying a VM with PCI Devices in vSphere IaaS Control Plane

In addition to vGPU, you can configure other PCI devices on an ESXi host to make them available to a VM in passthrough mode.
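A passthrough PCI device is usually identified by its PCI vendor ID and device ID. The sketch below adds such a device to a VM class manifest. It assumes the VM Operator `VirtualMachineClass` layout with `spec.hardware.devices.dynamicDirectPathIODevices[]` entries holding `vendorID`, `deviceID`, and `customLabel`; verify these names against your Supervisor's API version. The vendor ID `0x10de` is NVIDIA's PCI vendor ID, while the device ID, label, and class name in the example are hypothetical.

```python
import json


def parse_pci_id(value: str) -> int:
    """Convert a hexadecimal PCI ID such as '0x10de' or '10de' to an integer."""
    return int(value, 16)


def passthrough_vm_class(name: str, cpus: int, memory: str,
                         vendor_id: str, device_id: str, label: str = "") -> dict:
    """Build a VirtualMachineClass manifest with one passthrough PCI device (sketch)."""
    return {
        "apiVersion": "vmoperator.vmware.com/v1alpha1",
        "kind": "VirtualMachineClass",
        "metadata": {"name": name},
        "spec": {
            "hardware": {
                "cpus": cpus,
                "memory": memory,
                "devices": {
                    "dynamicDirectPathIODevices": [
                        {
                            # IDs are stored as integers in this assumed layout.
                            "vendorID": parse_pci_id(vendor_id),
                            "deviceID": parse_pci_id(device_id),
                            "customLabel": label,
                        }
                    ]
                },
            }
        },
    }


if __name__ == "__main__":
    cls = passthrough_vm_class("pci-class", 4, "16Gi", "0x10de", "0x1eb8", "gpu0")
    print(json.dumps(cls, indent=2))
```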