Writing a pipeline to install Tanzu Operations Manager

This how-to guide shows you how to write a pipeline for installing a new VMware Tanzu Operations Manager. If you already have a Tanzu Operations Manager VM, see Upgrading an existing Tanzu Operations Manager.

Prerequisites

Over the course of this guide, you will use Platform Automation Toolkit to create a pipeline using Concourse.

You need:

- For upgrade only: A running Tanzu Operations Manager VM that you would like to upgrade

- Credentials for an IaaS that Tanzu Operations Manager is compatible with

- It doesn't matter what IaaS you use for Tanzu Operations Manager, as long as your Concourse can connect to it. Pipelines built with Platform Automation Toolkit can be platform-agnostic.

- A Concourse instance with access to a CredHub instance and to the Internet

- A GitHub account

- Read/write credentials and bucket name for an S3 bucket

- An account on the Broadcom Support portal

- A MacOS workstation with:

- a text editor of your choice

- a terminal emulator of your choice

- a browser that works with Concourse, like Firefox or Chrome

- git installed

- Docker installed

It will be very helpful to have a basic familiarity with the following. If you don't have basic familiarity with all these things, you will find some basics explained here, along with links to resources to learn more:

Note While this guide uses GitHub to provide a git remote, and an S3 bucket as a blobstore, Platform Automation Toolkit supports arbitrary git providers and S3-compatible blobstores.

Specific examples are described in some detail, but if you follow along with different providers some details may be different. Also see Setting up S3 for file storage.

Similarly, in this guide, MacOS is assumed, but Linux should work well, too. Keep in mind that there might be differences in the paths that you will need to figure out.

Creating a Concourse pipeline

Platform Automation Toolkit's tasks and image are meant to be used in a Concourse pipeline.

Using your bash command-line client, create a directory to keep your pipeline files in, and cd into it.

mkdir your-repo-name

cd !$

This repo name should relate to your situation and be specific enough to be navigable from your local workstation.

Note !$ is a bash shortcut. Pronounced "bang, dollar-sign," it means "use the last argument from the most recent command." In this case, that's the directory you just created.
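History expansion such as !$ is generally only active in interactive shells, so it is not something to rely on inside a script. A minimal sketch of a script-friendly equivalent, assuming the same placeholder directory name your-repo-name (REPO_NAME is a variable name chosen here for illustration), stores the name in a variable instead:

```shell
# Script-friendly equivalent of `mkdir your-repo-name` followed by `cd !$`.
# REPO_NAME is a placeholder; substitute a name that fits your situation.
REPO_NAME="your-repo-name"

# -p avoids an error if the directory already exists.
mkdir -p "$REPO_NAME"
cd "$REPO_NAME" || exit 1

# Print the name of the directory the shell is now in.
basename "$PWD"
```

Typing the two commands by hand with !$ works just as well at an interactive prompt; the variable form is only useful if you later move these steps into a script.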

Gather variables to use in the pipeline (for upgrade only)

If you are upgrading, continue with the following. If not, skip to Creating a pipeline.

Before getting started with the pipeline, gather some variables in a file that you can use throughout your pipeline.

Open your text editor and create vars.yml. Here's what it should look like to start. You can add more variables as you go:

platform-automation-bucket: your-bucket-name

credhub-server: https://your-credhub.example.com

opsman-url: https://pcf.foundation.example.com

Important This example assumes that you are using DNS and host names. You can use IP addresses for all these resources instead, but you still need to provide the information as a URL, for example: https://120.121.123.124.
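Because the URL-valued entries must carry a scheme even when they use IP addresses, a quick check can catch a bare host name or IP before the pipeline does. The following is only a sketch: check_url_vars is a hypothetical helper, and it assumes the two URL keys from the example (credhub-server and opsman-url) each sit on a single line.

```shell
# check_url_vars FILE: report URL-valued keys that are missing or that lack
# an https:// scheme. Returns 0 when both keys look fine, 1 otherwise.
check_url_vars() {
  vars_file="$1"
  rc=0
  for key in credhub-server opsman-url; do
    # The key must be followed by an https:// URL with no embedded spaces.
    if ! grep -Eq "^${key}: "'https://[^[:space:]]+$' "$vars_file"; then
      echo "missing or non-URL value for: ${key}"
      rc=1
    fi
  done
  return "$rc"
}

# Only run the check when a vars.yml exists in the current directory.
if [ -f vars.yml ]; then
  if check_url_vars vars.yml; then
    echo "vars.yml URL values look consistent"
  fi
fi
```

The check is deliberately narrow: it does not parse YAML, so it would need adjusting if you quote the values or add more URL-valued keys.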

Creating a pipeline

Create a file called pipeline.yml.

The examples in this guide use pipeline.yml, but you might create multiple pipelines over time. If there's a more sensible name for the pipeline you're working on, feel free to use that instead.

Start the file as shown here. This is YAML for "the start of the document." It's optional, but traditional:

---

Now you have a valid YAML pipeline file.

Getting fly

First, try to set your new YAML file as a pipeline with fly, the Concourse command-line interface (CLI).

To check if you have fly installed:

fly -v

If it returns a version number, you're ready for the next steps. Skip ahead to Setting the pipeline.

If it says something like -bash: fly: command not found, you need to get fly.
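The same check can be written so that it prints a hint instead of a shell error. This is a small sketch; have_command is a hypothetical helper name, and the only fly invocation used is the fly -v shown above.

```shell
# have_command NAME: succeed if NAME resolves to something the shell can run.
have_command() {
  command -v "$1" >/dev/null 2>&1
}

if have_command fly; then
  # fly is installed; show its version.
  fly -v
else
  echo "fly not found on PATH; download it from your Concourse instance"
fi
```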

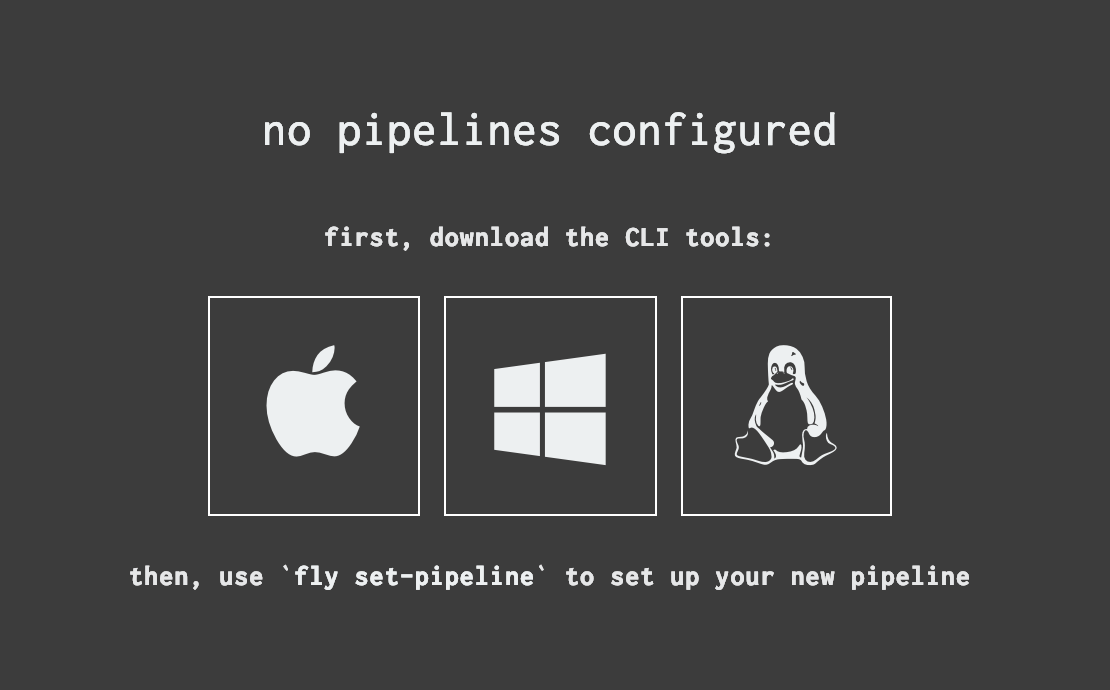

Navigate to the address for your Concourse instance in a web browser. At this point, you don't need to be signed in. If there are no public pipelines, you should see something like this:

If there are public pipelines, or if you're signed in and there are pipelines you can see, you'll see something similar in the lower-right hand corner.

Click the icon for your OS and save the file, move (mv) the resulting file to somewhere in your $PATH, and use chmod to make it executable:

Note About command-line examples: In some cases, you can copy-paste the examples directly into your terminal. Some of them won't work that way, or even if they did, would require you to edit them to replace our example values with your actual values. Best practice is to type all of the bash examples by hand, substituting values, if necessary, as you go. Don't forget that you can often hit the tab key to auto-complete the names of files that already exist; it makes all that typing just a little easier, and serves as a sort of command-line autocorrect.

Type the following into your terminal to get fly.

mv ~/Downloads/fly /usr/local/bin/fly

chmod +x !$

This means that you downloaded the fly binary and moved it into a directory on the bash PATH, which is where bash looks for things to execute when you type a command. Then you added permissions that allow it to be executed (+x). Now that the CLI is installed, you won't have to do this again, because fly has the ability to update itself (which is described in more detail in a later section).
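Moving the binary only helps if the destination is actually one of the directories the shell searches. A minimal sketch for checking that, assuming the /usr/local/bin destination from the example (dir_on_path is a hypothetical helper name):

```shell
# dir_on_path DIR: succeed if DIR is one of the colon-separated entries in PATH.
dir_on_path() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

TARGET_DIR="/usr/local/bin"   # destination used in the mv example above
if dir_on_path "$TARGET_DIR"; then
  echo "$TARGET_DIR is on PATH"
else
  echo "$TARGET_DIR is not on PATH; choose another directory or update PATH"
fi
```

Wrapping PATH in colons before matching means the first and last entries are handled the same way as the ones in the middle.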

Setting the pipeline

Now set your pipeline with fly, the Concourse CLI.

fly keeps a list of Concourses it knows how to talk to. To find out if the Concourse you need is already on the list, type:

fly targets

If you see the address of the Concourse you want to use in the list, note its name, and use it in the login command. The examples in this guide use the Concourse name control-plane.

fly -t control-plane login

If you don't see the Concourse you need, you can add it with the -c (--concourse-url) flag:

fly -t control-plane login -c https://your-concourse.example.com

You should see a login link you can click to complete login from your browser.

Important The -t flag sets the name when used with login and -c. In the future, you can leave out the -c argument.

If you ever want to know what a short flag stands for, you can run the command with -h (--help) at the end.

Time to set the pipeline. The examples here use the name "foundation" for this pipeline, but if your foundation has a name, use that instead.

fly -t control-plane set-pipeline -p foundation -c pipeline.yml

It should say no changes to apply, which is expected, since the pipeline.yml file is still empty.

Note If fly says something about a "version discrepancy," "significant" or otherwise, run fly sync and try again. fly sync automatically updates the CLI with the version that matches the Concourse you're targeting.

Your first job

Before running your pipeline the first time, turn your directory into a git repository.

This allows you to revert edits to your pipeline as needed. This is one of many reasons you should keep your pipeline under version control.

But first, git init

This section describes a step-by-step approach for getting set up with git.

For an example of the repository file structure for single and multiple foundation systems, see Why use Git and GitHub?

Run git init, then create an empty initial commit. git should come back with information about the commit you just created:

git init

git commit --allow-empty -m "Empty initial commit"

If this gives you a config error instead, you might need to configure git first. See First-Time Git Setup to complete the initial setup. When you have finished going through the steps in that guide, try again.

Now add your pipeline.yml.

git add pipeline.yml

git commit -m "Add pipeline"

If you are performing an upgrade, use this instead:

git add pipeline.yml vars.yml

git commit -m "Add pipeline and starter vars"

Check that everything is tidy:

git status

git should return nothing to commit, working tree clean.

When this is done, you can safely make changes.

Important git commits are the basic unit of code history. Making frequent, small commits with good commit messages makes it much easier to figure out why things are the way they are, and to return to the way things were in simpler, better times. Writing short commit messages that capture the intent of the change really does make the pipeline history much more legible, both to future-you, and to current and future teammates and collaborators.

The test task

Platform Automation Toolkit comes with a test task you can use to validate that it's been installed correctly.

Add the following to your pipeline.yml, starting on the line after the ---:

jobs:
- name: test
  plan:
  - task: test
    image: platform-automation-image
    file: platform-automation-tasks/tasks/test.yml

Try to set the pipeline now.

fly -t control-plane set-pipeline -p foundation -c pipeline.yml

Now you should be able to see your pipeline in the Concourse UI. It starts in the paused state, so click the play button to unpause it. Then click in to the gray box for the test job, and click the plus (+) button to schedule a build.

It should return an error immediately, with unknown artifact source: platform-automation-tasks. This is because there isn't a source for the task file yet.

This preparation has resulted in pipeline code that Concourse accepts.

Before starting the next step, make a commit:

git add pipeline.yml

git commit -m "Add (nonfunctional) test task"

Get the inputs you need by adding get steps to the plan before the task, as shown here:

jobs:
- name: test
  plan:
  - get: platform-automation-image
    resource: platform-automation
    params:
      globs: ["*image*.tgz"]
      unpack: true
  - get: platform-automation-tasks
    resource: platform-automation
    params:
      globs: ["*tasks*.zip"]
      unpack: true
  - task: test
    image: platform-automation-image
    file: platform-automation-tasks/tasks/test.yml

Note There is a smaller vSphere container image available. To use it instead of the general purpose image, you can use this glob to get the image:

- get: platform-automation-image
  resource: platform-automation
  params:
    globs: ["vsphere-platform-automation-image*.tar.gz"]
    unpack: true

Next, you might try to fly set this new pipeline. At this stage, you will see that it is not ready yet, and fly will return a message about invalid resources.

This is because you need to make the image and file available, so you need to set up some Resources.

Adding resources

Resources are Concourse's main approach to managing artifacts. You need an image and the tasks directory, so you need to tell Concourse how to get these things by declaring Resources for them.

In this case, you will download the image and the tasks directory from the Broadcom Support portal. Before you can declare the resources themselves, you must teach Concourse to talk to the Broadcom Support portal. (Many resource types are built in, but this one isn't.)

Add the following to your pipeline file, above the jobs entry.

resource_types:
- name: pivnet
  type: docker-image
  source:
    repository: pivotalcf/pivnet-resource
    tag: latest-final
resources:
- name: platform-automation
  type: pivnet
  source:
    product_slug: platform-automation
    api_token: ((pivnet-refresh-token))

The API token is a credential, which you pass in using the command line when setting the pipeline. You don't want to accidentally check it in.

Important In bash, commands that start with a space character are kept out of your history when the HISTCONTROL variable includes ignorespace or ignoreboth. This can be very useful for cases like this, where you want to pass a secret, but you don't want it saved. Commands in this guide that contain a secret start with a space, which can be easy to miss.
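Whether a leading space really keeps a command out of history depends on the shell's configuration, so it is worth checking before typing a secret. The sketch below assumes bash and its HISTCONTROL variable; history_ignores_space is a hypothetical helper name. Paste it into the interactive shell you plan to use, since HISTCONTROL is often not exported to scripts.

```shell
# history_ignores_space: succeed if HISTCONTROL tells bash to skip commands
# that begin with a space (ignorespace, or ignoreboth, which implies it).
history_ignores_space() {
  case ":${HISTCONTROL:-}:" in
    *:ignorespace:*|*:ignoreboth:*) return 0 ;;
    *) return 1 ;;
  esac
}

if history_ignores_space; then
  echo "space-prefixed commands will be kept out of history"
else
  echo "HISTCONTROL lacks ignorespace; consider: export HISTCONTROL=ignoreboth"
fi
```

Other shells use different settings for the same behavior, so treat this check as bash-specific.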

Get a refresh token from your Broadcom Support profile (when logged in, click your user name, then Edit Profile, and click Request New Refresh Token). Then use that token in the following command:

# note the space before the command
 fly -t control-plane set-pipeline \
   -p foundation \
   -c pipeline.yml \
   -v pivnet-refresh-token=your-api-token

Caution When you get your Broadcom Support token as described above, any previous Broadcom Support tokens you have stop working. If you're using your Broadcom Support refresh token anywhere, retrieve it from your existing secret storage rather than getting a new one, or you'll end up needing to update it everywhere it's used.

Go back to the Concourse UI and trigger another build. This time, it should pass.

Now it's time to commit.

git add pipeline.yml

git commit -m "Add resources needed for test task"

It's better not to pass the Broadcom Support token every time you need to set the pipeline. Fortunately, Concourse can integrate with secret storage services, like CredHub. In this step, put the API token in CredHub so Concourse can get it.

Note Backslashes in bash examples: The following example has been broken across multiple lines by using backslash characters (\) to escape the newlines. The backslash is used here to keep the examples readable. When you're typing these out, you can skip the backslashes and put it all on one line.

First, log in. Again, note the space at the start.

# note the starting space
 credhub login --server example.com \
   --client-name your-client-id \
   --client-secret your-client-secret

Note Depending on your credential type, you may need to pass client-id and client-secret, as we do above, or username and password. We use the client approach because that's the credential type that automation should usually be working with. Nominally, a username represents a person, and a client represents a system; this isn't always exactly how things are in practice. Use whichever type of credential you have in your case. Note that if you exclude either set of flags, CredHub will interactively prompt for username and password, and hide the characters of your password when you type them. This method of entry can be better in some situations.

Next, set the credential name to the path where Concourse will look for it:

# note the starting space
 credhub set \
   --name /concourse/your-team-name/pivnet-refresh-token \
   --type value \
   --value your-credhub-refresh-token

Now, set the pipeline again, without passing a secret this time.

fly -t control-plane set-pipeline \
  -p foundation \
  -c pipeline.yml

This should succeed, and the diff Concourse shows you should replace the literal credential with ((pivnet-refresh-token)).

Go to the UI again and re-run the test job; this should also succeed.

Downloading Tanzu Operations Manager

First, switch out the test job for one that downloads and installs Tanzu Operations Manager. Do this by changing:

- the name of the job

- the name of the task

- the file of the task

The first task in the job should be download-product. It has an additional required input: the config file download-product uses to talk to the Broadcom Support portal.

Before writing that file and making it available as a resource, get it (and reference it in the params) as if it's there.

It also has an additional output (the downloaded image). It will be used in a subsequent step, so you don't have to put it anywhere.

Finally, while it's fine for test to run in parallel, the install process shouldn't, so you also need to add serial: true to the job.

jobs:
- name: install-ops-manager
  serial: true
  plan:
  - get: platform-automation-image
    resource: platform-automation
    params:
      globs: ["*image*.tgz"]
      unpack: true
  - get: platform-automation-tasks
    resource: platform-automation
    params:
      globs: ["*tasks*.zip"]
      unpack: true
  - get: config
  - task: download-product
    image: platform-automation-image
    file: platform-automation-tasks/tasks/download-product.yml
    params:
      CONFIG_FILE: download-ops-manager.yml

If you try to fly this up to Concourse, it will again throw errors about resources that don't exist, so the next step is to make them. The first new resource you need is the config file.

Push your git repo to a remote on GitHub to make this (and later, other) configuration available to the pipelines. GitHub has good instructions you can follow to create a new repository on GitHub. You can skip over the part about using git init to set up your repo, since you did that earlier.

Now set up your remote and use git push to make it available. You will use this repository to hold your single foundation specific configuration. These instructions use the "Single repository for each Foundation" pattern to structure the configurations.

You must add the repository URL to CredHub so that you can reference it later when you declare the corresponding resource.

# note the starting space throughout
 credhub set \
   -n /concourse/your-team-name/foundation/pipeline-repo \
   -t value -v git@github.com:username/your-repo-name

download-ops-manager.yml holds creds for communicating with the Broadcom Support portal, and uniquely identifies a Tanzu Operations Manager image to download.

An example download-ops-manager.yml is shown below.

Create a download-ops-manager.yml for the IaaS you are using.

AWS

---

pivnet-api-token: ((pivnet_token))

pivnet-file-glob: "ops-manager-aws*.yml"

pivnet-product-slug: ops-manager

product-version-regex: ^2\.5\.\d+$

Azure

---

pivnet-api-token: ((pivnet_token))

pivnet-file-glob: "ops-manager-azure*.yml"

pivnet-product-slug: ops-manager

product-version-regex: ^2\.5\.\d+$

GCP

---

pivnet-api-token: ((pivnet_token))

pivnet-file-glob: "ops-manager-gcp*.yml"

pivnet-product-slug: ops-manager

product-version-regex: ^2\.5\.\d+$

OpenStack

---

pivnet-api-token: ((pivnet_token))

pivnet-file-glob: "ops-manager-openstack*.raw"

pivnet-product-slug: ops-manager

product-version-regex: ^2\.5\.\d+$

vSphere

---

pivnet-api-token: ((pivnet_token))

pivnet-file-glob: "ops-manager-vsphere*.ova"

pivnet-product-slug: ops-manager

product-version-regex: ^2\.5\.\d+$
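To see which versions a pattern like the product-version-regex above would select, you can try it against a few version strings. This is only an approximation: grep -E does not support \d, so the sketch substitutes [0-9], and both the helper name matches_product_version and the sample version strings are hypothetical.

```shell
# matches_product_version VERSION: succeed if VERSION matches ^2\.5\.[0-9]+$
# ([0-9] stands in for \d, which grep -E does not understand).
matches_product_version() {
  printf '%s\n' "$1" | grep -Eq '^2\.5\.[0-9]+$'
}

# Hypothetical version strings, used only to exercise the pattern.
for version in 2.5.0 2.5.27 2.6.1 2.5.3-build.2; do
  if matches_product_version "$version"; then
    echo "$version: selected"
  else
    echo "$version: skipped"
  fi
done
```

The anchors (^ and $) are what keep the pattern pinned to a single minor line; without them, a string that merely contains 2.5.1 somewhere would also match.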

Add and commit the new file:

git add download-ops-manager.yml

git commit -m "Add download-ops-manager file for foundation"

git push

Now that the download-ops-manager file you need is in git, you need to add a resource to tell Concourse how to get it as config.

Since this is (probably) a private repo, you need to create a deploy key Concourse can use to access it. Follow the GitHub instructions for creating a deploy key.

Then, put the private key in CredHub so you can use it in your pipeline:

# note the space at the beginning of the next line
 credhub set \
   --name /concourse/your-team-name/plat-auto-pipes-deploy-key \
   --type ssh \
   --private the/filepath/of/the/key-id_rsa \
   --public the/filepath/of/the/key-id_rsa.pub

Add this to the resources section of your pipeline file:

- name: config
  type: git
  source:
    uri: ((pipeline-repo))
    private_key: ((plat-auto-pipes-deploy-key.private_key))
    branch: main

Now place the Broadcom Support token in CredHub:

# note the starting space throughout
 credhub set \
   -n /concourse/your-team-name/foundation/pivnet_token \
   -t value -v your-pivnet-token

Note Notice the additional element to the cred paths: the foundation name.

If you look at Concourse lookup rules, you'll see that it searches the pipeline-specific path before the team path. Since our pipeline is named for the foundation it's used to manage, we can use this to scope access to our foundation-specific information to just this pipeline.

By contrast, the Broadcom Support portal token may be valuable across several pipelines (and associated foundations), so we scoped that to our team.

To perform interpolation in one of your input files, use the prepare-tasks-with-secrets task. In earlier steps, you relied on Concourse's native integration with CredHub for interpolation. That worked because you needed to use the variable in the pipeline itself, not in one of our inputs.

You can add it to your job after you have retrieved the download-ops-manager.yml input, but before the download-product task:

jobs:
- name: install-ops-manager
  serial: true
  plan:
  - get: platform-automation-image
    resource: platform-automation
    params:
      globs: ["*image*.tgz"]
      unpack: true
  - get: platform-automation-tasks
    resource: platform-automation
    params:
      globs: ["*tasks*.zip"]
      unpack: true
  - get: config
  - task: prepare-tasks-with-secrets
    image: platform-automation-image
    file: platform-automation-tasks/tasks/prepare-tasks-with-secrets.yml
    input_mapping:
      tasks: platform-automation-tasks
    output_mapping:
      tasks: platform-automation-tasks
    params:
      CONFIG_PATHS: config
  - task: download-product
    image: platform-automation-image
    file: platform-automation-tasks/tasks/download-product.yml
    params:
      CONFIG_FILE: download-ops-manager.yml

Notice the input mappings of the prepare-tasks-with-secrets task. This allows us to use the output of one task as an input of another.

An alternative to input_mapping is discussed in Configuration Management Strategies.

Now, the prepare-tasks-with-secrets task will find required credentials in the config files, and modify the tasks, so they will pull values from Concourse's integration of CredHub.

The job will download the product now. This is a good commit point.

git add pipeline.yml

git commit -m 'download the Ops Manager image'

git push
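The credential lookup order described in this section (pipeline-specific path first, then team path) can be sketched as a small helper that prints the candidate paths in the order Concourse would try them. This is an illustration only: it assumes the /concourse path prefix used throughout this guide, and credhub_lookup_paths is a hypothetical function, not part of fly or the CredHub CLI.

```shell
# credhub_lookup_paths TEAM PIPELINE VAR: print the CredHub paths checked
# for ((VAR)), in order, assuming the /concourse path prefix.
credhub_lookup_paths() {
  team="$1"
  pipeline="$2"
  var="$3"
  # Pipeline-scoped path, checked first.
  printf '/concourse/%s/%s/%s\n' "$team" "$pipeline" "$var"
  # Team-scoped path, used as the fallback.
  printf '/concourse/%s/%s\n' "$team" "$var"
}

# Placeholder team and pipeline names from this guide.
credhub_lookup_paths your-team-name foundation pivnet_token
```

Reading the output top to bottom shows why a foundation-specific secret stored under the pipeline path is invisible to other pipelines, while a team-level secret is shared by all of them.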

Creating resources for your Tanzu Operations Manager

Before Platform Automation Toolkit can create a VM for your Tanzu Operations Manager installation, there are certain resources required by the VM creation and Tanzu Operations Manager director installation processes. These resources are created directly on the IaaS of your choice, and read in as configuration for your Tanzu Operations Manager.

There are two main ways of creating these resources. Use the method that is right for you and your setup.

Terraform

These are open source terraforming files recommended for use because they are maintained by VMware. These files are found in the open source paving repo on GitHub.

What follows is the recommended way to get these resources set up. The output can be used directly in subsequent steps as property configuration.

The paving repo provides instructions for use in the README file. Any manual variables that you need to fill out are located in a terraform.tfvars file, in the folder for the IaaS you are using. For more specific instructions, see the README for that IaaS.

If there are specific aspects of the paving repo that do not work for you, you can override some of the properties using an override.tf file.

Follow these steps to use the paving repository:

Clone the repo on the command line:

cd ../

git clone https://github.com/pivotal/paving.git

In the checked out repository there are directories for each IaaS. Copy the terraform templates for the infrastructure of your choice to a new directory outside of the paving repo, so you can modify it:

# cp -Ra paving/${IAAS} paving-${IAAS}

mkdir paving-${IAAS}

cp -a paving/$IAAS/. paving-$IAAS

cd paving-${IAAS}

IAAS must be set to match one of the infrastructure directories at the top level of the paving repo; for example, aws, azure, gcp, or nsxt.

In the new directory, the terraform.tfvars.example file shows what values are required for that IaaS. Remove the .example from the filename, and replace the examples with real values.

Initialize Terraform, which will download the required IaaS providers.

terraform init

Run terraform refresh to update the state with what currently exists on the IaaS.

terraform refresh \
  -var-file=terraform.tfvars

Next, you can run terraform plan to see what changes will be made to the infrastructure on the IaaS.

terraform plan \
  -out=terraform.tfplan \
  -var-file=terraform.tfvars

Finally, you can run terraform apply to create the required infrastructure on the IaaS.

terraform apply \
  -parallelism=5 \
  terraform.tfplan

Save the output from terraform output stable_config into a vars.yml file in your-repo-name for future use:

terraform output stable_config > ../your-repo-name/vars.yml

Return to your working directory for the post-terraform steps:

cd ../your-repo-name

Commit and push the updated vars.yml file:

git add vars.yml

git commit -m "Update vars.yml with terraform output"

git push

Manual installation

VMware has extensive documentation to manually create the resources needed if you are unable or do not wish to use Terraform. As with the Terraform solution, however, there are different docs depending on the IaaS you are installing Tanzu Operations Manager onto.

When going through the documentation required for your IaaS, be sure to stop before deploying the Tanzu Operations Manager image. Platform Automation Toolkit will do this for you.

Note If you need to install an earlier version of Tanzu Operations Manager, select your desired version from the version selector at the top of the page.

Creating the Tanzu Operations Manager VM

Now that you have a Tanzu Operations Manager image and the resources required to deploy a VM, you can add the new task to the install-ops-manager job.

jobs:
- name: install-ops-manager
  serial: true
  plan:
  - get: platform-automation-image
    resource: platform-automation
    params:
      globs: ["*image*.tgz"]
      unpack: true
  - get: platform-automation-tasks
    resource: platform-automation
    params:
      globs: ["*tasks*.zip"]
      unpack: true
  - get: config
  - task: prepare-tasks-with-secrets
    image: platform-automation-image
    file: platform-automation-tasks/tasks/prepare-tasks-with-secrets.yml
    input_mapping:
      tasks: platform-automation-tasks
    output_mapping:
      tasks: platform-automation-tasks
    params:
      CONFIG_PATHS: config
  - task: download-product
    image: platform-automation-image
    file: platform-automation-tasks/tasks/download-product.yml
    params:
      CONFIG_FILE: download-ops-manager.yml
  - task: create-vm
    image: platform-automation-image
    file: platform-automation-tasks/tasks/create-vm.yml

If you try to fly this up to Concourse, it will again complain about resources that don't exist, so it's time to make them. Two new inputs need to be added for create-vm:

- config

- state

The optional inputs are vars used with the config, so you will add those when you do the config.

For the config file, write a Tanzu Operations Manager VM Configuration file to opsman.yml.

The properties available vary by IaaS, for example:

- IaaS credentials

- networking setup (IP address, subnet, security group, etc)

- SSH key

- datacenter/availability zone/region

Continue with the next section for completing the opsman.yml file.

Terraform outputs

If you used the paving repository from the Creating resources for your Tanzu Operations Manager section, the following steps will result in a filled out opsman.yml.

Tanzu Operations Manager must be deployed with the IaaS-specific configuration.

Copy and paste the relevant YAML below for your IaaS, and save the file as opsman.yml.

AWS

--8<-- "external/paving/ci/configuration/aws/ops-manager.yml"

Azure

--8<-- "external/paving/ci/configuration/azure/ops-manager.yml"

GCP

--8<-- "external/paving/ci/configuration/gcp/ops-manager.yml"

vSphere+NSXT

--8<-- "external/paving/ci/configuration/nsxt/ops-manager.yml"

Where:

- The ((parameters)) in these examples map to outputs from the terraform-outputs.yml, which can be provided via vars file for YAML interpolation in a subsequent step.

Note For a supported IaaS not listed above, see the Operations Manager config.
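Since every ((parameter)) in opsman.yml has to be supplied by a vars file or by CredHub, it can help to list the placeholders a config file actually contains. The sketch below is a rough aid rather than a YAML parser; list_placeholders is a hypothetical helper, and the sample file content and its variable names are made up for illustration.

```shell
# list_placeholders FILE: print each distinct ((name)) placeholder in FILE.
list_placeholders() {
  grep -oE '\(\([A-Za-z0-9_.-]+\)\)' "$1" | sort -u
}

# Hypothetical sample; real opsman.yml content depends on your IaaS.
SAMPLE_FILE="$(mktemp)"
cat > "$SAMPLE_FILE" <<'EOF'
opsman-configuration:
  aws:
    region: ((region))
    vpc_subnet_id: ((ops_manager_subnet_id))
    key_pair_name: ((ops_manager_key_pair_name))
EOF

list_placeholders "$SAMPLE_FILE"
rm -f "$SAMPLE_FILE"
```

Comparing this list against the keys in your vars file is a quick way to spot a placeholder that nothing will fill in.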

Manual configuration

If you created your infrastructure manually or would like additional configuration options, these are the acceptable keys for the opsman.yml file for each IaaS.

AWS

---
opsman-configuration:
  aws:
    region: us-west-2
    vpc_subnet_id: subnet-0292bc845215c2cbf
    security_group_ids: [ sg-0354f804ba7c4bc41 ]
    key_pair_name: ops-manager-key  # used to SSH to VM
    iam_instance_profile_name: env_ops_manager

    # At least one IP address (public or private) needs to be assigned to the
    # VM. It is also permissible to assign both.
    public_ip: 1.2.3.4      # Reserved Elastic IP
    private_ip: 10.0.0.2

    # Optional
    # vm_name: ops-manager-vm          # default - ops-manager-vm
    # boot_disk_size: 100              # default - 200 (GB)
    # instance_type: m5.large          # default - m5.large
    #                                  # NOTE - not all regions support m5.large
    # assume_role: "arn:aws:iam::..."  # necessary if a role is needed to authorize
    #                                  # the OpsMan VM instance profile
    # tags: {key: value}               # key-value pair of tags assigned to the
    #                                  # Ops Manager VM

    # Omit if using instance profiles
    # And instance profile OR access_key/secret_access_key is required
    # access_key_id: ((access-key-id))
    # secret_access_key: ((secret-access-key))

    # security_group_id: sg-123        # DEPRECATED - use security_group_ids
    # use_instance_profile: true       # DEPRECATED - will use instance profile for
    #                                  # execution VM if access_key_id and
    #                                  # secret_access_key are not set

# Optional Ops Manager UI Settings for upgrade-opsman
# ssl-certificate: ...
# pivotal-network-settings: ...
# banner-settings: ...
# syslog-settings: ...
# rbac-settings: ...

Azure

---
opsman-configuration:
  azure:
    tenant_id: 3e52862f-a01e-4b97-98d5-f31a409df682
    subscription_id: 90f35f10-ea9e-4e80-aac4-d6778b995532
    client_id: 5782deb6-9195-4827-83ae-a13fda90aa0d
    client_secret: ((opsman-client-secret))
    location: westus
    resource_group: res-group
    storage_account: opsman                       # account name of container
    ssh_public_key: ssh-rsa AAAAB3NzaC1yc2EAZ...  # ssh key to access VM

    # Note that there are several environment-specific details in this path
    # This path can reach out to other resource groups if necessary
    subnet_id: /subscriptions/
      /resourceGroups/
      /providers/Microsoft.Network/virtualNetworks/
      /subnets/

    # At least one IP address (public or private) needs to be assigned
    # to the VM. It is also permissible to assign both.
    private_ip: 10.0.0.3
    public_ip: 1.2.3.4

    # Optional
    # cloud_name: AzureCloud          # default - AzureCloud
    # storage_key: ((storage-key))    # only required if your client does not
    #                                 # have the needed storage permissions
    # container: opsmanagerimage      # storage account container name
    #                                 # default - opsmanagerimage
    # network_security_group: ops-manager-security-group
    # vm_name: ops-manager-vm         # default - ops-manager-vm
    # boot_disk_size: 200             # default - 200 (GB)
    # use_managed_disk: true          # this flag is only respected by the
    #                                 # create-vm and upgrade-opsman commands.
    #                                 # set to false if you want to create
    #                                 # the new opsman VM with an unmanaged
    #                                 # disk (not recommended). default - true
    # storage_sku: Premium_LRS        # this sets the SKU of the storage account
    #                                 # for the disk
    #                                 # Allowed values: Standard_LRS, Premium_LRS,
    #                                 # StandardSSD_LRS, UltraSSD_LRS
    # vm_size: Standard_DS1_v2        # the size of the Ops Manager VM
    #                                 # default - Standard_DS2_v2
    #                                 # Allowed values: https://docs.microsoft.com/en-us/azure/virtual-machines/linux/sizes-general
    # tags: Project=ECommerce         # Space-separated tags: key[=value] [key[=value] ...]. Use '' to
    #                                 # clear existing tags.
    # vpc_subnet: /subscriptions/...  # DEPRECATED - use subnet_id
    # use_unmanaged_disk: false       # DEPRECATED - use use_managed_disk

# Optional Ops Manager UI Settings for upgrade-opsman
# ssl-certificate: ...
# pivotal-network-settings: ...
# banner-settings: ...
# syslog-settings: ...
# rbac-settings: ...

GCP

---
opsman-configuration:
  gcp:
    # Either gcp_service_account_name or gcp_service_account json is required
    # You must remove whichever you don't use
    gcp_service_account_name: [email protected]
    gcp_service_account: ((gcp-service-account-key-json))

    project: project-id
    region: us-central1
    zone: us-central1-b
    vpc_subnet: infrastructure-subnet

    # At least one IP address (public or private) needs to be assigned to the
    # VM. It is also permissible to assign both.
    public_ip: 1.2.3.4
    private_ip: 10.0.0.2

    ssh_public_key: ssh-rsa some-public-key...  # RECOMMENDED, but not required
    tags: ops-manager                           # RECOMMENDED, but not required

    # Optional
    # vm_name: ops-manager-vm      # default - ops-manager-vm
    # custom_cpu: 2                # default - 2
    # custom_memory: 8             # default - 8
    # boot_disk_size: 100          # default - 100
    # scopes: ["my-scope"]
    # hostname: custom.hostname    # info: https://cloud.google.com/compute/docs/instances/custom-hostname-vm

# Optional Ops Manager UI Settings for upgrade-opsman
# ssl-certificate: ...
# pivotal-network-settings: ...
# banner-settings: ...
# syslog-settings: ...
# rbac-settings: ...

OpenStack

---
opsman-configuration:
  openstack:
    project_name: project
    auth_url: http://os.example.com:5000/v2.0
    username: ((opsman-openstack-username))
    password: ((opsman-openstack-password))
    net_id: 26a13112-b6c2-11e8-96f8-529269fb1459
    security_group_name: opsman-sec-group
    key_pair_name: opsman-keypair

    # At least one IP address (public or private) needs to be assigned to the VM.
    public_ip: 1.2.3.4 # must be an already allocated floating IP
    private_ip: 10.0.0.3

    # Optional
    # availability_zone: zone-01
    # project_domain_name: default
    # user_domain_name: default
    # vm_name: ops-manager-vm       # default - ops-manager-vm
    # flavor: m1.xlarge             # default - m1.xlarge
    # identity_api_version: 2       # default - 3
    # insecure: true                # default - false

    # Optional Ops Manager UI Settings for upgrade-opsman
    # ssl-certificate: ...
    # pivotal-network-settings: ...
    # banner-settings: ...
    # syslog-settings: ...
    # rbac-settings: ...
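
The OpenStack example points auth_url at a v2.0 endpoint, while the listed default for identity_api_version is 3. As a sketch only, a configuration for an identity v3 endpoint might look like the following. The /v3 URL and the use of the two domain keys are assumptions based on common OpenStack setups, not settings confirmed by this guide.

```yaml
---
opsman-configuration:
  openstack:
    project_name: project
    # Assumption: the identity service exposes a v3 endpoint at this path.
    auth_url: http://os.example.com:5000/v3
    username: ((opsman-openstack-username))
    password: ((opsman-openstack-password))
    # Assumption: v3 authentication is scoped by domain, so the optional
    # domain keys from the example are set explicitly.
    project_domain_name: default
    user_domain_name: default
    net_id: 26a13112-b6c2-11e8-96f8-529269fb1459
    security_group_name: opsman-sec-group
    key_pair_name: opsman-keypair
    private_ip: 10.0.0.3
```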

vSphere

---
opsman-configuration:
  vsphere:
    vcenter:
      ca_cert: cert                 # REQUIRED if insecure = 0 (secure)
      datacenter: example-dc
      datastore: example-ds-1
      folder: /example-dc/vm/Folder # RECOMMENDED, but not required
      url: vcenter.example.com
      username: ((vcenter-username))
      password: ((vcenter-password))
      resource_pool: /example-dc/host/example-cluster/Resources/example-pool
      # resource_pool can use a cluster - /example-dc/host/example-cluster

      # Optional
      # host: host                  # DEPRECATED - Platform Automation cannot guarantee
      #                             # the location of the VM, given the nature of vSphere
      # insecure: 0                 # default - 0 (secure) | 1 (insecure)

    disk_type: thin                 # thin|thick
    dns: 8.8.8.8
    gateway: 192.168.10.1
    hostname: ops-manager.example.com
    netmask: 255.255.255.192
    network: example-virtual-network
    ntp: ntp.ubuntu.com
    private_ip: 10.0.0.10
    ssh_public_key: ssh-rsa ......  # REQUIRED Ops Manager >= 2.6

    # Optional
    # cpu: 1                         # default - 1
    # memory: 8                      # default - 8 (GB)
    # ssh_password: ((ssh-password)) # REQUIRED if ssh_public_key not defined
    #                                # (Ops Manager < 2.6 ONLY)
    # vm_name: ops-manager-vm        # default - ops-manager-vm
    # disk_size: 200                 # default - 160 (GB), only larger values allowed

    # Optional Ops Manager UI Settings for upgrade-opsman
    # ssl-certificate: ...
    # pivotal-network-settings: ...
    # banner-settings: ...
    # syslog-settings: ...
    # rbac-settings: ...

Using the Tanzu Operations Manager config file

After you have your config file, commit and push it:

    git add opsman.yml
    git commit -m "Add opsman config"
    git push

The state input is a placeholder which will be filled in by the create-vm task output. It is used later to keep track of the VM so that it can be upgraded, which is covered in Upgrading an existing Tanzu Operations Manager.

Add the following to the resources section of your pipeline.yml:

    - name: vars
      type: git
      source:
        uri: ((pipeline-repo))
        private_key: ((plat-auto-pipes-deploy-key.private_key))
        branch: main

This resource definition allows create-vm to use the variables from vars.yml in the opsman.yml file.

Update the create-vm task in the install-ops-manager job to use the download-product image, the Tanzu Operations Manager configuration file, the variables file, and the placeholder state file:

    jobs:
    - name: install-ops-manager
      serial: true
      plan:
        - get: platform-automation-image
          resource: platform-automation
          params:
            globs: ["*image*.tgz"]
            unpack: true
        - get: platform-automation-tasks
          resource: platform-automation
          params:
            globs: ["*tasks*.zip"]
            unpack: true
        - get: config
        - get: vars
        - task: prepare-tasks-with-secrets
          file: platform-automation-tasks/tasks/prepare-tasks-with-secrets.yml
          input_mapping:
            tasks: platform-automation-tasks
          output_mapping:
            tasks: platform-automation-tasks
          params:
            CONFIG_PATHS: config
        - task: download-product
          image: platform-automation-image
          file: platform-automation-tasks/tasks/download-product.yml
          params:
            CONFIG_FILE: download-ops-manager.yml
        - task: create-vm
          image: platform-automation-image
          file: platform-automation-tasks/tasks/create-vm.yml
          params:
            VARS_FILES: vars/vars.yml
          input_mapping:
            state: config
            image: downloaded-product

Note Defaults for tasks: We do not explicitly set the default parameters for create-vm in this example. Because opsman.yml is the default value of OPSMAN_CONFIG_FILE, it is redundant to set this parameter in the pipeline. See the Task reference for available and default parameters.

Now set the pipeline.
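
To illustrate how the vars input is used, here is a sketch of a vars.yml file holding non-secret values. The key names are hypothetical and do not appear in the original example. Each key would fill a matching ((key)) placeholder in opsman.yml when create-vm runs with VARS_FILES set to vars/vars.yml.

```yaml
# vars.yml (hypothetical keys, shown for illustration only)
# A placeholder such as ((opsman-private-ip)) in opsman.yml would be
# replaced with the value given here.
opsman-private-ip: 10.0.0.2
opsman-vm-name: ops-manager-vm
```

Keeping values like these in a separate file means the same opsman.yml can be reused across foundations by changing only vars.yml.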

Before you run the job, ensure that state.yml is always persisted, regardless of whether the install-ops-manager job fails or passes. To do this, add the following ensure section to the create-vm task in the job:

    jobs:
    - name: install-ops-manager
      serial: true
      plan:
        - get: platform-automation-image
          resource: platform-automation
          params:
            globs: ["*image*.tgz"]
            unpack: true
        - get: platform-automation-tasks
          resource: platform-automation
          params:
            globs: ["*tasks*.zip"]
            unpack: true
        - get: config
        - get: vars
        - task: prepare-tasks-with-secrets
          file: platform-automation-tasks/tasks/prepare-tasks-with-secrets.yml
          input_mapping:
            tasks: platform-automation-tasks
          output_mapping:
            tasks: platform-automation-tasks
          params:
            CONFIG_PATHS: config
        - task: download-product
          image: platform-automation-image
          file: platform-automation-tasks/tasks/download-product.yml
          params:
            CONFIG_FILE: download-ops-manager.yml
        - task: create-vm
          image: platform-automation-image
          file: platform-automation-tasks/tasks/create-vm.yml
          params:
            VARS_FILES: vars/vars.yml
          input_mapping:
            state: config
            image: downloaded-product
          ensure:
            do:
              - task: make-commit
                image: platform-automation-image
                file: platform-automation-tasks/tasks/make-git-commit.yml
                input_mapping:
                  repository: config
                  file-source: generated-state
                output_mapping:
                  repository-commit: config-commit
                params:
                  FILE_SOURCE_PATH: state.yml
                  FILE_DESTINATION_PATH: state.yml
                  GIT_AUTHOR_EMAIL: "[email protected]"
                  GIT_AUTHOR_NAME: "Platform Automation Toolkit Bot"
                  COMMIT_MESSAGE: 'Update state file'
              - put: config
                params:
                  repository: config-commit
                  merge: true

Set the pipeline one final time, run the job, and see it pass.

    fly -t control-plane set-pipeline \
      -p foundation \
      -c pipeline.yml

Commit the final changes to your repository.

    git add pipeline.yml
    git commit -m "Install Ops Manager in CI"
    git push

Your install pipeline is now complete.
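
For reference, the state file that the make-commit task pushes back to the config repository is a small YAML document. The following is a sketch of what it might contain after a successful run, assuming the GCP configuration and the default vm_name. The exact form of vm_id is an assumption and varies by IaaS.

```yaml
# state.yml (illustrative; values depend on your IaaS and VM)
iaas: gcp
# Assumption: on GCP the VM is identified by its name.
vm_id: ops-manager-vm
```

Because later jobs read this file to find the existing VM, it is worth checking that the commit appears in the repository after the first run.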