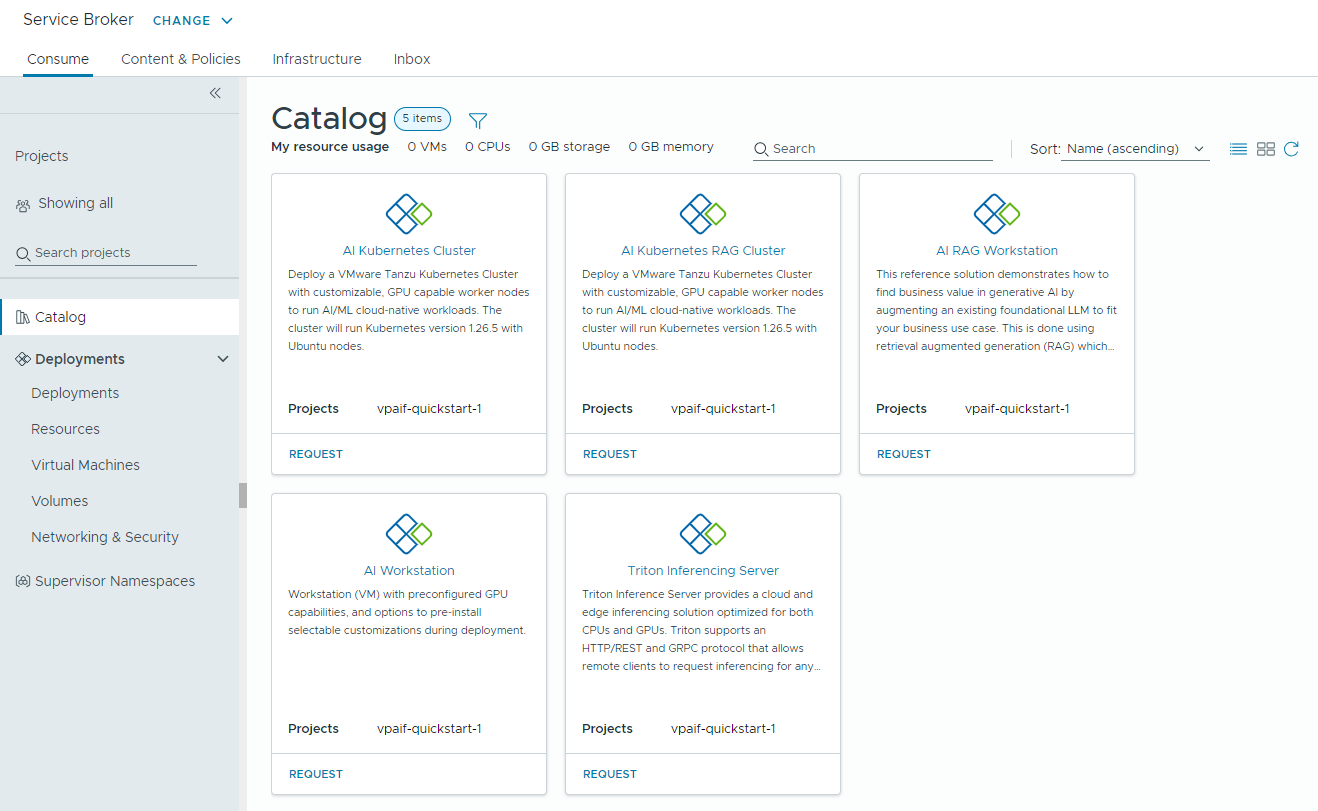

If your cloud administrator has set up Private AI Automation Services in VMware Aria Automation, you can access and request AI workloads using the Automation Service Broker catalog.

- AI Workstation – a GPU-enabled virtual machine that can be configured with desired vCPU, vGPU, memory, and the option to pre-install AI/ML frameworks like PyTorch, CUDA Samples, and TensorFlow.

- AI RAG Workstation – a GPU-enabled virtual machine with a Retrieval Augmented Generation (RAG) reference solution.

- Triton Inference Server – a GPU-enabled virtual machine with NVIDIA Triton Inference Server.

- AI Kubernetes Cluster – a VMware Tanzu Kubernetes Grid Cluster with GPU-capable worker nodes to run AI/ML cloud-native workloads.

- AI Kubernetes RAG Cluster – a VMware Tanzu Kubernetes Grid Cluster with GPU-capable worker nodes to run a reference RAG solution.

Before you begin

- Verify that your cloud administrator has configured Private AI Automation Services for your project.

- Verify that you have permissions to request AI catalog items.

How do I access the Private AI Automation Services catalog items

In Automation Service Broker, open the Consume tab and then click Catalog. The catalog items that are available to you are based on the project you selected. If you didn't select a project, all catalog items that are available to you appear in the catalog.
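If you prefer to script the same lookup, the following minimal sketch shows how the project filter could be expressed as a REST query. It assumes that your environment exposes the Automation Service Broker catalog API at `/catalog/api/items` and that the `projects` and `search` query parameters are available in your version. The host name, project ID, and the function name `catalog_items_url` are hypothetical. The sketch only builds the URL. Sending the request also requires a bearer token, which is not shown here.

```python
from urllib.parse import urlencode


def catalog_items_url(base_url, project_id=None, search=None):
    """Build a URL for listing catalog items.

    Assumption: the catalog endpoint is /catalog/api/items and accepts
    'projects' and 'search' query parameters. Verify against the API
    documentation for your Aria Automation version.
    """
    params = {}
    if project_id:
        # Scope the result to one project, like selecting a project in the UI.
        params["projects"] = project_id
    if search:
        # Narrow the result by catalog item name.
        params["search"] = search
    query = f"?{urlencode(params)}" if params else ""
    return f"{base_url.rstrip('/')}/catalog/api/items{query}"


# Hypothetical host and project ID, for illustration only.
print(catalog_items_url("https://aria.example.com", "project-123", "AI Workstation"))
```

When no project is passed, the URL carries no filter, which mirrors the catalog behavior when no project is selected.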

Remember that all values shown in the procedures described in this section are use case samples. Your account values depend on your environment.

How do I monitor my Private AI deployments

Use the Deployments page in Automation Service Broker to manage your deployments and their associated resources. On this page, you can change deployments, troubleshoot failed deployments, change resources, and destroy unused deployments.

To manage your deployments, select .

For more information, see How do I manage my Automation Service Broker deployments.
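For scripted monitoring, the following sketch shows one way to pick failed deployments out of a deployment listing. It assumes a response shaped like the one returned by the deployment API (`/deployment/api/deployments`), with a `content` array whose entries carry `name` and `status` fields, and status values that end in `_FAILED` for failed operations. The sample payload and the function name `failed_deployments` are hypothetical. Check the actual response format in your environment.

```python
def failed_deployments(payload):
    """Return the names of deployments whose status ends in _FAILED.

    Assumption: 'payload' is a parsed JSON response with a 'content' list
    of deployments, each with 'name' and 'status' fields.
    """
    return [
        item.get("name", "<unnamed>")
        for item in payload.get("content", [])
        if str(item.get("status", "")).endswith("_FAILED")
    ]


# Hypothetical sample data, not output from a real environment.
sample = {
    "content": [
        {"name": "ai-workstation-01", "status": "CREATE_SUCCESSFUL"},
        {"name": "triton-01", "status": "CREATE_FAILED"},
    ]
}
print(failed_deployments(sample))
```

The names returned by this filter can then be looked up on the Deployments page for troubleshooting.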