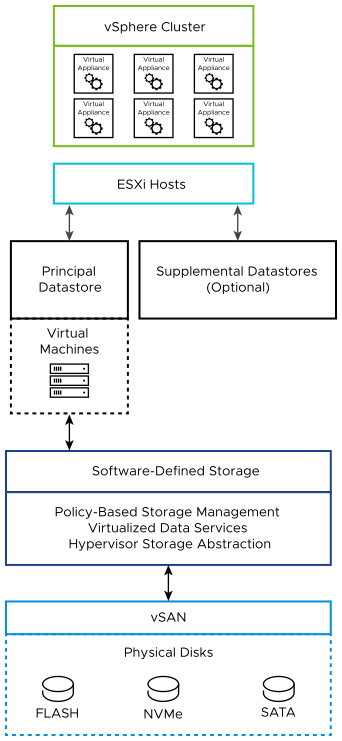

VMware Cloud Foundation uses VMware vSAN as the principal storage type for the management domain, and vSAN is recommended as principal storage in VI workload domains. You must determine the size of the compute and storage resources for the vSAN storage, and the configuration of the network carrying vSAN traffic. For multiple availability zones, you extend the resource size and determine the configuration of the vSAN witness host.

Logical Design for vSAN for VMware Cloud Foundation

vSAN is a cost-efficient storage technology that provides a simple storage management user experience, and permits a fully automated initial deployment of VMware Cloud Foundation. It also provides support for future storage expansion and implementation of vSAN stretched clusters in a workload domain.

| Workload Domain Type | VMware Cloud Foundation Instances with a Single Availability Zone | VMware Cloud Foundation Instances with Multiple Availability Zones |
|---|---|---|
| Management domain (default cluster) | | |
| Management domain (additional clusters) | | |
| VI workload domain (all clusters) | | |

Hardware Configuration for vSAN for VMware Cloud Foundation

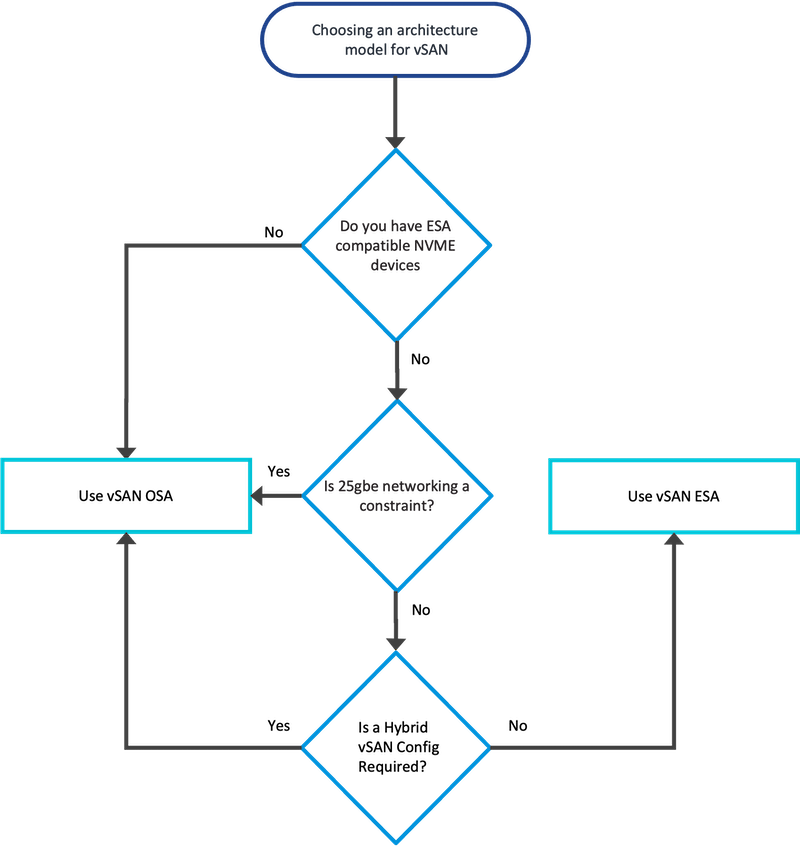

Determine the vSAN architecture and the storage controllers for performance and stability according to the requirements of the management components of VMware Cloud Foundation.

vSAN Physical Requirements and Dependencies

vSAN has the following requirements and options:

- vSAN Express Storage Architecture (ESA)
  - All storage devices claimed by vSAN contribute to capacity and performance. Each host's storage devices claimed by vSAN form a storage pool. The storage pool represents the amount of caching and capacity provided by the host to the vSAN datastore.
  - ESA requires compatible NVMe devices.
  - ESXi hosts must be on the Hardware Compatibility Guide for vSAN ESA ReadyNode.
- vSAN Original Storage Architecture (OSA) as hybrid storage or all-flash storage.
  - A vSAN hybrid storage configuration requires both magnetic devices and flash caching devices. The cache tier must be at least 10% of the size of the capacity tier.
  - An all-flash vSAN configuration requires flash devices for both the caching and capacity tiers.
  - VMware vSAN ReadyNodes or hardware from the VMware Compatibility Guide to build your own.

For best practices, capacity considerations, and general recommendations about designing and sizing a vSAN cluster, see the VMware vSAN Design and Sizing Guide.
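
The 10% cache-to-capacity rule for hybrid vSAN OSA configurations can be checked with simple integer arithmetic. The following is a minimal sketch; the function name and parameters are hypothetical and are not part of any VMware tool.

```python
def cache_tier_is_sufficient(cache_gb: int, capacity_gb: int, min_percent: int = 10) -> bool:
    """Return True if the cache tier is at least min_percent of the capacity tier.

    Sizes are whole gigabytes. Integer math avoids floating-point rounding.
    The 10% default is the hybrid OSA rule stated in this section.
    """
    if cache_gb < 0 or capacity_gb <= 0:
        raise ValueError("cache_gb must be >= 0 and capacity_gb must be > 0")
    return cache_gb * 100 >= capacity_gb * min_percent


# Example: a host with 8,000 GB of magnetic capacity needs at least 800 GB of flash cache.
print(cache_tier_is_sufficient(800, 8000))
print(cache_tier_is_sufficient(600, 8000))
```

The first call satisfies the rule and the second does not, because 600 GB is only 7.5% of 8,000 GB.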

Network Design for vSAN for VMware Cloud Foundation

In the network design for vSAN in VMware Cloud Foundation, you determine the network configuration for vSAN traffic.

Consider the overall traffic bandwidth and decide how to isolate storage traffic.

Consider how much vSAN data traffic is running between ESXi hosts.

The amount of storage traffic depends on the number of VMs that are running in the cluster, and on how write-intensive the I/O process is for the applications running in the VMs.

For information on the physical network setup for vSAN traffic, and other system traffic, see Physical Network Infrastructure Design for VMware Cloud Foundation.

For information on the virtual network setup for vSAN traffic, and other system traffic, see Logical vSphere Networking Design for VMware Cloud Foundation.

The vSAN network design includes these components.

| Design Component | Description |
|---|---|
| Physical NIC speed | For best and predictable performance (IOPS) of the environment, this design uses a minimum of a 10-GbE connection, with 25-GbE recommended, for use with vSAN OSA all-flash configurations. For vSAN ESA, a 25-GbE connection is recommended. |
| VMkernel network adapters for vSAN | The vSAN VMkernel network adapter on each ESXi host is created when you enable vSAN on the cluster. Connect the vSAN VMkernel network adapters on all ESXi hosts in a cluster to a dedicated distributed port group, including ESXi hosts that are not contributing storage resources to the cluster. |
| VLAN | All storage traffic should be isolated on its own VLAN. When a design uses multiple vSAN clusters, each cluster should use a dedicated VLAN or segment for its traffic. This approach increases security, prevents interference between clusters, and helps with troubleshooting cluster configuration. If a cluster spans multiple racks, the vSAN VLAN must be allocated per rack to enable Layer 3 multi-rack deployments. |
| Jumbo frames | vSAN traffic can be handled by using jumbo frames. Use jumbo frames for vSAN traffic only if the physical environment is already configured to support them, they are part of the existing design, or if the underlying configuration does not create a significant amount of added complexity to the design. |
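
The physical NIC speed guidance in this table can be expressed as a small classification helper. This is an illustrative sketch only: the function name and the returned labels are assumptions, and the thresholds are taken solely from the table (10-GbE minimum and 25-GbE recommended for OSA all-flash, 25-GbE recommended for ESA).

```python
def classify_vsan_nic_speed(architecture: str, nic_gbe: int) -> str:
    """Classify a physical NIC speed (in GbE) against the guidance in this design.

    Returns one of: "recommended", "meets-minimum",
    "below-recommended", "below-minimum".
    """
    arch = architecture.upper()
    if arch not in ("OSA", "ESA"):
        raise ValueError("architecture must be 'OSA' or 'ESA'")
    if nic_gbe >= 25:
        return "recommended"
    if arch == "ESA":
        # This design states only a recommendation (25 GbE) for ESA.
        return "below-recommended"
    # OSA all-flash: 10 GbE is the stated minimum.
    return "meets-minimum" if nic_gbe >= 10 else "below-minimum"


print(classify_vsan_nic_speed("OSA", 10))
print(classify_vsan_nic_speed("ESA", 10))
```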

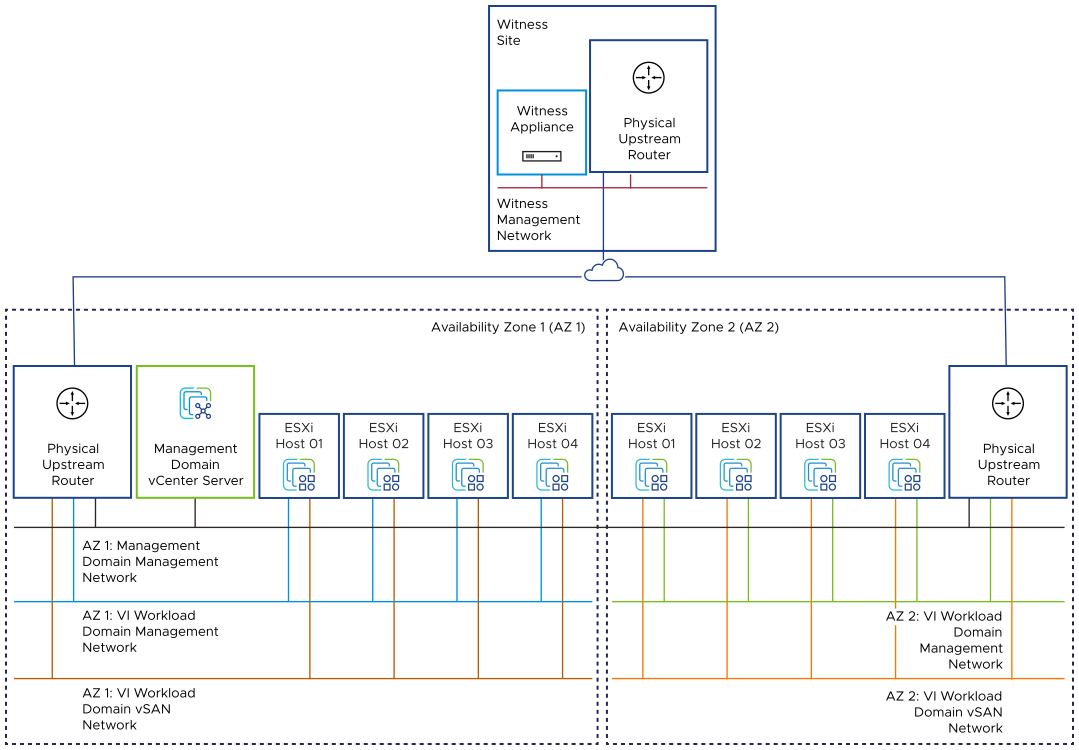

vSAN Witness Design for VMware Cloud Foundation

The vSAN witness appliance is a specialized ESXi installation that provides quorum and tiebreaker services for stretched clusters in VMware Cloud Foundation.

vSAN Witness Deployment Specification

You must deploy a witness ESXi host when using vSAN in a stretched cluster configuration. This appliance must be deployed in a third location that is not local to the ESXi hosts on either side of the stretched cluster.

| Appliance Size | Number of Supported Virtual Machines | Maximum Number of Supported Witness Components |
|---|---|---|
| Tiny | 10 | 750 |
| Medium | 500 | 21,833 |
| Large | More than 500 | 45,000 |
| Extra Large | More than 500 | 64,000 |
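
Choosing an appliance size from this table amounts to finding the smallest row that covers both the virtual machine count and the witness component count. The sketch below assumes that "More than 500" means no stated upper bound on virtual machines; the names are hypothetical.

```python
from typing import NamedTuple, Optional


class WitnessSize(NamedTuple):
    name: str
    max_vms: Optional[int]  # None means "More than 500" (no stated upper bound)
    max_components: int


# Values copied from the sizing table, ordered from smallest to largest.
WITNESS_SIZES = [
    WitnessSize("Tiny", 10, 750),
    WitnessSize("Medium", 500, 21_833),
    WitnessSize("Large", None, 45_000),
    WitnessSize("Extra Large", None, 64_000),
]


def pick_witness_size(vm_count: int, witness_components: int) -> str:
    """Return the smallest appliance size that covers both limits."""
    for size in WITNESS_SIZES:
        vms_ok = size.max_vms is None or vm_count <= size.max_vms
        if vms_ok and witness_components <= size.max_components:
            return size.name
    raise ValueError("requirements exceed the largest witness appliance in the table")


print(pick_witness_size(vm_count=300, witness_components=12_000))
```

A cluster with 300 virtual machines and 12,000 witness components does not fit the Tiny row, so the helper selects Medium.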

vSAN Witness Network Design

When using two availability zones, connect the vSAN witness appliance to the workload domain vCenter Server so that you can perform the initial setup of the stretched cluster and have workloads fail over between the zones.

VMware Cloud Foundation uses vSAN witness traffic separation where you can use a VMkernel adapter for vSAN witness traffic that is different from the adapter for vSAN data traffic. In this design, you configure vSAN witness traffic in the following way:

- On each ESXi host in both availability zones, place the vSAN witness traffic on the management VMkernel adapter.
- On the vSAN witness appliance, use the same VMkernel adapter for both management and witness traffic.

For information about vSAN witness traffic separation, see the vSAN Stretched Cluster Guide on VMware Cloud Platform Tech Zone.

- Management network

  Routed to the management networks in both availability zones. Connect the first VMkernel adapter of the vSAN witness appliance to this network. The second VMkernel adapter on the vSAN witness appliance is not used.

  Place the following traffic on this network:

  - Management traffic
  - vSAN traffic

vSAN Design Requirements and Recommendations for VMware Cloud Foundation

Consider the requirements for using vSAN storage for standard and stretched clusters in VMware Cloud Foundation, such as required capacity, number of hosts, and storage policies, along with similar best practices for operating vSAN optimally.

For related vSphere cluster requirements and recommendations, see vSphere Cluster Design Requirements and Recommendations for VMware Cloud Foundation.

vSAN Design Requirements

You must meet the following design requirements for standard and stretched clusters in your vSAN design for VMware Cloud Foundation.

| Requirement ID | Design Requirement | Justification | Implication |
|---|---|---|---|
| VCF-VSAN-REQD-CFG-001 | Provide sufficient raw capacity to meet the initial needs of the workload domain cluster. | Ensures that sufficient resources are present to create the workload domain cluster. | None. |
| VCF-VSAN-REQD-CFG-002 | Provide at least the required minimum number of hosts according to the cluster type. | Satisfies the requirements for storage availability. | None. |

| Requirement ID | Design Requirement | Justification | Implication |
|---|---|---|---|
| VCF-VSAN-REQD-CFG-003 | Verify the hardware components used in your vSAN deployment are on the vSAN Hardware Compatibility List. | Prevents hardware-related failures during workload deployment. | Limits the number of compatible hardware configurations that can be used. |

| Requirement ID | Design Requirement | Justification | Implication |
|---|---|---|---|
| VCF-VSAN-MAX-REQD-CFG-001 | Provide at least four nodes for the initial cluster. | A vSAN Max cluster must contain at least four hosts. | None. |

| Requirement ID | Design Requirement | Justification | Implication |
|---|---|---|---|
| VCF-VSAN-REQD-CFG-004 | Add the following setting to the default vSAN storage policy: Site disaster tolerance = Site mirroring - stretched cluster | Provides the necessary protection for virtual machines in each availability zone, with the ability to recover from an availability zone outage. | You might need additional policies if third-party virtual machines are to be hosted in these clusters because their performance or availability requirements might differ from what the default VMware vSAN policy supports. |
| VCF-VSAN-REQD-CFG-005 | Configure two fault domains, one for each availability zone. Assign each host to its respective availability zone fault domain. | Fault domains are mapped to availability zones to provide logical host separation and ensure a copy of vSAN data is always available even when an availability zone goes offline. | You must provide additional raw storage when the site mirroring - stretched cluster option is selected, and fault domains are enabled. |
| VCF-VSAN-REQD-CFG-006 | Configure an individual vSAN storage policy for each stretched cluster. | The vSAN storage policy of a stretched cluster cannot be shared with other clusters. | You must configure additional vSAN storage policies. |
| VCF-VSAN-WTN-REQD-CFG-001 | Deploy a vSAN witness appliance in a location that is not local to the ESXi hosts in any of the availability zones. | Ensures availability of vSAN witness components in the event of a failure of one of the availability zones. | You must provide a third physically separate location that runs a vSphere environment. You might use a VMware Cloud Foundation instance in a separate physical location. |
| VCF-VSAN-WTN-REQD-CFG-002 | Deploy a witness appliance that corresponds to the required cluster capacity. | Ensures the witness appliance is sized to support the projected workload storage consumption. | The vSphere environment at the witness location must satisfy the resource requirements of the witness appliance. |
| VCF-VSAN-WTN-REQD-CFG-003 | Connect the first VMkernel adapter of the vSAN witness appliance to the management network in the witness site. | Enables connecting the witness appliance to the workload domain vCenter Server. | The management networks in both availability zones must be routed to the management network in the witness site. |
| VCF-VSAN-WTN-REQD-CFG-004 | Allocate a statically assigned IP address and hostname to the management adapter of the vSAN witness appliance. | Simplifies maintenance and tracking, and implements a DNS configuration. | Requires precise IP address management. |
| VCF-VSAN-WTN-REQD-CFG-005 | Configure forward and reverse DNS records for the vSAN witness appliance for the VMware Cloud Foundation instance. | Enables connecting the vSAN witness appliance to the workload domain vCenter Server by FQDN instead of IP address. | You must provide DNS records for the vSAN witness appliance. |
| VCF-VSAN-WTN-REQD-CFG-006 | Configure time synchronization by using an internal NTP time source for the vSAN witness appliance. | Prevents any failures in the stretched cluster configuration that are caused by time mismatch between the vSAN witness appliance and the ESXi hosts in both availability zones and workload domain vCenter Server. | |
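
Requirement VCF-VSAN-WTN-REQD-CFG-005 calls for forward and reverse DNS records for the witness appliance. One way to verify them before deployment is sketched below with Python's standard `socket` module. The function name is hypothetical, and the example host name and address are placeholders. The resolver functions are passed as parameters so that the check can be exercised without a live DNS server.

```python
import socket


def dns_records_match(fqdn, ip, forward=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Return True if fqdn resolves to ip and ip resolves back to fqdn.

    forward(fqdn) must return an IPv4 address string.
    reverse(ip) must return a (hostname, aliases, addresses) tuple.
    Lookup failures (OSError and subclasses) count as a mismatch.
    """
    try:
        forward_ok = forward(fqdn) == ip
        reverse_ok = reverse(ip)[0].lower() == fqdn.lower()
    except OSError:
        return False
    return forward_ok and reverse_ok


# Usage against real DNS (placeholder values, replace with your own):
# dns_records_match("witness.example.com", "192.0.2.10")
```

The comparison is case-insensitive for the host name. A resolver that returns a trailing dot or a short name in the reverse lookup would need extra normalization.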

| Requirement ID | Design Requirement | Justification | Implication |
|---|---|---|---|
| VCF-VSAN-L3MR-REQD-CFG-001 | Configure vSAN fault domains and place the nodes of each rack in their fault domain. | Allows workload VMs to tolerate a rack failure by distributing copies of the data and witness components on nodes in separate racks. | You must make the fault domain configuration manually in vCenter Server after deployment and after cluster expansion. |

| Requirement ID | Design Requirement | Justification | Implication |
|---|---|---|---|
| VCF-VSAN-ESA-L3MR-REQD-CFG-001 | Use a minimum of four racks for the cluster. | Provides support for reprotecting vSAN objects if a single-rack failure occurs. | Requires a minimum of four hosts in a cluster with adaptive RAID-5 erasure coding. |
| VCF-VSAN-ESA-L3MR-REQD-CFG-002 | Deactivate vSAN ESA auto policy management. | | To align with the number of vSAN fault domains, you might have to create a default storage policy manually. |
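
The multi-rack requirements above (one fault domain per rack, and at least four racks for vSAN ESA) can be planned ahead of the manual configuration in vCenter Server. The following sketch only groups host names by rack and checks the rack count. It does not call any vSphere API, and the function, host, and rack names are hypothetical.

```python
from collections import defaultdict


def rack_fault_domains(host_to_rack: dict[str, str], min_racks: int = 1) -> dict[str, list[str]]:
    """Group hosts into one fault domain per rack.

    Raises ValueError if fewer than min_racks racks are present.
    Pass min_racks=4 for a vSAN ESA multi-rack cluster.
    """
    domains: dict[str, list[str]] = defaultdict(list)
    for host in sorted(host_to_rack):
        domains[host_to_rack[host]].append(host)
    if len(domains) < min_racks:
        raise ValueError(f"found {len(domains)} racks, need at least {min_racks}")
    return dict(domains)


hosts = {
    "esx01": "rack-a", "esx02": "rack-b",
    "esx03": "rack-c", "esx04": "rack-d",
    "esx05": "rack-a",
}
print(rack_fault_domains(hosts, min_racks=4))
```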

vSAN Design Recommendations

In your vSAN design for VMware Cloud Foundation, you can apply certain best practices for standard and stretched clusters.

| Recommendation ID | Design Recommendation | Justification | Implication |
|---|---|---|---|
| VCF-VSAN-RCMD-CFG-001 | Provide sufficient raw capacity to meet the planned needs of the workload domain cluster. | Ensures that sufficient resources are present in the workload domain cluster, preventing the need to expand the vSAN datastore in the future. | None. |
| VCF-VSAN-RCMD-CFG-002 | Ensure that at least 30% of free space is always available on the vSAN datastore. | This reserved capacity is set aside for host maintenance mode data evacuation, component rebuilds, rebalancing operations, and VM snapshots. | Increases the amount of available storage needed. |
| VCF-VSAN-RCMD-CFG-003 | Use the default VMware vSAN storage policy. | | You might need additional policies for third-party virtual machines hosted in these clusters because their performance or availability requirements might differ from what the default VMware vSAN policy supports. |
| VCF-VSAN-RCMD-CFG-004 | Leave the default virtual machine swap file as a sparse object on vSAN. | Sparse virtual swap files consume capacity on vSAN only as they are accessed. As a result, you can reduce the consumption on the vSAN datastore if virtual machines do not experience memory over-commitment, which would require the use of the virtual swap file. | None. |
| VCF-VSAN-RCMD-CFG-005 | Use the existing vSphere Distributed Switch instance for the workload domain cluster. | | All traffic types can be shared over common uplinks. |
| VCF-VSAN-RCMD-CFG-006 | Configure jumbo frames on the VLAN for vSAN traffic. | | Every device in the network must support jumbo frames. |
| VCF-VSAN-RCMD-CFG-007 | Use a dedicated VLAN for vSAN traffic for each vSAN cluster. | | Increases the number of VLANs required. |
| VCF-VSAN-RCMD-CFG-008 | Configure vSAN in an all-flash configuration in the default workload domain cluster. | Meets the performance needs of the default workload domain cluster. | All vSAN disks must be flash disks, which might cost more than magnetic disks. |
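
The 30% free-space recommendation (VCF-VSAN-RCMD-CFG-002) translates into a simple sizing calculation. The sketch below works at the datastore level only and does not account for storage policy overhead such as mirroring or erasure coding; the function names are hypothetical.

```python
def max_consumable_gb(datastore_capacity_gb: int, free_percent: int = 30) -> int:
    """Largest amount of capacity (GB) that can be consumed while keeping free_percent free."""
    if not 0 <= free_percent < 100:
        raise ValueError("free_percent must be in the range 0-99")
    return datastore_capacity_gb * (100 - free_percent) // 100


def required_capacity_gb(planned_usage_gb: int, free_percent: int = 30) -> int:
    """Smallest datastore capacity (GB) that leaves free_percent free at the planned usage."""
    if not 0 <= free_percent < 100:
        raise ValueError("free_percent must be in the range 0-99")
    # Ceiling division using integers only.
    return -(-planned_usage_gb * 100 // (100 - free_percent))


print(max_consumable_gb(10_000))     # 7000
print(required_capacity_gb(7_000))   # 10000
```

With the default 30% reserve, a 10,000 GB datastore should hold no more than 7,000 GB of data, and planning for 7,000 GB of data requires at least 10,000 GB of datastore capacity.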

| Recommendation ID | Design Recommendation | Justification | Implication |
|---|---|---|---|
| VCF-VSAN-RCMD-CFG-009 | Ensure that the storage I/O controller has a minimum queue depth of 256 set. | Storage controllers with lower queue depths can cause performance and stability problems when running vSAN. vSAN ReadyNode servers are configured with the correct queue depths for vSAN. | Limits the number of compatible I/O controllers that can be used for storage. |
| VCF-VSAN-RCMD-CFG-010 | Do not use the storage I/O controllers that are running vSAN disk groups for another purpose. | Running non-vSAN disks, for example, VMFS, on a storage I/O controller that is running a vSAN disk group can impact vSAN performance. | If non-vSAN disks are required in ESXi hosts, you must have an additional storage I/O controller in the host. |
| VCF-VSAN-RCMD-CFG-011 | Configure vSAN with a minimum of two disk groups per ESXi host. | Reduces the size of the fault domain and spreads the I/O load over more disks for better performance. | Using multiple disk groups requires more disks in each ESXi host. |
| VCF-VSAN-RCMD-CFG-012 | For the cache tier in each disk group, use a flash-based drive that is at least 600 GB in size. | Provides enough cache for both hybrid and all-flash vSAN configurations to buffer I/O and ensure disk group performance. Additional space in the cache tier does not increase performance. | Using larger flash disks can increase the initial host cost. |
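
The numeric recommendations in this table (queue depth of at least 256, at least two disk groups per host, and a cache device of at least 600 GB per disk group) lend themselves to an automated pre-check of a host inventory. The sketch below uses a hypothetical data structure and models each disk group by its single cache device; it is not a VMware tool.

```python
from dataclasses import dataclass


@dataclass
class OsaHostStorage:
    controller_queue_depth: int
    cache_device_sizes_gb: list[int]  # one entry per disk group


def osa_recommendation_gaps(host: OsaHostStorage) -> list[str]:
    """Return the IDs of the recommendations in this table that the host does not meet."""
    gaps = []
    if host.controller_queue_depth < 256:
        gaps.append("VCF-VSAN-RCMD-CFG-009")  # queue depth below 256
    if len(host.cache_device_sizes_gb) < 2:
        gaps.append("VCF-VSAN-RCMD-CFG-011")  # fewer than two disk groups
    if any(size < 600 for size in host.cache_device_sizes_gb):
        gaps.append("VCF-VSAN-RCMD-CFG-012")  # cache device smaller than 600 GB
    return gaps


host = OsaHostStorage(controller_queue_depth=128, cache_device_sizes_gb=[800, 400])
print(osa_recommendation_gaps(host))
```

For the example host, the helper reports VCF-VSAN-RCMD-CFG-009 and VCF-VSAN-RCMD-CFG-012, because the queue depth is 128 and one cache device is only 400 GB.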

| Recommendation ID | Design Recommendation | Justification | Implication |
|---|---|---|---|
| VCF-VSAN-RCMD-CFG-013 | Activate auto-policy management. | Configures optimized storage policies based on the cluster type and the number of hosts in the cluster inventory. On changes to the number of hosts in the cluster or to host rebuild reserve, vSAN prompts you to make a suggested adjustment to the optimized storage policy. | None. |
| VCF-VSAN-RCMD-CFG-014 | Activate vSAN ESA compression. | Improves performance. | PostgreSQL databases and other applications might use their own compression capabilities. In these cases, using a storage policy with the compression capability turned off will save CPU cycles. You can disable vSAN ESA compression for such workloads through the use of the Storage Policy Based Management (SPBM) framework. |
| VCF-VSAN-RCMD-CFG-015 | Use NICs with a minimum 25-GbE capacity. | 10-GbE NICs will limit the scale and performance of a vSAN ESA cluster because, usually, performance requirements increase over the lifespan of the cluster. | Requires 25-GbE or faster network fabric. |

| Recommendation ID | Design Recommendation | Design Justification | Design Implication |
|---|---|---|---|
| VCF-VSAN-MAX-RCMD-001 | Limit the size of the vSAN Max cluster to 24 hosts. | The total number of hosts for the vSAN Max cluster and vSAN compute clusters mounting the datastore must not exceed 128 hosts. A vSAN Max cluster with 24 nodes provides support for up to 104 vSAN compute hosts which is a good compute-to-storage ratio. | Limits the maximum number of hosts. |
| VCF-VSAN-MAX-RCMD-002 | If the vSAN Max cluster consists of only four hosts, do not enable the Host Rebuild Reserve. | If the auto-policy management feature is turned on, prevents vSAN Max from using adaptive RAID-5. | vSAN does not reserve any of this capacity and presents it as free capacity for vSAN to self-repair if a single host failure occurs. |
| VCF-VSAN-MAX-RCMD-003 | Include TPM (Trusted Platform Module) in the hardware configuration of the hosts. | Ensures that the keys issued to the hosts in a vSAN Max cluster using Data-at-Rest Encryption are cryptographically stored on a TPM device. | Limits the choice of hardware configurations. |
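
The host-count arithmetic behind VCF-VSAN-MAX-RCMD-001 can be written out as follows. The constants come from this section (a 128-host combined limit and a four-host minimum for a vSAN Max cluster); the function name is hypothetical.

```python
MAX_TOTAL_HOSTS = 128      # vSAN Max hosts plus compute hosts mounting the datastore
MIN_VSAN_MAX_HOSTS = 4     # minimum size of a vSAN Max cluster


def max_compute_hosts(vsan_max_hosts: int) -> int:
    """Return how many vSAN compute hosts can mount a vSAN Max datastore of the given size."""
    if vsan_max_hosts < MIN_VSAN_MAX_HOSTS:
        raise ValueError("a vSAN Max cluster must contain at least four hosts")
    if vsan_max_hosts > MAX_TOTAL_HOSTS:
        raise ValueError("the combined host limit is 128")
    return MAX_TOTAL_HOSTS - vsan_max_hosts


print(max_compute_hosts(24))  # 104
```

A 24-host vSAN Max cluster leaves room for 104 compute hosts, which matches the ratio cited in the justification.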

| Recommendation ID | Design Recommendation | Justification | Implication |
|---|---|---|---|
| VCF-VSAN-WTN-RCMD-CFG-001 | Configure the vSAN witness appliance to use the first VMkernel adapter, that is, the management interface, for vSAN traffic. | Removes the requirement to have static routes on the witness appliance as vSAN traffic from the witness is routed over the management network. | The management networks in both availability zones must be routed to the management network in the witness site. |
| VCF-VSAN-WTN-RCMD-CFG-002 | Place witness traffic on the management VMkernel adapter of all the ESXi hosts in the workload domain. | Separates the witness traffic from the vSAN data traffic. Witness traffic separation provides the following benefits: | The management networks in both availability zones must be routed to the management network in the witness site. |