As a DevOps engineer, use the Automation Service Broker catalog to provision a GPU-enabled Tanzu Kubernetes Grid cluster with the NVIDIA GPU Operator configured and licensed, and ready to run GPU-enabled container workloads.

Procedure

- Log in to VMware Aria Automation at https://<aria_automation_cluster_fqdn>/csp/gateway/portal.

- On the main navigation bar, click Services.

- On the My Services page, click Service Broker.

- On the Consume tab, on the navigation bar, click Catalog.

- In the AI Kubernetes Cluster card, click Request.

- Configure the following settings and click Submit.

| Setting | Value |
|---|---|
| Version | Version of the catalog item |
| Project | Project where you want the cluster to be deployed |
| Deployment Name | Name for the resulting deployment |
| Control plane > Node count | Number of control plane nodes |
| Control plane > VM Class | VM class, based on CPU and memory requirements, for the control plane nodes |
| Workers > Node count | Number of worker nodes |
| Workers > VM Class | GPU-enabled VM class for the worker nodes |
| NVIDIA AI enterprise API key | The API key for access to the NVIDIA NGC registry. The API key is required to download the Helm charts of NVIDIA GPU Operator. |

- Monitor the deployment process.

- On the Consume tab, click Deployments > Deployments.

- Click the name of the deployment and then click the History tab.

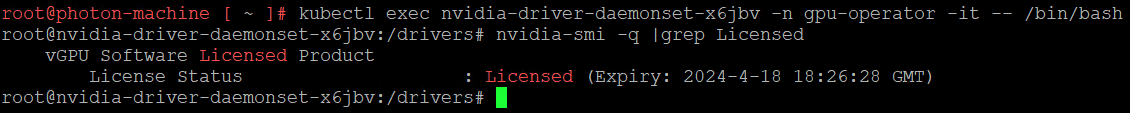

- After the deployment is completed, review the details to access the AI-ready TKG cluster by using kubectl.

Figure 1. Example of Access Details for an AI-Ready TKG Cluster

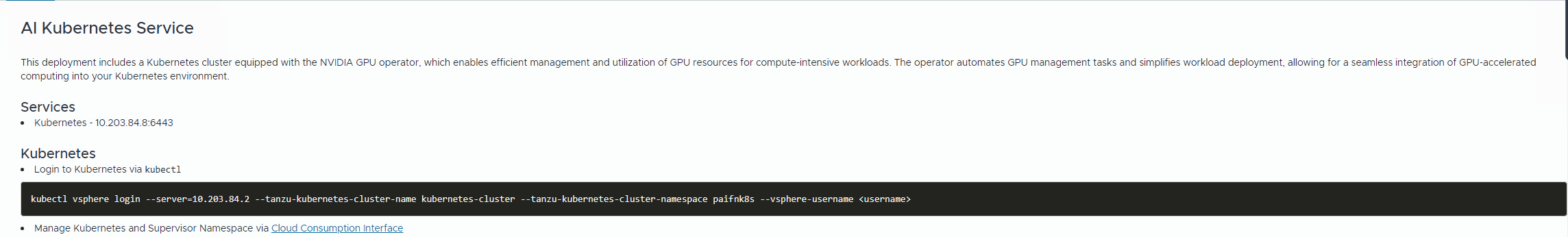
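Once kubectl access works, a quick check is to compare the number of Ready nodes with the control plane and worker node counts that you requested. The following is a minimal sketch, not part of the product: the function name `count_ready_nodes` is hypothetical, and it assumes the default column layout of `kubectl get nodes --no-headers` (NAME, STATUS, ...).

```shell
# Hypothetical helper (not part of kubectl or Aria Automation).
# Reads `kubectl get nodes --no-headers` output on stdin and prints how
# many nodes have a STATUS column of exactly "Ready".
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example use, assuming kubectl targets the new cluster and that
# 1 control plane node plus 2 worker nodes were requested:
#   [ "$(kubectl get nodes --no-headers | count_ready_nodes)" -eq 3 ] && echo "all nodes ready"
```

Nodes that report `NotReady` or `Ready,SchedulingDisabled` are deliberately not counted, so the comparison only succeeds when every requested node can accept workloads.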

- Verify the NVIDIA GPU Operator deployment by running the following kubectl command on the Supervisor.

kubectl get pods -n gpu-operator

Figure 2. Example of Successful NVIDIA GPU Operator deployment

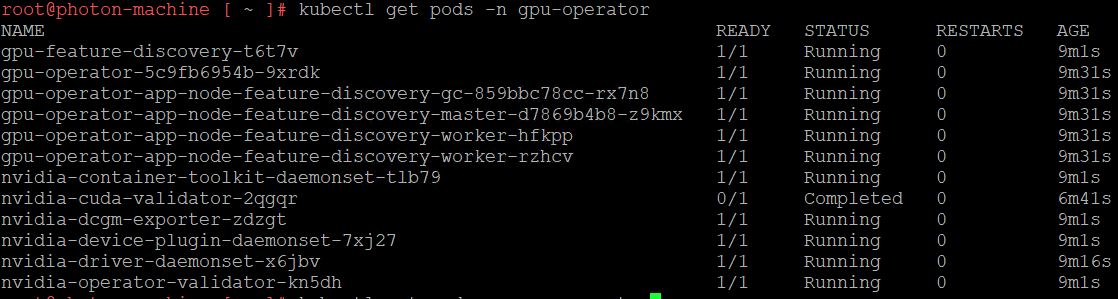
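The pod check can also be scripted instead of read by eye. The following is a minimal sketch under stated assumptions: the function name `not_ready_pods` is hypothetical, and it relies on the default column layout of `kubectl get pods --no-headers` (NAME, READY, STATUS, ...). It prints the name of every pod whose status is neither Running nor Completed.

```shell
# Hypothetical helper (not part of kubectl or the NVIDIA GPU Operator).
# Reads `kubectl get pods --no-headers` output on stdin and prints the
# names of pods whose STATUS column is neither Running nor Completed.
not_ready_pods() {
  awk 'NF >= 3 && $3 != "Running" && $3 != "Completed" { print $1 }'
}

# Example use, assuming kubectl already targets the cluster that runs
# the GPU Operator:
#   kubectl get pods -n gpu-operator --no-headers | not_ready_pods
```

Empty output suggests that all pods in the namespace have started or finished. Any name that is printed is a pod to inspect further, for example with `kubectl describe pod`.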

- Verify the NVIDIA license by running the following command.

Open a Bash shell in the nvidia-driver-daemonset pod, where you can run the nvidia-smi command with the -q argument to check the license status. The pod name suffix (x6jbv in this example) differs in each cluster.

kubectl exec nvidia-driver-daemonset-x6jbv -n gpu-operator -it -- /bin/bash

Figure 3. Example of a Licensed AI Kubernetes Cluster
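The license check can also run non-interactively. The following is a minimal sketch, not an official procedure: the function names `is_licensed` and `driver_pod` are hypothetical, and the script assumes that the `nvidia-smi -q` output contains a line of the form `License Status : Licensed ...`, which may vary between driver versions.

```shell
# Hypothetical helper. Reads `nvidia-smi -q` output on stdin and succeeds
# (exit status 0) only if a "License Status" line reports "Licensed".
# The exact wording of that line is an assumption.
is_licensed() {
  grep -E 'License Status[[:space:]]*:[[:space:]]*Licensed' >/dev/null
}

# Hypothetical helper. Prints the first pod in the gpu-operator namespace
# whose name contains nvidia-driver-daemonset, in the form pod/<name>.
driver_pod() {
  kubectl get pods -n gpu-operator -o name | grep nvidia-driver-daemonset | head -n 1
}

# Example use, assuming kubectl already targets the right cluster:
#   kubectl exec "$(driver_pod)" -n gpu-operator -- nvidia-smi -q | is_licensed && echo "licensed"
```

Looking up the pod by name prefix avoids hard-coding the generated suffix. On a cluster with several GPU worker nodes, this sketch checks only the first driver pod that it finds.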